Finding CRO Opportunities using SEO Crawlers

Humans are still needed, but, boy, can crawlers help

The longer I do CRO, the more I realize the importance of different types of research. For many years, I was heavily into quantitative data (and still am, to some degree), focused on achieving clean, reliable data collection in near-perfect Google Analytics setups. That will lead to the best CRO programs, right? It sure does start you off in a great spot, but it’s only a start.

In this post, I’m going to share an internal site search workflow I’ve developed over the years, rooted in my analytics and SEO days, that will help speed up your research into the areas your CRO program should focus on.

Meet Screaming Frog.

Search Results (Internal Site Search) Deep Dive

It would be easy for me to show you intent mappings (for both searcher intent and SERP intent) that reveal where relevancy is missing (for both keywords and page types). Perhaps that will come later, but I think it’s been done quite a bit.

Instead, I want to talk about something even closer to conversion: your internal site search results.

Site search is one of the bigger signals that your users really want something.

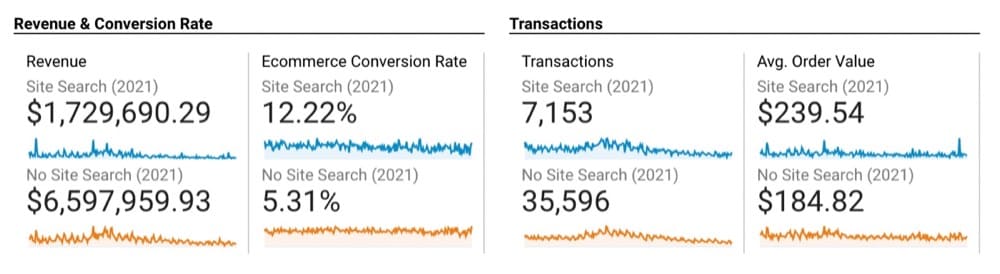

On this pool supplies site, we can see the difference between users who search and those who don’t:

Seeing 130% more conversions from users who search isn’t overly surprising, but it does show the power of providing the best search experience possible.
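“130% more” is a relative lift: searchers land at 2.3 times the non-searcher level. A minimal sketch of that arithmetic, using hypothetical conversion rates rather than the pool site’s actual numbers:

```python
def relative_lift(segment_value: float, baseline_value: float) -> float:
    """Relative difference of a segment's metric over a baseline.

    0.0 means no difference; 1.3 means "130% more".
    """
    if baseline_value == 0:
        raise ValueError("baseline_value must be non-zero")
    return (segment_value - baseline_value) / baseline_value


# Hypothetical conversion rates: 2.3% for users who search vs. 1.0% for users who don't
lift = relative_lift(0.023, 0.010)
print(f"Searchers convert {lift:.0%} more")  # Searchers convert 130% more
```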

Now let’s dig into the search terms to make sure the experience is optimized.

My first recommendation is to download all your search terms (the last 12 months usually make the most sense unless you are highly seasonal).

Next, run them through a clustering tool to understand how people search at a higher level (this will give you better direction than combing through thousands of keywords).

(Before clustering, make sure you standardize and clean your keywords, fixing misspellings and inconsistent capitalization.)

In Google Sheets, use =TRIM(CLEAN(LOWER(A2))) to clean them up, replacing A2 with the cell that holds each keyword. This lowercases the text, strips non-printable characters, and removes extra spaces; misspellings still need a manual pass.
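If you’d rather clean the export outside Google Sheets, here is a rough Python equivalent of that formula, plus a de-duplication step so variants like “Pool Filter ” and “pool filter” collapse into one term. It’s a sketch under two assumptions: CLEAN amounts to removing ASCII control characters (codes 0 to 31), and TRIM collapses repeated spaces and strips the ends. Like the formula, it won’t fix misspellings.

```python
import re


def clean_keyword(raw: str) -> str:
    """Approximate =TRIM(CLEAN(LOWER(A2))) for a single search term."""
    text = raw.lower()                       # LOWER: normalize case
    text = re.sub(r"[\x00-\x1f]", "", text)  # CLEAN: drop ASCII control chars (tabs, newlines, ...)
    text = re.sub(r" {2,}", " ", text)       # TRIM: collapse runs of spaces...
    return text.strip(" ")                   # ...and remove leading/trailing spaces


def clean_keywords(raw_terms: list[str]) -> list[str]:
    """Clean every term, drop empties, and de-duplicate while keeping first-seen order."""
    cleaned = (clean_keyword(term) for term in raw_terms)
    return list(dict.fromkeys(term for term in cleaned if term))


print(clean_keywords(["  Pool   FILTER\t", "pool filter", "SP1500 ", "\n"]))
# ['pool filter', 'sp1500']
```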

Now, copy and paste them into Keyword Grouper Pro from MarketBold (this is free).

To form groups, estimate how many you need based on the total number of keywords you have. I’m using 1,000 keywords to start, so I’m guessing that about 10 keywords per group (roughly 100 groups) will give me the right number of groups here. You never really know in advance how many groups you want, but you should have enough to represent how your users search, relative to how many keywords you upload (you’ll get better at this with use).
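The group-count estimate is simple division. For a quick sanity check before uploading, the sketch below also does a very naive grouping: each keyword is filed under whichever of its words appears in the most keywords overall. This is a hypothetical stand-in for illustration only, not how Keyword Grouper Pro clusters.

```python
import math
from collections import Counter, defaultdict


def target_group_count(num_keywords: int, keywords_per_group: int = 10) -> int:
    """Rough starting point for how many groups to ask for."""
    return math.ceil(num_keywords / keywords_per_group)


def group_by_head_term(keywords: list[str]) -> dict[str, list[str]]:
    """Naive stand-in for a clustering tool (hypothetical helper, not Keyword Grouper Pro).

    Each keyword goes under whichever of its words appears in the most
    keywords overall; ties go to the alphabetically first word.
    """
    # Count how many keywords each word appears in (set() so repeats within one keyword count once)
    word_counts = Counter(word for kw in keywords for word in set(kw.split()))
    groups: dict[str, list[str]] = defaultdict(list)
    for kw in keywords:
        words = kw.split()
        if not words:
            continue
        head = min(words, key=lambda word: (-word_counts[word], word))
        groups[head].append(kw)
    return dict(groups)


print(target_group_count(1000))  # 100
print(group_by_head_term(["pool filter", "sand filter", "pool pump", "chlorine tablets"]))
# {'filter': ['pool filter', 'sand filter'], 'pool': ['pool pump'], 'chlorine': ['chlorine tablets']}
```

A real clustering tool will weigh phrases and similarity far more carefully; the point here is only to get a feel for whether your group count is in the right range.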

Looking at the clustered keywords, I would sort them into a few categories based on how people search:

- Part numbers (it doesn’t get much more bottom-of-the-funnel than that)

- Brand names

- Broad category types

Now I can be more targeted with the GA search terms, because I know what to look for.

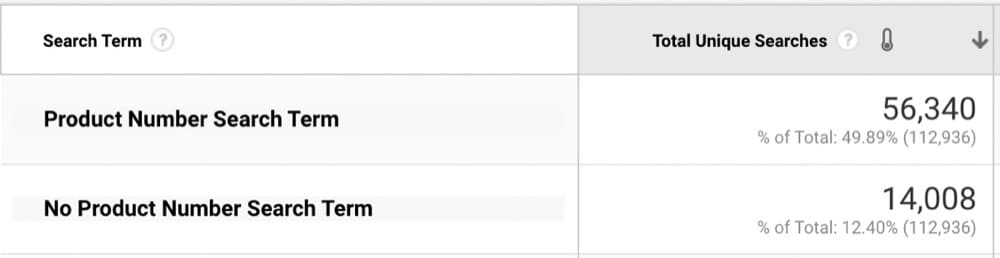

In this scenario, since users searching for product numbers have high buying intent, I would want to start there (rather than just looking at the top “x” search terms). Using a simple regex to isolate product-number searches (search terms containing more than one digit), we find that 80% of searches contain a product number 😳
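A minimal sketch of that filter, assuming “more than one number” means at least two digits anywhere in the term (adjust the pattern to your own SKU format). It weights each term by its search count so the share reflects searches rather than unique terms. The same pattern should also work as a regex filter on the search term dimension in Google Analytics. The example data is hypothetical.

```python
import re

# Assumption: a product-number search contains at least two digits (e.g. "sp1500", "hayward 1234")
PRODUCT_NUMBER = re.compile(r"\d.*\d")


def is_product_number_search(term: str) -> bool:
    return PRODUCT_NUMBER.search(term) is not None


def product_number_share(search_counts: dict[str, int]) -> float:
    """Share of all searches (weighted by search count) that contain a product number."""
    total = sum(search_counts.values())
    matched = sum(count for term, count in search_counts.items() if is_product_number_search(term))
    return matched / total if total else 0.0


# Hypothetical export: search term -> number of searches
searches = {"sp1500": 30, "pool pump": 20, "hayward 1234": 40, "filter 3": 10}
print(f"{product_number_share(searches):.0%} of searches contain a product number")  # 70% ...
```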

Now we’ll want to export our search term data from Google Analytics. If you have a lot of search terms, you might need to use the API or a tool like the free Google Analytics add-on for Google Sheets.

Inspecting the Search Results (What Are We Looking For?)

Admittedly, the first time I did this, I had to make sure I wasn’t going down a rabbit hole. But having done it too many times to count now, I know what I’m looking for 🙂

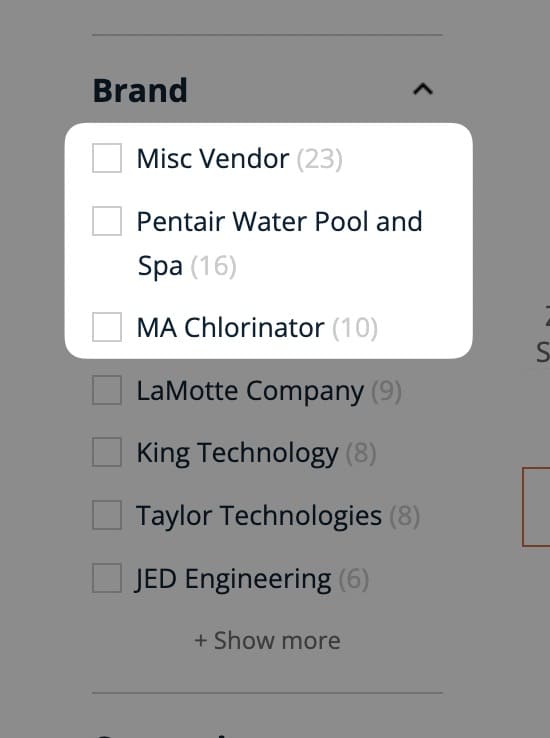

The “problem areas” here will vary based on what platform and search provider you are using. For this scenario, I’m mainly interested in:

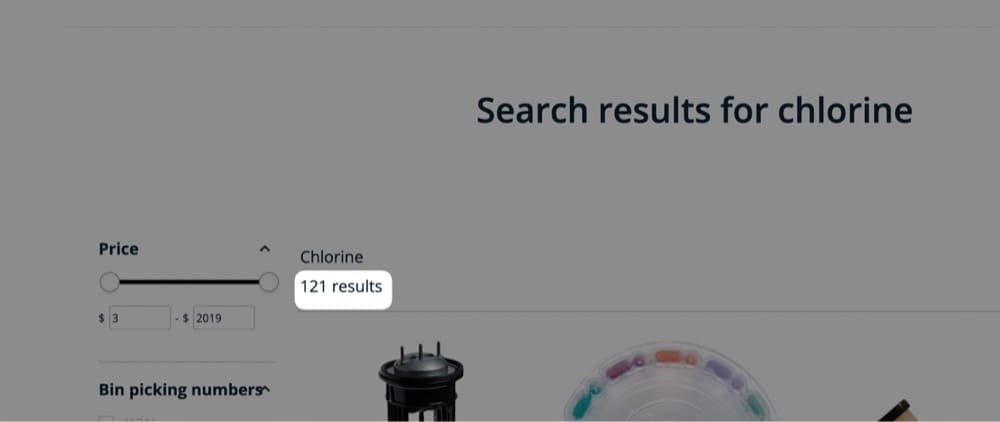

- The number of results displayed

Shown here:

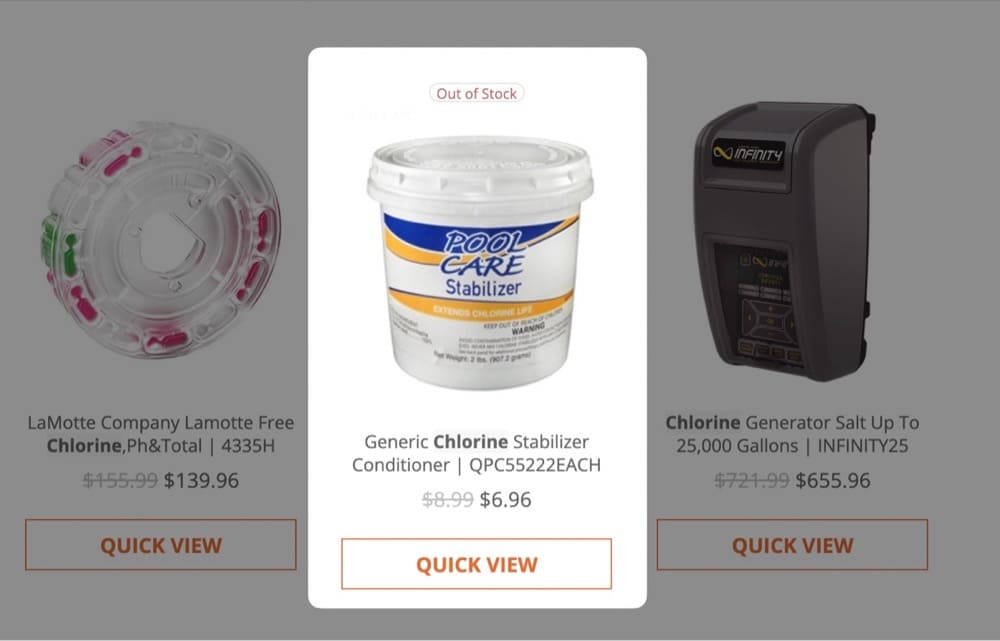

- Items that are showing out of stock

Shown here:

- The top 3 categories the search results fall under (this could be done with a VLOOKUP later on, but I prefer to do it here, all at once)

Shown here:

The following questions help me understand the data:

- Are we showing the right amount of search results for these product number searches?

With almost any other type of search, returning only one result would be less than optimal, but for these searches, showing just one result makes sense. It’s quite important, however, that the one result actually shows up!

- Which searches return the most out-of-stock products?

This matters more for searches that return a lot of results.

- Which categories…

- Get the most product searches?

- Have the most out-of-stock results?

- Have the fewest results returned (which will more than likely lead to higher exits)?
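Once each search term has a row with its category, result count, out-of-stock count, and search volume (the crawl covered below is one way to collect those), answering the category questions is a simple group-by. A sketch with hypothetical field names and rows:

```python
from collections import defaultdict


def summarize_by_category(rows: list[dict]) -> dict[str, dict]:
    """Aggregate per-term rows into per-category totals.

    Each row is assumed (hypothetically) to look like:
    {"term": str, "category": str, "searches": int, "results": int, "out_of_stock": int}
    """
    totals = defaultdict(lambda: {"searches": 0, "out_of_stock": 0, "results": 0, "terms": 0})
    for row in rows:
        bucket = totals[row["category"]]
        bucket["searches"] += row["searches"]
        bucket["out_of_stock"] += row["out_of_stock"]
        bucket["results"] += row["results"]
        bucket["terms"] += 1
    # Average results per term: low values flag categories likely to see more exits
    return {
        category: {**bucket, "avg_results": bucket["results"] / bucket["terms"]}
        for category, bucket in totals.items()
    }


rows = [
    {"term": "sp1500", "category": "Pumps", "searches": 40, "results": 1, "out_of_stock": 1},
    {"term": "pool pump", "category": "Pumps", "searches": 25, "results": 36, "out_of_stock": 4},
    {"term": "sand filter", "category": "Filters", "searches": 15, "results": 12, "out_of_stock": 0},
]
summary = summarize_by_category(rows)
for category, stats in sorted(summary.items(), key=lambda kv: kv[1]["searches"], reverse=True):
    print(category, stats["searches"], stats["out_of_stock"], stats["avg_results"])
# Pumps 65 5 18.5
# Filters 15 0 12.0
```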

Using Screaming Frog to Mimic Search Results

Now comes the fun part — using Screaming Frog!

I won’t be covering how to use Screaming Frog itself. It’s a very complex tool, and even though I’ve been using it for close to a decade, there is probably still more I could be doing with it.

They have great guides on their site (which is also where you download it): https://www.screamingfrog.co.uk/seo-spider/user-guide/ (it costs $151.77 per year and is incredibly underpriced for what it can do).

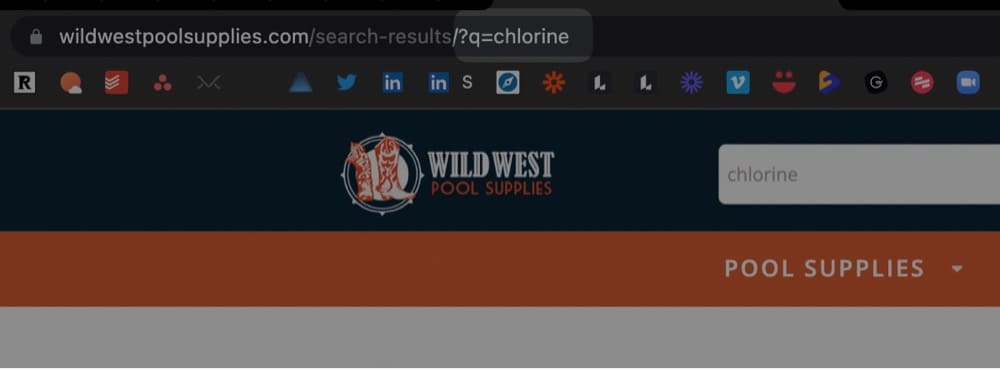

The last “attention to detail” piece is to look at your site’s URL after you search to see the pattern:

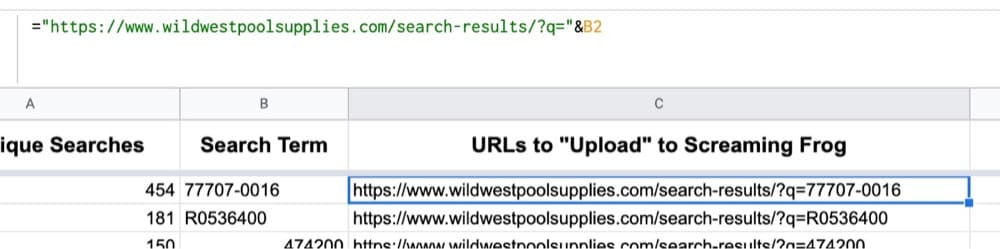

This is important to know, because we will append each search term from Google Analytics to the site URL to match this pattern. The crawler will mimic a user searching for that term, and we’ll extract the areas of interest we already covered (search results, out-of-stock items, and categories).

- Grab everything before your search term in the URL:

- Combine that URL string with the search term:

Note: if your search terms contain spaces, you will need to replace each space with %20 in the URL, using an advanced regex find-and-replace or a bit of JS. I personally use TextSoap for these manipulations.
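If you’d rather script this step, Python’s standard library can build the whole list in one pass: `urllib.parse.quote` percent-encodes spaces as %20 and also escapes characters such as & or # that would otherwise break the query string. The search prefix below is a placeholder; swap in everything before the search term in your own site’s URL.

```python
from urllib.parse import quote

# Placeholder: replace with the part of your site's search URL that comes before the term
SEARCH_PREFIX = "https://www.example.com/search?q="


def build_search_urls(terms: list[str], prefix: str = SEARCH_PREFIX) -> list[str]:
    """Append each percent-encoded search term to the search URL prefix."""
    # safe="" so "/" is encoded too; quote() uses %20 for spaces (quote_plus would use "+")
    return [prefix + quote(term, safe="") for term in terms]


def write_url_list(urls: list[str], path: str = "search-urls.txt") -> None:
    """Write one URL per line, a simple file to upload in List mode."""
    with open(path, "w", encoding="utf-8") as handle:
        handle.write("\n".join(urls) + "\n")


urls = build_search_urls(["pool filter", "sp1500", "a&b cleaner"])
print(urls)
# ['https://www.example.com/search?q=pool%20filter',
#  'https://www.example.com/search?q=sp1500',
#  'https://www.example.com/search?q=a%26b%20cleaner']
```

`quote` was chosen over `quote_plus` only because the note above asks for %20; some search endpoints accept “+” for spaces as well.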

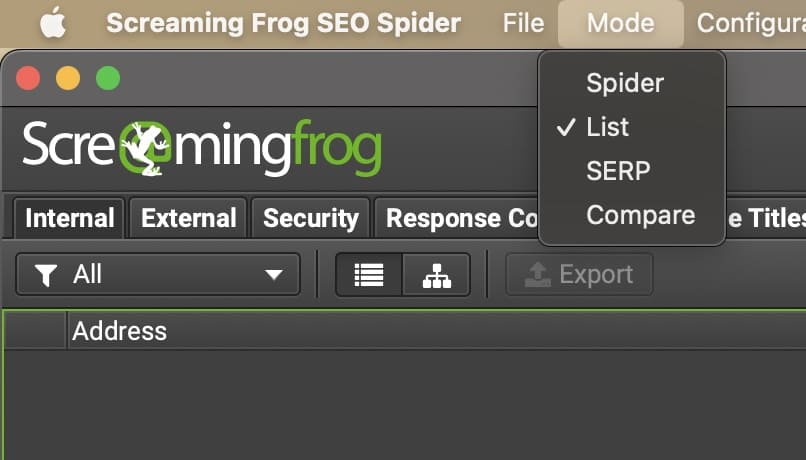

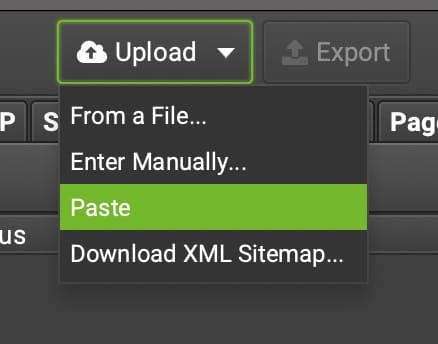

- Open up Screaming Frog and switch to List mode:

- Choose how you want to upload your list of URLs (don’t paste them in if you have over 10k; it will take forever to load):

- Before you run your crawler, you’ll want to figure out a way to extract the needed elements from the site.

I’ll show how to extract the number of search results in SF; you can choose how you want to extract the rest.

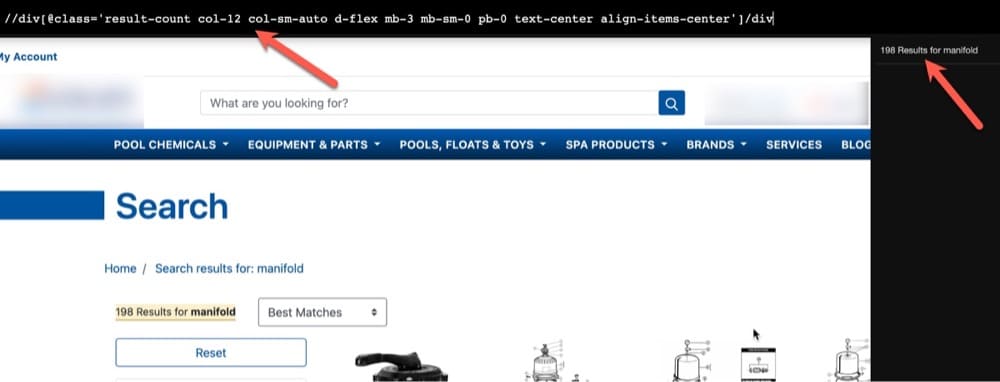

1. First, extract the number of search results, so you know how many results come back when each keyword is searched. To do that, we’ll use XPath (usually my language of choice in SF).

2. I like to try the easiest approach first and only inspect the element when needed. #WorkSmarter with the XPather Chrome extension.

3. This gives me the XPath I need, along with a preview of the output I’ll most likely see in Screaming Frog.

4. Note: I’m not going to worry about extracting only the number of results. That’s a 5-second cleanup in Google Sheets.
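That cleanup can also be done in code. A minimal sketch, assuming the extracted text looks something like “Showing 1-24 of 1,234 results” or “12 Results” (the exact wording depends on your search provider): it takes the last number in the string as the total.

```python
import re
from typing import Optional

# Digits with optional thousands separators, e.g. "24" or "1,234"
NUMBER = re.compile(r"\d[\d,]*")


def result_count(extracted_text: str) -> Optional[int]:
    """Pull the total result count out of the extracted text.

    Assumes the total is the last number in the string; returns None when
    there is no number at all (e.g. a "No results found" message).
    """
    numbers = NUMBER.findall(extracted_text)
    if not numbers:
        return None
    return int(numbers[-1].replace(",", ""))


for text in ["Showing 1-24 of 1,234 results", "12 Results", "No results found"]:
    print(repr(text), "->", result_count(text))
```

A None can mean either zero results or an XPath that matched nothing, so it’s worth spot-checking a few of those URLs by hand.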

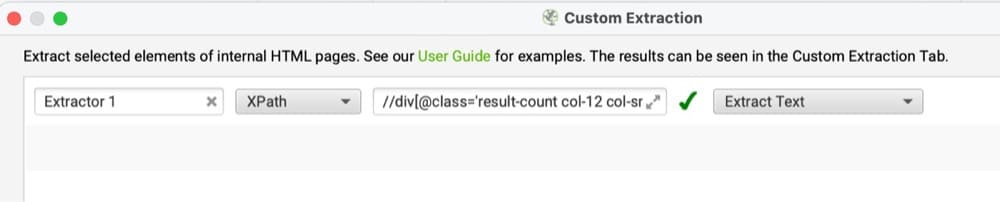

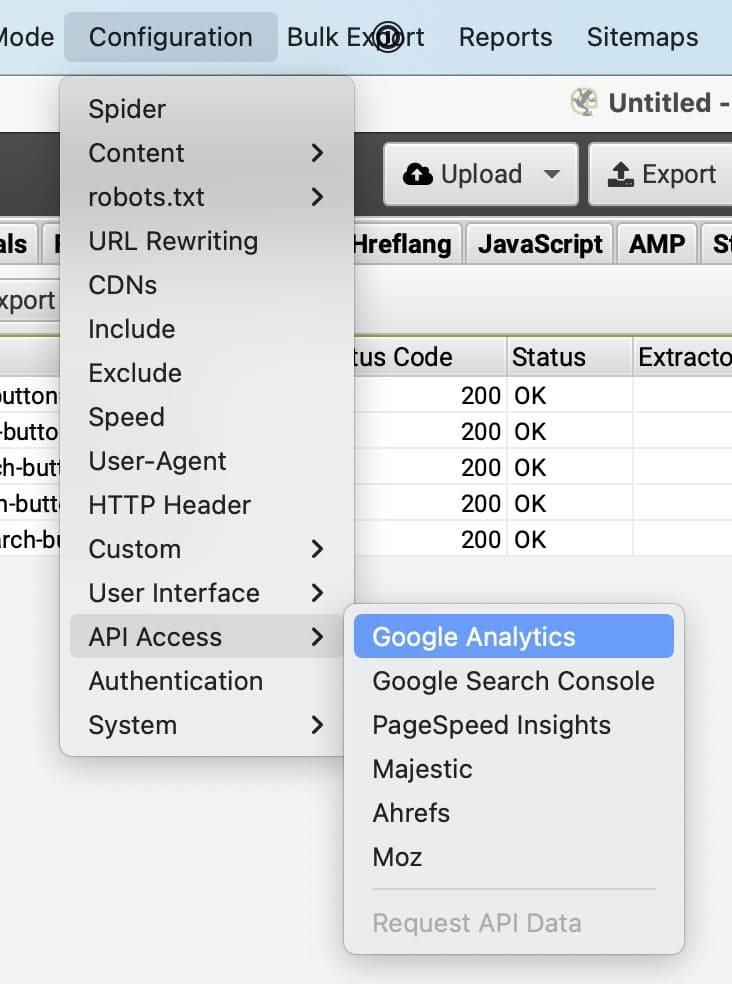

5. Now I can enter this into SF under Configuration → Custom → Extraction, seen here:

6. I will change the output to Extract Text to clean it up further and leave out unnecessary HTML.

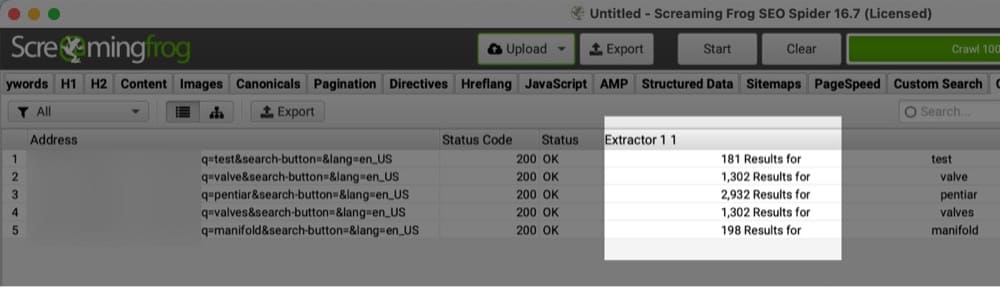

7. Once you click OK, you can crawl the URLs you previously uploaded (or upload them now). (Again, the reason we upload a list of URLs is to crawl only the search terms we care about instead of the entire site.)

8. Here is a sample of the output for a handful of URLs and the # of search results those search terms returned:

9. Next, you would probably want to know how often those searches are happening. This is where you can connect Google Analytics to see the pageview counts for the corresponding URLs.

10. I personally find it helpful here to create a “ratio” that surfaces pages with a high number of searches but a low number of results returned.

Also, is there a correlation between a low number of results and high exits on those pages? (I see this happening when there are fewer than 10 results, because the results page doesn’t look “full” when most sites show no more than 5 products per row.)

11. Other helpful data points you might use here (depending on how your search results and faceted navigation are displayed):

Which searches return the most out-of-stock results? (If users can’t take any action, like signing up to be notified when the product is back in stock, you might want to consider removing those items from the search results.)

Mapping search terms back to categories (and sub-categories, if relevant). This helps you understand user demand over time and fuels lots of different insights, from new tests to personalizing how results are sorted for ambiguous keywords.

Product # searches, like we showed earlier. Most people aren’t going to perform multiple searches if their product # doesn’t return anything. That might not be easy to fix immediately, but you could show an exit-intent pop-up that lets users get notified when that product is back in stock.

Use the URL as a variable passed into whatever form your pop-up provider uses, so you know which product # they are after.
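This would typically happen in the browser (through your pop-up tool’s settings or a bit of JS), but the logic is the same in any language: parse the query string and pull out the search parameter. A sketch, assuming the term lives in a parameter called `q`; check your site’s search URL pattern for the actual name.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse


def search_term_from_url(url: str, param: str = "q") -> Optional[str]:
    """Return the decoded search term from a search results URL, or None if absent."""
    values = parse_qs(urlparse(url).query).get(param)
    # parse_qs decodes %20 and "+" back into spaces
    return values[0] if values else None


# e.g. pre-fill a hidden "requested_product" field on a back-in-stock form (field name is hypothetical)
print(search_term_from_url("https://www.example.com/search?q=sp%201500"))  # sp 1500
```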

I could go on here, but hopefully these examples help paint a better picture of how you can scrape your own search results to gain insight into the experience they are providing users.

Note: You might have noticed I switched the site I’m extracting from here. When I started this blog post, the first site was using a different search provider; then it changed, and I wanted to show a better example based on XPath rather than diving into other methods (XPath is usually the most reliable method, along with CSS selectors).

Second note: Filter out your crawler’s IP address in Google Analytics so you don’t count yourself in your pageview numbers. If you fire your pageview tag 2 to 3 seconds after the window loads, you don’t need to worry about crawler or bot traffic like this (and kudos to you, that’s a much more truthful view of your data 👏).

Scaling + Areas of Automating This Process

Some of you might say, “Well this isn’t automated, I don’t want to be doing this manually.”

That is a totally valid point. I personally don’t mind, because most of my clients don’t have millions of search results to warrant doing this weekly (or more often). This is usually a monthly or quarterly task I do alongside Google Analytics to see whether trends and patterns are changing.

If you are more technical, you could stick with Screaming Frog and set up a VPS solution to run automated crawls in the cloud, pushing the results to Google BigQuery upon completion. SF has a nice guide on that.

There are other solutions out there that are more “turn-key” and definitely less hands-on, like ContentKing (I have used them in the past), which also allows for alerts when certain thresholds are hit. (Think: a product number search that returns no results triggers an email or Slack notification.)
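If you build the DIY version on top of your own crawls instead, the alert rule itself is small. A sketch that interprets a product-number search as a term with at least two digits and returns the messages you would send; actually delivering them (email, a Slack webhook) is left out because it depends on your setup, and this is not how ContentKing implements its alerts.

```python
import re

# Assumption: a product-number search contains at least two digits
PRODUCT_NUMBER = re.compile(r"\d.*\d")


def zero_result_alerts(results_by_term: dict[str, int]) -> list[str]:
    """Build one alert message per product-number search that returned no results."""
    return [
        f"No results for product-number search {term!r}"
        for term, count in sorted(results_by_term.items())
        if count == 0 and PRODUCT_NUMBER.search(term)
    ]


# Hypothetical output of the latest crawl: search term -> number of results
latest_crawl = {"sp1500": 0, "pool pump": 0, "hayward 1234": 3, "xf-2200 cartridge": 0}
for message in zero_result_alerts(latest_crawl):
    print(message)
```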

Other Areas to Look For With an SEO Crawler

Hopefully this has opened your eyes a bit more to the possibilities of using SEO crawlers for optimization insights. (Screaming Frog is just one of many, but it’s the one I recommend.)

Internal search results are just a small part of what I use crawlers for. There is so much more that crawlers can find for you. I use them in many different ways when working with my clients.

If you have specific questions or comments about this or anything else CRO-related, don’t hesitate to reach out to me via LinkedIn, Twitter, or email.

Written By

Ryan Levander

Edited By

Carmen Apostu