Testing Mind Map Series: How to Think Like a CRO Pro (Part 40)

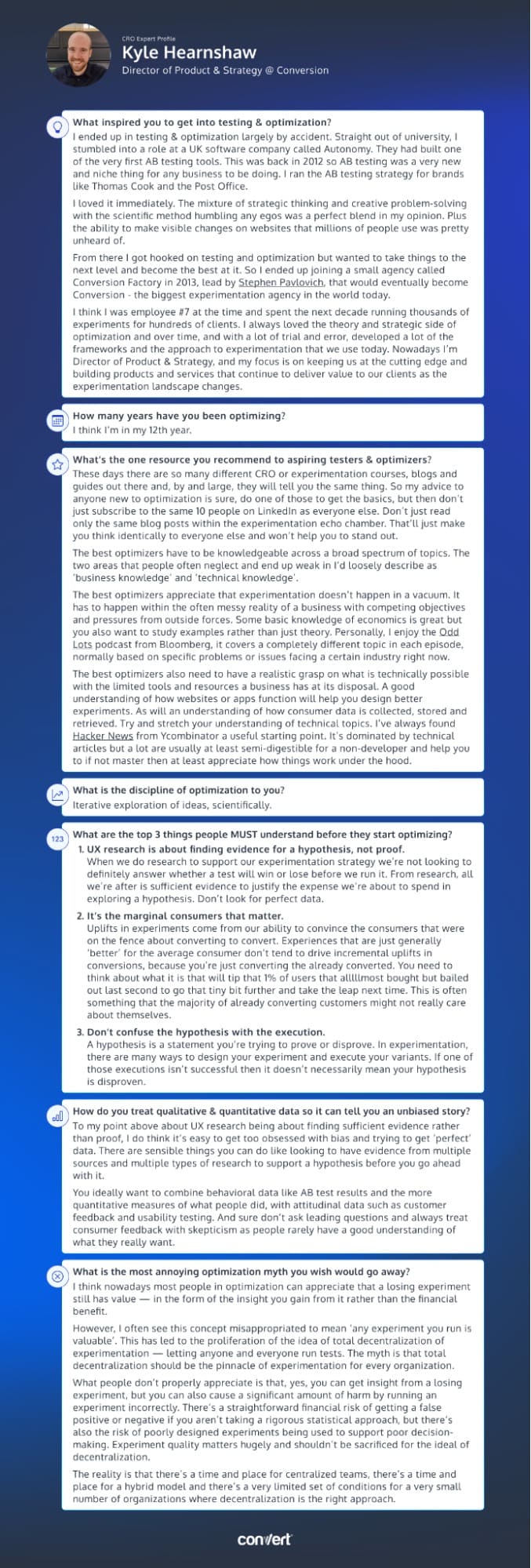

Interview with Kyle Hearnshaw

Our guest today is Kyle Hearnshaw, Director of Product & Strategy at Conversion. Kyle veers away from the common advice given to aspiring testers and optimizers and urges us to move beyond the echo chambers of experimentation. His two go-to resources, the Odd Lots podcast and Hacker News, serve up a varied mix of business and technical knowledge.

To Kyle, optimization isn’t trapped in rigid definitions. He sees it as an “iterative exploration of ideas, scientifically.” It’s less about crafting perfect experiments and more about finding the kind of evidence that stands up to scrutiny.

One of the most grating myths, according to Kyle, is the idea of total decentralization in experimentation. It stems from a misreading of the idea that a losing experiment still has value. Kyle warns against poorly executed experiments that can do more harm than good.

Now, let’s dive into Kyle’s optimization world…

Kyle, tell us about yourself. What inspired you to get into testing & optimization?

I ended up in testing & optimization largely by accident. Straight out of university, I stumbled into a role at a UK software company called Autonomy. They had built one of the very first AB testing tools. This was back in 2012, so AB testing was a very new and niche thing for any business to be doing. I ran the AB testing strategy for brands like Thomas Cook and the Post Office.

I loved it immediately. The mixture of strategic thinking and creative problem-solving with the scientific method humbling any egos was a perfect blend in my opinion. Plus the ability to make visible changes on websites that millions of people use was pretty unheard of.

From there I got hooked on testing and optimization but wanted to take things to the next level and become the best at it. So I ended up joining a small agency called Conversion Factory in 2013, led by Stephen Pavlovich, that would eventually become Conversion, the biggest experimentation agency in the world today.

I think I was employee #7 at the time and spent the next decade running thousands of experiments for hundreds of clients. I always loved the theory and strategic side of optimization and over time, and with a lot of trial and error, developed a lot of the frameworks and the approach to experimentation that we use today. Nowadays I’m Director of Product & Strategy, and my focus is on keeping us at the cutting edge and building products and services that continue to deliver value to our clients as the experimentation landscape changes.

How many years have you been optimizing for?

I think I’m in my 12th year.

What’s the one resource you recommend to aspiring testers & optimizers?

These days there are so many different CRO or experimentation courses, blogs and guides out there and, by and large, they will tell you the same thing. So my advice to anyone new to optimization is sure, do one of those to get the basics, but then don’t just subscribe to the same 10 people on LinkedIn as everyone else. Don’t just read only the same blog posts within the experimentation echo chamber. That’ll just make you think identically to everyone else and won’t help you to stand out.

The best optimizers have to be knowledgeable across a broad spectrum of topics. The two areas that people often neglect and end up weak in I’d loosely describe as ‘business knowledge’ and ‘technical knowledge’.

The best optimizers appreciate that experimentation doesn’t happen in a vacuum. It has to happen within the often messy reality of a business with competing objectives and pressures from outside forces. Some basic knowledge of economics is great, but you also want to study examples rather than just theory. Personally, I enjoy the Odd Lots podcast from Bloomberg. It covers a completely different topic in each episode, normally based on specific problems or issues facing a certain industry right now.

The best optimizers also need to have a realistic grasp on what is technically possible with the limited tools and resources a business has at its disposal. A good understanding of how websites or apps function will help you design better experiments. As will an understanding of how consumer data is collected, stored and retrieved. Try to stretch your understanding of technical topics. I’ve always found Hacker News from Y Combinator a useful starting point. It’s dominated by technical articles, but a lot are usually at least semi-digestible for a non-developer and help you to, if not master, then at least appreciate how things work under the hood.

Answer in 5 words or less: What is the discipline of optimization to you?

Iterative exploration of ideas, scientifically.

What are the top 3 things people MUST understand before they start optimizing?

UX research is about finding evidence for a hypothesis, not proof. When we do research to support our experimentation strategy we’re not looking to definitively answer whether a test will win or lose before we run it. From research, all we’re after is sufficient evidence to justify the expense we’re about to incur in exploring a hypothesis. Don’t look for perfect data.

It’s the marginal consumers that matter. Uplifts in experiments come from our ability to convince the consumers that were on the fence about converting to convert. Experiences that are just generally ‘better’ for the average consumer don’t tend to drive incremental uplifts in conversions, because you’re just converting the already converted. You need to think about what it is that will tip that 1% of users that alllllmost bought but bailed out last second to go that tiny bit further and take the leap next time. This is often something that the majority of already converting customers might not really care about themselves.

Don’t confuse the hypothesis with the execution. A hypothesis is a statement you’re trying to prove or disprove. In experimentation, there are many ways to design your experiment and execute your variants. If one of those executions isn’t successful then it doesn’t necessarily mean your hypothesis is disproven.

How do you treat qualitative & quantitative data to minimize bias?

To my point above about UX research being about finding sufficient evidence rather than proof, I do think it’s easy to get too obsessed with bias and trying to get ‘perfect’ data. There are sensible things you can do like looking to have evidence from multiple sources and multiple types of research to support a hypothesis before you go ahead with it.

You ideally want to combine behavioral data like AB test results and the more quantitative measures of what people did, with attitudinal data such as customer feedback and usability testing. And sure, don’t ask leading questions, and always treat consumer feedback with skepticism, as people rarely have a good understanding of what they really want.

What is the most annoying optimization myth you wish would go away?

I think nowadays most people in optimization can appreciate that a losing experiment still has value — in the form of the insight you gain from it rather than the financial benefit.

However, I often see this concept misappropriated to mean ‘any experiment you run is valuable’. This has led to the proliferation of the idea of total decentralization of experimentation — letting anyone and everyone run tests. The myth is that total decentralization should be the pinnacle of experimentation for every organization.

What people don’t properly appreciate is that, yes, you can get insight from a losing experiment, but you can also cause a significant amount of harm by running an experiment incorrectly. There’s a straightforward financial risk of getting a false positive or negative if you aren’t taking a rigorous statistical approach, but there’s also the risk of poorly designed experiments being used to support poor decision-making. Experiment quality matters hugely and shouldn’t be sacrificed for the ideal of decentralization.

The reality is that there’s a time and place for centralized teams, there’s a time and place for a hybrid model and there’s a very limited set of conditions for a very small number of organizations where decentralization is the right approach.
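To make the false positive risk mentioned above a little more concrete, here is a minimal sketch of one common check, a pooled two-proportion z-test, written with only the Python standard library. It is an illustration rather than the method Kyle or Conversion uses; the function name `two_proportion_p_value` and the visitor and conversion counts are hypothetical and chosen purely for the example.

```python
import math


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for a pooled two-proportion z-test.

    conv_a / conv_b: conversions in control / variant (hypothetical inputs)
    n_a / n_b: visitors in control / variant
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / se
    # Two-sided tail probability of the standard normal distribution
    return math.erfc(abs(z) / math.sqrt(2))


# Illustrative numbers only: 2.0% vs 2.3% conversion, a 15% relative uplift.
small_sample = two_proportion_p_value(200, 10_000, 230, 10_000)
large_sample = two_proportion_p_value(2_000, 100_000, 2_300, 100_000)

print(f"10k visitors per arm:  p = {small_sample:.4f}")
print(f"100k visitors per arm: p = {large_sample:.6f}")
```

With these made-up numbers, the same 15% relative uplift is not significant at the conventional 0.05 level with 10,000 visitors per arm, but is with 100,000. Calling the smaller test a winner would be exactly the kind of decision built on weak evidence that the answer above warns about.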

Download the infographic above and add it to your swipe file for a little inspiration when you’re feeling stuck!

Thank you for joining us for this exclusive interview with Kyle! We hope you’ve gained some valuable insights from his experiences and advice, and we strongly encourage you to put them into action in your own optimization efforts.

Check back once a month for upcoming interviews! And if you haven’t already, check out our past interviews with CRO pros Gursimran Gujral, Haley Carpenter, Rishi Rawat, Sina Fak, Eden Bidani, Jakub Linowski, Shiva Manjunath, Deborah O’Malley, Andra Baragan, Rich Page, Ruben de Boer, Abi Hough, Alex Birkett, John Ostrowski, Ryan Levander, Ryan Thomas, Bhavik Patel, Siobhan Solberg, Tim Mehta, Rommil Santiago, Steph Le Prevost, Nils Koppelmann, Danielle Schwolow, Kevin Szpak, Marianne Stjernvall, Christoph Böcker, Max Bradley, Samuel Hess, Riccardo Vandra, Lukas Petrauskas, Gabriela Florea, Sean Clanchy, Ryan Webb, Tracy Laranjo, Lucia van den Brink, LeAnn Reyes, Lucrezia Platé, Daniel Jones, and our latest with May Chin.

Written By

Kyle Hearnshaw

Edited By

Carmen Apostu