Testing Mind Map Series: How to Think Like a CRO Pro (Part 71)

Interview with Eddie Aguilar

Every couple of weeks, we get up close and personal with some of the brightest minds in the CRO and experimentation community.

We’re on a mission to discover what lies behind their success. Get real answers to your toughest questions. Share hidden gems and unique insights you won’t find in the books. Condense years of real-world experience into actionable tactics and strategies.

This week, we’re chatting with Eddie Aguilar, agency founder at Blazing Growth and a 16-year digital optimization veteran who’s been a Convert power user since its beta days.

Eddie, tell us about yourself. What inspired you to get into testing & optimization?

I was a full stack developer for quite some time (about 3 years professionally) before I decided to switch to optimization full time. Before that, I was optimizing my esports gameplay in high school: I used to play StarCraft at a professional level and was paid to play. I took that same approach to my code during the first three years of my career. Eventually, I realized I could use this skill set to optimize user journeys and web experiences.

My first real optimization job was at an insurance lead gen company, where my director was a nuclear scientist. (He mentioned he made more money doing optimization for agencies than doing actual nuclear science, which is absurd to think about now.) He introduced me more deeply to the world of optimization and A/B testing, after which I focused my career strictly on optimization, experimentation, and CRO.

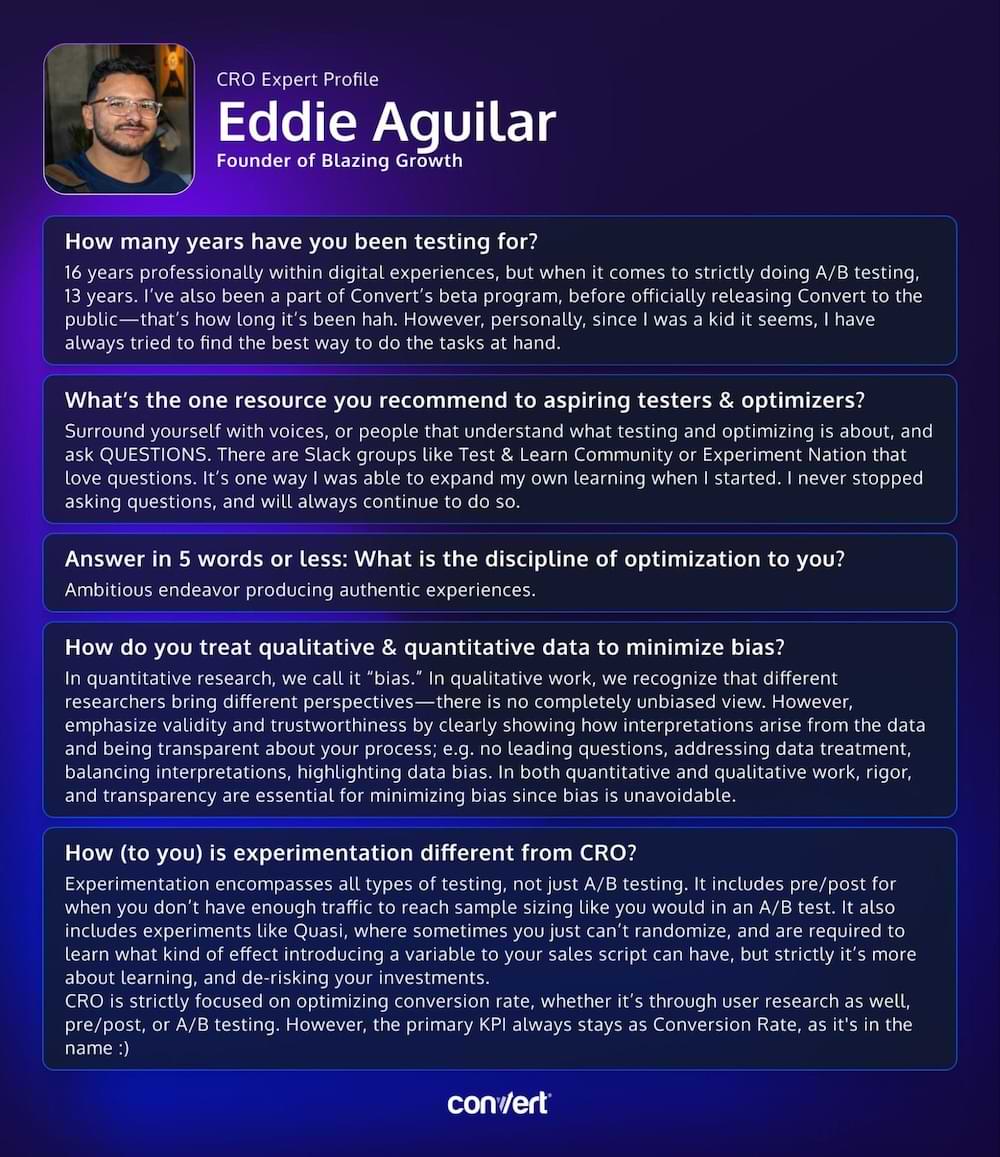

How many years have you been testing for?

16 years professionally in digital experiences, but 13 years when it comes strictly to A/B testing. I was also part of Convert's beta program before Convert was officially released to the public; that's how long it's been, hah. On a personal level, though, it seems I've been trying to find the best way to do the task at hand since I was a kid.

What’s the one resource you recommend to aspiring testers & optimizers?

Surround yourself with people who understand what testing and optimization are about, and ask QUESTIONS. Slack groups like Test & Learn Community and Experiment Nation love questions. That's one way I was able to expand my own learning when I started. I never stopped asking questions, and I always will.

Answer in 5 words or less: What is the discipline of optimization to you?

Ambitious endeavor producing authentic experiences.

What are the top 3 things people MUST understand before they start optimizing?

Data sources: Where does all this data come from? Are you going to rely on the reports your testing tool provides, or ship the data off to another analytics tool and report there? Without understanding the data, how it's generated, and where it ends up, you're in for a rude awakening when you run into major false positives and massive data discrepancies across the board. This leads me to my next point: have at least a basic understanding of the technical aspects of experimentation.

Technical implications: What looks like a 3-hour task rarely is. Building experiments requires some technical knowledge. That knowledge doesn't have to be your own, since it can come from someone on your team, but you need to understand how long it may take to build even a hero banner experiment.

As my good friend Cory Underwood once said:

“People believe that a ‘simple’ UI change always requires a low amount of development effort. Example: Change the Hero image. Simple, right? Then – Change the Hero Image based on a calculation using a weather forecast for the user’s zip code 4 days from now – and they believe it’s the same level of effort.”

These are totally different levels of effort. Changing a background and changing the background based on geography require completely different technical skill sets. The first can be done with a basic understanding of JavaScript, while the second requires expert-level skills: manipulating the DOM based on API calls, handling user locations (which can be PII, causing even more issues), and so on.

Understanding how to actually formulate a hypothesis: When you understand both data sources and technical implications, you can formulate a highly executable hypothesis. You'll see the difference between "If we change the hero background, then we expect to see more engagement" and "If we change the hero background based on geography, then we expect to see more engagement because the background is personalized to the user's location." A clearer hypothesis lets you give your developers more detailed execution instructions, rather than going in circles about what the experiment should include. This is the foundation on which your testing plan can thrive.

How do you treat qualitative & quantitative data to minimize bias?

In quantitative research, we call it "bias." In qualitative work, we recognize that different researchers bring different perspectives, so there is no completely unbiased view. Instead, we emphasize validity and trustworthiness by clearly showing how interpretations arise from the data and by being transparent about the process: avoiding leading questions, explaining how the data was treated, balancing interpretations, and highlighting data bias. In both quantitative and qualitative work, rigor and transparency are essential, because bias can never be eliminated entirely, only minimized.

How (to you) is experimentation different from CRO?

Experimentation encompasses all types of testing, not just A/B testing. It includes pre/post analysis for when you don't have enough traffic to reach the sample size an A/B test would require. It also includes quasi-experiments, for when you simply can't randomize; for example, when you need to learn what effect introducing a variable to your sales script can have. Ultimately, experimentation is more about learning and de-risking your investments.
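To make the traffic point concrete, here is a minimal sketch of the standard normal-approximation sample size formula for comparing two conversion rates. The function name, the 3% baseline conversion rate, and the 10% relative lift are illustrative assumptions, not figures from the interview.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant for a two-sided, two-proportion z-test.

    Hypothetical helper based on the normal approximation:
    n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)  # expected conversion rate in the variant
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)  # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)  # round up: you can't recruit a fraction of a visitor


if __name__ == "__main__":
    # Illustrative inputs: 3% baseline conversion rate, hoping to detect a 10% relative lift
    print(sample_size_per_variant(0.03, 0.10))
```

With these assumed inputs the formula asks for roughly 53,000 visitors per variant. A site that can't deliver that much traffic within a reasonable test window is where pre/post or quasi-experimental approaches become the practical option.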

CRO is strictly focused on optimizing conversion rate, whether through user research, pre/post analysis, or A/B testing. The primary KPI always remains conversion rate; it's in the name 🙂

Talk to us about some of the unique experiments you’ve run over the years.

I've been fortunate to run many unique experiments over the years, as my work now involves designing highly complex experiments that go beyond the digital experience and become part of an entire business's growth.

However, one that always stands out is an experiment I ran on Convert when it was still in its early stages. The client was US Polo Assn, and we were testing mobile checkout by introducing a way to "add to cart" from the category page rather than only from product pages. The experiment proved successful, but at the time Convert had no real way to flag revenue outliers, or even show how revenue was actually performing, unless we manually pushed that data back into Convert. As we dug deeper into the results, we realized we needed to build a revenue event mechanism that excluded outliers. What we saw was that we had given resellers (people or companies who purchased in large volumes) a much easier way to buy clothing during sales periods. This threw everyone off: we needed to rerun the test to validate it for regular users rather than resellers, so the mechanism was updated to exclude the revenue and users behind purchases of over $1,000 worth of items. We also learned that the client needed to give resellers a way to make purchases that doesn't mix with its regular, public-facing website.
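The outlier rule described above can be sketched as a filter applied before comparing revenue between variants. This is a minimal illustration, not the mechanism actually built for this test: the order format, the function name, and the choice to sum each user's spend across the whole test (rather than per order) are assumptions. Only the $1,000 cutoff comes from the interview.

```python
from collections import defaultdict

# Cutoff from the interview: users spending more than $1,000 are treated as resellers
RESELLER_THRESHOLD = 1000.0


def revenue_per_variant(orders, threshold=RESELLER_THRESHOLD):
    """Sum revenue per variant, excluding users whose total spend exceeds the threshold.

    orders: iterable of (user_id, variant, amount) tuples (hypothetical format).
    Returns (revenue_by_variant, excluded_user_ids).
    """
    orders = list(orders)

    # First pass: total spend per user across the whole test (assumption)
    spend_by_user = defaultdict(float)
    for user_id, _variant, amount in orders:
        spend_by_user[user_id] += amount
    excluded = {user for user, total in spend_by_user.items() if total > threshold}

    # Second pass: only count revenue from users below the cutoff
    revenue = defaultdict(float)
    for user_id, variant, amount in orders:
        if user_id not in excluded:
            revenue[variant] += amount
    return dict(revenue), excluded


if __name__ == "__main__":
    sample_orders = [
        ("u1", "control", 80.0),
        ("u2", "variant", 120.0),
        ("u3", "variant", 700.0),  # u3 spends 1,350 in total, so it is excluded
        ("u3", "variant", 650.0),
        ("u4", "control", 45.5),
    ]
    print(revenue_per_variant(sample_orders))
```

Excluding the whole user rather than a single large order keeps a reseller's smaller purchases from leaking back into the comparison; whether to cut per order or per user is a judgment call that depends on how resellers actually behave on the site.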

Cheers for reading! If you've caught the CRO bug, you're in good company here. Be sure to check back often: we have fresh interviews dropping twice a month.

And if you’re in the mood for a binge read, have a gander at our earlier interviews with Gursimran Gujral, Haley Carpenter, Rishi Rawat, Sina Fak, Eden Bidani, Jakub Linowski, Shiva Manjunath, Deborah O’Malley, Andra Baragan, Rich Page, Ruben de Boer, Abi Hough, Alex Birkett, John Ostrowski, Ryan Levander, Ryan Thomas, Bhavik Patel, Siobhan Solberg, Tim Mehta, Rommil Santiago, Steph Le Prevost, Nils Koppelmann, Danielle Schwolow, Kevin Szpak, Marianne Stjernvall, Christoph Böcker, Max Bradley, Samuel Hess, Riccardo Vandra, Lukas Petrauskas, Gabriela Florea, Sean Clanchy, Ryan Webb, Tracy Laranjo, Lucia van den Brink, LeAnn Reyes, Lucrezia Platé, Daniel Jones, May Chin, Kyle Hearnshaw, Gerda Vogt-Thomas, Melanie Kyrklund, Sahil Patel, Lucas Vos, David Sanchez del Real, Oliver Kenyon, David Stepien, Maria Luiza de Lange, Callum Dreniw, Shirley Lee, Rúben Marinheiro, Lorik Mullaademi, Sergio Simarro Villalba, Georgiana Hunter-Cozens, Asmir Muminovic, Edd Saunders, Marc Uitterhoeve, Zander Aycock, Eduardo Marconi Pinheiro Lima, Linda Bustos, Marouscha Dorenbos, Cristina Molina, Tim Donets, Jarrah Hemmant, Cristina Giorgetti, Tom van den Berg, Tyler Hudson, Oliver West, Brian Poe, and Carlos Trujillo.

Written By

Eddie Aguilar

Edited By

Carmen Apostu