Testing Mind Map Series: How to Think Like a CRO Pro (Part 45)

Interview with David Sanchez del Real

Meet David Sanchez del Real, a guy who’s been hooked on solving puzzles since he was a kid tinkering with busted TVs. These days, he’s swapped circuit boards for data sets and is the Head of Optimization at AWA digital.

David is a firm believer in the “scientifically challenging intuition” approach to optimization. For him, it’s not just about the numbers; it’s about the narrative they tell, the hypotheses they validate or debunk, and the ever-elusive causality they reveal.

So, buckle up as we delve into the mind of a man who’s made it his mission to make the world more data-driven, one experiment at a time.

David, tell us about yourself. What inspired you to get into testing & optimization?

I was always obsessed with understanding problems, and daring (or stupid) enough to try and iterate. The kind of kid who would open a broken TV just to see if I could fix it.

Critical thinking combined with a need to fix everything, if you will.

I think that most CROs in my generation fell into it somehow. I’m by no means a pioneer, but when I started almost a decade ago, CRO and Experimentation were not household terms. For me, it was a combination of personality traits and lucky chances.

My first contact with CRO dates back to 2014, when I was working as a Digital Strategist for a small agency. We did mostly analytics, SEO, PPC, and content. I especially loved analytics because it was like detective work: trying to find clues and inconsistencies to explain what was going on.

It bothered me greatly that we were able to bring a lot of traffic to our clients’ sites, but the impact on their revenue didn’t necessarily follow. More importantly, it bothered me that when results were good we could claim “we did great”, and when they weren’t we would blame seasonality. I needed to understand direct causality… and solve the problem.

As a trial in one of my accounts, we started running a few small experiments using what we knew from customer service logs, speaking to some employees about their customers, and a couple of simple surveys. I loved it immediately, but despite the success, we never went deep into it. It wasn’t “our bread and butter”, I was told.

After a while, when I had decided to move on, luck knocked on my door: someone at Conversion.com came across my profile on LinkedIn, thought it looked interesting, and booked me in for an interview. Fast forward to my notice period and there I was, learning from people like Kyle Hearnshaw (who was featured recently in this series) and the team.

And the rest is history. Since then, I’ve been working with and building CRO teams, and I now head the team at AWA.

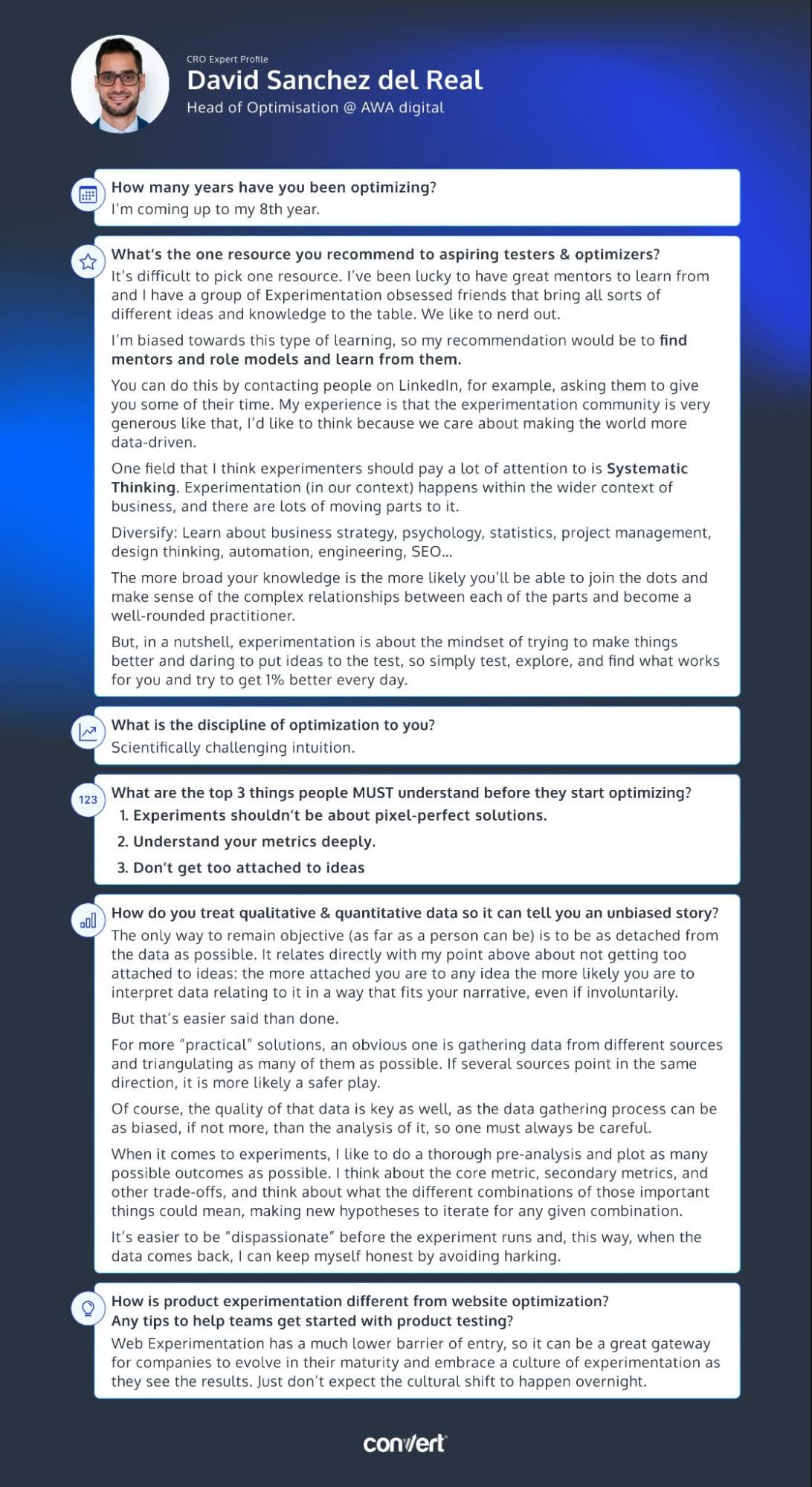

How many years have you been optimizing for?

I’m coming up to my 8th year.

What’s the one resource you recommend to aspiring testers & optimizers?

It’s difficult to pick one resource. I’ve been lucky to have great mentors to learn from, and I have a group of experimentation-obsessed friends who bring all sorts of different ideas and knowledge to the table. We like to nerd out.

I’m biased towards this type of learning, so my recommendation would be to find mentors and role models and learn from them.

You can do this by contacting people on LinkedIn, for example, and asking them for some of their time. In my experience, the experimentation community is very generous like that; I’d like to think it’s because we care about making the world more data-driven.

One field that I think experimenters should pay a lot of attention to is Systems Thinking. Experimentation (in our context) happens within the wider context of a business, and there are lots of moving parts to it.

Diversify: Learn about business strategy, psychology, statistics, project management, design thinking, automation, engineering, SEO…

The broader your knowledge, the more likely you are to join the dots, make sense of the complex relationships between the parts, and become a well-rounded practitioner.

But, in a nutshell, experimentation is about the mindset of trying to make things better and daring to put ideas to the test. So simply test, explore, find what works for you, and try to get 1% better every day.

Answer in 5 words or less: What is the discipline of optimization to you?

Scientifically challenging intuition

What are the top things people MUST understand before they start optimizing?

- Experiments shouldn’t be about pixel-perfect solutions.

Your experiments are a way to validate your hypotheses, and they should do this as quickly and efficiently as possible. Resources are limited.

Most experiments fail, so if you obsess about trying to build a perfect solution for every experiment, you’ll be wasting a lot of effort on ideas that don’t have merit. One hypothesis may be executed in many different ways, so try to find the one that requires the lowest effort to prove first, and refine it once you know you’re on the right track. Just don’t throw away a hypothesis because your first execution lost, though.

- Understand your metrics deeply

An increase in conversion rate may bring an increase in revenue, and yet the two combined may still come with a decrease in profitability.

Make sure that you’re very clear on the metrics you’re trying to move and their potential trade-offs, and build your hypotheses around them. Otherwise, you could be harming the business while celebrating your wins.

Creating an Overall Evaluation Criterion (OEC) may be ideal, but when you’re starting out, just paying close attention to these trade-offs should be enough.

- Don’t get too attached to ideas

Humans are very bad at estimating outcomes, and that’s why most experiments fail. Experimentation is very humbling because it shows you this over and over again, but it can be soul-crushing if you just want to be right.

Leave your ego at home.
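To make the point about metric trade-offs concrete, here is a minimal Python sketch with made-up numbers. Every figure, and the `ArmResult` class itself, is a hypothetical illustration rather than anything from David's work; the per-order cost is a simplified stand-in for goods and fulfilment. A variant that discounts prices converts more visitors and brings in more revenue, yet earns less gross profit because each order carries a thinner margin.

```python
from dataclasses import dataclass


@dataclass
class ArmResult:
    """Hypothetical summary of one experiment arm."""
    visitors: int
    orders: int
    avg_order_value: float  # revenue per order
    unit_cost: float        # assumed cost per order (goods + fulfilment)

    @property
    def conversion_rate(self) -> float:
        return self.orders / self.visitors

    @property
    def revenue(self) -> float:
        return self.orders * self.avg_order_value

    @property
    def profit(self) -> float:
        # Gross profit: margin per order times number of orders.
        return self.orders * (self.avg_order_value - self.unit_cost)


def relative_change(new: float, old: float) -> float:
    """Relative lift of the variant over the control."""
    return (new - old) / old


# Made-up numbers: the variant applies a 10% discount (50.0 -> 45.0).
control = ArmResult(visitors=10_000, orders=300, avg_order_value=50.0, unit_cost=35.0)
variant = ArmResult(visitors=10_000, orders=360, avg_order_value=45.0, unit_cost=35.0)

if __name__ == "__main__":
    for metric in ("conversion_rate", "revenue", "profit"):
        change = relative_change(getattr(variant, metric), getattr(control, metric))
        print(f"{metric}: {change:+.1%}")
```

With these inputs the script prints +20.0% for conversion rate, +8.0% for revenue, and -20.0% for profit: a test judged on conversion rate alone would be declared a clear winner while the business earns less.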

How do you treat qualitative & quantitative data to minimize bias?

The only way to remain objective (as far as a person can be) is to be as detached from the data as possible. It relates directly to my point above about not getting too attached to ideas: the more attached you are to an idea, the more likely you are to interpret data relating to it in a way that fits your narrative, even involuntarily.

But that’s easier said than done.

For more “practical” solutions, an obvious one is gathering data from different sources and triangulating across as many of them as possible. If several sources point in the same direction, it is more likely to be a safe bet.

Of course, the quality of that data is key as well: the data-gathering process can be as biased as the analysis, if not more so, so one must always be careful.

When it comes to experiments, I like to do a thorough pre-analysis and map out as many possible outcomes as I can. I think about the core metric, secondary metrics, and other trade-offs, consider what each combination of those results could mean, and prepare a new hypothesis to iterate on for any given combination.

It’s easier to be “dispassionate” before the experiment runs and, this way, when the data comes back, I can keep myself honest by avoiding HARKing (Hypothesizing After the Results are Known).
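One way to put this kind of pre-analysis into practice is to write down, before launch, what every combination of results would mean, so the interpretation is fixed before anyone sees the data. The Python sketch below is our illustration, not David's actual template: the pairing of one primary metric with one secondary metric, the 1% threshold, and the interpretation texts are all hypothetical placeholders. A real analysis would classify results with confidence intervals rather than a fixed cut-off.

```python
from itertools import product

LEVELS = ("up", "flat", "down")


def direction(change: float, threshold: float = 0.01) -> str:
    """Bucket a relative change. The 1% threshold is a placeholder,
    not a substitute for a proper statistical test."""
    if change > threshold:
        return "up"
    if change < -threshold:
        return "down"
    return "flat"


# Written BEFORE launch: (primary, secondary) -> interpretation and next step.
OUTCOME_PLAN = {
    ("up", "up"): "Both improve: hypothesis supported, plan the rollout.",
    ("up", "flat"): "Primary improves at no secondary cost: hypothesis supported.",
    ("up", "down"): "Trade-off: weigh the primary gain against the secondary loss before shipping.",
    ("flat", "up"): "No primary effect, secondary improves: refine the hypothesis around the secondary behaviour.",
    ("flat", "flat"): "No detectable effect: try a bolder execution before discarding the hypothesis.",
    ("flat", "down"): "Secondary harm with no primary benefit: do not ship, investigate why.",
    ("down", "up"): "Primary drops, secondary rises: check for a shift in customer mix.",
    ("down", "flat"): "Primary drops: this execution likely fails, review the supporting evidence.",
    ("down", "down"): "Clear loss: revert and record the learning.",
}

# Fail fast if any combination was left without a pre-agreed interpretation.
missing = set(product(LEVELS, repeat=2)) - set(OUTCOME_PLAN)
if missing:
    raise ValueError(f"No plan for outcomes: {sorted(missing)}")


def interpret(primary_change: float, secondary_change: float) -> str:
    """Return the interpretation that was agreed before the data came in."""
    return OUTCOME_PLAN[(direction(primary_change), direction(secondary_change))]


if __name__ == "__main__":
    # Example: primary metric +4%, secondary metric -3%.
    print(interpret(0.04, -0.03))
```

Calling `interpret(0.04, -0.03)` returns the pre-written trade-off entry. The completeness check is the point of the design: if a combination has no agreed meaning, the plan fails before launch rather than inviting a story to be written after the results arrive.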

How is product experimentation different from website optimization? Any tips to help teams get started with product testing?

We could think of Website Experimentation as a small part of Product Experimentation, in the same way (controversial, I know) that we could understand CRO as a small part of Experimentation, or UI/UX design as part of Product design.

Strictly speaking, Web Experimentation would deal solely with the website itself, the digital interface, whereas Product Experimentation could involve testing every aspect of a company’s offering: improving existing features, introducing new ones, pricing, delivery, aftersales, and so on. It’s Full-Stack experimentation on steroids.

Some lines may seem blurry, like whether improving the algorithm on a product like Spotify’s recommendations is a web experiment or a product experiment, but from my point of view, if it’s not to do with the interface itself, it’s product experimentation.

Therefore, and linking back to a previous Convert article, we could argue that Innovation (Exploration) is much more likely to happen with Product Experimentation, whereas Exploitation is largely in the realm of Web optimisation.

Properly executed, Product Experimentation can lead to truly transformative outcomes for a business, as its depth and breadth are much larger, but it can also be more costly and resource-intensive, and the risks are much higher.

Web Experimentation has a much lower barrier to entry, so it can be a great gateway for companies to evolve in their maturity and embrace a culture of experimentation as they see the results. Just don’t expect the cultural shift to happen overnight.

Download the infographic above and add it to your swipe file for a little inspiration when you’re feeling stuck!

Our thanks go out to David for taking part in this interview! To our lovely readers, we hope you found the insights useful and encourage you to apply them in your own optimization efforts.

Don’t forget to check back twice a month for more enlightening interviews! And if you haven’t already, check out our past interviews with CRO pros Gursimran Gujral, Haley Carpenter, Rishi Rawat, Sina Fak, Eden Bidani, Jakub Linowski, Shiva Manjunath, Deborah O’Malley, Andra Baragan, Rich Page, Ruben de Boer, Abi Hough, Alex Birkett, John Ostrowski, Ryan Levander, Ryan Thomas, Bhavik Patel, Siobhan Solberg, Tim Mehta, Rommil Santiago, Steph Le Prevost, Nils Koppelmann, Danielle Schwolow, Kevin Szpak, Marianne Stjernvall, Christoph Böcker, Max Bradley, Samuel Hess, Riccardo Vandra, Lukas Petrauskas, Gabriela Florea, Sean Clanchy, Ryan Webb, Tracy Laranjo, Lucia van den Brink, LeAnn Reyes, Lucrezia Platé, Daniel Jones, May Chin, Kyle Hearnshaw, Gerda Vogt-Thomas, Melanie Kyrklund, Sahil Patel, and our latest with Lucas Vos.

Written By

David Sanchez del Real

Edited By

Carmen Apostu