Testing Mind Map Series: How to Think Like a CRO Pro (Part 55)

Interview with Asmir Muminovic

Every couple of weeks, we get up close and personal with some of the brightest minds in the CRO and experimentation community.

We’re on a mission to discover what lies behind their success. Get real answers to your toughest questions. Share hidden gems and unique insights you won’t find in the books. Condense years of real-world experience into actionable tactics and strategies.

This week, we’re chatting with Asmir Muminovic, the Founder & CEO at WeConvert, a leading conversion rate optimization agency with offices in the US and EU.

Asmir, tell us about yourself. What inspired you to get into testing & optimization?

I transitioned to CRO back in 2016, after my career as an online poker player, which I had pursued for 8 years, came to a sudden end. A friend of mine, who was Head of Marketing at a big ecommerce brand, invited me to their office to see how they operated. He spoke of the shortage of good analytics people on the market and explained how they had all the numbers and tracking in the world but struggled to make use of them. He showed me their Google Analytics and some heatmaps and said, “Learn how to use these and I can get you a job here in the blink of an eye.” I spent the next few weeks reading through CXL’s blog, learning about analytics, and playing with Google’s merchandise store.

In the beginning, analytics truly reminded me of online poker. Most online poker players use statistical tracking software that collects data on their opponents and shows it directly on the table in a heads-up display (HUD), a sort of analytics for poker players, if you will. Based on the numbers shown for your opponents, you can quickly build a picture of how they play and adjust your own strategy. That was something I was really good at: creating stories and actionable ideas out of those numbers and adjusting on the fly. It helped me build stories from analytics and weave reports together into a “bigger picture”.

Several months later, the same friend hired me as a junior analyst/CRO strategist for his company. My first salary was $1,200/month. Not much, but I didn’t need money; I needed opportunity, so I gladly took it. I stayed there for 3 years and climbed to Head of CRO (my salary improved as well) before my career path took me elsewhere. By then, I was very much hooked on CRO.

How many years have you been testing for?

Since 2016.

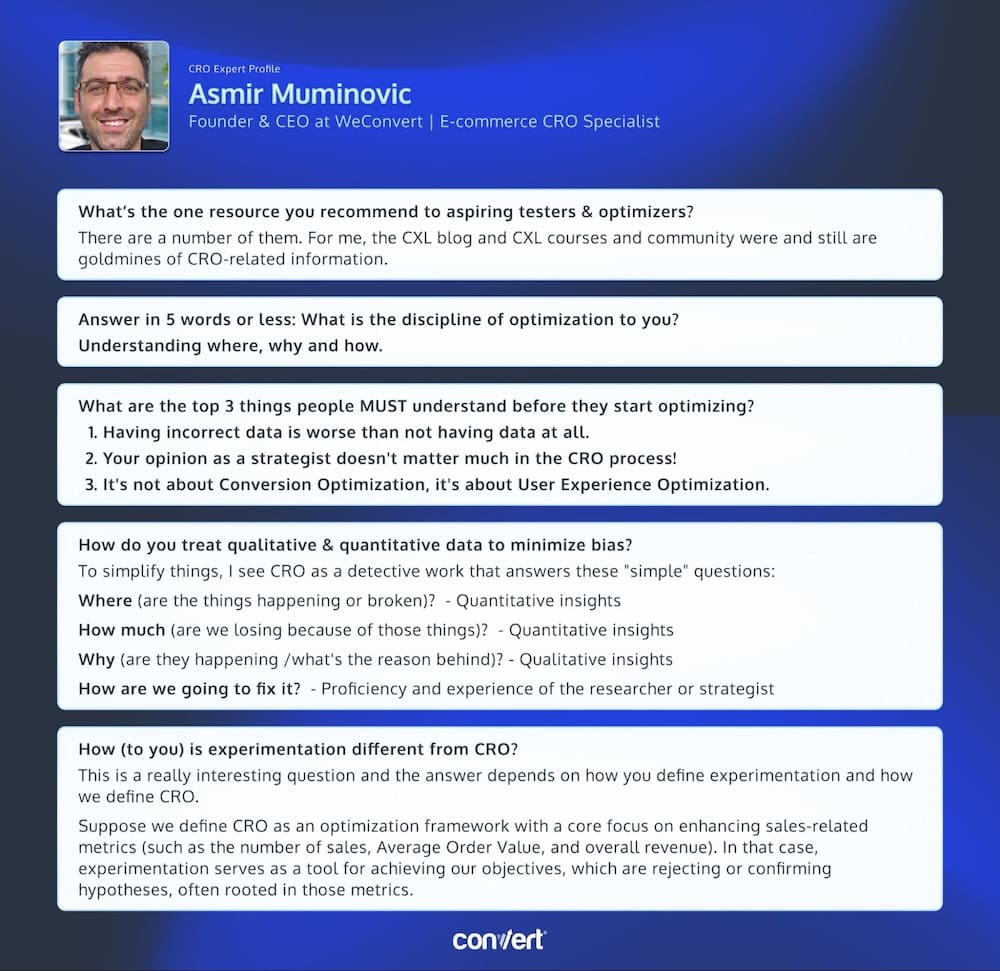

What’s the one resource you recommend to aspiring testers & optimizers?

There are a number of them. For me, the CXL blog and CXL courses and community were and still are goldmines of CRO-related information.

Answer in 5 words or less: What is the discipline of optimization to you?

Understanding where, why and how.

To elaborate beyond the five words: understanding where problems on the website occur (mostly via quantitative analysis, sometimes also qualitatively through user testing or session recordings), understanding why they happen (mostly through qualitative research methods), and knowing how to fix them. Some fixes are easy, like browser or responsiveness incompatibilities; others are more complex. That’s where we form hypotheses and run experiments.

What are the top 3 things people MUST understand before they start optimizing?

- Having incorrect data is worse than not having data at all. This applies to everything from GA configurations to A/B testing tools, and ultimately to analyzing testing results. When data is inaccurate, it leads to false conclusions, and false conclusions lead to wrong actions that result in a loss for the business.

- Your opinion as a strategist doesn’t matter much in the CRO process! Our job as CRO strategists is to be detectives and investigate: uncover all the small pieces of this big puzzle and assemble the big picture. But we are no heroes in this story. I see it over and over again, both in other agencies and in new people who come to work for us: many seem to consider heuristics and heatmaps the pinnacle of their investigative arsenal. While those research methods have their place in the CRO world, they rarely uncover needle-moving insights. Why? Because they depend on the researcher’s subjective interpretation, so the insights they produce will always be biased by personal preferences and previous experiences. If instead we focus on qualitative research methods such as voice of customer (VOC) and find friction points there, those insights are rooted in the customers’ own journeys and preferences, so addressing them is usually much more potent.

- It’s not about Conversion Optimization, it’s about User Experience Optimization.

How do you treat qualitative & quantitative data to minimize bias?

To simplify things, I see CRO as detective work that answers these “simple” questions:

- Where (are the things happening or broken)? – Quantitative insights

- How much (are we losing because of those things)? – Quantitative insights

- Why (are they happening / what’s the reason behind them)? – Qualitative insights

- How are we going to fix it? – Proficiency and experience of the researcher or strategist

As you can see, understanding and fixing problems usually require the use of both qualitative and quantitative data.

So, it’s very important to have confidence in the data’s accuracy, especially when it comes to the quantitative findings.

In qualitative research, there is one other trap we must always be aware of: sample sizes are usually small, so it is crucial to analyze a large enough sample and not generalize findings from a small one.

And despite all of that, there is always some subjectivity and bias involved in any research and in the insights behind our hypotheses. A lot of our research is also rooted in our past experiences. I try to avoid complicated academic terms, but one could say we are at risk of familiarity bias here. Still, as long as the test produces a statistically significant winner and confirms our hypothesis, I am fine with it.
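Whether a test has a “statistically significant winner” is usually decided with a significance test. As one common example (not necessarily the method Asmir’s team or any particular testing tool uses), here is a minimal sketch of a two-sided two-proportion z-test using only the Python standard library. The function name and the visitor and order counts are hypothetical, chosen purely for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: variants A and B share one conversion rate.

    Hypothetical helper for illustration; assumes samples are large enough for the
    normal approximation and that the pooled rate is strictly between 0 and 1.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis of "no difference"
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    # Standard error of the difference in proportions under H0
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 20,000 visitors per variant, 400 vs. 480 orders
z, p = two_proportion_z_test(conv_a=400, n_a=20_000, conv_b=480, n_b=20_000)
print(f"z = {z:.2f}, p = {p:.4f}, 1 - p = {1 - p:.1%}")
```

Many testing tools report a “confidence” figure that corresponds loosely to 1 − p from a test like this; the interview does not say how its own confidence figures were calculated.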

How (to you) is experimentation different from CRO?

This is a really interesting question, and the answer depends on how we define experimentation and how we define CRO.

Suppose we define CRO as an optimization framework focused on improving sales-related metrics (such as the number of sales, Average Order Value, and overall revenue). In that case, experimentation is a tool for achieving our objectives: confirming or rejecting hypotheses that are often rooted in those metrics.

Talk to us about some of the unique experiments you’ve run over the years.

One project that really stood out involved changing the messaging angle for an ecommerce store selling football training gear. The company was used to dealing with big, established clubs across Europe, but its ecommerce store was aimed at individual buyers. Although we initially targeted buyers aged 18+, our qualitative studies revealed something interesting: most of these buyers were actually parents buying equipment for their children, mostly sons. So we shifted the angle of our sales copy from speaking directly to the player to addressing the parents.

We tested this new approach on our top 5 best-selling products, and it was a hit: 4 out of 5 tests won, with the biggest winner showing an 11.4% sales increase at 97% statistical confidence. This was a clear signal that we were onto something big, so we dug deeper, conducting interviews and user testing with some of these buyers to refine our approach. This led to even more tailored changes that addressed the newly uncovered pain points and put our value proposition in the spotlight. The company had a lineup of 120 products, and armed with these insights, we revamped product descriptions across the board. This strategic shift lifted the store’s overall conversion rate from 2.04% to 3.21%.
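A conversion rate change like 2.04% to 3.21% can be expressed either in absolute percentage points or as a relative lift, and the two are easy to confuse. The small sketch below does the arithmetic on the figures quoted above; the helper name is hypothetical, and the result is a simple before/after comparison of overall rates, not the output of a controlled test.

```python
def relative_lift(baseline: float, variant: float) -> float:
    """Relative change of variant over baseline (0.10 means +10%). Hypothetical helper."""
    return (variant - baseline) / baseline


before, after = 0.0204, 0.0321  # overall conversion rates quoted in the interview
absolute_pp = (after - before) * 100  # change expressed in percentage points
print(f"absolute: +{absolute_pp:.2f} pp, relative: +{relative_lift(before, after):.1%}")
```

Here the absolute change is about 1.17 percentage points, which corresponds to a relative lift of roughly 57% over the original rate.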

Our thanks go out to Asmir for taking part in this interview! To our lovely readers, we hope you found the insights useful and encourage you to apply them in your own optimization efforts.

Don’t forget to check back twice a month for more enlightening interviews! And if you haven’t already, check out our past interviews with CRO pros Gursimran Gujral, Haley Carpenter, Rishi Rawat, Sina Fak, Eden Bidani, Jakub Linowski, Shiva Manjunath, Deborah O’Malley, Andra Baragan, Rich Page, Ruben de Boer, Abi Hough, Alex Birkett, John Ostrowski, Ryan Levander, Ryan Thomas, Bhavik Patel, Siobhan Solberg, Tim Mehta, Rommil Santiago, Steph Le Prevost, Nils Koppelmann, Danielle Schwolow, Kevin Szpak, Marianne Stjernvall, Christoph Böcker, Max Bradley, Samuel Hess, Riccardo Vandra, Lukas Petrauskas, Gabriela Florea, Sean Clanchy, Ryan Webb, Tracy Laranjo, Lucia van den Brink, LeAnn Reyes, Lucrezia Platé, Daniel Jones, May Chin, Kyle Hearnshaw, Gerda Vogt-Thomas, Melanie Kyrklund, Sahil Patel, Lucas Vos, David Sanchez del Real, Oliver Kenyon, David Stepien, Maria Luiza de Lange, Callum Dreniw, Shirley Lee, Rúben Marinheiro, Lorik Mullaademi, Sergio Simarro Villalba, and our latest with Georgiana Hunter-Cozens.

Written By

Asmir Muminovic

Edited By

Carmen Apostu