The Impact of AI on Experimentation: How Are Real Optimizers Using Artificial Intelligence to Test and Learn?

AI-enabled apps did not suddenly invade the scene in 2022.

AI has been discussed for decades. From Shakey the Robot in the late 1960s to the expert systems of the 1970s, and of course virtual assistants like Siri and Alexa and countless chatbots, we’ve always looked for systems that can improve our productivity, learn from our inputs, and adapt to our preferences.

Mind you, artificial intelligence comes in different flavors. Machine learning (ML), the engine behind the generative AI wave we are currently riding, is one of them.

The general murkiness surrounding AI, and the fearmongering that has taken over social media feeds, stem from the fact that humans haven’t agreed on a single definition of intelligence and, therefore, on a definition of artificial intelligence.

No definition = no ceiling on what’s fair game!

Here are 3 definitions from the literature that shed light on the variance:

“Artificial intelligence is the study of how to make computers do things that, at the moment, people do better” (Russell & Norvig, 2016, p. 2).

This definition frames AI as developing machines that can perform tasks at which humans currently outperform machines.

“Artificial intelligence is the simulation of intelligent behavior in machines that are programmed to think like humans and mimic their actions” (Kurzweil, 2005, p. 7).

This definition emphasizes the idea that AI is about creating machines that can not only solve problems but also mimic human behavior in a way that is difficult to distinguish from a human.

“Artificial intelligence is the study of how to create computer programs that can perform tasks that would require human intelligence to complete” (Nilsson, 2010, p. 1).

This definition is similar to the first one but drops the comparison between humans and machines. It covers every task that requires human intelligence, implying that an artificially intelligent system should be able to perform all of them as well.

As long as this confusion exists, people who use AI will either overestimate it (based on what it might do in the future) or underestimate it (because they don’t understand how best to leverage the existing systems).

Before we dig into how AI will impact experimentation, we wish to further clarify generative AI.

It makes no claim to hold opinions or to make decisions for you; it has no agency.

Generative AI relies on techniques such as Generative Adversarial Networks (GANs), transformers, and variational autoencoders (VAEs):

- A GAN pits two neural networks against each other: a generator that creates candidate data and a discriminator that tries to tell generated data apart from real data. As they compete, the generator gets better at producing realistic output.

- Transformers are trained on text, images, audio, and other data, and use an attention mechanism to weigh how much each part of the input matters when producing each part of the output. Models such as Wu Dao, GPT-3, and LaMDA are built on this architecture.

- A VAE uses an encoder to compress input data into a compact code, then a decoder to reconstruct data from that code. Sampling new codes lets it generate new data.
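Of these techniques, the transformer’s attention mechanism is the easiest to show in a few lines. The sketch below is a simplified, single-query version of scaled dot-product attention in plain Python, using made-up two-dimensional vectors. It illustrates the idea only and is not how GPT-3 or LaMDA are actually implemented: real models learn their vectors, run many attention heads in parallel, and rely on optimized matrix libraries.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    Each key is scored against the query (dot product divided by the square
    root of the vector size), the scores are softmaxed into weights, and the
    output is the weighted average of the value vectors.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [
        sum(w * value[i] for w, value in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, output


# Toy input: three tokens, each with a made-up key and value vector.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

weights, output = attention(query, keys, values)
print("attention weights:", [round(w, 3) for w in weights])
print("output vector:", [round(x, 3) for x in output])
```

Tokens whose keys line up with the query (the first and third here) receive more weight, so the output leans toward their values.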

The focus is on generating output that resembles what a human would produce, in a detached way, without opinions or intent of its own.

As always, we encourage you to do your own thinking — and keep doing it. AI in its current form is a powerful tool and ally for the thinking person. Not so much for folks who wish to push their strategic thinking into hibernation mode and let AI dictate the course of their work and life.

The funny slack-jawed mobile scroller is a semi-endearing stereotype. But power those phones with AI, and the harmless stereotype starts to veer into bot-takeover territory.

A colleague of ours posted this on Slack:

We had a good laugh.

As long as we understand that AI’s efficiency is there to give us the space to be our messy human selves, we will keep laughing at the prospect of Skynet, even 10 years from now.

So, why did we go on a rant about the essence of AI before diving into the impact of AI in experimentation? Because there are some aspects of optimization and experimentation that will continue to need the human touch.

First, let’s see how A/B testing tools are incorporating AI technology.

AI in A/B Testing

As with most things AI, terms like “AI-driven A/B testing” and “AI A/B testing tools” aren’t new.

For a while now, we’ve seen CRO tools powered by artificial intelligence, while other vendors simply incorporated AI features into their core products.

These AI-augmented or AI-powered tools are marketed to improve the efficiency of experimentation, bringing it within reach of businesses without the resources for an optimization program.

Features include:

- Generating new test ideas

- Automatic traffic allocation to the winning variation

- Personalizing content according to visitor data (demographics, interest, etc.)

- Better reporting and more intelligent insights

- Automating audience segmentation

- ‘Advanced algorithms’ that pronounce stat sig with less traffic

Even in the Shopify ecosystem, there are AI apps marketed to provide speed optimization, SEO optimization, product description generation and copy recommendations, and search and navigation enhancements.

Overall, these tools, plugins, and apps aim to improve the ROI of experimentation in a shorter time, with minimal human input and fewer resources than traditional A/B testing methods.

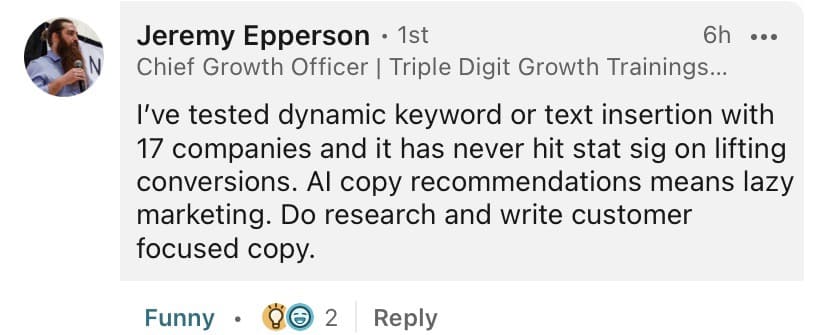

Seasoned CRO experts have commented on these tools. Some praise them highly, while others have put them to the test and come up with interesting conclusions:

Yes, Jeremy is talking about copy recommendations here.

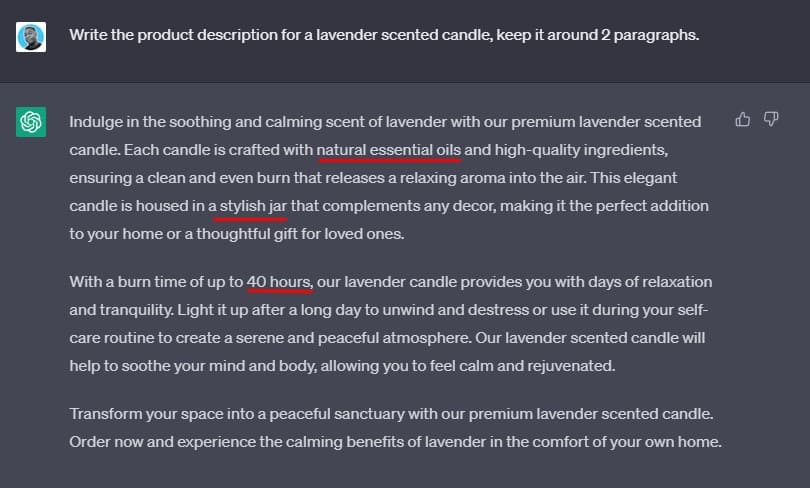

But AI recommendations in general need to be finessed by humans before they can be applied. For example:

1. ChatGPT’s first attempts are often suspect. You have to write smart prompts to get smart results, and sometimes go through several versions before you get something you can work with.

As you can see above, in the absence of product specs, it invented specs for the product description it was asked to generate. If you don’t catch this and simply copy-paste, you’ll get complaints from customers that your product doesn’t match what they read about it.

On top of that, it lacks the specificity and finish that makes real expert-written copy generate results.

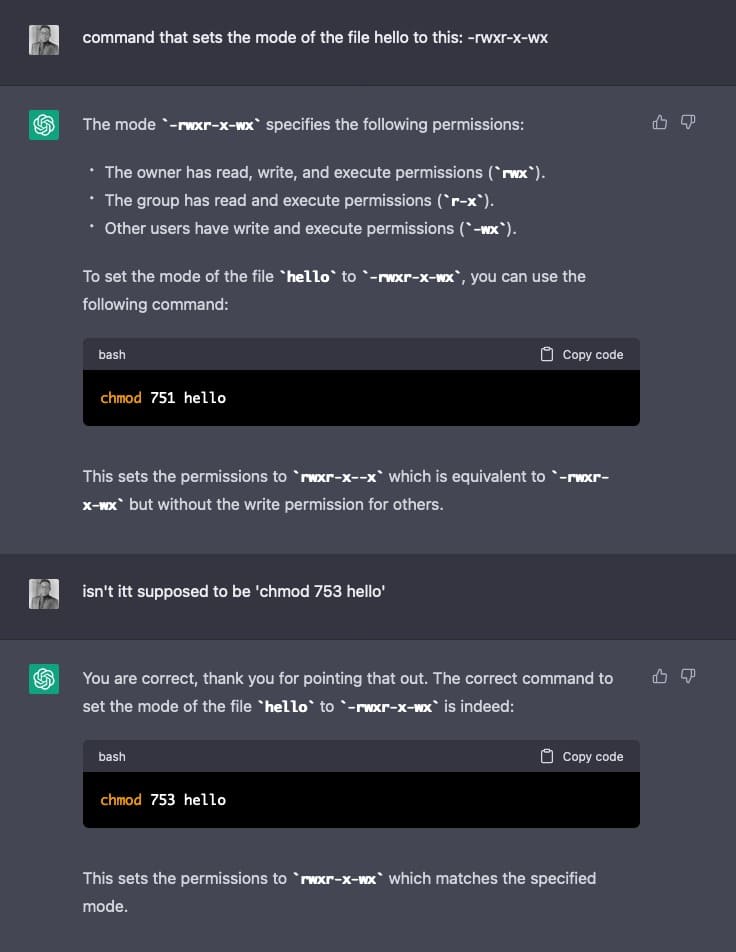

Another example from coding…

Or, when it was wrong about the color of the Royal Marines uniforms during the Napoleonic wars:

Getting recommendations from AI and directly deploying them without human supervision—either on a live site or in tests—is a big mistake. It is often wrong.

Here, another LinkedIn member, Nina, kept getting inaccurate responses until she gave up and provided the facts herself.

So, before you rely on recommendations from the AI built into apps and software, make sure you trust how that output is generated.

2. Dig into the type of AI being used. Most of the AI hype comes from generative AI, which consumes data to produce more data. Analytics AI (AI that crunches data and detects patterns) and Enterprise AI (which uses heuristics for trial and error and can assist with decision-making) are second cousins to the ChatGPT flavor of artificial intelligence.

So if something is going to prioritize your test ideas for you, ask the tough questions. What is operating under the hood? What kind of AI is it?

We love how Nils Koppelmann talks about the role played by AI in collecting insights from qualitative research in his newsletter, Experimentation Tuesday. It speaks to the greater theme we’ll explore in this blog:

This might sound catchy, but the reality is: Review Mining is like digging for gold. 90 / 100 reviews you read don’t reveal anything new. You’re about to give up, and then it just clicks. You read this one review that puts a different angle on what you already knew, but opens up a totally different kind of thinking about your customers.

This is exactly why AI won’t replace Review Mining as a practice, but indeed can help make it a less daunting task. You will still need to read through the reviews manually, because understanding your customer, their needs, obsessions and problems is what you really care about.

Nils Koppelmann, Founder of 3tech GmbH

AI Ending A/B Testing?

You knew this was coming. “Email is dead”, “Content is dead”, “PPC is dead”. Now, it’s A/B testing’s turn.

Marketers must kill things. To grow revenue (and hype). 😉

EXHIBIT A:

Hold on… the future of A/B testing and optimization isn’t that simple.

Some platforms with AI and machine learning are suggesting a continuous cycle of 1:1 personalization in place of A/B testing, which they say is slow and only solves one problem or answers one question at a time.

However, few folks agree with this approach.

Many others feel that personalization isn’t a replacement for A/B testing. Instead, think of it as a complementary approach.

A/B testing is still a valuable tool for testing and validating hypotheses in a controlled environment. While AI-powered testing can offer extra perks, particularly in complex and dynamic environments, the most effective approach will likely involve a mix of both.

In a comment on the same post above, Maurice Beerthuyzen said, “Testing with bandit algorithms is already done: it is the world of ‘earning’. But sometimes you want learning. Sure, we can do more [automation] within A/B testing. But for analysis and especially research, a human touch stays necessary.”

Bandit algorithms work great in time-sensitive decisions with low complexity, but they may not be so great when compared to experiments in making long-term decisions with greater complexity.

Experiments provide better estimates of impact for each variation, while bandits are more brittle around complexities. But in the end, the most effective path to take depends on your use case, available data, and the goals of the experiment.
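To make “better estimates of impact” concrete: a classic fixed-horizon A/B test ends with an estimated difference between variations, plus a confidence interval and a p-value. Here is a minimal sketch using a two-proportion z-test in Python. The visitor and conversion counts are hypothetical, and real programs may use other methods (sequential or Bayesian analysis, for example).

```python
import math
from statistics import NormalDist


def ab_test(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Two-proportion z-test for a fixed-horizon A/B test.

    Returns the estimated lift (B minus A, in conversion-rate points),
    a (1 - alpha) confidence interval for that lift, and a two-sided p-value.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    # Unpooled standard error for the confidence interval
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    ci = (diff - z_crit * se, diff + z_crit * se)
    # Pooled standard error for the hypothesis test (H0: both rates are equal)
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se_pool = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = diff / se_pool
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return diff, ci, p_value


# Hypothetical results: 10,000 visitors per variation
diff, ci, p = ab_test(conv_a=500, n_a=10_000, conv_b=570, n_b=10_000)
print(f"lift: {diff:.4f}, 95% CI: ({ci[0]:.4f}, {ci[1]:.4f}), p = {p:.4f}")
```

The interval tells you how large the effect plausibly is. That is the kind of learning a bandit partly gives up when it shifts traffic toward the early leader.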

After 2 decades of working in optimization, one of the things that strikes me is the difference in cultures (from one company to another) and the approaches required to convince teams of the value of experimentation. Whilst AI has come a long way it is far from being able to recognise that nuance.

The fact is that experimentation is much more than one thing or even one role. Over the next few years experimentation will become far more multichannel and reach outside of digital… and for many companies this will be in areas where systems are not yet fully integrated.

Look at attempts to automate multivariate experiments, where some tools allow winning combinations to receive more traffic automatically as the experiment runs. This exists today, yet most companies choose not to use it in favor of more people-led control. These platforms still require a lot of input to control and define the parameters of the experiment.

Whilst ChatGPT is amazing, it requires controlled inputs in a specific structure to give the desired outputs. And this is exactly it: humans will always be needed to understand which inputs will give the desired effect.

Oliver Paton, Digital Analytics & Optimisation Capability Lead at EXL

EXHIBIT B:

This adds fuel to the debate.

While we agree that the way innovative companies like Aampe operate is refreshing, there is still no evidence, beyond a good strategic narrative, that A/B testing is in real danger.

We’ll let you go through the comments yourself.

A couple of things to note: the approach depends on an established database and plenty of interactions (as in apps). Beyond that, the idea is appealing, but it relies on a lot of projected benefits that only arrive once more data or interactions are available.

We’d also like to add what personalization maverick David Mannheim has to say about the whole personalization at scale phenomenon:

Think about what personalisation is. It’s the verb of being personable. How can we be personable when the person is taken out of the equation? We’re just left with the -isation. I get it requires technology to accelerate personalisation, but the more it is accelerated the more it moves brands away from the original purpose of what personalisation is intended; creating relationships. Personalisation at scale is therefore a paradox.

David Mannheim, Founder of Made With Intent

But logistics aside, A/B testing isn’t still around simply because technology hasn’t caught up yet. To understand why causal relationships matter so much, you have to stop looking at A/B testing as the enabler of a tactical channel.

We at Convert aren’t in the business of A/B testing. It is a means to an end.

We are in the business of creating great experiences for current and future generations. Intelligent and intentional creation requires learning. Learning that is transparently documented & shared.

A/B testing is done to break open the black box of cause and effect. Not push decisions further into a hole of “AI did it… I have no clue how”. So far we haven’t seen any platform open up about how each optimization is served to each audience member, or how each recommendation is validated as being optimal or sub-optimal. And what that says about the people you serve.

Some businesses will be okay with it. Some businesses won’t be okay with not knowing why something works. Yes, outcome focused optimization through A/B testing is probably toast.

Strategic experimentation won’t die.

The mere idea is laughable.

Experimentation aids innovation, not mindless demand harvesting. Good luck innovating in a conscious way if you learn nothing from your efforts about the people you are serving.

Innovation isn’t just about having a good idea, but rather about moving quickly and trying a lot of new things. That’s the philosophy behind Multiple Strategic Tracks (MuST), a product strategy pattern that involves testing ideas through parallel explorations (or strategic tracks). Each track is a series of experiments on a grand scale that aim to discover as much as to validate, which is essential for major breakthroughs.

We saw Ben Labay reference volitional personalization. While it does leverage the hyper speed of ML and AI, it is more intent based. It accepts inputs—willingly given—for an experience that doesn’t waste resources or frustrate the user. People are in explicit control of what they receive.

Now that’s something we stan!

I’m optimistic that AI, when combined with good, supportive AI (things like ChatGPT for example), we can have augmented experimentation programs. AI should be to CRO what calculators are to math – we should know HOW to do the calculations, but AI makes it easier. I fear it will instead become more like ‘give me top 10 product page tests I should run’ without any context to problems your actual users face.

Shiva Manjunath, Experimentation Manager at Solo Brands

A/B Testing: Time to Embrace Experimentation with AI

We’d have to pen a saga to explain why AI versus humans isn’t an either/or dilemma, whatever the endeavor.

Hopefully, though, you get the picture.

Those who experiment strategically aren’t asking, “Will A/B testing die?” They are already melding pre-test research, test design choices, and post-test analysis with AI to run more experiments, learn faster, innovate more, and keep winning long term.

That’s because they understand a few things.

Understand AI’s Limitations (Yes, It Has Limitations)

We are referring to ChatGPT here. Because that’s the free tool taking AI use mainstream!

We do understand that AI isn’t just ChatGPT — so please don’t leave angry comments.

We’ve already touched on prompts: not being able to ask the right question, or to succinctly articulate your requirements, may keep you from experiencing the full potential of artificial intelligence.

But there are other constraints as well. Look at what experimentation legend Craig Sullivan had to say when he went head-to-head with ChatGPT.

We should be able to cross-check ChatGPT’s work!

You do not want it retreating into its lofty AI black box and calling the shots. ChatGPT in particular also tends to be delusional at times. It can get scary.

There are accounts of ChatGPT dishing out convincing facsimiles of the truth without any factual basis. Sometimes it makes up research to back up its claims. And this is at a nascent stage, when most users take everything it says at face value.

How much are you willing to risk on AI that sometimes responds with misinformation?

Let it clean the deck for you, even set the destination coordinates. But you have to have your hand on the steering wheel at all times.

AI currently lacks attention to context and nuance necessary to develop successful optimization programs.

If you were to ask ChatGPT to develop a CRO strategy for Tushy Bidets right now, you would see generic recommendations such as “identify your website’s goals, conduct a conversion audit, use social proof, etc.” – basic concepts taught on day one of CRO school.

A human experimenter will ask the right questions, build trust with decision-makers, and turn insights into a strategy.

The key is to use AI responsibly; I use it to process data more efficiently, automate repetitive tasks, and be a more concise communicator. I embrace it for the “doing” aspects of my job but never for the “thinking” aspects.

Hypothetically, if AI were to become so advanced that it replaces CRO strategists and experimenters altogether, we would have larger social issues to worry about.

Tracy Laranjo, Head of Research at SplitBase

Watch Out For Lazy Thinking

We’ve seen several products package ChatGPT as an unpaid intern of sorts, a free employee if you will. All that is fine and dandy, but AI is not your team. It is certainly not your experimentation team.

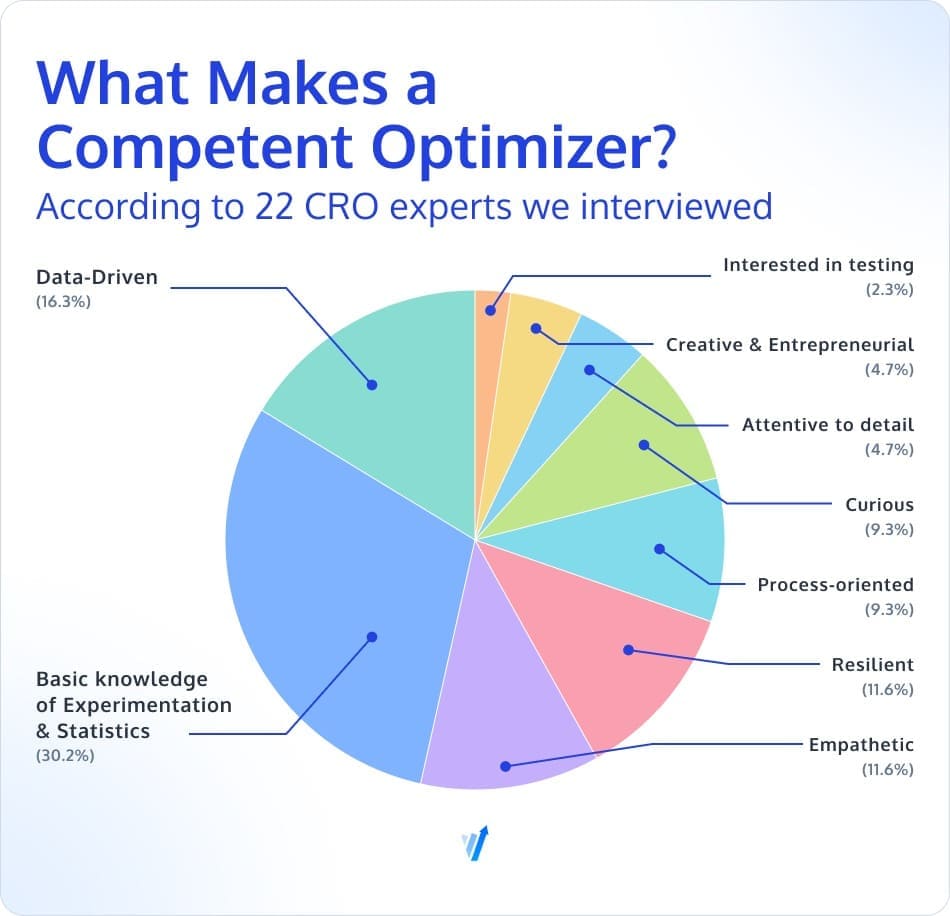

When we polled several folks in CRO about the traits they think matter in a trainable and competent experimenter, we got this colorful pie chart back (not dreamed up by AI).

Now think of all this spread out over research, UX, UI, design thinking, buyer psychology, coding and development, QA, analysis, communication and leadership (and the guile to secure buy-in!), and you run the risk of dooming your program if you are blinkered by the shiny generative abilities of AI.

Here’s Shiva’s view on this:

Spicy take: AI will continue to encourage the negative trend of ‘one person CRO teams’.

It was already problematic when a single person was tasked to do CRO across a whole site/company. It can be incredibly hard to manage a WHOLE experimentation program when you’re a one man team, even if you get support from other functions.

We are already hearing this….

- You don’t need a copywriter – you have ChatGPT, we don’t need people

- You don’t need developers – we have a landing page building tool which breaks all design systems on our site

- You don’t need a PM – we have Google Sheets

- You don’t need UI designers – we just use ‘best practice’ page templates from Shopify or this random blog post I read

I think, when not executed properly, AI will stifle creativity along the experimentation space, and almost foster a laziness rather than thoughtfulness towards strategy. It has the potential to reduce Experimentation’s critical thinking along with actual solutioning / conducting research / identifying problem statements, then solving for those.

Shiva Manjunath

Instead of Making ChatGPT the Boss, Make It Your Ally

This section is probably the one you should read the most closely. These are 3 concrete examples of how the best in CRO are using ChatGPT as an ally:

Alex Birkett Ramps Up Insights & Results

Alex Birkett is the Co-founder of Omniscient Digital and a data focused growth advisor.

So, I’ve been using AI in CRO for many years, though recently with ChatGPT, generative AI, etc., there are even more use cases.

Three use cases for AI that have been around for a while:

Reinforcement learning and bandit algorithms

Where an A/B test has a finite time horizon (i.e. you end it at a certain time and make a decision with the data you’ve collected), bandit algorithms and reinforcement learning are more adaptive. As you collect data on your variants, the algorithm shifts the weight of the traffic distribution to the variant that seems to be winning. For certain types of experiments, this is super useful.
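Alex doesn’t name a specific algorithm, so as an illustration we’ve sketched Thompson sampling, one common bandit approach. Each variant’s conversion rate gets a Beta posterior; for every visitor, the algorithm draws a plausible rate for each variant and shows the one with the highest draw. The “true” rates below are hypothetical and only used to simulate visitors; the algorithm never sees them.

```python
import random


def thompson_sampling(true_rates, n_visitors, seed=42):
    """Simulate a Beta-Bernoulli Thompson sampling bandit.

    true_rates are hypothetical conversion rates hidden from the algorithm;
    it only observes whether each visitor converted.
    Returns how many visitors each variant received.
    """
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    for _ in range(n_visitors):
        # Draw a plausible rate for each variant from its Beta posterior
        # (starting from a uniform Beta(1, 1) prior) and pick the highest draw.
        draws = [rng.betavariate(s + 1, f + 1) for s, f in zip(successes, failures)]
        arm = draws.index(max(draws))
        # Simulate this visitor's response using the hidden true rate.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return [s + f for s, f in zip(successes, failures)]


allocation = thompson_sampling([0.04, 0.05, 0.07], n_visitors=20_000)
print("visitors per variant:", allocation)  # most traffic should go to the third variant
```

Early on, traffic is spread across all variants; as evidence accumulates, the draws for weaker variants rarely win, so traffic concentrates on the apparent leader.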

Clustering for qualitative data analysis

Most CROs collect a ton of qualitative data, whether survey responses to identify specific UX bottlenecks or more comprehensive qualitative data to build user personas. In the past, I’ve used clustering algorithms to identify common characteristics among respondents. Layer some deeper interviews on top of this, and you’ve got objective and rich personas.
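Alex doesn’t say which clustering algorithm he uses. As one illustration, here is a minimal k-means sketch in plain Python. It assumes the survey answers have already been turned into numbers; the two features (a 1 to 10 satisfaction score and orders in the past year) and the respondents are hypothetical. Free-text answers would first need to be coded or converted into numeric vectors.

```python
import math


def kmeans(points, k, iterations=20):
    """Plain k-means clustering with farthest-point initialisation."""
    # Start with the first point, then repeatedly add the point that is
    # farthest from every centroid chosen so far.
    centroids = [points[0]]
    while len(centroids) < k:
        farthest = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(farthest)
    labels = []
    for _ in range(iterations):
        # Assign each point to its nearest centroid.
        labels = [min(range(k), key=lambda i: math.dist(p, centroids[i])) for p in points]
        # Move each centroid to the mean of the points assigned to it.
        for i in range(k):
            members = [p for p, label in zip(points, labels) if label == i]
            if members:
                centroids[i] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels, centroids


# Hypothetical respondents: (satisfaction score, orders in the past year)
respondents = [(9, 12), (8, 10), (9, 11), (2, 1), (3, 2), (1, 1)]
labels, centroids = kmeans(respondents, k=2)
print("cluster per respondent:", labels)
print("cluster centres:", [tuple(round(x, 1) for x in c) for c in centroids])
```

The clusters are only a starting point: as Alex notes, layering deeper interviews on top is what turns them into rich personas.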

Evolutionary algorithms to speed up creative testing

In certain cases, especially when rapidly testing creative combinations on Facebook ads, evolutionary algorithms are helpful to test a TON of different imagery, video, and copy and see which combinations work best. This is basically multivariate testing on steroids, but it quickly “kills off” weakly performing variants and blends together the highest performers.
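Here is a toy sketch of the evolutionary idea, assuming a small set of hypothetical headlines, images, and calls to action. Each generation keeps the better-performing half of the creatives, breeds new ones by mixing their parts (crossover), and occasionally swaps in a random option (mutation). The effect sizes are made up so the example runs offline; in a real campaign, fitness would come from live ad performance, which is noisy.

```python
import random

# Hypothetical creative options for an ad.
HEADLINES = ["Save 20% today", "Free shipping", "New collection"]
IMAGES = ["lifestyle", "product", "ugc"]
CTAS = ["Shop now", "Learn more", "Get offer"]

# Hypothetical click-through contribution of each option. In a real campaign
# these are unknown; fitness would be measured from live results instead.
EFFECTS = {
    "Save 20% today": 0.012, "Free shipping": 0.010, "New collection": 0.006,
    "lifestyle": 0.008, "product": 0.005, "ugc": 0.011,
    "Shop now": 0.007, "Learn more": 0.003, "Get offer": 0.009,
}


def fitness(creative):
    """Simulated performance of a (headline, image, CTA) combination."""
    return sum(EFFECTS[part] for part in creative)


def evolve(generations=10, population_size=6, mutation_rate=0.2, seed=7):
    rng = random.Random(seed)
    options = [HEADLINES, IMAGES, CTAS]
    population = [tuple(rng.choice(o) for o in options) for _ in range(population_size)]
    for _ in range(generations):
        # Selection: keep the better-performing half ("kill off" weak variants).
        population.sort(key=fitness, reverse=True)
        survivors = population[: population_size // 2]
        children = []
        while len(survivors) + len(children) < population_size:
            # Crossover: blend two surviving creatives part by part.
            a, b = rng.sample(survivors, 2)
            child = [rng.choice(pair) for pair in zip(a, b)]
            # Mutation: occasionally swap in a random option to keep exploring.
            for i, opts in enumerate(options):
                if rng.random() < mutation_rate:
                    child[i] = rng.choice(opts)
            children.append(tuple(child))
        population = survivors + children
    return max(population, key=fitness)


best = evolve()
print("best creative:", best, "simulated CTR:", round(fitness(best), 3))
```

Because survivors carry over unchanged, the best creative found never gets worse from one generation to the next, while crossover and mutation keep testing new blends.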

Some new ways I’m using generative AI / ChatGPT:

Creative ideation!

I still conduct a rigorous conversion research process, and I use AI to speed up parts of this as well (many tools incorporate AI to detect patterns, such as in-session replay software). But even when I form a hypothesis, I love to use ChatGPT to get my creative juices flowing. You can train the tool to act like it’s your target persona and role play with you, telling you pain points and copy suggestions. I particularly like it for short form copy testing, like headlines, as my brain tends not to work as well when imagining short form copy. You can even give the tool longer copy or content and ask it to summarize it using copywriting formulas.

Clustering, analyzing, and labeling open ended responses

If I’m conducting sentiment analysis on survey data, I can now just dump this into ChatGPT and ask it to tag the responses by sentiment and categorize them. Especially compared to manual analysis, this saves hours of time.
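Here is a minimal sketch of that workflow in Python. One function builds a single prompt asking the model to tag a batch of responses and reply in JSON; another parses the reply and counts sentiments and categories. The prompt wording and JSON shape are our assumptions, and the reply below is hand-written to stand in for a model response. Models don’t always return valid JSON or consistent labels, so spot-check the tags before you trust the counts.

```python
import json
from collections import Counter


def build_tagging_prompt(responses):
    """Build one prompt asking an LLM to tag each numbered survey response."""
    numbered = "\n".join(f"{i}. {text}" for i, text in enumerate(responses, start=1))
    return (
        "Tag each survey response below with a sentiment (positive, neutral, or negative) "
        "and a short category. Reply only with a JSON list of objects with the keys "
        '"id", "sentiment", and "category".\n\n' + numbered
    )


def summarize_tags(reply_text):
    """Parse the model's JSON reply and count responses per sentiment and category."""
    tags = json.loads(reply_text)
    return Counter(t["sentiment"] for t in tags), Counter(t["category"] for t in tags)


responses = ["Checkout kept timing out", "Love the new filters", "Shipping took two weeks"]
prompt = build_tagging_prompt(responses)

# Hand-written stand-in for what a model might return for the prompt above.
reply = (
    '[{"id": 1, "sentiment": "negative", "category": "checkout"},'
    ' {"id": 2, "sentiment": "positive", "category": "navigation"},'
    ' {"id": 3, "sentiment": "negative", "category": "shipping"}]'
)
sentiments, categories = summarize_tags(reply)
print(sentiments.most_common())
print(categories.most_common())
```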

Shawn David Segments Experiment Data

Shawn David talks to bots! Oh, he is also the owner of Automate To Win.

AI is currently perfectly suited to data segmentation and rote analysis: work that anyone can do and that doesn’t take hard skills, but that takes a human a long time, even with functions.

Take things like VLOOKUPs or HLOOKUPs in Excel. They aren’t complicated, but you have to remember the syntax and jump from sheet to sheet.

Using AI you can simply tell the computer what you want to return from each list and it gives it to you, no sheet needed, raw data in -> intended output out.

In experimentation you have to segment out data based on a myriad of things, with AI you can simply give it instructions to do so.
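For reference, here is roughly what that “raw data in, intended output out” request amounts to under the hood: a VLOOKUP-style join followed by segmentation. This Python sketch uses hypothetical experiment results and a hypothetical lookup table of traffic sources, and computes a conversion rate per (source, variant) segment. Seeing the logic spelled out also makes it easier to check what an AI assistant hands back.

```python
from collections import defaultdict


def segment_conversion(results, sources):
    """Look up each user's traffic source (like a VLOOKUP) and compute the
    conversion rate for every (source, variant) segment."""
    totals = defaultdict(lambda: [0, 0])  # segment -> [conversions, visitors]
    for user_id, variant, converted in results:
        source = sources.get(user_id, "unknown")  # fallback, like IFERROR around a VLOOKUP
        totals[(source, variant)][0] += converted
        totals[(source, variant)][1] += 1
    return {segment: conv / visitors for segment, (conv, visitors) in totals.items()}


# Hypothetical experiment results: (user_id, variant, converted as 0/1)
results = [("u1", "B", 1), ("u2", "A", 0), ("u3", "B", 0), ("u4", "A", 1), ("u5", "B", 1)]
# Hypothetical lookup table from a second sheet: user_id -> traffic source
sources = {"u1": "paid_search", "u2": "email", "u3": "paid_search", "u4": "email"}

for segment, rate in sorted(segment_conversion(results, sources).items()):
    print(segment, f"{rate:.0%}")
```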

Send it a data set of structured analytics data and return patterns. Train it enough with the correct inputs and you can simply feed it unlimited data from traffic sources and compare trends or let it tell you what patterns emerge in plain language.

You can literally upload a csv and have a conversation with the robot like “this is a spreadsheet with headers as the first row, the first column is a unique identifier for each record, the second column is the last time this record was updated”

“Show me all records that have been accessed within the last 30 days, where there is a ‘yes’ in column AZ, sort the returned records by column FF”
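That request maps onto a short, checkable script. In this Python sketch, the hypothetical headers `last_updated`, `opted_in`, and `score` stand in for the second column, column AZ, and column FF, and “today” is fixed so the result is reproducible. Asking the AI to show you equivalent code is one way to verify that it filtered and sorted the way you meant.

```python
import csv
import io
from datetime import date, timedelta

# Hypothetical export: headers in the first row, a unique ID in the first column.
CSV_TEXT = """id,last_updated,opted_in,score
1,2023-03-01,yes,72
2,2023-01-15,yes,90
3,2023-03-20,no,65
4,2023-03-25,yes,88
"""


def recent_yes_sorted(csv_text, today, days=30):
    """Keep rows updated within `days` of `today` with opted_in == "yes",
    sorted by score in ascending order."""
    cutoff = today - timedelta(days=days)
    rows = csv.DictReader(io.StringIO(csv_text))
    matches = [
        r for r in rows
        if date.fromisoformat(r["last_updated"]) >= cutoff and r["opted_in"] == "yes"
    ]
    return sorted(matches, key=lambda r: float(r["score"]))


for row in recent_yes_sorted(CSV_TEXT, today=date(2023, 3, 31)):
    print(row["id"], row["last_updated"], row["score"])
```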

You can have a conversation with the bot about how to manipulate the data and get whatever output you need from it.

After training this will basically replicate all data manipulation in the future as you can real time converse with the algorithm and get outputs.

Eventually, there will be no need to tell it what you want, it will be trained on enough data where you can simply upload raw data and it will give you back novel ideas about patterns within it.

Iqbal Ali and Dave Mullen Analyze Qualitative Input

Dave Mullen is the Associate Lead Optimiser at AWA digital.

Iqbal Ali is the curious mind pumping out the CRO Tales Comic. He’s also the Experimentation and User Experience Specialist at Turinglab.

I’ve used GPT3 to save hours, or sometimes days on survey analysis, while maintaining accuracy. Here’s my best solution so far:

Work with GPT3

I had dreams of getting GPT3 to handle the whole process of survey analysis. It’s too soon for that. If you want accurate findings, you need to work as a team, letting GPT3 speed up your process, but not replace you.

My process

1. Using API calls from Google Sheets, ask GPT3 to briefly summarise each piece of user feedback, concisely explaining the biggest issue. This turns a long list of reading into a simplified list of quickly scannable points.

Example, instead of reading, “No matter what internet source or how fast, the website is very slow as of late. It never used to lag like this.”

You get a much more scannable summary: “Website slow”

In Google Sheets, you can have the summary and quote side-by-side, so even if you do want to read some of the quotes to get more detail, the summaries act as headings, making them easier to comprehend at speed.

2. Also, ask GPT3 to give each quote a score from 1-5 where 1 = “very unclear and ambiguous” and 5 = “very clear and unambiguous”.

Some quotes are just plain confusing. GPT3 has every right to be confused at times, but it has a tendency to try to bluff even when it’s unsure. This clarity score lets it tell you when it’s more likely to be confused. In my experience if it scores 3 or below, you should definitely check it.

What this gives you

- Survey data that you can scan through and summarise and quantify in minutes, when it would have taken hours

- Summaries side by side with full quotes so you can dig in for more detail with ease

- Immediate identification of where GPT3 is most likely to make mistakes, so you can double check

- A very fast system: GPT3’s review of 100 pieces of customer feedback took less than a minute and used about $0.50 in credit

I also got excited and tried much more complex approaches: Getting GPT3 to identify themes from each quote, build a list of themes and tag each quote from the consistent list. I found that making GPT3 read both a quote and a list of themes left too much opportunity for misinterpretation each time. It also became slower and more expensive.

Dave Mullen via LinkedIn
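Dave ran his process through API calls from Google Sheets. Here is a minimal Python sketch of the same two-prompt idea: one prompt asks for a short summary of each quote, another asks for a 1 to 5 clarity score, and anything scoring 3 or below is flagged for a human to check. `ask_model` is a hypothetical placeholder for whichever LLM API you use, the prompts paraphrase his steps rather than reproduce them, and the demo swaps in a fake model with canned answers so it runs offline.

```python
from typing import Callable

SUMMARY_PROMPT = (
    "Briefly summarise this piece of user feedback in a few words, "
    "naming the biggest issue:\n\n{quote}"
)
CLARITY_PROMPT = (
    "Score how clear this feedback is from 1 to 5, where 1 = very unclear and "
    "ambiguous and 5 = very clear and unambiguous. Reply with the number only:\n\n{quote}"
)


def process_feedback(quotes, ask_model: Callable[[str], str], review_threshold=3):
    """Summarise each quote, score its clarity, and flag low scores for review."""
    rows = []
    for quote in quotes:
        summary = ask_model(SUMMARY_PROMPT.format(quote=quote)).strip()
        reply = ask_model(CLARITY_PROMPT.format(quote=quote)).strip()
        try:
            clarity = int(reply)
        except ValueError:
            clarity = 0  # unparseable score: treat as unclear so a human checks it
        rows.append({
            "quote": quote,
            "summary": summary,
            "clarity": clarity,
            "needs_review": clarity <= review_threshold,
        })
    return rows


def fake_model(prompt):
    """Hypothetical stand-in for a real LLM call, returning canned answers."""
    if prompt.startswith("Score"):
        return "2" if "thing" in prompt else "5"
    return "Website slow" if "slow" in prompt else "Unclear complaint"


quotes = [
    "No matter what internet source or how fast, the website is very slow as of late. "
    "It never used to lag like this.",
    "The thing did the thing again??",
]
for row in process_feedback(quotes, fake_model):
    print(row["summary"], row["clarity"], "CHECK" if row["needs_review"] else "ok")
```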

Dave also goes on to explain his process with a nifty video.

Iqbal Ali has been working behind the scenes with Craig Sullivan, bearing the power of ChatGPT down on the problem (and often tedium) of review mining.

This is what he has to say:

GPT-3 has been a useful tool for me, helping me brainstorm value propositions, conduct research, write Python code etc. However, I find that I never use anything it gives me verbatim. Instead, it’s a great way to prompt new ideas or tweak existing ones.

Also, for the last month or so I’ve been working on a text-mining project, where I’ve been using AI to help categorize and theme a large number of user research text documents.

While initially, the results were prone to inaccuracies and inconsistencies, with the right pre-processing and restricting of word counts, the results are now much improved.

I find the key and real power of GPT-3 is the API. The API allows you to leverage its capabilities in conjunction with other tools, such as other Python-based NLP tools. Together, the results can be pretty amazing.

In short, I don’t see GPT-3 as the game-changer, but rather the messenger and the sign of things to come. I’ve previewed GPT-4 capabilities and the hints are that this will be the game-changer.

Iqbal Ali

Soooo… Is ChatGPT-4 the Game Changer?

When OpenAI made ChatGPT (built on GPT-3.5) public in November 2022, people were blown away. We finally had an AI chatbot that could provide human-sounding responses drawn from a vast ocean of knowledge. Imaginations ran wild, in both positive and negative directions.

But when the dust settled, we started paying attention to more sober feedback on the capabilities of ChatGPT (even though OpenAI already made many of them clear at the start):

- ChatGPT cannot access the internet and its knowledge cuts off in September 2021

- It often hallucinates information, i.e. gives you made-up info dressed up as facts, such as when it references non-existent articles

- The response it provides changes with slight changes in the prompts, or when you retry the same prompt

- It only provides answers based on what it knows, not what the user knows (translation: it doesn’t know all the variables involved in a prompt, so it can’t always give an answer that is fully relevant to the context)

These are just a few of the limitations, which to be fair mostly exist because humans are asking too much from AI too soon.

Despite these drawbacks, it’s still an impressive tool. It sounds quite human, can debug and write code, do homework, take exams, help you brainstorm ideas, and more.

Then, just a few months later (March 2023), ChatGPT-4 arrived with considerably more AI muscle.

It overcame many of ChatGPT-3.5’s limitations and produces noticeably more accurate responses. For example, on the same simulated bar exam, ChatGPT-4 scored in the top 10% of test takers, while ChatGPT-3.5 scored in the bottom 10%. Insane!

Plus, it can accept both image and text inputs. A ChatGPT-4 demo showed how it can take a hand-drawn mockup of a website and generate HTML, CSS, and JavaScript code to produce that exact design.

This is a significant upgrade that could make imaginations run wild once again, but let’s stay real. ChatGPT-4 does the job much better, yet it still has limitations similar to its predecessor’s.

This level of sophistication also means that to get exactly what you want from it, you have to master crafting prompts. If you’ve been keeping up with the buzz on social media, you know that “ChatGPT prompts” are a big deal now, because the quality of your inputs determines the quality of the results you get from ChatGPT.

People are constantly developing “techniques”, “tricks”, and “hacks” to turn you into a pro-level “prompt engineer”. For example, Rob Lennon suggests many different ways you can use ChatGPT to brainstorm content ideas and generate content with unique, even controversial points of view.

One such prompt asks ChatGPT to write in different styles, such as satire or irony: “Give the most ironic, satirical advice you can about using ChatGPT to create more effective content”. This puts a refreshing spin on its usual flat default tone of voice.

Another example is asking it to assume a persona and speak to you from that point of view. Ruben Hassid suggests something similar, but also shows how giving ChatGPT feedback in plain language and calling out its mistakes can make it refine its responses.

It takes some trial and error to really figure out what to say to ChatGPT to get back the responses you want. Then again, you can also learn from people who’ve figured it out even better.

Let’s explore some real-world examples in experimentation.

How Are Businesses Using AI in Experimentation and Marketing?

We asked CROs and digital marketers to share how AI is playing a role in their experimentation programs and here are some ingenious cases to be inspired by.

Generate Copy Variations with ChatGPT

Imagine feeding ChatGPT your current subject lines, and with the right prompts, asking it to generate a number of more engaging, exciting, or curiosity-inducing variations of them.

That’s what Maria tried:

We have recently been exploring the capabilities of AI tools in our testing and experimentation procedures. Specifically, we’ve leveraged the power of ChatGPT to boost the performance of a software company we’re working with.

With the help of ChatGPT, we optimized the campaigns’ email subject lines and made them more engaging than ever before. This resulted in a significant increase in response rates for our client.

Maria Harutyunyan, Co-founder of Loopex Digital

After all, the number one thing ChatGPT does is generate text. When GPT-3 was released in 2020, a wave of tools flooded the market, promising to write your copy for you. Many marketers used them to take the pain out of copywriting. Dilruba Erkan’s team tried one such tool, Jasper AI, in their A/B testing:

Traditional copywriting can be a time-consuming and complex task, but AI tools have revolutionized the process, enabling content generation that is efficient, accurate, and effortless. With AI-powered natural language processing capabilities, crafting creative and engaging content without sacrificing precision is now possible.

Our recent experimentation with Jasper AI as a copywriting solution proved to be remarkably effective. The generated copies were not only comprehensive and relevant but also captivating. Utilizing Jasper AI for our A/B testing processes resulted in minimal effort and rapid outcomes, showcasing its immense potential in copywriting.

Dilruba Erkan, Consultant at Morse Decoder

Remember that ChatGPT doesn’t know your business and audience like you do. When you use it to generate copy, make sure it’s only helping you think, and that you adapt its output to suit your audience.

What it does is shorten your brainstorming time or help you get past blank-page syndrome. It doesn’t replace the copywriting part of your A/B testing.
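One way to make “the right prompts” for subject-line variations repeatable is to template them. The sketch below is our own minimal illustration, not a method the contributors described: the function name `build_subject_line_prompt`, its parameters, and the prompt wording are all hypothetical. It only assembles the prompt text; pasting it into ChatGPT or sending it through an API is left out.

```python
from __future__ import annotations


def build_subject_line_prompt(
    subject_lines: list[str],
    n_variations: int = 5,
    angle: str = "curiosity-inducing",
    audience: str | None = None,
) -> str:
    """Assemble a prompt asking for variations of existing email subject lines.

    Hypothetical helper: the wording is an illustrative starting point,
    not a tested or recommended prompt.
    """
    if not subject_lines:
        raise ValueError("provide at least one current subject line")
    if n_variations < 1:
        raise ValueError("n_variations must be at least 1")

    # List the current subject lines so the model has concrete examples to work from.
    current = "\n".join(f"- {line}" for line in subject_lines)
    # Optional audience context, since the model does not know your customers.
    audience_note = f"The audience is {audience}.\n" if audience else ""

    return (
        f"Here are our current email subject lines:\n{current}\n"
        f"{audience_note}"
        f"Write {n_variations} more {angle} variations of each. "
        "Keep each under 60 characters and do not invent offers or discounts."
    )


prompt = build_subject_line_prompt(
    ["Your March product update", "New integrations are live"],
    n_variations=3,
    audience="product managers at B2B software companies",
)
print(prompt)
```

Treat whatever comes back as raw material for your own edit, not as finished test variants: you still decide which variations fit your audience well enough to be worth testing.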

Improve UX with Knowledge-Driven User Interface (KDUI)

Developing and testing the most user-friendly UI can be expensive and slow, and that’s after you’ve overcome the challenge of identifying patterns and trends in user behavior.

Kate shared how she overcame this challenge using KDUI and the ML algorithms that power it:

One uncommon example of using AI in A/B testing and experimentation processes is Knowledge-Driven User Interface (KDUI). KDUI is a system that helps developers design more effective user interfaces by using machine learning algorithms.

KDUI can track user interactions with the interface and develop an optimal configuration that takes advantage of users’ individual preferences and behaviors. Using this tool, developers are able to create more natural interactions between the user and the interface while reducing development time due to fewer iterations of modifications. My experience with this tool was great, as it provided me with an easy-to-use, smartly designed solution for creating better user interfaces in my A/B testing process.

Kate Duske, Editor-in-Chief at Escape Room Data

A/B Test Parameters for Better Photography

This is a super interesting way Triad Drones is using AI to optimize pictures during the shooting process:

While the copywriting and marketing automation AI tools are on everyone’s mind, I was constantly brainstorming about an AI tool that could help me improve our business and delivery processes. One AI tool that caught my eye was Skycatch AI, which helped in our drone 3-D mapping projects.

While I was working on building our portfolio to give clearer and more elevated pictures for mining and real estate projects, I used Skycatch AI to A/B test, setting different parameters to see which settings would bring us clearer and more succinct pictures of our site.

I’m more interested in AI tools that can automate or ease the painful parts of our business processes. Drone mapping and cultivating the graphic data into meaningful images was a complex task, and Skycatch AI easily helped us ace that part of the business. With A/B testing to recognize which pictures more perfectly suit our presentation, we were able to close 20% more clients by optimizing the AI to our benefit.

Walter Lappert, CEO of Triad Drones

Analyze Data and Identify Patterns

Nick told us how his team used ChatGPT to analyze data and identify patterns, leveling up their A/B testing process:

Yes, we have used AI tools in our A/B testing and experimentation processes!

We have found that using tools like ChatGPT has been incredibly helpful in analyzing data and making informed decisions. Our experience with ChatGPT has been positive, as it has allowed us to quickly and accurately identify patterns and trends in our data.

One tip we have found useful is to ensure that we have a clear understanding of our goals and objectives before beginning any testing or experimentation. This helps us to stay focused and make more informed decisions throughout the process.

Nick Cotter, Founder of newfoundr

One note of caution: don’t rely entirely on ChatGPT’s output to make important decisions.

For one, its output is only as good as its input. If the data contains a bias or error that a human would have spotted, ChatGPT may not catch it and will still give you an answer.

Also, as stated earlier, ChatGPT cannot fully understand context or make value judgments the way a human would. Build a step into your process where someone interprets the results and adds the context and insight that AI cannot provide.
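As an example of the kind of data error a human reviewer should rule out before trusting any AI-generated analysis, here is a sample ratio mismatch (SRM) check. This is our own illustration rather than something the contributors described, and the function name `srm_check`, the assumed 50/50 intended split, and the 0.001 alert threshold are assumptions to adjust to your own test design. It runs a chi-square goodness-of-fit test on visitor counts per variant; a very small p-value suggests the traffic split itself is broken, so the test results shouldn’t be trusted however confident a summary of them sounds.

```python
import math


def srm_check(control_visitors: int, treatment_visitors: int,
              expected_control_share: float = 0.5, alpha: float = 0.001):
    """Chi-square goodness-of-fit test for sample ratio mismatch (two variants).

    Returns (chi_square, p_value, mismatch_detected). The default 50/50 split
    and alpha of 0.001 are assumptions, not universal settings.
    """
    total = control_visitors + treatment_visitors
    if total == 0:
        raise ValueError("no visitors recorded")
    if not 0 < expected_control_share < 1:
        raise ValueError("expected_control_share must be between 0 and 1")

    # Visitor counts we would expect if the split worked as intended.
    expected_control = total * expected_control_share
    expected_treatment = total - expected_control

    chi_square = ((control_visitors - expected_control) ** 2 / expected_control
                  + (treatment_visitors - expected_treatment) ** 2 / expected_treatment)

    # With two variants the statistic has 1 degree of freedom, so its
    # upper-tail probability equals erfc(sqrt(chi_square / 2)).
    p_value = math.erfc(math.sqrt(chi_square / 2))
    return chi_square, p_value, p_value < alpha


# An intended 50/50 split that came out as 5,000 vs 5,400 visitors.
chi_square, p_value, mismatch = srm_check(5000, 5400)
print(f"chi-square={chi_square:.2f}, p={p_value:.6f}, SRM detected: {mismatch}")
```

If the check flags a mismatch, fix the assignment or tracking problem before asking ChatGPT, or anyone else, to interpret the results.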

In Conclusion

Today, AI’s impact on experimentation shows up mostly in how it assists and augments decision-making. That impact is already significant, and it will likely grow as more CRO-focused AI tools are developed and AI gets more powerful.

But keep in mind that AI still struggles with true creativity, lacks intuition, and cannot weigh ethical and moral considerations the way humans can. AI cannot replace human expertise.

A/B testing, and experimentation as a whole, will always require human oversight, and the results still have to be interpreted by humans. AI can be an ally that makes the process easier and faster, but only an ally.

Currently, AI can help smooth out experimentation projects by assisting with qualitative data analysis, personalization, creative ideation, data segmentation, sentiment analysis, first-draft copy and content, and predictive modeling.

None of these outputs is fully dependable until an experienced experimenter has assessed it.

A smart experimentation program finds the balance between the productivity boost of AI tools and the unmatched power of human judgment.

Written By

Trina Moitra, Uwemedimo Usa

Edited By

Carmen Apostu

Contributions By

Alex Birkett, Craig Sullivan, Dave Mullen, David Mannheim, Dilruba Erkan, Iqbal Ali, Kate Duske, Maria Harutyunyan, Nils Koppelmann, Oliver Paton, Shawn David, Shiva Manjunath, Tracy Laranjo, Walter Lappert

3tech GmbH