Thinking with AI: The Human-AI Partnership (Part 1)

What do you think of AI content? Impersonal? Generic, bland, inauthentic? There are many prompting guides out there, and most of them focus on single-prompt content generation, whether that means articles, stories, poems, art, or video. You name it, there’s a supposed “magical prompt” to create something cool.

But doesn’t this approach feel like it’s robbing us of our creative autonomy? Doesn’t it feel like AI is taking the driver’s seat with this approach?

What if it doesn’t have to be that way?

This is the first part of a series aimed at practically improving human-AI collaboration. This isn’t a guide to prompt-engineering your way into having AI magically do everything for you. Instead, this series offers practical rules and principles to help you leverage AI as a cognitive ally, handling tedious tasks and sharpening ideas while keeping you in the creative driver’s seat. Think of this guide as a practical way to amplify your creative abilities, not outsource them.

This guide is the culmination of months of research, firsthand experience, and insights gained from conducting AI ideation workshops with around 200 individuals (so far). It has been tested and refined through those experiences. Now, while this approach has proven effective for me, I’m not suggesting it’s the only way to work with AI; it is just the best approach I’ve found so far.

Personally, the following frameworks and principles have proven invaluable across a diverse range of projects. They helped me develop Ressada, an AI-powered tool for uncovering hidden pain points in user feedback. For my comic series, Mr Jones’s Smoking Bones, AI assisted with the research, development, and structuring of the articles. In co-designing Experimentation.wtf, a game promoting experimentation, AI provided guidance, making up for my lack of experience with game design. These are just a few examples.

Across all those projects, it was important to me that I had an excellent level of control over the process and, therefore, the output. I am a control freak, after all.

Goal of This Guide

My goal with this guide is to share what I’ve learned and help you use AI as a collaborative partner, providing you with a framework to apply to your projects. By understanding how to better use AI, you can claim more control over the creative process.

So, if you’re dissatisfied with your current AI output, feel that AI is too blunt an instrument, or are interested in getting more authentic output, I hope you’ll find actionable insights here.

This first part explores foundational concepts and principles, laying the groundwork for the more practical parts that follow. Stay tuned for upcoming instalments where we’ll dive into actionable strategies and real-world applications of this collaborative approach.

Mapping the Chaotic Creative Process

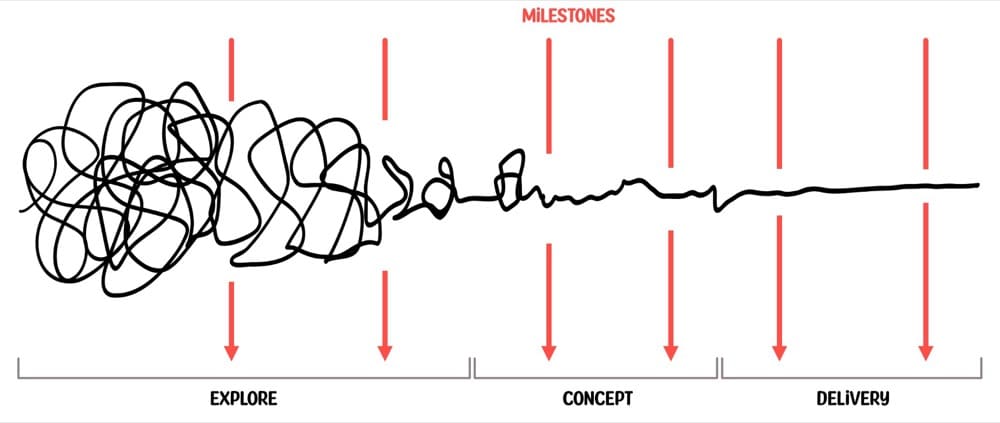

We’ll start with a deliberately simplified linear diagram that maps the act of creating something.

The journey often begins with chaos, a cacophony of brainstorming, dead ends, and half-formed ideas. At this stage, there are so many possible outcomes, and each twist of the line represents a decision about what to keep, what to kill, or how to pivot. This is the “explore” part of the process.

As important decisions are made, we move to the “concept”, where ideas are refined and a clearer picture emerges of precisely what we want to create.

And then, we finalise everything in the “delivery” stage, where we craft a coherent outcome.

Now, does this perfectly mirror reality? Of course not. Think of this as strategic simplification, “scaffolding” to hang our later collaboration strategies on. It’s not perfect, but it’s useful enough for our purpose.

Drivers of the Creative Process

Okay, we’ve got a nice simplified linear process to use as our framework. But what drives this creative process? What provides that rightward momentum? Rick Rubin, in The Creative Act, offers a perspective:

“Most of the time, we are gathering data from the world through the five senses… This is our source material, and from it, we build each creative moment.”

In this case, the “source material” is our own personal experiences, domain knowledge, cultural heritage, and overall knowledge. It defines the shape and trajectory of that messy line on the left side of the diagram, the choices that become the concepts that become the delivery. Think of each twist of the line as an individual creative moment.

Not only is this a simplified view of the creative process, but it’s also very generic. For instance, wouldn’t the process look different if we were creating a particular kind of thing, like an article, story, or business proposal? Well, yes. And if we are experts in a specific field, we’ll likely have our own internal processes. Some may even be documented, but as Ethan Mollick rightly observes, even experts struggle to articulate their own processes.

If you already have a defined process, that’s great. You can use that. But if not, this structure is useful enough as a starting point. We just need to make a simple tweak to make it even more effective.

The Approach

To make this structure really work for us, we need to pin some mini-milestones to our scaffolding. These will be outputs or outcomes generated along the way to our final delivery. For instance, if creating an article, one of the milestones could be fetching relevant quotes from source materials, or perhaps creating an outline for the article.

These are our milestones, and they will become goals for each of our AI interactions.

Don’t worry if you’re stuck on this stage. In the next part of this series, we’ll discuss ways that AI can help us create these milestones. For now, let’s move ahead and learn a bit more about the collaboration partners themselves.

Humans + AI: Understanding The Thinking Partners

AI – The Pattern-Matching Polymath

Renowned literature teacher and author Thomas C. Foster said:

“Part of pattern recognition is talent, but a whole lot of this is practice: if you read enough and give what you read enough thought, you begin to see patterns…”

He was talking about human creativity, but he might as well have been talking about AI, since large language models (LLMs) work on similar principles. They pattern-match at scale. At the simplest level, we can think of LLMs as autocomplete on steroids.

Note: while pattern matching is a core function, it’s important to remember that LLMs use complex neural networks and statistical models to process information, enabling them to perform tasks beyond simple pattern recognition.

We’ll use this simplified, pattern-matching view of how LLMs work so we can leverage that power.

Note again: AI is a broad term, and LLM is a type of AI. For this guide, I’ll be using these terms interchangeably. However, it’s important to remember that AI encompasses many different approaches, while LLMs are a specific type of AI focused on language processing.

Going back to what happens when you give an LLM a prompt: the LLM draws on patterns learned from its extensive training data, identifies patterns that match the input (the prompt you provided), and generates its response based on the statistical likelihood of what best continues that pattern. Which patterns it chooses to match depends on where it places its attention, how much creativity it employs and, of course, relevance.
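To make “statistical likelihoods” and “how much creativity” a little more concrete, here’s a toy sketch in Python. It’s a simplification under stated assumptions: the candidate words and their scores are made up for illustration (a real LLM computes scores for tens of thousands of tokens with a neural network), and temperature is the common sampling setting that loosely plays the role of “creativity.” Low temperatures concentrate probability on the most obvious continuation; higher temperatures give less obvious words a better chance.

```python
import math
import random

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities. Higher temperature flattens the spread."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the largest value for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_word(candidates, temperature, rng):
    """Pick one candidate, weighted by its temperature-adjusted probability."""
    words = list(candidates)
    probs = softmax_with_temperature([candidates[w] for w in words], temperature)
    return rng.choices(words, weights=probs, k=1)[0]

# Hypothetical scores for the word after "Ouch! The spy had stepped on a ..."
candidates = {"nail": 3.0, "rake": 1.5, "tripwire": 0.5, "banana peel": -1.0}

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(list(candidates.values()), t)
    print(f"temperature={t}:", {w: round(p, 2) for w, p in zip(candidates, probs)})

rng = random.Random(42)
print("sampled:", sample_next_word(candidates, temperature=1.0, rng=rng))
```

Run it and “nail” dominates at low temperature, while “rake,” “tripwire,” and “banana peel” gain share as the temperature rises: the obvious association versus the further-reaching ones.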

To explain this a little more, let’s consider the following example.

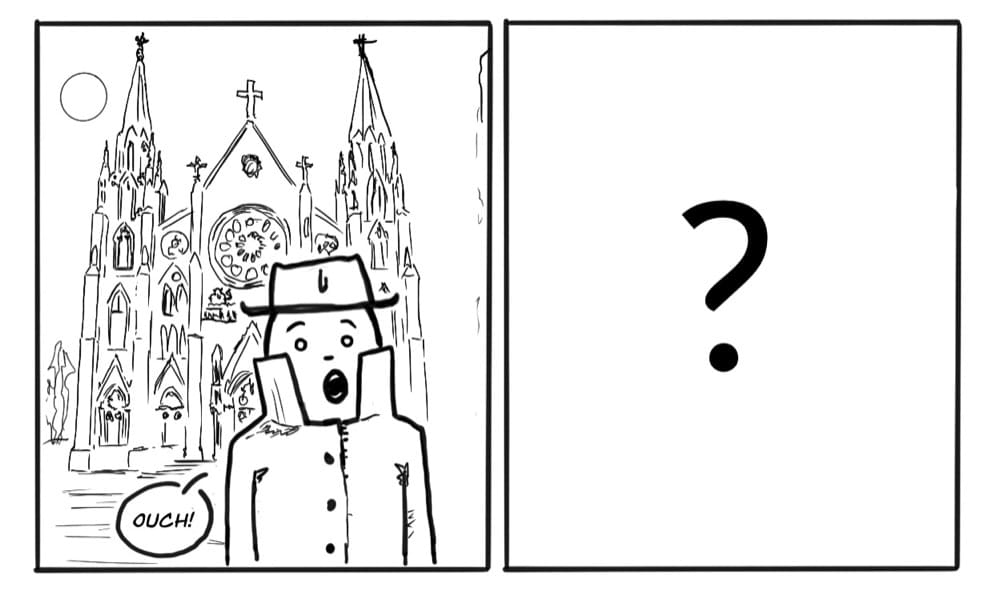

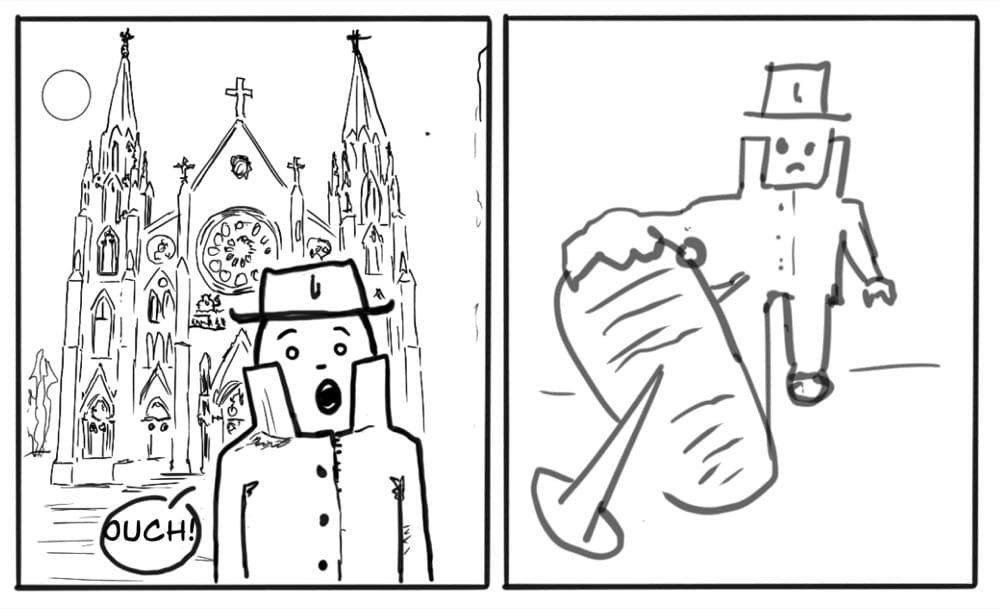

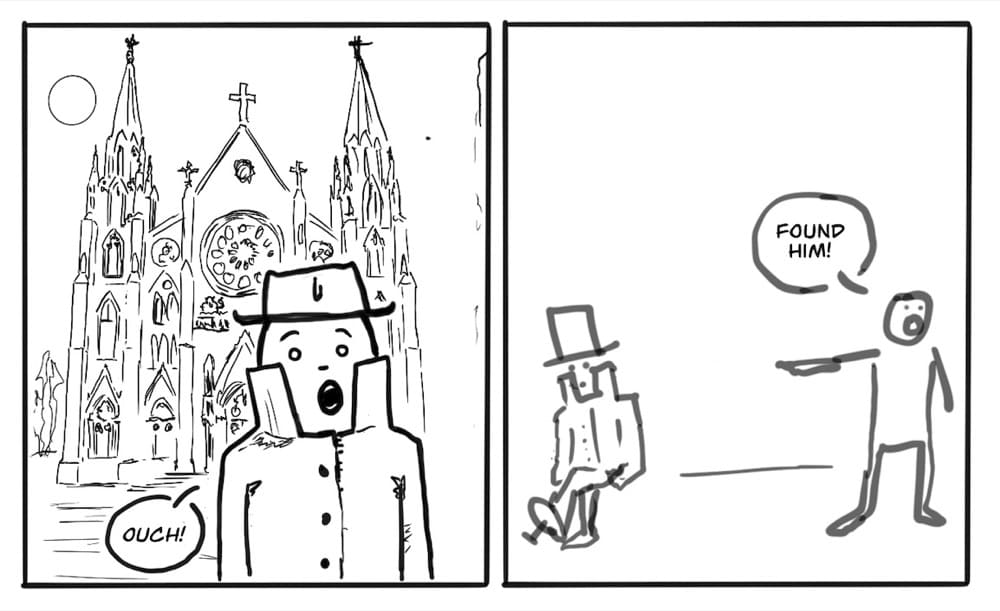

We have a simple comic panel that represents our prompt. Even in this low-fidelity form, the panel contains multiple elements to consider: the character’s position, the word “ouch,” the clothing (e.g., the trench coat and hat), the cathedral in the background, etc.

Now, if I were to ask you to create the next panel, what would you decide to do and why? The rule is that the second panel must follow from the first.

Perhaps you’d zoom out to show the character stepping on a nail, giving context to the “ouch.”

Alternatively, you might zoom out to show more of the city or focus on how the secretive, trench-coat-wearing character has given away his position to the enemy.

Your choice is based on what you focus your attention on and how much creativity you apply, all while maintaining relevance. For instance, in the above example, we decided to focus on the “ouch” and the fact he’s wearing a trench coat (making the assumption he’s a spy). Then we chose a level of creativity to apply while maintaining relevance.

The point is that you choose where to place your attention, and then you make associative leaps in keeping with your desired level of creativity to create your second panel.

Our choices for panel two may or may not reveal something about our personal experiences, beliefs, or cultural backgrounds. Whether they do depends on how deep we reach into ourselves to make the necessary associations. To go deep, we need to make an association that both works and is uniquely personal to us.

For instance, the ‘ouch’ could be caused by a hurtful text message from a loved one.

The deeper we reach, the more authentic and uniquely “us” we get. However, it should be noted that we’ll mostly opt for simple associations as we employ our default System One Thinking (more on that later).

Here’s the thing: we humans are always making these associations. Our brains do this even while we’re daydreaming, constantly making associations to help us solve problems and make predictions about our future. Smart nerds have called this process Episodic Future Simulation.

Now, LLMs pattern-match in a similar way to us. When it comes to where to place their attention, they rely on the language of the prompt. LLMs make statistical calculations to find the most effective and relevant associations, based on the prompt and on where that attention falls. So, spending a lot of words describing the background may increase its importance and, therefore, influence the choices for panel two.

To be clear, panel one represents our prompt, and panel two represents the AI output.

Since LLMs lack life experiences and emotional connections, their associations may be very general or may echo associations others have already made. These choices may be comparable to our “shallow” choices for panel two. If you did the exercise, you will hopefully find this to be the case.

What LLMs possess that we don’t, however, is range and access to a large volume of information. This enables them to make far-reaching connections between topics and subjects we are unfamiliar with. They can pattern-match like a true polymath, an expert in a wide range of fields (though usually only when the prompt steers them that way).

We shouldn’t underestimate this advantage, since numerous studies show that AI often outperforms humans on creative tasks (see references below). However, I’d wager that if you compared the best human creativity has to offer, where humans have reached far and deep to create something authentic, unique, and odd, humans would win. This is backed up by a study (see references) which found that the most creative humans beat AI. But then again, most humans are not that creative.

Now, before you come at me with “but AI this” or “but humans that”, understand that I’m not trying to create a “us versus them” debate. After all, the aim of this guide is for humans and AI to work together. It’s just important to understand the strengths and weaknesses of the respective collaborative partners.

I also want to take a moment to emphasise how important this pattern-matching capability is for the rest of the series. Pattern-matching is the backbone of this and subsequent parts of the guide.

For now, let’s continue with some more important concepts. We want to know how humans and AI can work together, so let’s explore the different modes of collaboration we have available to us.

Modes of Working with AI

I’ve already mentioned System One Thinking, but let’s get a firmer understanding of both systems. Psychologist Daniel Kahneman, in “Thinking, Fast and Slow,” outlined these two systems of thought:

- System One Thinking. This is fast, intuitive thinking. It’s our brain’s automatic, unconscious mode. Quick and effortless, it relies on heuristic patterns and past experiences, requiring little energy. This is our habitual gut reaction based on personal experiences and existing knowledge. System One is always on, constantly processing information in the background.

- System Two Thinking. In contrast, System Two is slow and deliberate. It’s our conscious, analytical, and effortful mode. It requires concentration and is engaged when solving complex problems or making difficult decisions. It is a deliberate, focused process and would be ideal for most tasks were it not for its high energy and focus requirements.

System One is our default because it uses so little energy, so we lean on it for efficiency or out of laziness. It is worth noting that when we think we are being creative, we often just regurgitate old ideas, using System One for easy solutions. At least some engagement of System Two is preferable, but our access to it is limited by the energy it requires.

All this is a balance. A key part of human-AI collaboration is bridging the gaps between these two systems, using them to enhance each other’s strengths. This is where we can explore different modes of working with AI.

Different Modes of Collaboration with AI

In their paper “Human-AI Collaboration: A Review and Methodological Framework,” researchers at Harokopio University of Athens outline three distinct modes of collaboration: human-centric, AI-centric, and symbiotic (which I’ll call synergistic). Each mode leverages System One and Two Thinking and the AI’s pattern-matching abilities in different ways.

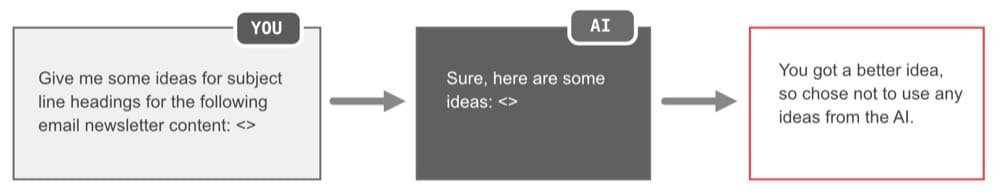

Human-Centric Approach

This mode focuses on brief and occasional use of AI, prioritising human intuition and experience instead. It is ideal when we understand the problem, have a clear vision, or enjoy leading a task. In this mode, AI validates our ideas, fills gaps, and explores alternative perspectives. AI can also be used to keep a check on our biases and thinking fallacies, as well as to validate our System Two Thinking.

Humans remain in charge of decision-making, and AI takes a passenger-seat role. This mode should be used heavily (and perhaps primarily) towards the final stages of the creative process, i.e. the delivery stage.

An example of human-centric interaction could look something like this:

AI-Centric Approach

In contrast, the AI-centric mode of collaboration leans heavily on AI, perhaps to explore topics, generate ideas, perform repetitive tasks, or break down complex tasks such as summarising a paper or restructuring a piece of writing.

In this mode, AI drives most of the process and makes decisions. AI is in the driver’s seat, and we become the passenger. This is the common single-prompt approach to working with AI, where we rely on AI to do the work and make decisions for us.

The AI-centric mode is generally best used during the first two stages of the creative process, as it can lead to inauthenticity in the final delivery.

An example of AI-centric interaction could look something like this:

Synergistic Approach

This mode is a balanced partnership where humans and AI collaborate to enhance each other’s capabilities. It involves two-way interaction, shared decision-making, continuous feedback, and a collective mindset.

This mode is particularly relevant in complex tasks requiring both human intuition and AI’s computational abilities. For example, we might use AI to widen the diversity of ideas, contribute our own ideas, feed those back into the AI, and then develop a system to narrow down and score the final ideas. Lots of back and forth.
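The “narrow down and score” step at the end of that example can be as simple as a scoring checklist. Here’s a minimal Python sketch, assuming three hypothetical criteria (impact, confidence, and ease, each rated 1 to 5 by the human) averaged into a single score. The ideas, ratings, and criteria are invented for illustration; swap in whatever criteria suit your project. Tracking the source of each idea simply makes it visible which ideas came from the AI and which came from you.

```python
from dataclasses import dataclass

@dataclass
class Idea:
    text: str
    source: str          # "ai" or "human": who contributed the idea
    impact: int = 0      # hypothetical 1-5 ratings, assigned by the human
    confidence: int = 0
    ease: int = 0

    def score(self) -> float:
        # Equal-weight average of the three ratings (an assumption; weight as you see fit).
        return (self.impact + self.confidence + self.ease) / 3

def shortlist(ideas, top_n=3):
    """Narrow a widened pool of ideas down to the top_n highest-scoring ones."""
    return sorted(ideas, key=lambda idea: idea.score(), reverse=True)[:top_n]

# Hypothetical pool: AI widened the options, the human added one and rated them all.
pool = [
    Idea("Add a progress bar to checkout", "ai", impact=3, confidence=4, ease=5),
    Idea("Let users save their basket for later", "ai", impact=4, confidence=3, ease=3),
    Idea("Show delivery cost on the product page", "human", impact=5, confidence=4, ease=4),
    Idea("Gamify checkout with badges", "ai", impact=2, confidence=2, ease=2),
]

for idea in shortlist(pool, top_n=2):
    print(idea.source, round(idea.score(), 2), idea.text)
```

The human stays in charge of the ratings; the code only does the tedious sorting, which is the division of labour this mode aims for.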

This collaborative approach is very powerful but can also be complex to manage.

An example of synergistic interaction could look something like this:

We’ll cover practical advice for all these modes later in the series.

Overall, each of the three modes has strengths, weaknesses and uses. In truth, we must shift between all three modes of collaboration throughout the creative process. But how do we choose which mode to use? To determine this, let’s explore the final two concepts in this article: “flow” and the “Kolb experiential learning model”.

A Framework for AI-Human Collaboration Synergy

Flow: Psychologist Mihaly Csikszentmihalyi described flow, in his 1990 book of the same name, as a state of deep immersion and focus in an activity, a feeling of losing oneself in the task. It’s optimal engagement in an activity. It requires focused attention and deliberate action and engages System Two in a rewarding and not overly taxing way.

The key requirements for achieving flow are:

- Having a good balance between challenge and skill. The task must be challenging enough to be engaging, thus activating System Two, but not so difficult that it induces anxiety.

- Having clear goals and immediate feedback. These allow us to adjust our actions rapidly and maintain momentum.

Luckily, the Kolb Learning Cycle is perfect for applying these requirements in a simple framework. The Kolb Learning Cycle is an experiential learning model developed by David Kolb in 1984. It’s an approach taught to teachers to help them design their classes for optimal learning (at least it was when I was last teaching). The Kolb Learning Cycle states that for learning to be most effective, a learner needs to experience four stages in a cycle:

1. Concrete Experience: Involves having a concrete and immediate experience. This is the raw, sensory engagement with a situation or activity and observing what happens.

2. Reflective Observation: Involves reflecting on the experience from multiple perspectives. Critically examine what happened to identify patterns, feelings, discrepancies, strengths, weaknesses, assumptions, etc.

3. Abstract Conceptualisation: Involves making sense of your reflections by connecting them to existing knowledge. Here, you update existing theories, models, insights, frameworks, and generalisations or create new ones.

4. Active Experimentation: Based on abstract conceptualisations, plan and actively test new insights or theories in new situations or contexts to see what happens. You’ll then take the learnings back through to Concrete Experience, thus restarting the cycle.

Now, while this cycle is primarily designed to help learn stuff, it is also helpful for a wide range of applications, including applying it to our human-AI collaboration efforts in a way that helps us achieve flow.

To see how this can be applied, let’s consider a specific example where our goal is to develop experiment ideas for a pre-defined user problem.

Here’s what each stage might look like:

1. Experience an interaction and outcome: This might be where we prompt AI to generate experiment ideas, perhaps using an AI-centric approach. We experience the prompt and the output.

2. Reflect: We think about the interaction: How was the output? How was the process? Did we feel the right level of control over the output? We reflect on the experience and observe the output, reviewing the results from multiple angles.

3. Conceptualise: We connect these insights and information with our broader knowledge base, developing new theories, etc. Perhaps we believe that if we break down the task into smaller steps, we can incorporate more human input, thus improving the output and our level of control in the process.

4. Experiment: We develop and try this new approach with new steps, taking a simplified version of the user problem. We check the output and take the learnings back through the cycle.

Following this, we loop back to concrete experience and repeat the cycle.
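If it helps to see the cycle as a structure, here’s a minimal Python sketch of the loop. It’s illustrative only: `ask_ai`, `reflect`, and `conceptualise` are hypothetical placeholders you would supply (the first standing in for whatever AI tool you use, the other two for your own thinking), and the “checkout drop-off” user problem is invented for the example. The point is the shape: each pass records what happened, and the revised approach that comes out of reflection becomes the next concrete experience.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class CycleLog:
    """A record of each pass through the cycle."""
    passes: list = field(default_factory=list)

def kolb_cycle(
    approach: str,
    ask_ai: Callable[[str], str],              # hypothetical: sends a prompt, returns AI output
    reflect: Callable[[str, str], str],        # you: review the approach and its output
    conceptualise: Callable[[str, str], str],  # you: turn the reflection into a revised approach
    max_passes: int = 3,
) -> CycleLog:
    log = CycleLog()
    for _ in range(max_passes):
        # 1. Concrete Experience: run the interaction and observe the output.
        output = ask_ai(approach)
        # 2. Reflective Observation: how was the output? How was the process?
        reflection = reflect(approach, output)
        log.passes.append({"approach": approach, "output": output, "reflection": reflection})
        # 3. Abstract Conceptualisation: connect the reflection to what you already know.
        # 4. Active Experimentation: the revised approach is what the next pass tries.
        approach = conceptualise(approach, reflection)
    return log

# Stand-ins for illustration only.
def fake_ai(prompt: str) -> str:
    return f"ideas for: {prompt}"

def my_reflection(prompt: str, output: str) -> str:
    return "too generic; I want more control over each step"

def my_concept(prompt: str, reflection: str) -> str:
    return prompt + " (step by step, with my notes)"

log = kolb_cycle("experiment ideas for checkout drop-off", fake_ai, my_reflection, my_concept, max_passes=2)
for p in log.passes:
    print(p["approach"])
```

Notice that the code does nothing clever in stages 2 and 3; those are deliberately left to you, which is what keeps you in the driver’s seat.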

Our interactions with AI should ideally follow this cycle. You’ll notice that rapid feedback is built into the cycle (one of the requirements for “flow”). Not only that, but the abstract conceptualisation and active experimentation steps mean we can better tune our interactions for a “best fit” between System One and System Two Thinking.

Additionally, as we iterate, we learn how to collaborate with AI and improve our collaboration with each iteration. We decide when to shift our mode of collaboration. We review AI output, get a better understanding of why the output was given, and then tweak our approach. All this approach needs is a mindful application of this cycle and tweaking of all the factors and variables we’ve mentioned in this article.

What is the best thing about using the Kolb cycle? The stages are very much focused on you. You reflect on the interaction you just had with AI. You apply these observations and reflections to everything you’ve already learned and decide what to try next with this newfound information! Applying the Kolb cycle to your interactions means you’re in the driver’s seat for the entire interaction. You’re the boss.

Furthermore, the Kolb learning cycle is a tried-and-tested framework widely used in learning institutions. Why reinvent the wheel?

Summary

So far, we’ve explored the often messy nature of creative work. We’ve also seen how creating an overall ‘scaffolding’ and marking some milestones within that scaffolding can make integrating AI collaboration into our processes easier without defining them in detail.

We’ve learned that both humans and AI rely on pattern-matching to form associations and make creative decisions. Where attention is placed and how much creativity is applied determine what to pattern-match and how. We also learned that AI and humans have different strengths and weaknesses in this area: perhaps AI is better at pattern-matching at scale, and humans are better at meaning-making (for a deeper level of authenticity).

Finally, we examined various modes of working with AI, where the amount of AI used is variable. We also developed a framework to maximise learning from our collaborative efforts and formalise a structure that enables us to experiment with all the principles we’ve learned.

This is all well and good, but we still need practical guidance on using AI in these specific interactions. We need to know how to design our interactions to get the best results from AI, especially regarding the magical synergistic collaboration mode. The next part of the series will address these points, focusing on interaction patterns that we can practically apply to various tasks.

References

- Creativity and Your Brain, Indre Viskontas, Ph.D

- Episodic future simulation

- Collaborative Intelligence, Dawna Markova & Angie McArthur

- The Creative Act, Rick Rubin

- Human-AI Collaboration: A Review and Methodological Framework

- Hyper-focus, Chris Bailey

- Kolb, D. A. (1984). Experiential learning: Experience as the source of learning and development. Prentice-Hall.

- Schacter, D. L., & Addis, D. R. (2007). The cognitive neuroscience of constructive memory: remembering the past and imagining the future. Philosophical Transactions of the Royal Society B: Biological Sciences, 362(1481), 773-786.

- How LLMs choose the next word

- AI is more creative than humans

- AI and Human Co-Creation is better

- LLMs more creative than humans on divergent thinking tasks

- AI Outperforms humans

- Large Language Models for Idea Generation in Innovation

- Only the best humans win in divergent thinking tasks

- Co-Intelligence for high-end research

Written By

Iqbal Ali

Edited By

Carmen Apostu