Exploring the Use of Feature Flags and Rollouts in Product Experimentation

Feature flags and rollouts empower product teams to release features gradually, test in production, and make data-driven product optimizations.

Key points:

- Feature flags turn features on or off, while rollouts use feature flags to gradually increase users’ exposure to new features.

- Feature flags are no longer a niche tool for software development; in product experimentation, they provide real-world insights from production users that guide product optimization.

- Practical applications and benefits of feature flags and rollouts include feature testing, personalized user experiences, and kill switches.

What are the chances a new feature release goes horribly wrong?

Good product teams don’t even want to find out. The stakes are high, and catastrophic failures are possible, even for tested features.

To mitigate these risks, many turn to feature flags and rollouts. These act as a safety net when a new feature malfunctions. They help contain the damage and reverse it quickly.

But that isn’t all. We’ll discuss how feature flags and rollouts help accelerate release cycles, drive product experimentation, and fine-tune user experiences with granular control over feature availability and distribution.

First off…

What Are Feature Flags?

Although feature flags and rollouts might sound similar and are sometimes used interchangeably, they are not the same.

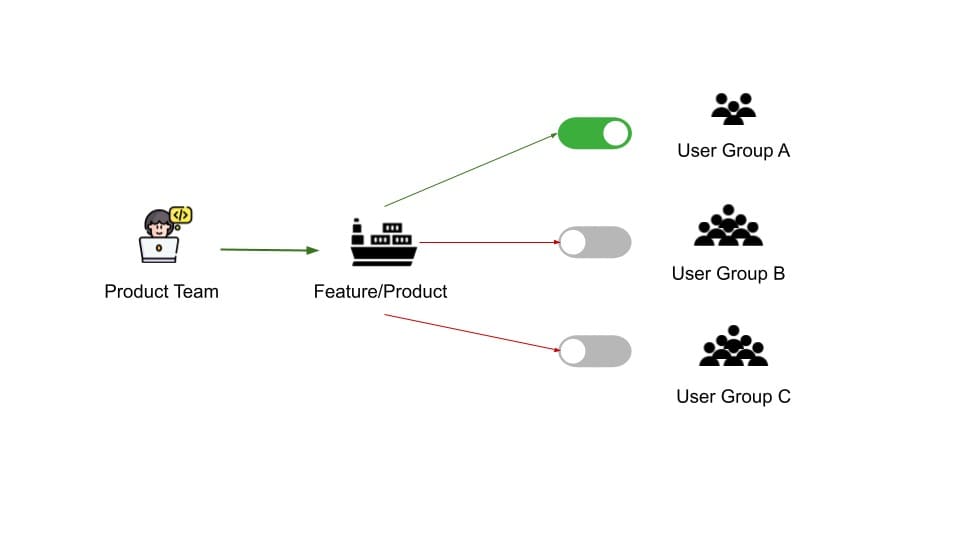

Feature flags (also known as feature toggles or switches) are a tool that lets teams turn specific features within an application on or off without redeploying code. This allows for continuous experimentation and iterative development by controlling which users see a new feature and using data to decide whether to keep it.
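To make the idea concrete, here is a minimal Python sketch of a runtime toggle. The `FlagStore` class and the `new_dashboard` flag name are hypothetical stand-ins for a flag service; a real setup would fetch flag values from a remote service or config file so they can change without a redeploy.

```python
from dataclasses import dataclass, field


@dataclass
class FlagStore:
    """Hypothetical in-memory stand-in for a feature flag service."""
    flags: dict[str, bool] = field(default_factory=dict)

    def set(self, name: str, enabled: bool) -> None:
        self.flags[name] = enabled

    def is_enabled(self, name: str, default: bool = False) -> bool:
        # Unknown flags fall back to a safe default (off).
        return self.flags.get(name, default)


def render_dashboard(store: FlagStore) -> str:
    # Both code paths ship in the same deployment; the flag decides which runs.
    if store.is_enabled("new_dashboard"):
        return "new dashboard"
    return "classic dashboard"


store = FlagStore()
print(render_dashboard(store))   # classic dashboard
store.set("new_dashboard", True)  # flipped at runtime, no redeploy
print(render_dashboard(store))   # new dashboard
```

Defaulting unknown flags to "off" means a missing or misspelled flag keeps users on the existing behavior rather than exposing unfinished work.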

With feature flags, you can:

- Reduce deployment risk: deploy code without releasing it immediately, which enables testing and quick rollback if needed.

- Accelerate release cycles: merge hidden features into production early, enabling smaller and more frequent updates.

- Conduct more realistic tests of new features with real users in production, for better data and faster feedback.

- Run more targeted rollouts by controlling which users see new features, enabling strategies like canary releases and A/B testing.

- Empower non-developers to control feature visibility, reducing their dependence on developers when experimenting with or changing features and making the team more agile.

There are nine main types of feature flags.

Types of Feature Flags

You’ll find these types intuitive because they are named after what they do or how they behave:

1. Release flags: These separate the code deployment from the feature release. What this means is that you can integrate features into the main codebase and deploy them into production without making them available to users. This is used in phased rollouts and canary releases where features are gradually released to different user groups (more on rollouts later in this article).

2. Experimentation flags: This type is used for A/B testing, algorithm optimization, and content personalization. It presents different versions of a feature to various user groups to collect user behavior data. This is then used to measure the performance and impact of features.

At Albert, we used feature flags to run A/B tests on different user segments without disrupting the entire user base. This helped us gather real-world feedback and data on the new feature’s performance. For example, when we decided to revamp the user dashboard, we used feature flags to show the new version to just 10% of our users. Their interactions provided invaluable insights, which we used to make crucial adjustments before a wider rollout.

Will Yang, Head of Growth and Marketing, Instrumentl

3. Operational flags: These are used for quick incident response, maintenance mode, managing logging levels, and adjusting system behavior in real time, because they let product teams control the operational side of the system without directly affecting the user interface.

4. Permission flags: As the name suggests, these flags control users’ access to features, usually by user segments defined by attributes, roles, or subscription tiers. They are also used to grant access to features in beta testing.

5. Kill switch flags: Think of these as the ‘big red button’ used in emergencies to shut down specific features without rolling back an entire release. They come in handy when a newly released feature turns out to be detrimental to user experience by creating bugs, causing poor performance, or introducing security vulnerabilities.

These five types of feature flags are defined by their function. The next four types are defined by how and where they’re used, and their functions overlap with those of the previous five.

6. Long-lived flags: These flags remain in the system for extended periods, which is ideal for controlling features that are still under development or require continuous monitoring.

7. Short-lived flags: Unlike the previous type, short-lived flags are bounded by predetermined timeframes. They’re designed for a specific purpose, often within a single release cycle or quick experiment, and then they’re removed from the codebase.

8. User-based flags: These flags control feature access by user attributes or behaviors. Their prime use cases are personalization, dynamic pricing, and role-based feature access.

9. System-based flags: These feature flags allow for automated feature toggling based on system conditions or events. They are used in load balancing, failover mechanisms, and dynamic security measures.
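System-based flags and kill switches can work together: instead of waiting for someone to press the big red button, a system condition flips the flag. The following sketch, with hypothetical names and illustrative thresholds, tracks the error rate of a new code path over a sliding window of requests, turns the path off once the rate exceeds a limit, and falls back to the existing behavior.

```python
from collections import deque
from typing import Callable, TypeVar

T = TypeVar("T")


class AutoKillSwitch:
    """Turns a feature off when its recent error rate exceeds a threshold.

    Hypothetical sketch: window size, threshold, and minimum sample count
    are illustrative values, not recommendations.
    """

    def __init__(self, window: int = 100, max_error_rate: float = 0.05,
                 min_samples: int = 20) -> None:
        self.results = deque(maxlen=window)  # True means the request failed
        self.max_error_rate = max_error_rate
        self.min_samples = min_samples
        self.enabled = True

    def record(self, failed: bool) -> None:
        self.results.append(failed)
        if len(self.results) >= self.min_samples:
            error_rate = sum(self.results) / len(self.results)
            if error_rate > self.max_error_rate:
                # Trip the switch; it stays off until someone calls reset().
                self.enabled = False

    def reset(self) -> None:
        self.results.clear()
        self.enabled = True


def handle_request(switch: AutoKillSwitch, new_path: Callable[[], T],
                   old_path: Callable[[], T]) -> T:
    """Serve the new code path while the switch is on, else the old one."""
    if not switch.enabled:
        return old_path()
    try:
        result = new_path()
    except Exception:
        switch.record(failed=True)
        return old_path()  # fall back to existing behavior for this request
    switch.record(failed=False)
    return result


def flaky_new_feature() -> str:
    raise RuntimeError("simulated bug in the new feature")


def existing_feature() -> str:
    return "existing"


switch = AutoKillSwitch(window=10, max_error_rate=0.2, min_samples=5)
responses = [handle_request(switch, flaky_new_feature, existing_feature) for _ in range(5)]
print(responses, switch.enabled)  # five 'existing' responses, then False
```

Requiring a minimum number of samples keeps a single early failure from tripping the switch; keeping it off until a manual reset leaves the decision to re-enable with a person.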

Concrete Use Cases of Feature Flags

Feature flags have long been part of a developer’s toolkit. They evolved alongside practices like CI/CD (continuous integration and continuous delivery), where software can be released to production anytime.

As they became less of a niche tool, feature flags were integrated into comprehensive software delivery platforms, cementing their role in software development and product experimentation.

This has led to expanded use cases, such as:

1. Testing in production: Feature flags allow developers to test features directly in the production environment without exposing them to the entire user base. This minimizes risk and provides a kill switch for when things go sideways.

This helps when you want to learn about the true performance of a feature in production, and staging environments just don’t cut it.

When we launched a new dashboard interface […], we used feature flags to limit its visibility to 5% of our users—those who regularly use several features every day. In addition to providing information about usability, this allowed us to determine how much the feature would increase the total system load. We might optimize the interface before a wider release by iteratively modifying it in response to direct user feedback and incremental performance statistics.

Moreover, feature flags support a strategy of gradual deployment. Following the first round of testing, we progressively increased the user base while modifying the functionality in response to ongoing input.

Alex Ginovski, Head of Product and Engineering, Enhancv

As two experts in the field note, understanding a feature’s true performance means tracking both business and learning metrics:

In most cases, when we roll out the new feature, we are interested in both business performance and learning metrics.

The performance metrics highly depend on the feature and the type of business we deal with. Mostly, those are revenue-related metrics (Revenue per User, Revenue per Order, Sale Conversion rate, etc.).

Learning metrics mostly come from the micro-conversions that refer to certain interactions with the feature that we built. Quite often adoption rate is one of the focus metrics. Additionally, sometimes it is helpful to integrate NPS for the new features to learn about their acceptance by the target group.

Anastasia Shvedova, Lead Consultant Product Experimentation, and Michael Klöpping, Head of Engineering, KonversionsKRAFT

2. Canary releases: Roll out a new feature to a small subset of users before making it available to everyone else. This allows real-world testing and validation in a production environment with minimal fallout if things go wrong. Feature flags enable this. If necessary, you can quickly employ the next use case of feature flags.

During beta testing of a new AI-driven recommendation engine for our application, this was an effective application. We enabled the engine for a limited, targeted user group using feature signals while closely monitoring performance and user interaction. This enabled us to detect flaws and enhance the functionality prior to its wider implementation.

Jessica Shee, Senior Tech and Marketing Manager, M3 Data Recovery

3. Rollback/kill switch: There will come a time when you need a quick ‘undo’ button to remove a problematic feature post-release without impacting the entire codebase.

4. Server-side A/B testing: Toggle features for different user groups to compare performance. This differs from client-side A/B testing, where changes happen in the user’s web browser, for example. In fact, developers were using this approach to compare features’ performance long before cloud infrastructure and DevOps practices made it more common.

5. Feature gating: Target rollouts to specific user groups, for example for beta testing, or restrict feature access to certain users based on regulations, subscriptions, access levels, or user groups.
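The business and learning metrics that Anastasia Shvedova and Michael Klöpping mention under testing in production are simple per-group ratios once per-user outcomes are collected. This sketch assumes a hypothetical per-user record (`UserOutcome`) and made-up data, and computes conversion rate, revenue per user, and adoption rate for each group; NPS is left out because it comes from survey responses rather than behavioral events.

```python
from dataclasses import dataclass


@dataclass
class UserOutcome:
    group: str          # "control" or "treatment" (hypothetical labels)
    converted: bool     # completed a purchase: business metric input
    revenue: float      # revenue attributed to this user
    used_feature: bool  # interacted with the new feature: learning metric input


def summarize(outcomes: list[UserOutcome], group: str) -> dict[str, float]:
    """Compute per-group conversion rate, revenue per user, and adoption rate."""
    rows = [o for o in outcomes if o.group == group]
    n = len(rows)
    if n == 0:
        raise ValueError(f"no users in group {group!r}")
    return {
        "users": float(n),
        "conversion_rate": sum(o.converted for o in rows) / n,
        "revenue_per_user": sum(o.revenue for o in rows) / n,
        "adoption_rate": sum(o.used_feature for o in rows) / n,
    }


# Illustrative, made-up data: the control group cannot see the feature,
# so its adoption rate is 0 by construction.
outcomes = [
    UserOutcome("control", True, 40.0, False),
    UserOutcome("control", False, 0.0, False),
    UserOutcome("treatment", True, 50.0, True),
    UserOutcome("treatment", True, 40.0, True),
    UserOutcome("treatment", False, 0.0, False),
    UserOutcome("treatment", False, 0.0, True),
]

for g in ("control", "treatment"):
    print(g, summarize(outcomes, g))
```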

Other use cases include:

- Minimizing disruption during updates

- Feature sunsetting, and

- Phased rollouts, where you gradually introduce features to users in percentage increments

We integrated feature indicators with phased deployment for rollouts. The feature was initially enabled for 10% of users; this percentage increased incrementally as user confidence in its stability increased. A regulated approach was implemented to mitigate risk and guarantee a seamless user experience.

Jessica Shee
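Percentage-based phased rollouts need each user to land on the same side of the threshold on every visit. A common way to get that is to hash a stable user ID into a fixed number of buckets, as in this sketch. The flag key `new-dashboard` and the choice of SHA-256 with 10,000 buckets are assumptions for illustration, not any particular vendor's algorithm.

```python
import hashlib

BUCKETS = 10_000  # assumed granularity: 0.01% per bucket


def bucket(flag_key: str, user_id: str) -> int:
    """Map a user to a stable bucket in [0, BUCKETS) for a given flag."""
    # Salting with the flag key gives each flag an independent split.
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:15], 16) % BUCKETS


def in_rollout(flag_key: str, user_id: str, percentage: float) -> bool:
    """True if the user falls inside the current rollout percentage."""
    # Raising the percentage only adds buckets, so users who already see
    # the feature keep seeing it as the rollout grows.
    return bucket(flag_key, user_id) < percentage * BUCKETS / 100


users = [f"user-{i}" for i in range(10_000)]
for pct in (10, 25, 50, 100):
    exposed = sum(in_rollout("new-dashboard", u, pct) for u in users)
    print(f"{pct:>3}% target -> {exposed} of {len(users)} users exposed")
```

Because the threshold only moves up, users who saw the feature at 10% keep seeing it at 25%, which avoids a flickering experience during the ramp.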

How Do Feature Flags Work in Convert Experiences?

This video demonstrates how feature flags work within Convert’s Fullstack product:

Convert’s feature flagging integrates a JavaScript SDK into your application (compatible with any JS application).

You begin with the starter code from your project settings in the Convert dashboard. Then, within your Convert Fullstack project, define specific areas and features in your app to target for the experiment.

Here, you can create variations with the feature values you want (JSON, text attributes, etc.) to serve your variation-specific customization logic. You can also toggle these features within the code.

Optionally, set up event listeners to monitor feature activation and user interactions for debugging.

Once set up, Convert allows you to assign users to different feature variations using any kind of user ID. The bucketing is randomized yet deterministic for a specific user context. You can track conversions to analyze feature performance with preset and custom goals.

All of this data is accessible through Convert’s robust reporting and analysis tool.
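Bucketing that is randomized yet deterministic for a given user can be illustrated with weighted variations. The sketch below shows one generic way to get that property; it is not Convert's implementation, and the experiment key, variation names, and weights are hypothetical.

```python
import hashlib
from collections import Counter


def assign_variation(experiment_key: str, user_id: str,
                     weights: dict[str, float]) -> str:
    """Deterministically map a user to a variation using percentage weights."""
    if not weights or abs(sum(weights.values()) - 100) > 1e-9:
        raise ValueError("variation weights must sum to 100")
    digest = hashlib.sha256(f"{experiment_key}:{user_id}".encode("utf-8")).digest()
    # Turn the first 8 bytes into a point in [0, 100].
    point = int.from_bytes(digest[:8], "big") / 2**64 * 100
    cumulative = 0.0
    for name, weight in weights.items():
        cumulative += weight
        if point < cumulative:
            return name
    # Reached only if floating-point rounding leaves point at or above the
    # final cumulative weight; assign the last variation in that case.
    return name


weights = {"control": 50, "variant_a": 25, "variant_b": 25}
print(assign_variation("dashboard-test", "user-42", weights))
counts = Counter(assign_variation("dashboard-test", f"user-{i}", weights)
                 for i in range(10_000))
print(counts)
```

The hash looks random across users, so traffic splits close to the configured weights, yet the same user and experiment key always produce the same variation.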

What Are Feature Rollouts?

Feature rollout is a software development strategy for gradually introducing a new product or feature to users. Its main goal is to ensure the product—whether a minimum viable product (MVP) or a fully developed feature—performs as intended before reaching the entire user base.

This is closely linked to progressive delivery, which involves releasing new features to a smaller user group first to assess their impact before a wider release.

Feature rollouts control and phase the release of a validated feature, ensuring that the entire product system can handle the increased usage load.

Anastasia and Michael explain how they do this at KonversionsKRAFT:

Sometimes the step [after a successful feature A/B test and] before the native development would be to put the variant on 100% of audience traffic.

However, we highly recommend limiting the timeframe for this adjustment for two reasons:

- For some tools this means really high costs due to the increased amount of impressions.

- The experiment code is still fragile and the changes on the control-side can affect the variant functionality.

What we practice a lot is the phased rollout strategy that follows the following logic.

We might:

- Rollout to a smaller amount of users and then gradually increase the amount of the affected traffic (soft roll out)

- Rollout to one segment of users (for example if we want to reduce the quality assurance effort). The segmentation can be both technical (by browser/device) or socio-demographic (a customer group with a specific consumer behavior).

- Rollout in one country as a starting point.

Anastasia Shvedova and Michael Klöpping
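Segment-first rollouts like these (by browser, device, or country) can be expressed as targeting rules evaluated against user attributes. This sketch assumes a hypothetical `User` record and treats a flag as enabled only when every rule matches; real flag platforms offer richer operators, so read it as an illustration rather than any vendor's rule engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    user_id: str
    country: str
    device: str
    tier: str


@dataclass(frozen=True)
class TargetingRule:
    """Hypothetical rule: the named attribute must be one of the allowed values."""
    attribute: str
    allowed: frozenset


def matches(user: User, rules: list[TargetingRule]) -> bool:
    # All rules must pass (logical AND); an empty rule list targets everyone.
    return all(getattr(user, rule.attribute) in rule.allowed for rule in rules)


# Pilot: desktop users in one country first.
pilot_rules = [
    TargetingRule("country", frozenset({"DE"})),
    TargetingRule("device", frozenset({"desktop"})),
]

alice = User("u1", country="DE", device="desktop", tier="pro")
bob = User("u2", country="US", device="desktop", tier="free")
print(matches(alice, pilot_rules))  # True
print(matches(bob, pilot_rules))    # False
```

Targeting and percentage rollouts combine naturally: a user first has to match the segment rules, and only then is the rollout percentage applied.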

This careful management helps maintain system stability and performance as you introduce new features. On top of that, you can also:

- Catch bugs early, for faster fixes and a better user experience.

- Minimize potential issues for the entire user base.

- Enable real-world testing with actual users, providing valuable feedback for improvements.

- Personalize user experience by tailoring feature rollouts to specific user segments.

- Acquire data on how users interact with new features, a key asset for product optimization.

Note that A/B tests are used to evaluate untested ideas before changes are released to everyone, and they typically split traffic between control and treatment groups in a fixed ratio, often 50/50. In contrast, rollouts offer more granular control over which user groups see a feature, enabling a phased introduction to the whole user base.

How Are Rollouts Used?

Here lies the key difference between feature flags and rollouts.

Feature rollouts typically follow a successful experiment. They are used to ramp up traffic away from the control treatment whilst system and customer behaviour metrics are monitored. As an example, when re-platforming technology, an experiment between a new variant can de-risk the decision to move, and the roll-out will de-risk the actual move.

Andrew Brimble, Experimentation Lead at Specsavers

Think of it this way: Feature flags are like light switches that can turn a feature on or off. Rollouts, then, are like dimmer switches that use feature flags to gradually increase the brightness of a new feature so more and more users can experience it.

Makes sense?

That said, you’d use that ‘dimmer switch’ in the following cases:

- Targeted user feedback: Release a feature to specific user segments to gather targeted feedback from those most likely to use it. Use this input to refine the feature and better meet user needs.

- Personalization: Provide distinct user experiences to specific segments (based on preferences, behavior, or demographics).

We once conducted a successful experiment for a client in the field of educational e-commerce. User research indicated that different target groups on the website had varied needs regarding category preferences. To address this, the team proposed a feature that allowed personalized views of selected categories in user accounts.

Given the complexity of this new journey step and its integration with backend logic, implementing it as an A/B test was deemed too fragile. Instead, we rolled out the feature as a flag for 100% of selected user segments. This approach allowed us to control the feature’s availability dynamically.

The results were impressive: interaction rates with categories increased significantly across target groups. Consequently, our client decided to implement the feature fully in one country as a pilot.

Anastasia Shvedova and Michael Klöpping

- Controlled testing: Test with a limited audience before releasing to a large user base, and eventually the entire user base. This way, you spot bugs, usability problems, etc., without impacting everyone’s experience.

- Limited impact release: If a new feature has unforeseen negative consequences, a rollout limits the number of affected users, making it easier to roll back the change or implement fixes quickly.

At Instrumentl, we combined feature flags with gradual rollouts to mitigate risks and catch any issues early on. For example, when rolling out a new grant-tracking feature, we started with a feature flag to test it in a controlled environment.

Once the initial bugs were ironed out, we used a phased rollout strategy to incrementally expose the feature to more users. This approach not only protected user experience but also provided multiple checkpoints to optimize the feature. The key is to continuously monitor user feedback, making adjustments as needed, ensuring the feature enhances user satisfaction and meets business goals.

Will Yang
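The dimmer-switch idea, combined with Andrew Brimble's point about monitoring system and customer behavior metrics while ramping up, can be sketched as a small state machine: advance one stage while guardrails hold, drop to 0% when they fail. The stage percentages and guardrail thresholds below are hypothetical examples; in practice they would come from your own risk tolerance and baseline data.

```python
from dataclasses import dataclass, field


def guardrails_ok(error_rate: float, conversion_rate: float,
                  baseline_conversion: float) -> bool:
    # Hypothetical guardrails: at most 1% errors, and conversion no more
    # than 5% (relative) below the pre-rollout baseline.
    return error_rate <= 0.01 and conversion_rate >= baseline_conversion * 0.95


@dataclass
class Rollout:
    stages: list[float] = field(default_factory=lambda: [1, 5, 25, 50, 100])
    stage_index: int = -1      # -1 means not started (0% exposure)
    rolled_back: bool = False

    @property
    def percentage(self) -> float:
        if self.rolled_back or self.stage_index < 0:
            return 0
        return self.stages[self.stage_index]

    def step(self, healthy: bool) -> float:
        """Advance one stage if guardrails pass; otherwise roll back to 0%."""
        if not healthy:
            self.rolled_back = True
        elif not self.rolled_back:
            self.stage_index = min(self.stage_index + 1, len(self.stages) - 1)
        return self.percentage


rollout = Rollout()
print(rollout.step(guardrails_ok(0.002, 0.041, 0.040)))  # 1
print(rollout.step(guardrails_ok(0.004, 0.040, 0.040)))  # 5
print(rollout.step(guardrails_ok(0.030, 0.039, 0.040)))  # 0 (error rate too high)
```

Once rolled back, the sketch stays at 0% even if later checks pass, so resuming the ramp is an explicit decision after the issue is fixed.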

Keep in mind that incorrect usage of feature rollouts (and even feature flags) can be detrimental to your business and users.

For example, if there isn’t a clearly defined process for removing feature flags after they have served their purpose, such as after confirming the feature is widely accepted by users and stable in the production environment, you risk building up technical debt. These flags can clutter your codebase and make maintaining the code difficult.

If an A/B test was successful, we recommend our clients to proceed with the native development of the feature on their page.

If we did an experiment with the help of a feature flag, then this step is not required since [we developed] natively from the very beginning, the feature rollout becomes much easier. However, our recommendation is still to make a code cleanup and ensure that everything looks correct from the technical perspective.

Anastasia Shvedova and Michael Klöpping
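One lightweight defense against flag debt is a periodic report of flags that look ready for cleanup. This sketch assumes a hypothetical flag inventory with creation dates and current rollout percentages, and lists short-lived flags that are fully on or fully off and older than an age limit; long-lived flags such as kill switches are marked as permanent and skipped. The 90-day limit and the dates are illustrative.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class FlagRecord:
    key: str
    created: date
    rollout_percentage: float
    permanent: bool = False  # long-lived by design, e.g. an operational kill switch


def stale_flags(flags: list[FlagRecord], today: date,
                max_age_days: int = 90) -> list[str]:
    """List short-lived flags that are fully on or fully off and past their age limit."""
    stale = []
    for flag in flags:
        if flag.permanent:
            continue
        settled = flag.rollout_percentage in (0, 100)
        too_old = today - flag.created > timedelta(days=max_age_days)
        if settled and too_old:
            stale.append(flag.key)
    return stale


# Hypothetical inventory; in practice this would come from your flag platform.
inventory = [
    FlagRecord("new-dashboard", date(2024, 1, 10), 100),
    FlagRecord("grant-tracking", date(2024, 5, 1), 50),
    FlagRecord("checkout-kill-switch", date(2023, 6, 1), 100, permanent=True),
    FlagRecord("old-banner-test", date(2024, 2, 1), 0),
]
print(stale_flags(inventory, today=date(2024, 6, 1)))  # ['new-dashboard', 'old-banner-test']
```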

Also, failing to monitor key performance indicators and health metrics during a rollout can mask underlying problems and negatively impact user experience, even when a feature appears successful in the short term.

We try to ensure a smooth feature/test rollout on several levels.

First of all, on a technical and quality assurance level. Once the test is developed we always proceed with the code review and unit test to ensure the functionality of our code.

The next thing that we do is the so-called exploratory testing with native devices. In our opinion, nothing can replace testing by a human when it comes to identifying some UX issues. Once the test is “tested” and launched, we do a go-live monitoring.

Usually, it includes steps like checking the code environment, checking the console, and making sure that all the metrics are firing correctly. We also create the “Error goals” as metrics based on the “try-catch” method — it helps us identify that the elements from the test are displayed and function correctly.

Secondly, on the metrics level. Of course, we look at the business metrics that help us see how our feature or test affects the business performance. Additionally, we often try to roll out a non-inferiority test experiment before we launch the new feature. It helps us answer the question of whether the new feature is actually not ruining some business metrics and, if yes – to what extent.

Anastasia Shvedova and Michael Klöpping
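For the non-inferiority check mentioned above, one common approach for conversion rates is a one-sided z-test against a pre-chosen margin. This sketch uses the normal (Wald) approximation with unpooled variances; the counts and the 0.5 percentage-point margin are made-up illustrative values, and choosing the margin is a business decision rather than a statistical one.

```python
from math import sqrt
from statistics import NormalDist


def non_inferior(conv_c: int, n_c: int, conv_t: int, n_t: int,
                 margin: float, alpha: float = 0.05) -> tuple[bool, float]:
    """One-sided non-inferiority z-test for a difference in conversion rates.

    H0: p_t - p_c <= -margin  (treatment is worse by at least the margin)
    H1: p_t - p_c >  -margin  (treatment is not meaningfully worse)
    Returns (reject_H0, lower_bound), where lower_bound is the one-sided
    (1 - alpha) lower confidence bound on p_t - p_c.
    """
    p_c, p_t = conv_c / n_c, conv_t / n_t
    diff = p_t - p_c
    se = sqrt(p_c * (1 - p_c) / n_c + p_t * (1 - p_t) / n_t)
    z_crit = NormalDist().inv_cdf(1 - alpha)
    lower_bound = diff - z_crit * se
    # Equivalent to (diff + margin) / se > z_crit.
    return lower_bound > -margin, lower_bound


ok, lower = non_inferior(conv_c=800, n_c=20_000, conv_t=790, n_t=20_000, margin=0.005)
print(ok, round(lower, 4))
```

If the lower bound stays above minus the margin, the data are consistent with the new feature not harming conversion by more than the tolerated amount.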

How Do Rollouts Work in Convert Experiences?

This video shows how Convert’s Fullstack Rollout feature enables controlled feature releases through:

- Easy activation of features by changing rollout status

- Immediate deployment of new features to all users

- Simple and controlled feature management, allowing for gradual rollouts adjusting traffic allocation in the dashboard.

Feature Flags vs Feature Testing

Feature flags and feature testing both support software development, but they’re not the same thing.

Feature flags are conditional logic statements embedded in the code to switch specific functionalities on and off during runtime, without redeploying code.

Feature testing encompasses the methods and processes used in evaluating the performance, stability, and user acceptance of new features—before or during rollout.

While feature flags can be used to ‘test’ the impact of having vs not having a feature (on vs off), feature testing goes wider, enabling experimenters to vary certain feature parameters, one at a time, to understand user preferences and monitor the impact of different configurations on predetermined KPIs.

To tie it all together, Andrew Brimble describes how Specsavers use feature flags, rollouts, and feature testing to optimize their single page application:

Specsavers utilizes feature flagging within their in-house booking app, where customers book sight and hearing appointments at one of our many high street stores. This highly optimized single page app is responsible for over 80% of booked appointments. Its value to the business commands a rigorous approach to experimentation. For non-trivial optimizations or new feature roll-outs, Specsavers have chosen to integrate the experimentation within the product engineering teams. Oftentimes, client-side testing cannot achieve what is needed on the back end to achieve the desired change. The 3 main benefits have been:

1. Minimise code error: No conflicting code injected into the browser, follows standard development process, including extensive testing and release management.

2. Experiments can run in multiple markets: Our global codebase allows the release of experiments worldwide.

3. ‘Winning’ variants can be rolled out with ease: Usually the removal of the feature status logic is all that is needed.

With reference to point 3 above, you may decide that front-loading development of an un-tested feature is too costly. When a minimum viable product is tested more effort is needed following the experiment. This problem is not exclusive to feature flag testing, and the MVP code may not always be thrown away entirely.

Andrew Brimble

In a Nutshell

Feature flags and rollouts may look like mere safety nets, but in the hands of the right product team, they’re true catalysts for product-led growth. They enable faster, safer releases, targeted user feedback, and data-driven optimizations that help you ship your most refined features.

Master these tools and the different roles they play to build products that truly resonate with your users.

Written By

Uwemedimo Usa

Edited By

Carmen Apostu