What Is Feature Testing in Experimentation (And How You Can Do It, for Free)

Feature testing enables you to optimize features by comparing variations, gathering user feedback, and making data-driven decisions on which versions to deploy.

Key points:

- Feature testing compares feature variations to determine which performs best based on user experience and business metrics.

- CROs are just beginning to expand their business impact deeper into product roadmaps and development processes.

- Feature testing empowers teams to validate assumptions, gather user feedback, and optimize features before full-scale rollout, minimizing risk and enhancing product quality.

- Convert offers a user-friendly platform for conducting feature tests, and you can get started for free today.

The future of experimentation is product.

Experimenters have long focused on optimizing conversion rates for marketing. But the game is changing, and they’re breaking new ground.

Ruben de Boer, Lead Conversion Manager at Online Dialogue, and John Ostrowski, Director of Product and Experimentation at Wise Publishing, are witnessing this shift firsthand.

Some CROs are now expanding their influence across the entire customer journey and playing pivotal roles in product optimization.

John dubbed them ‘conversion product managers’ (spicy take!). These professionals ideate, validate, build, and optimize features and products, using testing as a critical tool in the discovery and delivery tracks.

Although this shift is very young at this point, it presents an opportunity for CROs to expand their horizons and assume more strategic influence within their organizations.

As Ruben and John highlight in their discussion, feature testing (or feature experimentation) is the linchpin in the arsenal of these professionals.

What Is Feature Testing? How Is It Different From Feature Testing in Software Development?

Depending on how you use the term ‘feature testing,’ it can create two somewhat different images in your mind.

You could think of it as ensuring features work as intended—bug-free and smoothly handling production load. For example, “I programmed this feature to print ‘Hello World.’ Does it print ‘Hello World’?” That’s the quality assurance angle.

On the other hand, we have feature testing (or feature experimentation) for product optimization. In that context, we’ll define feature testing as a process of comparing variations of a feature (such as with A/B/n testing) to see which version performs best according to a predetermined user experience or business metric.

This sounds like there’s a divide between the two connotations of feature testing (one for software developers and the other for product optimizers), but that’s not the entire story:

There is a significant difference between feature testing in product experimentation and software development, yet a fundamental similarity. You see, testing is testing, but the scope and objectives of each type here differ, which is why the execution often falls to different experts.

In product experimentation, the focus is on validating the impact of a feature on user behavior and business metrics through live A/B testing, and a CRO typically manages these tests. In software development, by contrast, the objective is to ensure the feature functions correctly within the application, with a focus on technical stability and performance, and that job falls to QA specialists. Despite these differences, the core activity of testing (ensuring features meet their intended criteria) remains consistent.

The product experimenter’s approach to feature testing, which we’re focusing on in this article, helps validate assumptions, gather feedback, and measure a feature’s usability, performance, and accessibility in real production environments. That’s a powerful tool for developers, product managers, UX designers, and even marketers.

5 Things Product Experimenters Do With Feature Testing (That You Can Too)

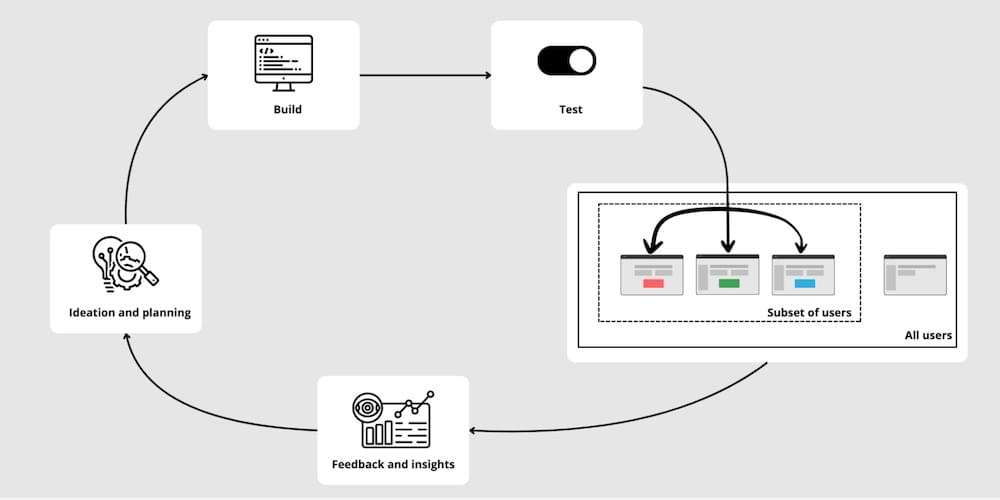

Feature testing, when combined with robust feature management (often using feature flags and rollouts), enables product experimenters to do the following:

1. Make product development decisions

With sound feature tests, product teams get real-world user data and measured impact to inform product decisions. David Sanchez del Real articulates it best:

Whenever we’re building products, we are faced with constant decisions.

Wherever there are decisions to be made, there are assumptions.

Since experiments are simply a robust way to validate (or invalidate) assumptions, they are a key tool for decision makers.

When building products, these decisions come in many different flavors, from exploring completely new ideas to getting an early understanding of their viability before resources are committed to them (exploring) to refining the execution of existing features (exploiting) or even measuring things that couldn’t be measured in any other way.

And as decisions come in many flavors, so do experiments: There are many ways to experiment and A/B tests are only one.

Working in digital products presents the advantage of making A/B testing very accessible, but you should always choose the right tool for the right job.

David Sanchez del Real, Head of Optimisation at AWA Digital

Testing multiple variations of a feature allows your team to validate the most effective design, configuration, or user experience to develop.

Alternatively, testing a single variation of a new feature before releasing it to all users—a practice known as gradual rollout—ensures that the feature performs well in real-world conditions without exposing the entire user base to potential risks.
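
A gradual rollout can be sketched in a few lines. The example below is a minimal illustration in Python, not Convert's SDK: the function names, the `sticky-search-bar` key, and the choice of SHA-256 hashing are all assumptions made for the example. It hashes each user ID to a stable position between 0 and 100 and enables the feature only for users who fall below the current rollout percentage.

```python
import hashlib


def rollout_bucket(user_id: str, feature_key: str) -> float:
    """Map a user to a stable position in [0, 100) for this feature."""
    digest = hashlib.sha256(f"{feature_key}:{user_id}".encode("utf-8")).hexdigest()
    # Use the first 8 hex digits (32 bits) as an integer, then scale to [0, 100).
    return int(digest[:8], 16) / 0x100000000 * 100


def is_enabled(user_id: str, feature_key: str, rollout_percent: float) -> bool:
    """Return True if the user falls inside the current rollout percentage."""
    return rollout_bucket(user_id, feature_key) < rollout_percent


# Hypothetical user IDs, only to show how exposure grows with the percentage.
users = [f"user-{i}" for i in range(10_000)]
at_10 = {u for u in users if is_enabled(u, "sticky-search-bar", 10)}
at_50 = {u for u in users if is_enabled(u, "sticky-search-bar", 50)}
print(len(at_10), len(at_50))
```

Because each user's position never changes, raising the percentage from 10 to 50 only adds users. Nobody who already had the feature loses it.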

What’s a more thoughtful and safer way to know what works best than putting the feature in the hands of your users?

An iterative feature testing process brings you closer to your desired outcomes faster.

2. Mitigate risks

Feature flags, a core component of feature testing, enable developers to release new features as reversible changes instead of permanent ones. This allows for continuous delivery and reduces the risk of disrupting the user experience if things go sideways.

If issues arise due to a newly released feature, feature flags act like a switch to turn it off. This reverts the software to its previous state and minimizes the impact, just like CTRL+Z (or CMD+Z for Mac people) for feature releases.
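
To make the "switch" idea concrete, here is a rough sketch in Python. The `FlagStore` class, the `render_search_bar` function, and the flag key are hypothetical stand-ins for whatever flag service and feature your team uses. They are not part of any vendor's API.

```python
from typing import Dict


class FlagStore:
    """In-memory stand-in for a remote flag service (hypothetical)."""

    def __init__(self) -> None:
        self._flags: Dict[str, bool] = {}

    def set_flag(self, key: str, enabled: bool) -> None:
        self._flags[key] = enabled

    def is_on(self, key: str) -> bool:
        # Unknown flags default to off, so the old behavior is the safe fallback.
        return self._flags.get(key, False)


def render_search_bar(flags: FlagStore) -> str:
    # Both code paths ship together; the flag decides which one runs.
    if flags.is_on("sticky-search-bar"):
        return "sticky search bar"
    return "regular search bar"


flags = FlagStore()
flags.set_flag("sticky-search-bar", True)
print(render_search_bar(flags))   # new behavior is live

flags.set_flag("sticky-search-bar", False)  # the "undo": no redeploy needed
print(render_search_bar(flags))   # back to the previous behavior
```

The old code path stays in place until the flag is retired, which is what makes the rollback instant.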

Tim Donets gives us an example of how he has used feature tests to manage the risk of significantly impacting website performance:

Feature testing came in handy when we needed to test an alternative logic of a feature. Sometimes, entirely different backend services would be used, or a significantly changed flow using the same service.

For example, I once managed a migration from an in-house piece of software handling engagement elements on a website (think banners, pop-ups) to a third-party software. Using feature testing we were able to test whether the underlying logic of all engagement elements would work on a set of real users and if using a 3rd party would cause any significant drop in the website performance.

Tim Donets, Fractional Head of Product

3. Validate feature fit

This is one instance where feature testing doesn’t involve comparing versions of a feature.

Here, the feature might be released in beta to a segment of users. The product experimenter then tracks guardrail metrics such as usage, user engagement, or revenue generated by the feature.

This process helps determine if the new feature is a valuable addition and a good fit for the product. But it can also reveal usability and functionality issues, informing decisions about whether to release the feature or refine it further.

An added advantage of this use case for feature testing is that it ties feature releases to measurable outcomes, demonstrating the product team’s impact and guiding future development priorities.

4. Explore feature-product relationships

Feature tests are used to explore complex relationships between different product variables and features.

When properly executed, these controlled experiments use random assignment to separate the feature’s effect from background noise, helping you isolate cause-and-effect relationships between the changes introduced by the feature you’re testing and your product as a whole.

For example, let’s say you’ve added a search bar at the top of the screen. You’d want to know how this impacts user navigation, workflow speed, and feature discovery.

With insights like these, imagine the possibilities for improving your product.

5. Gather user feedback

A key element in the iterative feature testing process is collecting insights and feedback.

Feature testing provides an excellent opportunity for product experimenters to collect valuable user feedback throughout the development process of a specific feature, which aligns perfectly with the principles of the lean methodology.

Let’s face it, features alone rarely create loyal customers. Instead, we need to shift the focus to understanding the “jobs to be done” for our user base.

Deeply empathize with their struggles, aspirations, and unmet needs.

This customer-centricity becomes the guiding light for innovation, ensuring we build products that solve real problems, not just add bells and whistles.

Gopalakrishnan V., Product Manager at App0, in a LinkedIn post

This user feedback turns into qualitative insights that can help:

- Validate assumptions

- Identify areas for improvement

- Guide decisions about feature improvement

- Suggest new feature ideas to explore

Using user feedback, developers can release new versions of the feature to test as they gradually approach the most user-centric variation.

The core benefit of feature testing for product teams is moving from intuition-based development to a hypothesis- or data-driven approach. Here, your decisions take root in real-world user data and measured impact.

What this means for your product is:

- More efficient use of development resources

- Faster time-to-market for the best-performing features (according to the metrics that matter to the business)

- A continuous cycle of product optimization based on your users’ needs and the overarching business goals

How to Conduct Feature Testing in Convert Experiences

If the five things product experimenters do with feature testing have gotten you excited, here’s how you can start feature testing in Convert Experiences today.

Step 1: Set Up and Initialize

Integrate Convert’s JavaScript SDK into your application. It supports environments like Node.js. Initialize the SDK using the configuration settings on your Convert dashboard. This helps you manage feature flags and track your feature experiments.

Step 2: Define Locations and Features

Next, specify the product areas or locations in your application where you’ll conduct the feature tests. For example, you may want to target the search bar in the dashboard area. Create the features to be tested and associate them with the defined locations.

Step 3: Create Experiments and Variations

In Convert, set up your experiment by defining different variations of the feature. For instance, you can test a sticky search bar against a non-sticky search bar. You can customize these variations with specific logic to create unique experiences for different user segments.

Step 4: Set Up Event Listeners

Configure event listeners to track user interactions and conversions. These listeners will monitor key events such as clicks or page views, ensuring that your feature test captures the right metrics.
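
As a rough picture of what an event listener does in a feature test, here is a small publish/subscribe sketch in Python. It is a generic illustration rather than Convert's SDK: `EventBus`, `record_conversion`, and the `search_submitted` event name are invented for the example.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List, Tuple

Listener = Callable[[Dict[str, str]], None]


class EventBus:
    """Tiny publish/subscribe helper (hypothetical, not a vendor API)."""

    def __init__(self) -> None:
        self._listeners: DefaultDict[str, List[Listener]] = defaultdict(list)

    def on(self, event_name: str, listener: Listener) -> None:
        self._listeners[event_name].append(listener)

    def emit(self, event_name: str, payload: Dict[str, str]) -> None:
        # Events nobody subscribed to are silently ignored.
        for listener in self._listeners[event_name]:
            listener(payload)


# Conversions counted per (variation, event name).
conversions: DefaultDict[Tuple[str, str], int] = defaultdict(int)


def record_conversion(payload: Dict[str, str]) -> None:
    conversions[(payload["variation"], payload["event"])] += 1


bus = EventBus()
bus.on("search_submitted", record_conversion)

bus.emit("search_submitted", {"user_id": "user-1", "variation": "sticky", "event": "search_submitted"})
bus.emit("search_submitted", {"user_id": "user-2", "variation": "non-sticky", "event": "search_submitted"})
bus.emit("page_view", {"user_id": "user-3", "variation": "sticky", "event": "page_view"})
print(dict(conversions))
```

Only the events you subscribe to are counted, so choosing which listeners to register is effectively choosing which metrics your test can report on.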

Step 5: Run the Experiment

Check that everything is configured as needed and launch. Convert uses a bucketing mechanism, where users are randomly yet deterministically assigned to different variations based on their specific context.
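
“Randomly yet deterministically” can sound contradictory, so here is one common way to get both properties: hash-based bucketing. This is an illustrative sketch in Python and an assumption about the general technique, not a description of Convert's actual algorithm. The function name, experiment key, and traffic weights are made up for the example.

```python
import hashlib
from collections import Counter
from typing import List, Tuple


def assign_variation(user_id: str, experiment_key: str,
                     variations: List[Tuple[str, float]]) -> str:
    """Pick a variation for a user; weights are traffic shares summing to 1.0."""
    digest = hashlib.sha256(f"{experiment_key}:{user_id}".encode("utf-8")).hexdigest()
    point = int(digest[:8], 16) / 0x100000000  # stable value in [0, 1)
    cumulative = 0.0
    for name, weight in variations:
        cumulative += weight
        if point < cumulative:
            return name
    return variations[-1][0]  # guard against floating-point rounding


variations = [("non-sticky", 0.5), ("sticky", 0.5)]
counts = Counter(
    assign_variation(f"user-{i}", "search-bar-test", variations)
    for i in range(10_000)
)
print(counts)  # close to an even split across hypothetical users
print(assign_variation("user-42", "search-bar-test", variations))  # same answer on every call
```

The hash spreads users across variations in a way that looks random, while the same user ID and experiment key always land in the same bucket, so a returning user keeps seeing the same variation.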

Step 6: Monitor and Analyze Results

As the experiment runs, Convert tracks user interactions and conversions. The results appear as detailed analytics in the Reports tab.
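
If you want a feel for the kind of comparison that sits behind a results report, the sketch below runs a standard two-proportion z-test in Python. It is a generic textbook method shown under assumptions, not necessarily the statistics Convert uses, and the visitor and conversion counts are invented for illustration.

```python
import math
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No variation in the data at all, so there is nothing to test.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up numbers: 5,000 users per variation.
z, p_value = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the difference between variations is unlikely to be noise alone, but it says nothing about whether the lift is large enough to matter to the business.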

How Do Different A/B Testing Tools Tackle Feature Testing?

Feature experimentation is offered by some A/B testing tool vendors, either as a separate product or functionality within their experimentation platforms. Let’s learn about how they handle feature testing.

Optimizely

Optimizely supports feature testing through their Feature Experimentation product, which uses feature flags to power A/B tests on deployed features.

If you were to run a feature test with Optimizely, you’d first install the Optimizely Feature Experimentation SDKs in your code (available for both server- and client-side). Next, you’d define feature flags to run experiments, choose your target audience, and select the events to track.

VWO

VWO tackles feature testing through its Server Side product. You’d have to install the VWO SDK into your application to run a feature test with it.

Then, on the VWO Server Side UI, you can define a project and start a new feature test campaign. From there, you define the feature variations, goals and metrics, traffic allocation, and other advanced options.

AB Tasty

AB Tasty handles feature testing via Flagship, a feature flagging platform for testing features in production.

The process starts with integrating the API/SDK into your product codebase. Once setup is done, you can define flags for toggling features on and off. This also tracks feature performance.

Next, set up the metrics for tracking performance and configure targeting keys. You’re ready to test.

Kameleoon

Kameleoon has a feature experimentation product that goes by the same name. Like other tools, it uses feature flags to control feature visibility, variations to test different versions of features, goals to measure performance, and experiments to compare those performances.

Setting up feature management and experimentation in Kameleoon starts with installing the SDK in your app. The remaining steps:

- Creating feature flags

- Targeting and segmenting your audience

- Setting goals

- Monitoring performance

- Running the feature experiment

All steps are performed within the Kameleoon product interface.

Feature Testing For Free

How much does feature testing cost? And can you run feature tests for free?

The short answer is yes.

For the long answer, “free” means various things depending on where you’re looking.

If you’re looking at Kameleoon, you’ll find that there’s a free trial for feature experimentation. After the trial, a paid subscription starts at $35k per year. You’ll have to contact sales to get a custom quote.

With AB Tasty, the price is also gated. You have to fill out a form to contact sales before you find out what feature testing would cost you. One unverified source states the fee for AB Tasty starts at $15k a year, and another goes as high as $60k. There’s a free trial available, though.

With Optimizely, we’re talking about annual payments of $36k, according to SplitBase, and $49k, according to our findings.

VWO provides feature testing capabilities in the Pro and Enterprise plans of the ‘VWO Testing – Server Side’ product. It is priced separately from other VWO products and costs $16,308 a year for 50k unique tested users, after a free trial.

Then, there’s Convert Experiences Full-Stack. You get a 15-day free trial, and after that, pricing begins at $399 per month. When billed yearly, feature testing with Convert will only cost you $2,389 for 100k monthly traffic on the Entry Plan. On top of that, full-stack experimentation comes with other web experimentation features in your plan because we don’t run it as a separately billed product.

| Feature testing tool | Annual prices |
|---|---|
| Kameleoon | Starts at $35K |
| AB Tasty | Starts at $15K* |
| Optimizely | Starts at $36K* |
| VWO | Starts at $16.3K |
| Convert | Starts at $2.4K |

*rumored prices

Wrapping Up on Feature Testing (and Avoiding a Common Pitfall)

As full-stack experimentation becomes more accessible and CRO skills continue to extend beyond marketing and permeate the entire organization, we’ll see more feature testing.

Product experimenters will validate feature-product fit, mitigate risks, make product development decisions, gather user feedback, and more with feature experiments.

Beyond supporting data-driven product growth and optimization decisions, these environments will help the culture of experimentation thrive.

However, feature testing needs to be managed carefully. Teams risk creating technical debt when feature flags used for feature testing aren’t removed after they’ve served their purpose. This leads to a cluttered codebase that makes further development and maintenance difficult.

You can prevent this by setting clear rules about feature flag lifecycles (when to create and retire flags) and removing flags immediately after completing experiments.
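
One lightweight way to enforce a flag lifecycle is to record a retirement date when each flag is created and review the list regularly. The Python sketch below assumes a simple in-house registry. The `FlagRecord` fields, team names, and dates are hypothetical and only meant to show the idea.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class FlagRecord:
    key: str
    owner: str
    created: date
    retire_by: date               # agreed removal date, set when the flag is created
    experiment_done: bool = False


def flags_to_remove(flags: List[FlagRecord], today: date) -> List[str]:
    """Flags whose experiment has finished or whose retirement date has passed."""
    return [f.key for f in flags if f.experiment_done or today > f.retire_by]


# Hypothetical registry entries.
registry = [
    FlagRecord("sticky-search-bar", "growth-team", date(2024, 1, 10), date(2024, 3, 1), experiment_done=True),
    FlagRecord("new-onboarding", "product-team", date(2024, 2, 1), date(2024, 2, 20)),
    FlagRecord("beta-reports", "data-team", date(2024, 3, 1), date(2024, 6, 1)),
]
print(flags_to_remove(registry, today=date(2024, 3, 15)))
```

Running a check like this in a scheduled job or a CI step turns the cleanup rule into a recurring reminder with a named owner for each flag.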

Written By

Uwemedimo Usa

Edited By

Carmen Apostu