The Quiet Superpower of Iterations: From Spaghetti Testing to Strategic Experimentation

Let’s start with a bit of a mind-bender.

You know iterative testing adds structure to your experimentation program — at least on paper. You also probably know iterative testing makes you dress your testing program in adult pants and challenges you to ask tough questions like:

Is there a structure for how we’re executing things? And is there a grander plan—or actual strategy—propelling our experimentation or are we just indulging our egos?

Take a look at Erin Weigel’s post:

Unfortunately, too many programs view hypotheses as win/loss statements. They formulate a prediction, seek validation, and move on to the next. This pattern shows our human inclination to make predictions, doesn’t it?

However, we must remember that hypotheses serve as vehicles to transform research insights into testable problem statements — not as predictions to prove or disprove.

We’re not here to argue the nitty-gritty of how various experimentation programs use hypotheses. (If that piqued your interest, read ‘Never start with a hypothesis’ by Cassie Kozyrkov.)

Instead, we’re setting the stage for a deep dive into iterative testing: one that unpacks this rather loaded concept and shows you how to use it in your experimentation efforts.

Between insight and action is the frenetic (yet mundane) work of testing.

Your formulated hypothesis and subsequent prediction are just one of many ways in which you choose to probe the validity of the connection you think exists between elements (in your control) and traffic behavior (beyond your control).

This insight, gathered from several sources of data and backed by rigorous research, highlights a problem—a conversion roadblock, if you will. In response, you introduce an intervention.

However, while the conversion roadblock remains fixed, the nature of the solution varies. It might depend on:

- The psychological principles that inform the kind of influence we wish to exert: A fantastic example of this is the Levers Framework™ by Conversion.com. Anyone who has run any test or even thought through problems and solutions in business knows that you can manipulate cost to temporarily improve most superficial metrics. But if you are interested in profits (which you absolutely should be), it’s not the lever you should pull.

- The research and subsequent insights that revealed the problem may offer clarity around the kind of lever you should be pulling. If you get 35 exit-intent survey responses along the lines of “this is too expensive” but your competitors are selling their legitimately more expensive products like hotcakes, maybe work on Trust and Comprehension instead. This will open a can of worms around your viable market and positioning. But hey, the journey to being better demands that some foundations be dismantled.

- Finally, a solution also depends on how your chosen influence lever is presented to the audience. As Edmund Beggs says:

So particularly when you’re looking at the early stages [of testing a lever] and how you should interpret what’s going on with each lever, mattering matters most: it’s most important that you make a difference to user behavior.

Edmund Beggs, Conversion Rate Optimisation Consultant at Conversion.com

Here, something like the ALARM protocol is needed. It simplifies the multi-layered nature of execution by presenting aspects like Audience, Area, and Reasons the Test May Lose as a check to:

- Ensure that the best possible execution is deployed.

- Honor the insight; understand that a win, a loss, or even an inconclusive call doesn’t negate the value of the insight. The problem remains, the journey continues.

Iterative testing is important because it shows why A/B testing is not a one-time solution. It is, instead, a continuous improvement process that grows and evolves as your business grows and evolves. Having a test ‘winner’ does not always mean that it would be a winner in every circumstance, and external factors (e.g., market conditions or time of year) should always be considered for the impact they will have on test results. Let’s think of how much the pandemic shaped the market for so many industries. A ‘winning’ test pre-pandemic (particularly the case for message testing) may not have had the same result during, or even after. That’s why it is important to continuously and gradually test, as well as re-test in different market circumstances.

Loredana Principessa, Founder of MAR-CO

This takes the sting away from tests that don’t light up the dashboard with green. When you see things clearly, you realize the true value of testing is learning from multiple tests, thousands of tests over the years.

While you may never completely mitigate every problem for every shopper or buyer, if your testing leads to better-informed decisions and fuels your next iteration, you’re golden.

The A/B Testing Equivalent of Retention: Iterations

So, what is iterative testing anyway?

Iteration is the process of doing something again and again, usually to improve it. As Simon Girardin cleverly points out with a sports analogy, even hockey players iterate. After all, practice makes perfect.

Just as a hockey player fires shot after shot, each one an improvement on the last, iterative testing leverages insights generated from previous tests to inform the experimentation roadmap moving forward.

And it comes with a delightful array of benefits.

Fast Paced, (Often) Low Effort

Balancing big ideas and pre-planned experiments with fast-paced iteration should be a part of any mature testing program. The latter can reveal new customer insights that can help increase conversion rates for relatively low effort. So not only is ROI high but the insights we glean can be invaluable and used in other marketing channels as well. Iteration can be thought of as a treasure map where you get a reward at each checkpoint, not only at the X.

Steve Meyer, Director of Strategy at Cro Metrics

It is, in a sense, the red-hot retention concept applied to experimentation. We don’t yet have a dollar value to attribute to the ease of iterative testing, but it does increase your chances of hitting on an impactful intervention.

Big A/B test wins for us are most commonly an 8-12% lift. When we are iterating we usually try to squeeze out an extra 3-5% lift on top of that. Our CRO programs are narrowly focused around one problem area at a time. So it doesn’t sound like much, but an incremental 5% lift on the most detrimental metrics hurting a site can be massive.

Sheldon Adams, Head of Growth at Enavi
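To see why a few extra points matter, here is a minimal Python sketch that stacks an iterative lift on top of an initial win. It assumes both lifts apply to the same metric and compound multiplicatively, which is a simplification; the 10% and 5% inputs are illustrative picks from the ranges in the quote above, not reported results.

```python
def stacked_lift(*lifts: float) -> float:
    """Combine sequential relative lifts (0.10 == +10%), assuming they compound multiplicatively."""
    total = 1.0
    for lift in lifts:
        total *= 1.0 + lift
    return total - 1.0


# Illustrative values within the ranges quoted above (not measured results).
initial_win = 0.10     # a "big" win in the 8-12% range
iteration_gain = 0.05  # an extra 3-5% squeezed out by iterating

combined = stacked_lift(initial_win, iteration_gain)
print(f"Combined lift: {combined:.1%}")  # Combined lift: 15.5%
```

On a hypothetical 2% baseline conversion rate, that combined lift would take you to roughly 2.31%.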

And all this accelerated progress happens with less effort, especially when compared to spaghetti testing — a strategy-less approach where you test various changes to see what sticks.

With iterative testing, you’ve already laid down your markers.

You’ve established a clear understanding of the parameters to monitor when you introduce a specific type of influence to the audience struggling with a particular roadblock. You know which metrics to track upfront. And crucially, you’re equipped to rapidly fix any mistakes, so your interventions matter more to your audience.

For those wary of the rut of iteration, fear not—we will address how to move on too. We don’t want “attached” A/B testers! 😉

And for those who might feel that iterative testing is simply redundant, building on the same insight over and over, it’s time for a shift in perspective. Iterations, when you look at them the way Shiva & Tracy do, are user research. They don’t just fuel incremental improvements; they have the power to shape blue-sky thinking.

Iterative Testing = User Research

To grasp this second benefit, spare 26-odd minutes of your life. Don’t worry, this isn’t a dry podcast. You will laugh and nod your way to a deep understanding.

Here are Tracy and Shiva discussing the significance of iterative testing over spaghetti testing:

In this episode, they talk about how research is the basis of iterative testing, enabling a profound understanding of why certain outcomes occur. When you turn your findings into problem statements, you create a path to subsequent iterations and test ideas.

With the iterative approach, your mindset towards win and loss changes. You find there’s always something to learn from any test outcome to support informed decision-making. And you see that true failure happens only when you give up.

And that’s the idea they support: a persistent and ongoing process that leads to continuous user research and refinement.

So, you can’t iterate if you don’t have a hypothesis rooted in data. If your approach lacks depth—say, you’re hastily ripping banners off of your website just because your competitors are doing the same—you’ll never have the data or approach to iterate. Watch Jeremy Epperson’s take on iterative testing here.

Commitment to iterative testing is thus a commitment to stop looking only at outcomes.

It is a commitment to learning from the actual test design and implementation too, and treating the experiments themselves as a form of user research.

Listen to Tracy Laranjo’s process where she walks you through how she reviews screen recordings of test variants in Hotjar. See how she looks for what could have resulted in an emphatic response (win or loss) or a lukewarm reaction and how she envisions pushing a particular implementation further.

Even the most well-researched and solid ideas don’t always result in a winning test. But that doesn’t mean that the hypothesis is flawed; sometimes it’s merely the implementation of the variation(s) that didn’t quite hit the mark. The good news is that we learn from every single test. When we segment the data and look at click/scroll maps, we get clues into the reason why the variation didn’t win, which becomes the basis for our next tests. Sometimes a small change from a previous test is all it takes to find the winning variation. Furthermore, iterations usually take less time to develop and QA since much of the work can be reused, so an iterative testing approach also helps maintain a high volume of tests.

Theresa Farr, CRO Strategist at Tinuiti

In essence, iterative testing is very much like machine learning. Each time you refine a component of your persuasion or influence lever, fine-tuning its execution, you’re ‘feeding the beast’, sharpening the company-wide gut instinct that sits atop the experimentation learning repository.

Iterative Testing Makes You Action-Focused

Insights and test outcomes, on their own, aren’t actions.

Speero highlights this distinction in their Results vs Action blueprint. There, they emphasize that the true value of testing lies in the subsequent actions you take. That’s when experimentation can blossom into the robust decision-support tool it is meant to be.

As Cassie recommends, it is best to have a clear idea of what you will do if the data fails to change your mind (in which case you stick to your default action), and what you will do if the data successfully updates your beliefs.

When you adopt the mindset of iteration, you have to plan at least two to three tests ahead.

You have to get into the habit of looking beyond the primary metric and choosing micro-conversions that reflect nuances of behavioral shifts. Such strategic foresight transcends the simplistic, binary mindset of ‘implement intervention’ versus ‘don’t implement intervention’.

The more thought you put into what comes after the admin and academia of running tests, the more tangible the impact on business KPIs and the organization as a whole. That bodes well for buy-in, for demonstrating the value of experimentation, and for everything else a program lead worries about.

Shiva connects the dots here:

Iterative testing is one of many things that separates junior CROs from senior CROs. Iterative testing usually is the genesis of forward-facing, strategic thinking. Spaghetti testing (throwing sh*t at the wall and seeing what sticks) generally ignores this kind of strategic thinking. Iterative testing is something that requires research.

Why is research important? Back to the ‘strategic thinking’ — if you see something won, but you don’t have a concrete hypothesis backed in data, nor are you tracking micro conversions to understand behavioral shifts better, you are basically stuck with “Ok, it won. What now?”

Research leads to better hypotheses, which leads you to a better understanding of the ‘why’ something won, rather than simply knowing if something won or not. Which leads to problem-focused hypotheses. These are critical.

Having research is ‘easy’ (meaning conceptually easy to capture at least). Actually doing something productive with that research can be more difficult. Moving your research into problem statements is a very easy vehicle to move your research into testable hypotheses.

But also, that problem statement is crucial in knowing HOW to iterate if something wins/loses (along with a ton of other benefits). If you’ve done the two above points, you should have a general idea of what you expect to happen in a test, with a plan for tracking behavioral shifts.

Before you launch your test, you should consider “what happens if the hypothesis is correct” and “what happens if the hypothesis is proven incorrect.” This is where the ‘iteration’ step comes in. You are planning the next 2-3 tests based on the research, user behavior, and some general idea of things you believe will happen (based on that research). This is why planning for iterative testing can be so much harder without research (and spaghetti testing). If you run one test and don’t really know where else to go from there, ‘iteration’ isn’t something you’d be able to do more of.

Iterative Testing Minimizes Risk

It’s tempting to think of iterative testing as just another method for experimentation, but it goes beyond that. It’s a strategic approach that also minimizes risk in testing.

Iterative testing takes out-of-the-box, disruptive concepts and transforms them into potential revenue levers. By embracing incremental improvements and working out the kinks, it converts roadblocks into bridges without kicking up a lot of dust.

In her insightful LinkedIn post, Steph Le Prevost from Conversion.com elaborates on this — the concept of risk profiles in experimentation:

How to Iterate on Insights and Test Results

Iterative ‘testing’ is everywhere now.

Most elite marketers in the world iterate.

To illustrate, here’s one of our favorite recent examples of iteration in action, shared publicly (Slide #10 onwards):

Excellence, it appears, rides on the back of iterations.

Yet, it raises the question: Why has iterative testing only recently become a buzzword?

Sheldon Adams offers an insightful perspective, attributing it to the ‘shiny object syndrome’:

Despite everything I said above I am extremely guilty of wanting to move on as soon as we validate a test. I think it is the novelty factor that makes it seem like the right thing to do. So I certainly understand why teams get that urge.

Sheldon Adams, Head of Growth at Enavi

So, how do you implement the iterative testing approach?

Identify Key Metrics for Effective Iteration

The more you test, the better you’ll get at iterating on insights.

Until you do, though, a framework is handy.

Here, we introduce you to Speero’s Experimentation Decision Matrix Blueprint.

With a framework like this, you’re identifying primary success metrics and outlining the actions you’ll take based on the outcomes of the test before even diving into an experiment.

It’s the type of structured approach associated with iterative testing that ensures every test has a clear purpose and isn’t run just for the sake of testing. It also ensures that these tests update your experimentation roadmap based on previous learnings.
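Here is a rough sketch of what a pre-registered decision plan could look like in code. The field names and the three-outcome split are our own assumptions for illustration, not the actual layout of Speero’s blueprint. The point is the check: the plan isn’t ready to launch until every outcome, including an inconclusive one that falls back to the default action, has an agreed next step.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Outcome(Enum):
    WIN = "win"                    # primary metric improved significantly
    LOSS = "loss"                  # primary metric worsened significantly
    INCONCLUSIVE = "inconclusive"  # no significant change detected


@dataclass
class DecisionPlan:
    """Hypothetical pre-registered plan: metrics and actions agreed before launch."""
    hypothesis: str
    primary_metric: str
    guardrail_metrics: list[str] = field(default_factory=list)
    actions: dict[Outcome, str] = field(default_factory=dict)

    def is_complete(self) -> bool:
        # Ready to launch only once every possible outcome has an agreed action.
        return all(outcome in self.actions for outcome in Outcome)

    def next_action(self, outcome: Outcome) -> str:
        if not self.is_complete():
            raise ValueError("Agree on an action for every outcome before launching.")
        return self.actions[outcome]


# Hypothetical example plan; the hypothesis and actions are illustrative only.
plan = DecisionPlan(
    hypothesis="Showing delivery dates on the PDP reduces uncertainty and lifts add-to-cart rate",
    primary_metric="add_to_cart_rate",
    guardrail_metrics=["revenue_per_visitor"],
    actions={
        Outcome.WIN: "Ship it, then iterate by testing delivery dates in the cart",
        Outcome.LOSS: "Keep control; review recordings to see how the message was read",
        Outcome.INCONCLUSIVE: "Keep control (default action); test a bolder execution",
    },
)
print(plan.next_action(Outcome.INCONCLUSIVE))
```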

Pre-Iterate Towards Success

Shiva spoke about the fact that iterative testing involves thinking two to three tests ahead. Kyle Hearnshaw adds his advanced spin to the idea:

Think of it like a decision tree: you should always have subsequent iterations planned out for every outcome of your tests. Then, you can appreciate the quantum world of possibilities we had teased in the intro.
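The decision-tree idea can be sketched as a small recursive structure. This is a hypothetical Python example, not Kyle’s or Shiva’s own tooling: each planned test holds a follow-up for every outcome, and `gaps()` flags any outcome that has no planned next test within a chosen horizon (for example, two to three tests deep).

```python
from __future__ import annotations

from dataclasses import dataclass, field

OUTCOMES = ("win", "loss", "inconclusive")


@dataclass
class PlannedTest:
    """A planned test plus the follow-up test planned for each outcome (hypothetical structure)."""
    name: str
    follow_ups: dict[str, PlannedTest] = field(default_factory=dict)

    def depth(self) -> int:
        # Number of tests along the longest planned branch, counting this one.
        if not self.follow_ups:
            return 1
        return 1 + max(child.depth() for child in self.follow_ups.values())

    def gaps(self, horizon: int) -> list[str]:
        """List 'test -> outcome' pairs with no follow-up planned within `horizon` tests."""
        if horizon <= 1:
            return []  # beyond the planning horizon, no follow-ups are required yet
        missing = [f"{self.name} -> {o}" for o in OUTCOMES if o not in self.follow_ups]
        for child in self.follow_ups.values():
            missing.extend(child.gaps(horizon - 1))
        return missing


# Hypothetical two-level plan; test names are illustrative.
plan = PlannedTest(
    "T1: add-to-cart modal with recommendations",
    {
        "win": PlannedTest("T2a: lower-priced recommendations with quick add"),
        "loss": PlannedTest("T2b: simpler modal with a single checkout CTA"),
        "inconclusive": PlannedTest("T2c: bolder visual treatment of the modal"),
    },
)
print(plan.depth())       # 2
print(plan.gaps(2))       # []
print(len(plan.gaps(3)))  # 9: each T2 test still needs three planned follow-ups
```

Running a check like this before launch surfaces the “Ok, it won. What now?” moment in advance rather than after the results arrive.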

Experimentation is human nature. And iterations mimic life and its dynamics. When you pre-iterate, you pay adequate attention to action and planning scenarios ahead of time.

And you also accept what the audience shows you — voting on your solution and its execution with their preferences.

The framework you create for the actions and iterations draws on your previous experiences. Then you make room for new data with the actual experiment outcome.

Brian Schmitt explains that when surefoot decides on the metrics to capture in a test, the team is already thinking about how to iterate on the outcome. This is mostly informed by previous test experience:

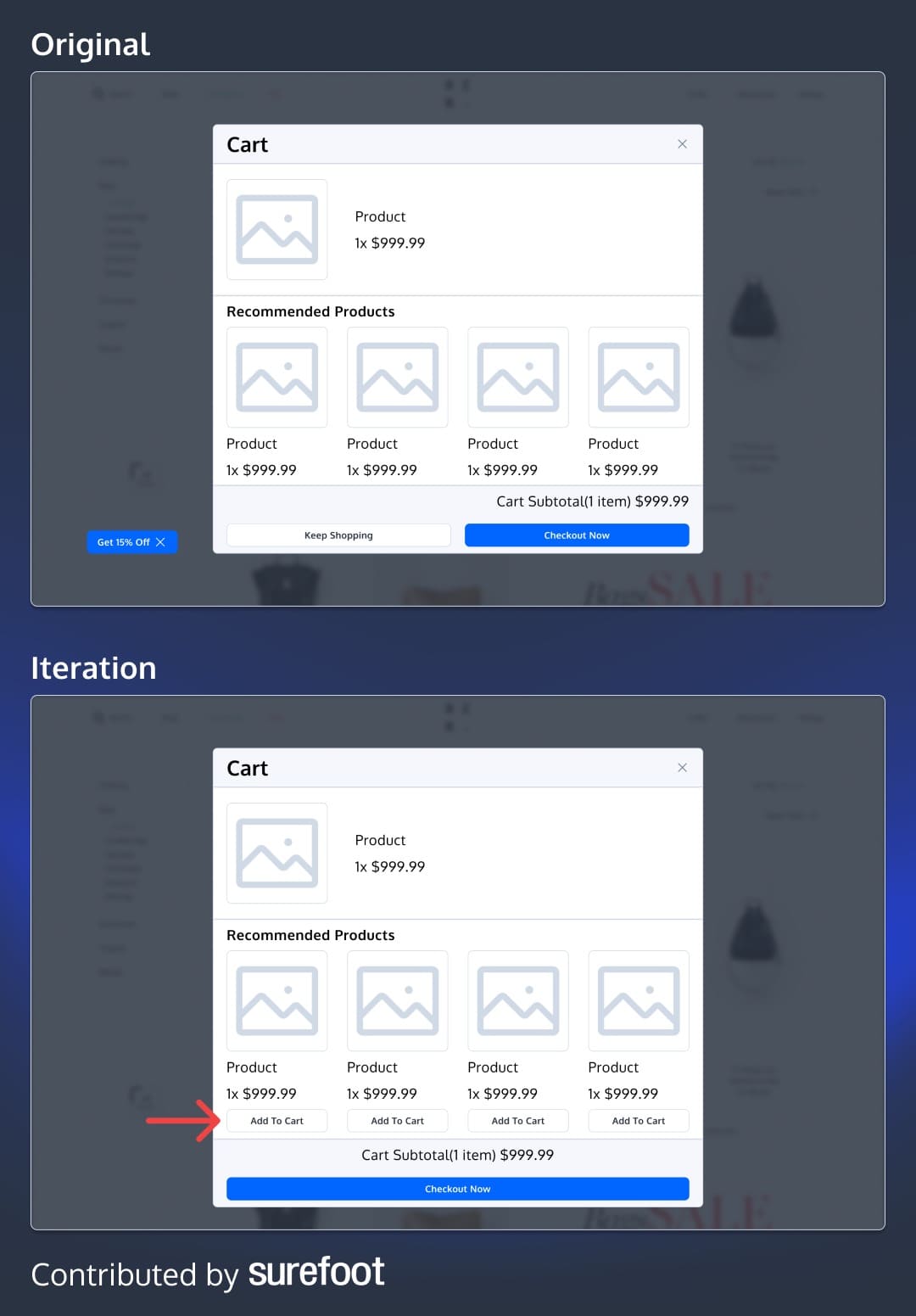

In our first test, we primarily measured the add-to-cart rate to understand the impact on user behavior after adding their first item to the cart. We were interested in finding out whether users proceeded to checkout, interacted with recommended products, clicked on the added product, decided to continue shopping, or chose to close the window. Did they click the ‘X’ or somewhere around it? From the original data, it was clear that a significant percentage of mobile users instinctively closed the window.

Analyzing the data from this test, particularly through Hotjar or any other heat mapping tool, revealed that users were not clicking as expected. This observation led us to introduce the ‘add to cart’ button to ensure users understood that the options were clickable. For this particular test, we included the ‘add to cart’ button click as a metric. Notably, there is no ‘keep shopping’ option; users simply close the window.

This is the basic rationale behind our choice. As part of our routine, we forward the test to our data analyst, Lori, for review. Lori evaluates the test, considers potential ways to dissect the data for later analysis, and, based on her experience and the need for specific metrics from past tests, adds additional metrics for a comprehensive story. This could include impacts on user behavior, such as whether they proceeded to the cart, skipped the cart to checkout directly, or utilized the mini cart. These insights are crucial for understanding the behavioral changes induced by the test variation.

Brian Schmitt, Co-Founder of surefoot
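Tracking behavioral shifts like these comes down to computing micro-conversion rates per variant. A minimal sketch, assuming a simple event log; the event names (`modal_close`, `checkout_direct`, `mini_cart_open` and so on) and all counts are hypothetical, not surefoot’s actual tracking setup. The denominator comes from exposure counts so that visitors who fired no tracked event still count.

```python
from collections import defaultdict

# Hypothetical event log: (visitor_id, variant, event). Event names are illustrative.
events = [
    ("v1", "control", "modal_close"),
    ("v2", "control", "view_cart"),
    ("v3", "control", "modal_close"),
    ("v4", "variant", "modal_add_to_cart"),
    ("v4", "variant", "checkout_direct"),
    ("v5", "variant", "modal_close"),
    ("v6", "variant", "mini_cart_open"),
]
# Visitors exposed to each variant, taken from assignment data rather than the event log,
# so visitors who fired no tracked event still count in the denominator.
exposed = {"control": 4, "variant": 4}


def micro_conversion_rates(log, exposures):
    """Share of exposed visitors in each variant who fired each event at least once."""
    fired = defaultdict(lambda: defaultdict(set))  # variant -> event -> visitor ids
    for visitor, variant, event in log:
        fired[variant][event].add(visitor)
    return {
        variant: {event: len(ids) / exposures[variant] for event, ids in by_event.items()}
        for variant, by_event in fired.items()
    }


rates = micro_conversion_rates(events, exposed)
for variant, by_event in rates.items():
    print(variant, {event: f"{rate:.0%}" for event, rate in by_event.items()})
```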

Create MVP Tests as a Starting Point

MVP tests — they’re simple, quick, and a solid base to guide future iterations.

Since they’re designed to isolate specific variables and measure their impact, you get to glean valuable data with minimal effort, and that data becomes the root of your iterations.

MVP tests are arguably the least risky method in experimentation to get rapid feedback and quick validation of ideas, ensuring that any potential missteps are manageable.

Will Laurenson talks about how important MVP tests are in their approach to experimentation at Customers Who Click:

Tests are initiated based on prior research. Even if the original concept didn’t yield robust results, the variant might just need refinement. For instance, at Customers Who Click, our approach focuses on MVP (minimum viable product) tests. We aim to verify the core idea without extensive resources. Consider the case of social proof. If we test product reviews on a product page and see a conversion uplift, many brands & agencies would stop there. However, we’d dive deeper: Can reviews be integrated into image galleries? Should we incorporate image or video reviews? What about a section spotlighting how customers use the product?

Will Laurenson, CEO of Customers Who Click

How do you create MVP tests? Isolate a key metric, create a simple test design, prioritize speed and launch quickly, and then analyze and pull insights from your results. Iterate with confidence.
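For the “analyze” step, one common option for a conversion-rate MVP test is a pooled two-proportion z-test. The sketch below is that textbook test, not necessarily what Customers Who Click uses; it assumes a sample size fixed in advance (no peeking at interim results) and uses made-up counts.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Pooled two-proportion z-test; returns (relative lift of B over A, z, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    lift = (p_b - p_a) / p_a
    return lift, z, p_value


# Hypothetical MVP test: control vs. a variant adding product reviews to the PDP.
lift, z, p = two_proportion_z_test(conv_a=400, n_a=10_000, conv_b=460, n_b=10_000)
print(f"lift={lift:.1%}, z={z:.2f}, p={p:.3f}")
```

A result like this only tells you whether the core idea moved behavior; the follow-up questions in Will’s example (reviews in galleries, video reviews, usage sections) are what turn it into the next iterations.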

Develop a Learning Repository

Without a learning repository, you’re missing out on a crucial step in iterative testing.

Iterative testing stands on the shoulders of past learning. With no actual record of past learnings, there’s nothing to stand on. It’s just dangling there, on guesswork and flawed human memory.

A learning repo is a structured compendium that makes the process of building upon ideas and previous experiments significantly smoother and more efficient.

We’ve gone into details about setting up a learning repository before, but here’s a quick reminder of what it’s typically used for:

- Acknowledge the value of experimentation data: Recognize that every bit of data from your tests is a priceless asset of your company, essential for informing future tests and drawing insights from previous endeavors.

- Select the appropriate structure: Choose a structure for your learning repository that best suits your organizational needs, be it a Centre of Excellence, decentralized units, or a hybrid model.

- Document clearly and precisely: Make certain that every piece of data and learning captured in the repository is documented in a clear, concise, and accessible manner.

- Maintain a comprehensive overview: Use the repository to keep a bird’s-eye view of past, present, and future projects, ensuring knowledge retention regardless of personnel changes.

- Prevent redundancy: Leverage the repository to avoid repetition of past experiments, ensuring each test conducted brings forth new insights to iterate on.

In the grand scheme of all things iterative experimentation, your learning repository not only streamlines the process of referencing past experiments but also ensures that each test is a stepping stone toward more insights.

However, this will depend heavily on the quality of the experiment data in the repo and on access to it. Tom van den Berg talks about meticulous tagging and structuring within the repository:

As we know from the hierarchy of evidence the strongest method to gain quality evidence (and have less risk of bias) is a meta-analysis over multiple experiments. To make this possible it’s extremely important to tag every experiment in the right way. Tooling which supports this helps you a lot. In my experience, Airtable is a great tool. To do this in the right way it helps to work with the main hypothesis and experiment hypothesis. Most of the time you work on 2 or 3 main hypotheses maximum at the same time, but under these main hypotheses you can have many experiments each of which has its own hypothesis. So you can learn after one year for example that the win rate at the PDP for “Motivation” experiments is 28% and for “Certainty” it’s 19%.

Tom van den Berg, Lead Online Conversie Specialist at de Bijenkorf
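Tom’s win-rate-by-theme example is straightforward to compute once every experiment is tagged consistently. A minimal Python sketch, assuming each record carries a page type and a main-hypothesis tag; the field names and sample records are hypothetical (this is not an Airtable schema), and with only a handful of experiments per tag the rates will be noisy.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Experiment:
    """Hypothetical repository record; field names are illustrative."""
    name: str
    page: str             # e.g. "PDP", "Cart"
    main_hypothesis: str  # the theme or lever, e.g. "Motivation", "Certainty"
    outcome: str          # "win", "loss" or "inconclusive"


def win_rates(experiments, page):
    """Win rate per main hypothesis for one page type."""
    totals, wins = Counter(), Counter()
    for exp in experiments:
        if exp.page != page:
            continue
        totals[exp.main_hypothesis] += 1
        if exp.outcome == "win":
            wins[exp.main_hypothesis] += 1
    return {theme: wins[theme] / totals[theme] for theme in totals}


# Hypothetical records for illustration only.
repo = [
    Experiment("Urgency badge", "PDP", "Motivation", "win"),
    Experiment("Lifestyle imagery", "PDP", "Motivation", "loss"),
    Experiment("Delivery date message", "PDP", "Certainty", "inconclusive"),
    Experiment("Returns policy copy", "PDP", "Certainty", "win"),
    Experiment("Size guide link", "PDP", "Certainty", "loss"),
    Experiment("Free-shipping bar", "Cart", "Motivation", "win"),
]
print(win_rates(repo, "PDP"))
```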

Experimentation Experts Share How They Iterate

Since we care about the practical side as much as we do about taking you on a mind-bending journey from spaghetti testing to iterative testing, here are stories of how experts iterate to inspire your experimentation program:

Streamlining Checkout with Iterative Optimization

By Matt Scaysbrook, Director of Optimisation, WeTeachCRO

The test began as a simple one, removing the upsell stages of a checkout process to smooth the journey out.

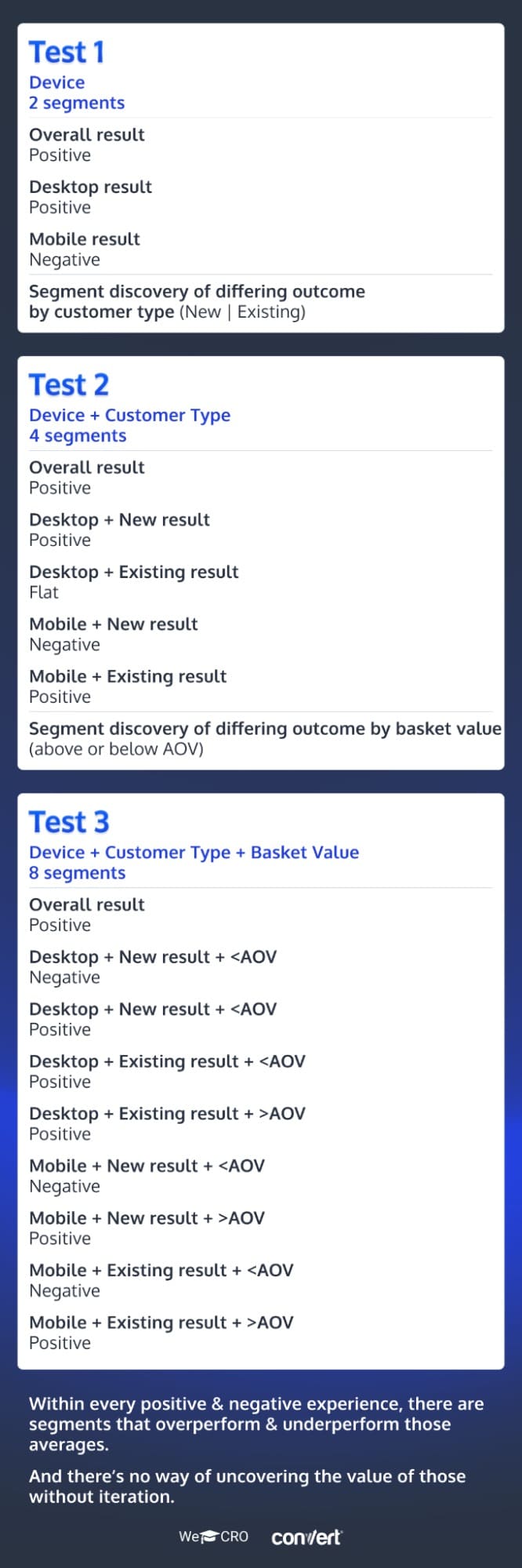

However, after each test was analyzed and segmented, it became evident that there were positive and negative segments within each experience.

So while the net revenue gain may have been £50k for the experiment, it was in fact £60k from one segment, offset by -£10k in another.

This then led to iterative tests that sought to negate those underperformances with new experiences, whilst keeping the overperformances intact.
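The segment arithmetic behind this story is simple but worth making explicit. A small Python sketch using the £60k and -£10k figures above; the segment labels are placeholders, since the example doesn’t name the segments.

```python
def split_segments(impact_by_segment):
    """Split per-segment incremental impact into net, positive, and negative parts."""
    net = sum(impact_by_segment.values())
    gains = {seg: v for seg, v in impact_by_segment.items() if v > 0}
    losses = {seg: v for seg, v in impact_by_segment.items() if v < 0}
    return net, gains, losses


# Figures from the checkout example above (GBP); the segment labels are placeholders.
impact = {"segment_a": 60_000, "segment_b": -10_000}
net, gains, losses = split_segments(impact)

print(f"Net impact: £{net:,}")  # Net impact: £50,000
print("Protect in the next iteration:", gains)
print("Target in the next iteration:", losses)
# Ceiling if an iteration brought losing segments to zero without hurting the winners.
print(f"Ceiling: £{sum(gains.values()):,}")  # Ceiling: £60,000
```

The ceiling is an optimistic bound: it assumes an iteration could neutralize the losing segment without touching the winning one.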

Iterating for Better Add-to-Cart Conversions

By Brian Schmitt, Co-Founder, surefoot

Test 1:

Hypothesis: We believe showing an “added to cart” confirmation modal showing complementary products and an easy-to-find checkout button will confirm cart adds, personalize the shopping experience, and increase AOV and transactions.

Analysis revealed there was minimal interaction with the “Keep Shopping” button; users were more likely to click the “X” to close the modal.

We saw an opportunity to optimize the add-to-cart modal by removing the “Keep Shopping” button, which was not used frequently, and giving more visual presence to the checkout button. Additionally, there may be an opportunity to adjust the recommended items shown to lower-cost items, potentially driving an increased number of users adding these items to cart. Last, adding a “Quick Add” CTA to each item could lead to more of the recommended items being added to cart.

Test 2:

Hypothesis: We believe showing products under $25 and having a quick add-to-cart button on the recommended product tiles of the confirmation modal will increase units sold per transaction.

Analysis revealed a 14.4% lift in recommended products being added to cart, and an 8.5% lift in UPT (units per transaction), at statistical significance.

We iterated on the first test to capitalize on observed user behavior. By removing the underutilized ‘Keep Shopping’ button and emphasizing a more visually prominent checkout option, along with featuring lower-cost, quick-add recommended products, we aimed to enhance both the user experience and key performance metrics.

Using Iterative Testing to Understand Data Patterns

By Sumantha Shankaranarayana, Founder of EndlessROI

Hypothetically, let’s assume your first high-contrast A/B test on the website results in a 50% conversion uplift. To get to this winner, you would have altered a number of aspects, including the headline copy, the CTA, and the overall page design, based on page scripts.

With iterative testing, you can now measure the impact of focused clusters, say, just on the masthead, and determine which factors caused the 50% boost above. Say the headline copy gave you -10%, the CTA +40%, and the overall design +20%.

You may now be more specific and try to boost the headline content by 20%, the CTA by 70%, and the eye flow management with the design by an additional 50%. The overall throughput is +140%, which is much greater than the +50% uplift from the initial round of A/B testing.
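The totals in this hypothetical only work if the factor contributions simply add up. A short worked version, with a compounding reading for comparison (the compounding reading is our own assumption, not part of the original example):

```latex
\begin{aligned}
\text{Round 1 (additive):}\quad & -10\% + 40\% + 20\% = +50\% \\
\text{Round 2 (additive):}\quad & 20\% + 70\% + 50\% = +140\% \\[4pt]
\text{Round 1 (compounding):}\quad & (0.9)(1.4)(1.2) - 1 = 0.512 \approx +51\% \\
\text{Round 2 (compounding):}\quad & (1.2)(1.7)(1.5) - 1 = 2.06 = +206\%
\end{aligned}
```

Both readings agree closely for the first round but diverge once the individual lifts get large, so it is worth being explicit about which one a forecast assumes.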

Applying iterative testing with data patterns uncovered through sound experimentation programs, like those hypothetical examples above, will help you build a growth engine.

Ideas for Iterative Testing (or Rules of Thumb, If You Like)

Thinking of recreating one of those stories above? Keep these in mind:

- Begin with simple tests and deep analysis to identify varying impacts across different segments.

- Celebrate net gains from experiments but delve deeper to understand the positive and negative contributions of different segments.

- Use insights from segmentation to enhance well-performing areas and improve underperforming ones, ensuring a balanced optimization strategy.

- Observe user interactions and optimize accordingly, removing underused features and enhancing conversion-driving elements.

- Use user behavior insights to optimize elements like recommended product tiles and quick add-to-cart options, enhancing user experience and key performance metrics.

- Acknowledge and build upon small wins, using them as a foundation for further optimization and testing.

- Apply focused iterative testing to specific elements after a high-contrast A/B test to optimize their individual impacts.

- Consistently use data patterns from iterative testing to inform your strategy, transforming your experimentation program into a growth engine.

Breaking Up: When to Move On From Your Insight

Navigating the tricky terrain of iterative testing requires a keen sense of when to persevere and when to let go of an insight. Some insights reach a dead end, and there are three ways to tell:

1. When Your Experiments Never Show Positive Results Regardless of How Many Times You Iterate

Maybe you’ve peaked on that one. Sometimes you can’t move the needle forward because the current user experience is close to optimal and there’s nothing left to optimize.

The next step in ‘iterative testing’ is understanding at what point you iterate vs. move on to the next concept you want to test. A rigorous prioritization framework includes prioritizing iterations in your testing (because it’s another form of ‘research’) more than non-iterative tests.

However, if you are on the 50th iteration of a test and have seen 49 losses, there may be a point where you have extracted the most out of that hypothesis and need to move on to another hypothesis to test. I made this blueprint a while ago to help you consider when to continue iterating vs. when to consider moving on to another hypothesis.

This blueprint isn’t set in stone – I wouldn’t follow it blindly. However, consider the logic behind the decision-making as you balance the needs within your business when deciding whether to iterate or move on to another hypothesis.

Shiva Manjunath

2. When You’re Sapped-Out on Insight-Inspired Creative Ideas

It may be time to redirect your focus if nothing new is forthcoming creatively after a miles-long string of unsuccessful tests, and you and your team are left feeling drained of creative energy.

3. When Your Experiment Isn’t Leading to Any New Learning

If your iterations from those insights show a trend of no new learnings about user preferences or the effectiveness of different experiences, then you should let go.

If multiple tests on a theme yield neutral outcomes, it’s wiser to pivot and revisit later. Also, avoid excessive changes that disrupt the user experience. Occasional additions or removals are fine, but overhauling layouts or key features can disorient returning visitors.

Lastly, always weigh the opportunity cost. If you’ve already secured gains from a test and have other high-potential ideas in the pipeline, it might be more beneficial to explore those before reiterating on a previous concept. This ensures you’re always aiming for the highest impact.

Will Laurenson

Note that you want a data-driven way to move on from insights, rather than moving on just because one or more of the three points here make you feel you should. Ask real questions, such as the ones Sheldon suggests:

Generally we are looking for impact from tests, positive or negative. We take inconclusive tests to mean that the change didn’t impact the user’s decision-making process enough.

When we move on from an idea it is likely a combination of factors:

- How robust is our testing roadmap? Are we overflowing with other ideas?

- Does the client have the traffic to run tests to a smaller percentage of users?

- Is the dev lift too great to create multiple versions of an idea?

- Have other warning lights come on in the site that need more urgent attention?

Sheldon Adams
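The three signals above can be encoded as an explicit check, so that moving on is a decision you can point to rather than a mood. A hypothetical Python sketch: the thresholds (five iterations without a win, three without a new learning) are illustrative defaults, not figures from Shiva’s blueprint.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class InsightHistory:
    """Hypothetical summary of the iterations run on one insight, oldest first."""
    results: list[str]         # "win", "loss" or "inconclusive" per iteration
    new_learnings: list[bool]  # did that iteration teach us something new about users?
    untested_ideas: int        # execution ideas still in the backlog for this insight


def reasons_to_move_on(h: InsightHistory, max_non_wins: int = 5, max_stale: int = 3) -> list[str]:
    """Return the signals that suggest moving on; an empty list means keep iterating.

    The thresholds are illustrative defaults, not recommendations.
    """
    reasons = []
    recent = h.results[-max_non_wins:]
    if len(recent) == max_non_wins and "win" not in recent:
        reasons.append(f"no win in the last {max_non_wins} iterations")
    if h.untested_ideas == 0:
        reasons.append("no execution ideas left for this insight")
    latest = h.new_learnings[-max_stale:]
    if len(latest) == max_stale and not any(latest):
        reasons.append(f"no new learning in the last {max_stale} iterations")
    return reasons


history = InsightHistory(
    results=["loss", "inconclusive", "loss", "inconclusive", "loss"],
    new_learnings=[True, False, False, False, False],
    untested_ideas=2,
)
print(reasons_to_move_on(history))
```

Sheldon’s questions about roadmap depth, traffic, dev effort, and more urgent site issues remain judgment calls; they sit alongside a check like this rather than inside it.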

In conclusion, not all experiments are destined for stardom. When your A/B tests consistently flop, regardless of their size or boldness, it might be the universe’s nudge to say, “You’ve hit the peak!” If your idea well is running dry and the team’s morale is low, it’s time for a creative intermission.

And when experiments turn into a monotonous rerun without new learnings, it’s your cue to exit stage left.

Want more fun, spicy, insightful and different takes on the key issues in experimentation? Our community enjoys the “From A to B” podcast co-founded & co-hosted by Shiva Manjunath and Tracy Laranjo: https://podcasters.spotify.com/pod/show/from-a-to-b

Written By

Trina Moitra, Shiva Manjunath

Edited By

Carmen Apostu

Contributions By

Brian Schmitt, Edmund Beggs, Loredana Principessa, Matt Scaysbrook, Sheldon Adams, Steve Meyer, Sumantha Shankaranarayana, Theresa Farr, Tom van den Berg, Tracy Laranjo, Will Laurenson