“Test Everything” — From On-Paper to In-Practice

Let’s be clear.

It’s never a mistake to run more A/B tests, even if they don’t directly impact sales and revenue, as long as you execute them well and learn from the process.

But…

Every test requires an investment of time, effort, human capital, and more.

So why do some experts advocate testing everything? And what’s the middle ground… especially for you… yup… you reading this article.

Now you might have tons of good testing ideas and want to know whether you should test them all. But that’s not the point! As Natalia mentions in an interview with Speero’s Ben Labay:

There are hundreds of great ideas out there, but that’s not the point. The point is finding the right one to work on at the right time.

It may sound tempting to start randomly testing everything, but prioritizing testing the right hypothesis at the right time is key.

With the right prioritization, you can cultivate a “test everything” mentality. However, most hypothesis prioritization models fall short.

Let’s dig a little deeper to understand whether you should A/B test everything, how prioritization helps you make decisions, and how to create your own prioritization model that overcomes the limitations of traditional options.

Test Everything: Why Is it Recommended?

Let’s start by examining this controversial approach: Does testing everything make sense?

Go on, pick a side. 🙂

What is “palatable” to you without reading the rest of this article and gathering more data?

Done?

Now let’s see if our reasoning lines up with yours.

Before we tackle the conundrum of “testing everything”, we need to understand what A/B testing and its results imply.

Statistical Validity

A/B testing is a statistically valid way to see if the changes you have in mind impact your Key Performance Indicators (KPIs).

For example, if your goal is to get more visits to your blog, you can add it to the main navigation menu. The new menu is no longer a copy of the old one. But this change is useless if it doesn’t positively impact your site visitors’ behavior.

The simple fact that the two versions are structurally different doesn’t matter. What matters is whether you achieve the outcome you wanted and anticipated. Are folks more inclined to visit the blog when they see it on the main menu than when it’s tucked away in the footer?

Common sense might say YES, there should be a (positive) effect. But your test may not show any change in the metrics you’ve chosen to monitor as a measure of impact.
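To make “statistically valid” a little more concrete, here is a minimal sketch of one common way to compare two variants: a two-proportion z-test. It is only an illustration, not the method any particular testing tool uses. The function name and the visitor counts are hypothetical and made up for the example.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both variants perform the same.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value


# Hypothetical numbers: blog visits with the link in the footer (A)
# versus the link in the main navigation (B).
z, p_value = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=150, n_b=1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the difference is unlikely to be noise. A large one means the test did not detect a change in the metric you chose, which is exactly the “no change” scenario described above.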

When that happens, the test has stopped you from rolling out a change that doesn’t pay off. That is why A/B tests also mitigate risk.

Risk Mitigation

Implementing site-wide changes is complex and a whole different ball game.

You can end up making a change and risk wasting resources by building features users don’t want and customizing website elements that don’t produce the expected results. This is one of the main reasons A/B testing is necessary, as it’s the acid test for the proposed solution before it’s actually implemented.

A/B test build-outs (especially client-side builds) are less resource-intensive than hard-coded website changes and high-fidelity features. This lets you walk away from a particular route early, especially when the results indicate that your KPIs are not moving in the right direction.

Without testing, you invest in experiences that simply don’t work. It’s a blind risk you take, not knowing you may have to revert to the previous design to protect revenue and performance.

No idea is so special that it is guaranteed to work.

Longden wrote:

“Everything you do to your website/app carries a huge risk. Mostly it will make no difference, and you will have wasted the effort, but there’s a good chance it will have the opposite effect.”

Georgi, the creator of Analytics-toolkit.com, even argues that A/B testing is by its essence a risk management tool:

“We aim to limit the amount of risk in making a particular decision while balancing it with the need to innovate and improve the product or service.”

Why risk it when you can test?

(More on that later in the blog. Keep reading!)

Trend Analysis

When you consistently run tests that you learn from, you start to spot trends in your audience’s response to specific inputs. It’s best not to assume that you can derive anything of value from a single iteration. But meta-analysis (in a single variable A/B testing environment) over time can give you the confidence to potentially prioritize testing a particular hypothesis over others.

“Without experimentation, you are using either your gut or your stakeholder’s gut to make decisions. A solid experimentation program with logged learning is akin to creating a data-informed “gut” you can use to “check” your decisions.”

Natalia Contreras-Brown, VP of Product Management at The Bouqs

Some experts stand behind testing everything, given that experimentation has many benefits.

On the other hand, many experts advocate at least getting inspired by repeatable results from previous experiments to answer the big questions.

Chief Editor of GoodUI, Jakub Linowski, is among the most notable. He argues that experiments generate knowledge that enables prediction. He thinks pursuing knowledge, tactics, patterns, best practices, and heuristics is important.

The knowledge you gain from experiments helps you make more reliable predictions. This, in turn, allows you to create more accurate hypotheses and better prioritize them.

At first glance, these perspectives may seem contradictory. But they reinforce the same argument, “experimentation brings certainty in an uncertain world.”

You either make assumptions based on your own data, or you carry over beliefs from tests run toward similar goals across multiple verticals and industries.

At Convert, we take inspiration from both views and see how one facet builds the next in the following two simple ways.

Learning & Testing: The Practical Duo For Inspired Testing

Question Everything

This is an enduring teaching from the Stoic masters.

When you question everything around you, you realize that you’re a composite of what you pick up from the people you meet, the culture you live in, and the heuristics your brain chooses to follow.

This “gut instinct” that typically drives us is usually not ours.

For example, many of us dream of leading a lavish lifestyle centered on consumption. But did this really come from us? Or are we victims of advertising, media, and Hollywood?

The media and advertising began to play a major role in shaping the dreams and desires of the individual person to become primarily a consumer… and it became possible to seduce the individual person and make him believe that what he desires is a free decision stemming from within him (but in fact, he is captive to hundreds of advertisements that generated the desire that he said is subjective and created for him the automatic desire).

Abdel Wahab El-Messiri

Making changes and business decisions using JUST your own “gut instinct” is unwise. If we can’t be sure that the changes we want to make and the decisions we want to enforce in our business come from us, why are we even attached to them?

Questioning everything is essential. Your gut, best practices, prioritization models, and so on.

And if we do question everything, the next step is to subject it to mathematical verification through experiments. Because stopping at questioning doesn’t close the loop from uncertainty to certainty.

Learn From Experiments

You can’t learn if you don’t test.

Make sure you properly quantify your insights. You need to leverage both qualitative and quantitative data in A/B tests to translate your learnings into something meaningful. For example, group the average impact by context, type of test, test location, KPIs monitored, test result, and so on.
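As a rough sketch of what “grouping the average impact” could look like, the snippet below averages the observed lift per group from a logged set of experiments. The log entries, field names (`test_type`, `location`, `lift_pct`), and the `average_lift_by` helper are all hypothetical and invented for illustration. Your own experiment log will have its own structure.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical experiment log; the values are invented for illustration only.
experiment_log = [
    {"test_type": "copy", "location": "checkout", "lift_pct": 2.0},
    {"test_type": "copy", "location": "homepage", "lift_pct": 4.0},
    {"test_type": "layout", "location": "checkout", "lift_pct": -1.0},
    {"test_type": "layout", "location": "checkout", "lift_pct": 3.0},
]


def average_lift_by(log, key):
    """Average the observed lift for each distinct value of `key`."""
    groups = defaultdict(list)
    for entry in log:
        groups[entry[key]].append(entry["lift_pct"])
    return {group: mean(values) for group, values in groups.items()}


print(average_lift_by(experiment_log, "test_type"))
print(average_lift_by(experiment_log, "location"))
```

Swapping the `key` argument lets you slice the same log by test type, location, or any other field you record, which is one simple way to start spotting patterns.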

A great example of this methodical approach is the GOODUI database.

It identifies patterns that repeatedly perform in A/B tests, helping businesses achieve better and faster conversions.

GOODUI features 124 patterns based on 366 tests (members only) and adds more than 5 new reliable tests every month. By learning from experiments and quantifying those learnings into patterns, the GOODUI database shortens the tedious A/B testing process and enables customers to get more wins!

Get some inspiration from these patterns:

Pattern #2: Icon Labels: https://goodui.org/patterns/2/

Pattern #20: Canned Response: https://goodui.org/patterns/20/

Pattern #43: Long Titles: https://goodui.org/patterns/43/

Convert Experiences plans come with a free GoodUI subscription. Start with a free trial.

But Focus on… Prioritizing Ideas

Prioritize ideas that align with your overarching business goals while considering the company-specific outcomes.

Picture this.

You run a business with a decent customer base. However, the retention rate of these customers is below 15%. Your main goal is to build a sustainable business that retains customers at a much higher rate. This goal should influence your prioritization.

Let’s say you have two ideas and want to know which to test first – one that’s likely to increase retention rate and another likely to increase sales from new customers. You should probably prioritize the first idea despite the latter having a much higher potential for improvement.

That’s because a higher customer retention rate means a more stable business in the long run. This aligns perfectly with your overarching business goals and strategy.

The other idea may get you a lot more new sales. But eventually, your business will still be leaking more than 85% of its customers.

Prioritization models for experimentation always fail to consider the strategic importance of what is being tested. They look at the likely impact, effort, etc., of a wide range of different ideas, but they do not consider which of those ideas best align to business strategy and direction.

Jonny Longden, via Test Everything

Where Do Most Prioritization Models Fall Short?

If you’re a keen optimizer, you might have a long list of A/B testing ideas. But you can’t test them all at once, even if you decide to test everything, due to limited traffic and resources.

It’s like having a long to-do list and knowing that you just can’t tackle everything at the same time. So you prioritize and start with the ones with the HIGHEST PRIORITY. This applies to driving profits with experimentation. With proper prioritization, your testing program will be much more successful.

But prioritization in A/B testing, as David Mannheim wrote in his article, is hard. This is mainly because teams:

- Often produce ideas that aren’t focused on or aligned with business goals.

- Don’t consider iteration and learning from previous experiments.

- Use dysfunctional prioritization models and try to apply arbitrary frameworks to the problem.

Even the most popular prioritization models tend to overlook or mishandle these areas. But why is that? Here are some critical elements that contribute to their shortcomings.

- Misleading factors: The factors they use to select tests are highly misleading. First up is effort, giving the impression that low-effort ideas deserve quick prioritization.

- Weight function: Most models assign arbitrary weights to factors. You can’t just prioritize randomly; you need a reason for it.

- Complex iterations: They can’t distinguish existing test iterations (driven by learning) from brand new ideas for prioritization purposes.

For starters, the PIE prioritization framework ranks hypotheses based on:

- Potential for Improvement

- Importance

- Ease

But how do you objectively determine a test idea’s potential? If we could know this in advance, as Peep Laja, Founder of CXL, explained, we wouldn’t need prioritization models.

The PIE model is very subjective. It also doesn’t align well with business goals and promotes low-risk solutions. Ease is misleading as it suggests that low-effort ideas should be prioritized.

The greater the risk, the greater the reward.

And this only applies if you challenge yourself with more sophisticated ideas.


Another popular model, the ICE (Impact, Confidence, and Ease) scoring model, is much like PIE and shares its flaws.

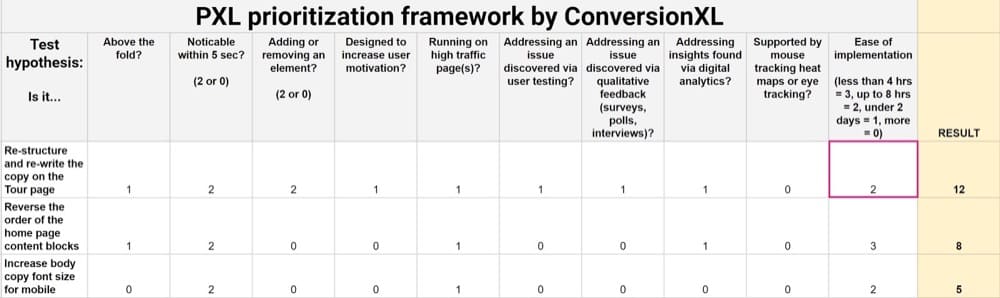

The PXL framework is an improvement over these two and makes any “potential” or “impact” evaluation much more objective. However, it still has its shortcomings.

First, it doesn’t consider aligning with business goals. Second, it doesn’t distinguish current test iterations from brand new ideas.

David Mannheim, Global VP of Conversion Rate Optimization at Brainlabs, revealed that 50% of the 200+ experiments they built for a client at his former consultancy, User Conversion, were iterations of each other. In one case, iterating on the original hypothesis produced a gain of more than 80%. As he stated:

We knew the “concept” worked, but by altering the execution, over 6 different iterations might I add, we saw an incremental gain of over 80% on the original.

In its “Running Experience Informed Experiments report,” Convert found that nearly all the experts interviewed agreed that learning drives successful ideation. This can be further supported with H&M’s take on learning patterns.

Almost every other experiment that we run at H&M in our product team is backed by a documented learning of a previous experiment or other research methodology.

Matthias Mandiau

How to Create Your Own A/B Test Prioritization Model?

Prioritization encourages the “test everything” mindset. It imparts confidence to test (first) the ideas and hypotheses that make the biggest dent on the most pressing problem.

But every business is different. So there can’t be a one-size-fits-all experiment prioritization approach. Context, as David pointed out, is king.

Essentially, all models are wrong, but some are useful.

George E. P. Box, a statistician

From the examples above, we can say that all models are flawed, but some are useful. The secret is to create the most useful and impactful model for your business.

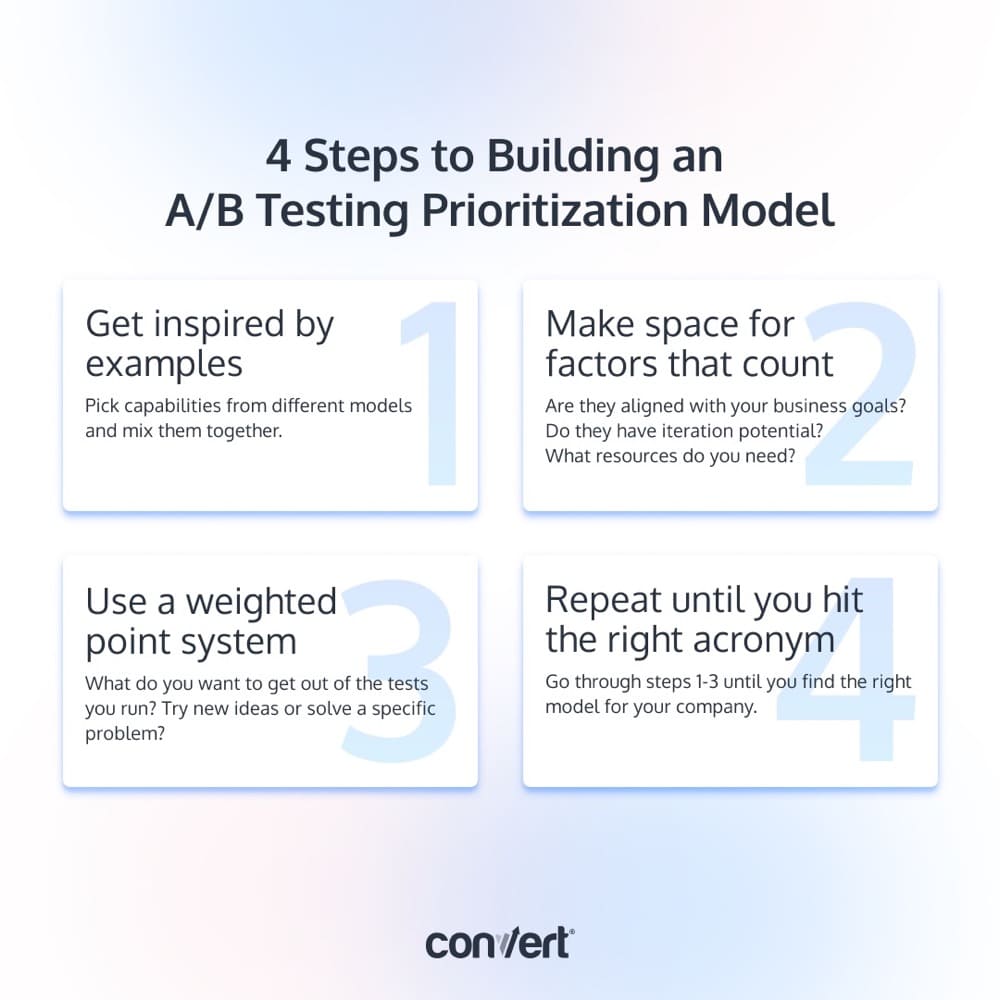

Here are four steps to building a model:

1. Get Inspired by Examples

One of the most fruitful ways to develop a good prioritization model is to pick capabilities from different models, mix and match, and produce a blend with limited nitpicking.

For example, you can take inspiration from PXL’s more objective assessment approach, which asks questions like “Add or remove item?”. At the same time, you can consider ICE’s impact angle and add it in.

“Bigger changes like removing distractions or adding key information tend to have more impact.”

Peep Laja, CXL

2. Make Space for Factors That Count

Include factors that align with your business goals. This will help you focus more on core growth drivers and KPIs like customer lifetime value (LTV) and customer retention rate, not just surface-level metrics and results.

As previously mentioned, company-specific learning is also crucial when prioritizing experiments. Do certain solutions consistently and historically outperform others for your audience?

Also, consider the iteration potential. Iterations can help you make more headway toward solving a particular business problem, and they tend to be more successful. If that holds for your program, hypotheses with potential for iteration can and should be prioritized over stand-alone tests. After all, experimentation is a flywheel where efforts feed into each other.

Finally, factor in resource investment, including complexity, time, cost, and the traditional measures used to prioritize experiments.

3. Weightage Is Critical

Decide what you want to get out of the tests you run. Is it exploring new, groundbreaking ideas? Or exploiting a problem area until you find a solution?

Customize the scoring system to suit your needs. Let’s consider two different types of experiments to understand this better.

- Adding the blog to the navigation menu will increase visits to the blog.

- Reducing the number of form fields on the checkout page will reduce the cart abandonment rate.

For this example, let’s assume we’ve selected only two factors for our prioritization model: iteration potential and impact potential. We rate each hypothesis on a scale of 1-5 for each factor.

Our main goal for testing now is to fix the cart abandonment issue for an e-commerce site. We should give more weight to the iteration potential, as we probably won’t fix that with a single test. We’re likely to iterate many times within a single hypothesis before noticeably reducing cart abandonment.

We can weigh the iteration potential factor by doubling its score.

Let’s give the first hypothesis a “4” for the impact potential factor. And “2” for iteration potential. Then, for the form-filling hypothesis, “3” for both impact and iteration potential factors.

Without the emphasis on iteration potential, it would be a tie: “4 + 2 = 3 + 3”

But after doubling the score on this factor, hypothesis number two wins:

The final score for the first hypothesis: “4 + 2(2) = 8”

The final score for the second hypothesis: “3 + 3(2) = 9”

The bottom line is that the same framework’s prioritization output should change as external and internal considerations change.
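The weighting arithmetic from this step can be sketched in a few lines of Python. This is a minimal illustration, not a finished prioritization tool. The labels (`blog_in_navigation`, `checkout_form`) and the helper names are hypothetical, while the scores and the doubled weight come from the example above.

```python
def weighted_score(scores, weights):
    """Sum each factor score multiplied by its weight (weight defaults to 1)."""
    return sum(value * weights.get(factor, 1) for factor, value in scores.items())


def rank(hypotheses, weights):
    """Return hypothesis names ordered from highest to lowest weighted score."""
    return sorted(
        hypotheses,
        key=lambda name: weighted_score(hypotheses[name], weights),
        reverse=True,
    )


# Scores on a 1-5 scale, taken from the worked example in this step.
hypotheses = {
    "blog_in_navigation": {"impact": 4, "iteration": 2},
    "checkout_form": {"impact": 3, "iteration": 3},
}

equal_weights = {"impact": 1, "iteration": 1}
iteration_heavy = {"impact": 1, "iteration": 2}  # iteration potential doubled

for name, scores in hypotheses.items():
    print(
        name,
        weighted_score(scores, equal_weights),    # 6 for both: a tie
        weighted_score(scores, iteration_heavy),  # 8 vs. 9
    )

print(rank(hypotheses, iteration_heavy))  # checkout_form comes first
```

Keeping the weights in a separate dictionary means you can change what the model emphasizes, for example when your testing goal shifts, without re-scoring every hypothesis.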

4. Rinse and Repeat Until You Hit The Right Acronym

Try not to expect good results with a single iteration. Keep modifying until you end up with the right prioritization model for your business.

For example, ConversionAdvocates created their own framework, IIEA, which stands for:

- Insight

- Ideation

- Experimentation

- Analysis

IIEA attempts to solve two major issues of most models by listing every experiment’s learning and business objectives before launching.

Whatever acronym you end up creating, constantly review and reevaluate it. Sina Fak, Head of Optimization at ConversionAdvocates, mentioned that they’ve been refining IIEA for the past five years.

Since 2013, they’ve used this custom framework to help several businesses solve critical problems, such as reducing costs and increasing conversions.

Yours may not be a convenient ICE or PIE, but the results will be delicious.

With your new ultra-useful prioritization model, you can borrow from legacy learning AND potentially “test everything”.

A win-win in our book!

Written By

Mohammed Nadir

Edited By

Carmen Apostu