From Specialist to Lead: The Peaks, Valleys & Troughs of Simon Girardin’s CRO Journey (ft. John Ostrowski)

Hailed as the “most important marketing activity,” CRO has come a long way. The abundance of courses, blueprints and frameworks has made it easy for even a complete beginner to learn the ropes.

But a few years ago, this was hardly the case.

Simon Girardin had to figure out the nitty-gritty of a CRO program with a limited budget and limited resources.

Even though he “stood on the shoulders of giants,” his pursuit of a framework that worked was rife with roadblocks, but his enthusiasm and curiosity never wavered.

And it paid off. Currently the CRO Manager at Conversion Advocates, Simon makes the journey from a CRO specialist to a lead at an agency look easy.

In his conversation with John Ostrowski, Product Growth and Experimentation Consultant at Positive Experiments, Simon talked about:

- What drew him to CRO

- The resources he relied on

- How he came up with his prioritization framework

- What makes a great agency

- The one ingredient that can cost you success

Enjoy this raw, unfiltered version of a CRO specialist’s journey to the top!

The Motivations of an Experimenter

Why are you in the optimization business?

John

You’re surprised all the time. And I’m someone who loves navigating chaos.

Simon

What someone sees as a mess, Simon sees as an opportunity.

(Reminds us a little of someone else who loves being organized!)

Being an experimenter means being perennially curious. And Simon loves being the instigator of growth — the push or the impetus. The feeling of seeing clients obsess over an insight that can completely transform their understanding of their customers is addictive.

But here’s the kicker: what Simon finds fun is that he’s not always right. Some insights are a welcome surprise, but you’re also constantly humbled by what you don’t know about customers.

Even internal teams have blind spots when it comes to customer research. The thrill of unraveling those mysteries is what keeps Simon going.

Play the Humble Card

John loves using “his humble card.”

In experimentation, we test so many things and we fail more often than not. It’s a profession that humbles us. We make peace with the fact that we don’t always have the best ideas.

John

Playing the humble card to navigate the entropy, and allowing yourself to be surprised, is what brings joy to the field of experimentation and optimization.

Lesson #1: If you are not surprised and humbled often, you are not tapping into the true potential of running experiments.

Flip the Script: Strategic >>> Reactive

Optimizers aren’t firefighters.

It’s not an experimenter’s job to put out fires perpetually, and yet, at the time, that’s what CRO meant where Simon came from.

CRO was used as a band-aid. A reaction to a problem. Every CRO project had a sense of urgency, as if the team were at the end of its rope.

That type of reactive mindset clearly contradicted what CRO fundamentally stands for: creating processes and building frameworks.

When you’re constantly reacting to problems that need fixing, you’re in a “ship fast” frame of mind. That doesn’t leave much room to be strategic and focus on the more exciting parts of CRO, arguably the ones optimizers are most passionate about.

Lesson #2: Get out of the fireman costume and don your experimenter hat. CRO isn’t just about tests and results. It’s a growth strategy.

The Step Up: From a One-Person Band to Lead Optimizer

Simon’s background was in reactive projects and set-it-and-forget-it endeavors: think site audits or no-code landing pages. CRO was a small part of the ecosystem at the global digital marketing agency where he worked.

The turning point came when his agency wanted to shift its focus from user acquisition to retention.

But Simon realized he couldn’t build out a large team if all they did was put out fires. He had to give the optimization clients a different focus and convince them to keep working on their website even when things were good. He also had to make sure the agency was on board with what he planned to do.

A lot had to be done because there was no experimentation. We had no prioritization framework and we had no research being done except basic Google search[es].

Simon

“I Didn’t Know Much”

Simon had to start from scratch.

He spent time learning from content creators on LinkedIn and studying their approaches.

Standing on the shoulders of giants, Simon discovered the idea of “testing to learn”: most tests fail, so the real value of testing lies in what each experiment teaches you.

But most businesses don’t use CRO to learn. They’re almost exclusively results-oriented and don’t have the luxury of running many tests that may fail. That in itself introduces a bias into the testing process. If businesses can’t make room for failure, they’re bound to stick to the low-hanging fruit.

Simon realized that this mismatch had caused a massive gap between what CRO is and what clients expected from optimization.

His desire to close that gap led to a trial-and-error journey: building frameworks while still learning from established experimenters.

Create a Research Framework

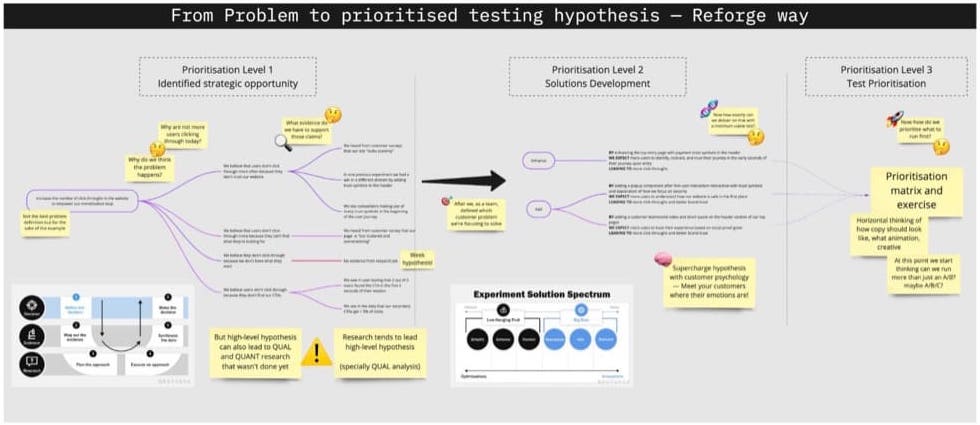

Simon started with a catalog of 120 to 140 questions: a massive spreadsheet checklist in which each question was matched to a research method and a target page.

Unsurprisingly, this process wasn’t efficient.

Team members were always jumping from one research method to another, and insights were scattered. They were also limited by their skill sets and lacked clear methodologies and SOPs.

Despite the shortcomings, this was at least a starting point. The beginning of a framework that his team could leverage to gather insights for the clients.

Remix Your Own System: Look for Questions, Not Answers

You don’t have to reinvent the wheel to create a system.

What Simon did was uncover the gaps his team had, look to experimenters who were actively sharing their processes, and piece together his own system.

What he did differently was go looking for questions, not answers.

The instinct is to look for answers, but the truth is that questions teach us the most.

Positive John’s Question Bible.

Lesson #3: If Simon were starting today, he would focus on one research method at a time and do one in-depth analysis. He would also bring more people into the insights and ideation process, which greatly increases its value thanks to their varied backgrounds and perspectives.

We also asked John to share his take on creating frameworks from scratch. This is what he had to say:

“Back to ‘My decision-first approach to research’ (quoting Convert already!): I start by defining the decisions and the type of information that will help us get there. » https://positive-experiments.com/KnowledgeBase/Content

I met Annika Thompson and Emma Travis from Speero at Experimentation Elite to discuss ‘continuous discovery.’ I still believe I need some sort of budget dedicated to ‘inspiring discovery,’ e.g. someone looking at recordings or talking to users to find things that are not tied to decisions, to allow for serendipity.

Thinking as an investor, if I keep operations lean, 80% of the research budget goes to things that will clearly affect a decision and 20% goes to serendipity.”

The Right CRO Resources on a Tight Budget

If you were to leave this page for a hot second to look up CRO frameworks, you’d see many results from established agencies.

But 2 years ago, there were a lot fewer frameworks available on the interweb.

There were a few courses that came with a hefty price tag.

But the budget was tight and Simon had to be strategic.

So here’s what he did:

- Scoured LinkedIn and YouTube and reproduced the assets he found there.

- Created templates based on the mindsets and case studies he came across.

- Documented methodologies and SOPs. (However, these never covered more than maybe 5% of what Simon knows and uses today. Collecting them one at a time is better than nothing, but far from exhaustive.)

- Adopted test approval and development workflows shared by other content creators.

- Developed a test plan based on what he gleaned from CRO courses.

- Created a workflow on Trello to visualize tests and their development stage for internal teams and clients.

What Simon couldn’t find in his deep dive was a prioritization framework. So he built one.

The Prioritization Framework: John’s Take and Simon’s Struggle

John likes to see the different stages of a CRO program like this:

- Discovering

- Ideating

- Capturing

- Prioritizing Hypotheses

- Experiment Build

- Analyzing, Learning, Communicating

All of this is wrapped in program management that provides direction. Without direction, no wind is favorable.

But agencies also need to add an extra layer: managing relationships with different stakeholders, running their own sales process, securing even more buy-in, and getting access to the clients’ legacy data.

Prioritization is already *bloody* hard but it’s even harder for an agency.

A rant on prioritization frameworks by CXL: PXL: A Better Way to Prioritize Your A/B Tests

ICE/PIE to Binary Frameworks

Simon started with the ICE/PIE frameworks, but he realized that they wouldn’t work.

He added more weight to impact.

This meant breaking the PIE framework down from 3 factors into as many as 12. Some of these factors assigned values based on how well the insights were triangulated.

The more channels of qualitative and quantitative data backed up a hypothesis or idea, the higher it was prioritized.

Simon also included criteria like “Whether the change to be tested was above the fold or not,” which indicated a greater chance of the insight being activated and validated, because more people would see the change and interact with it.

Despite all of this, scoring each criterion of the prioritization framework on a 1-10 scale did not pan out.

The scale was too broad and subjective. And it turned into a real nightmare.

There were 10 different values that could be assigned almost at random. Simon and his team were left wondering why certain ideas rose to the top while stronger candidates sank to the bottom.

(Caveat: Even the definitions of “weak” and “strong” are subjective. But if you keep testing highly prioritized ideas that turn out to have weak impact, that’s a signal to examine how your criteria are being weighted.)

So Simon sought the help of the best business analyst he knew.

“My Framework Doesn’t Work”

In the first iteration, they turned the 10-point scale into a three-point scale: “very little,” “average,” and “a lot.” This approach removed some of the subjectivity.

But it still wasn’t enough. Often they didn’t know what “average” meant, and some of the criteria didn’t fit a three-level approach.

The second iteration went binary: black and white, yes or no. Simon also started weighting the criteria according to what mattered for their own CRO maturity and their clients’ goals.

For example, triangulation was something they valued. The level of effort of a test also mattered, since they didn’t have a dedicated team of researchers, analysts, and developers.

The idea was to be process- and data-driven without getting bogged down by effort that wouldn’t yield the desired results.

Not long after, Simon saw a binary model published online which was endorsed by multiple CRO experts, and realized they had probably arrived at the same conclusion through a similar process.
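To make the binary idea concrete, here is a minimal sketch in Python. The criterion names and weights are hypothetical placeholders loosely based on the factors mentioned above (triangulation, above-the-fold placement, page traffic, effort); the article does not publish Simon’s actual criteria or weights. Each criterion is a yes/no answer, and an idea’s score is simply the sum of the weights of the criteria it satisfies, so there is no 1-10 judgment call left to argue about.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical criteria and weights, for illustration only.
# A higher weight means the team cares more about that yes/no question.
WEIGHTS: Dict[str, int] = {
    "triangulated": 3,       # backed by 2+ independent qual/quant sources?
    "above_the_fold": 2,     # is the change visible without scrolling?
    "high_traffic_page": 2,  # does it run on a page tagged as high-traffic?
    "low_effort": 1,         # can it ship without dedicated dev/research time?
}


@dataclass
class Idea:
    name: str
    # Binary answers: criterion -> True/False. Missing criteria count as "no".
    answers: Dict[str, bool] = field(default_factory=dict)


def score(idea: Idea) -> int:
    """Sum the weights of every criterion answered 'yes' (no partial credit)."""
    unknown = set(idea.answers) - set(WEIGHTS)
    if unknown:
        raise ValueError(f"unknown criteria: {sorted(unknown)}")
    return sum(w for crit, w in WEIGHTS.items() if idea.answers.get(crit, False))


def rank(ideas: List[Idea]) -> List[Tuple[str, int]]:
    """Return (name, score) pairs, highest score first."""
    return sorted(((i.name, score(i)) for i in ideas), key=lambda p: p[1], reverse=True)


if __name__ == "__main__":
    backlog = [
        Idea("Footer link copy", {"low_effort": True}),
        Idea("Pricing page social proof",
             {"triangulated": True, "above_the_fold": True, "high_traffic_page": True}),
    ]
    for name, s in rank(backlog):
        print(f"{s:>2}  {name}")
```

If a hypothesis the team knows is strong keeps landing near the bottom of `rank()`, that is a cue to revisit the weights rather than quietly drop the idea.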

Lesson #4: As a program leader, every time you review your prioritization framework and look at the rankings, you have to be critical. Review how each criterion contributes to the rankings; if a hypothesis you know is strong is ranked low, there’s an issue.

ICE -> RICE

Prioritization matters, and so does good decision-making. Life is too short to ship the wrong initiative. Life is too short for prioritizing the wrong test.

John

John recommends moving from ICE to RICE, where R stands for Reach: how many people will be exposed to the test once it ships.

Reconciling traffic volume with a binary scale is difficult, but Simon and his team found a way around it.

They tagged the pages that would receive the biggest chunk of testing traffic and then applied a binary criterion to the location of the test (as in “Will the test go on the pricing page?”).

They also included the question “Is this testable?” in the template for every idea they brought to the table. So anything that made its way into their version of the framework would already tick the “reach” box.
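For comparison with the binary workaround, RICE is commonly computed (following Intercom’s original write-up) as Reach × Impact × Confidence ÷ Effort. The sketch below is illustrative: the units (visitors per quarter for reach, a 0.25-3 impact scale, confidence as a fraction, effort in person-months) are assumptions, not something John or Simon specified, and the two example ideas are made up.

```python
def rice_score(reach: float, impact: float, confidence: float, effort: float) -> float:
    """RICE = (Reach * Impact * Confidence) / Effort.

    reach:      users exposed to the test per period (e.g. page visitors per quarter)
    impact:     expected effect per user on an ordinal scale (e.g. 0.25, 0.5, 1, 2, 3)
    confidence: 0..1, how sure you are about the reach and impact estimates
    effort:     person-months (any unit works if it is consistent across ideas)
    """
    if not 0 <= confidence <= 1:
        raise ValueError("confidence must be between 0 and 1")
    if effort <= 0:
        raise ValueError("effort must be positive")
    return reach * impact * confidence / effort


if __name__ == "__main__":
    # Hypothetical ideas: a high-traffic pricing page vs. a low-traffic blog template.
    pricing = rice_score(reach=20_000, impact=1, confidence=0.8, effort=2)
    blog = rice_score(reach=2_000, impact=2, confidence=0.5, effort=1)
    print(f"pricing page: {pricing:.0f}, blog template: {blog:.0f}")
```

Even with a smaller expected impact, the pricing-page idea scores higher here because ten times as many people would see it, which is exactly what the R adds to ICE.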

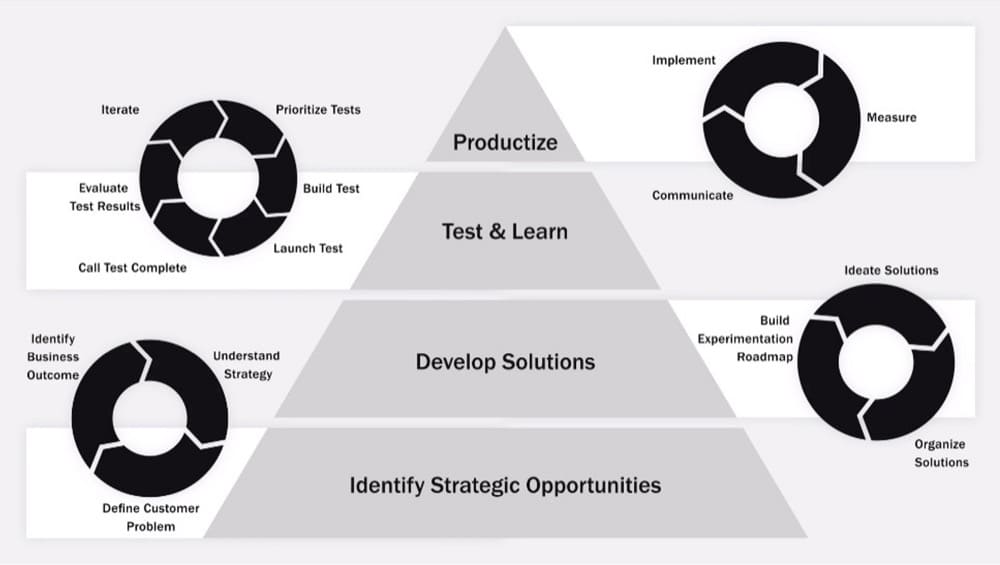

How John sees prioritization: The three layers of prioritization according to Reforge.

“I see prioritization in stages – at least three layers. Are those tests or initiatives aligned with what’s a priority for the business in the first place?”

Simon elaborates on what goes into the “testable template” that helps ideas reach the prioritization pipeline:

- Alignment with business goals

CRO isn’t a program; it’s a process. You don’t grow a business with numbers alone; you need internal alignment. So you need a criterion that asks whether you’re creating alignment.

- Funnel impact

Another criterion asks whether you’re optimizing for immediate impact. Loosely speaking, bottom-of-funnel (BOFU) tests beat top-of-funnel (TOFU) tests when it comes to impacting a business’s bottom line.

- Scroll depth

How many users are going to be impacted? If the change sits further down a page, fewer users will see it, so you won’t influence much behavior, which is the whole point of testing.

Buy-in Fails + How to Succeed

Whether it’s alignment with business goals or with internal teams, top-down buy-in is crucial.

Simon and his team didn’t initially succeed at this because they were often dealing with mid-level managers.

So they could only address the perceived pains and objections of this “client team,” who were then expected to build C-level buy-in: an uphill battle. This, in turn, slowed the ramp-up of the experimentation program and introduced unnecessary inefficiencies.

Lessons from Simon’s Struggle: The Pre-Buy

A few realizations from this struggle (which have since been validated by Simon’s more recent experiences at Conversion Advocates) are:

- When launching an optimization program it is important to work directly with CEOs/CMOs/Sales VPs/growth leaders. This simplifies the process of building buy-in.

- Secondly, when you have these movers and shakers on call, tap into their unique perspective of the business. They deal with the same customers but their touchpoints are different. If you can design experiments that help them achieve some of their goals (framed in the language they care about), CRO as a whole gets more credibility and support.

- Finally, optimization is usually viewed as a marketing-first activity. But if a program is enriched with cross-functional insights (through leaders from other departments), CRO suddenly becomes something everyone rallies around and views as “important.”

When you have these 3 points aligned, the conversation is direct and immediate.

Everyone understands the insights and agrees that the hypothesis will have the desired impact. You venture into your experiment with stakeholders who’ve in a sense “pre-bought” into the idea. It is a big step up from working with stakeholders who are disconnected and disjointed.

Now you can channel all of your energy into executing your ideas — actually running tests.

Leaders = Storytellers: Securing Buy-in

A leader should be a good storyteller. A CRO lead is no exception.

As you climb the career ladder, you become a storyteller, and you need to understand the stories the C-suite tells its teams to motivate them.

A great way to do this is through the OKR framework, specifically cascading OKRs, and to make conversion rate optimization part of that narrative.

Everything, Everywhere All at Once?

Because Simon has grappled with prioritization from the ground up and struggled with buy-in, the processes he follows now reflect his experience and hard-won lessons.

You can’t optimize everything at once.

Simon

This is what Simon does when he wants to create targeted strategic alignment:

He and his team review the 12-month goals of the business. This process encompasses priorities from all departments and functions.

They then draw up a 90-day growth plan broken into weekly sprints, focusing on a maximum of 3 KPIs that capture the essence of what the business is trying to move the needle on and grow.

3 [KPIs] is the golden number. We’re targeting a problem and every experiment is trying to chip away at a problem.

Simon

This keeps the experimentation program from getting too scattered and helps it double down on what works. Running 8 to 12 experiments a quarter is a significant improvement for most businesses.

Create a Flywheel of Efficiency and Excitement

This approach creates company-wide alignment on the core problems and sets a flywheel of efficiency and excitement in motion, because everyone feels the problem is worth solving and will have an impact across teams.

Part of the steps is getting people excited about testing.

John

What Makes a CRO Agency Great?

“What is the best agency? Is it the one with the biggest uplifts? Is it the one with the most noteworthy speakers?” – John

John proposes (based on Craig Sullivan’s masterclass) that the best agency is probably one that constantly transfers skills to the client, matures their program, and teaches them to fish for themselves.

Simon believes that skill transference is crucial.

When there is no skill transference, clients don’t advance and mature with the program. Their language and capabilities stay stuck in an era that the CRO program and the complexity of the tests being run have outgrown.

Pro Tip from John: A quote from Ben Labay at Speero sums up the importance of an agency and a client speaking the same language: “More often than not, language is the impediment to progress.”

Even with something like statistical significance, it is crucial to educate the client on what a specific significance level means and why it was chosen, and why a number like 86%, even though it looks strong, may not justify a decision for a given test because it carries higher risk.
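To illustrate why 86% may not be enough, here is a minimal sketch of a pooled two-proportion z-test using only Python’s standard library. The conversion counts and function names are hypothetical, and “confidence” here means 1 minus the two-sided p-value, which is how many testing tools display significance; other tools (including Bayesian ones) report different quantities. With these made-up numbers the result lands at roughly 86%: it would pass an 80% threshold but not the conventional 95% one.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for H0: control rate == variant rate (pooled z-test)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def is_significant(conv_a: int, n_a: int, conv_b: int, n_b: int, alpha: float = 0.05):
    """Return (confidence, decision): confidence = 1 - p, decision = p < alpha."""
    p = two_proportion_p_value(conv_a, n_a, conv_b, n_b)
    return 1 - p, p < alpha


if __name__ == "__main__":
    # Hypothetical test: 10,000 visitors per arm, 5.00% vs 5.47% conversion.
    for alpha in (0.05, 0.20):
        conf, ship = is_significant(500, 10_000, 547, 10_000, alpha=alpha)
        print(f"threshold {1 - alpha:.0%}: confidence {conf:.1%}, significant={ship}")
```

The same data produce opposite decisions depending on the threshold, which is why the significance level, and the risk it implies, is worth agreeing on with the client before the test runs.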

Simon and his team do “research insight presentations” with their clients. It’s all about greasing that efficiency and excitement flywheel.

This is also a form of alignment and education because they go over how each insight was collected and triangulated. And in the process, there is agreement around the potential test plan since the client team thoroughly understands where the proposed tests come from and what fed the experiment.

Education & skill transference is what allows the conversation to move from the admin of running tests to learning.

Simon’s Tip: Focus on the testing plan (the 90-day sprint). Then zoom out and talk about the 12-month business plan to reinforce alignment. We also do a victory lap with clients to celebrate wins:

- Did we solve another customer challenge?

- What did we learn from it?

- We also discuss recommendations in real time and have an ideation workshop to think through follow-up variants with the entire team

Simon and his team also provide context and perspective to avoid misinterpretation of test results, because CRO outputs are not absolute. A test gives a range of possible uplifts, and only the rollout shows the real numbers over the long run.

This work may seem insignificant. But contextualizing test results (together with the background of education already imparted) ensures that the client and agency have the right expectations.

Lesson #5: Good education leads to quality client questions.

John circles back to Craig’s work to explain what makes a good agency. He believes that one way to gauge how successful an agency has been at transferring knowledge is the evolving maturity of client questions.

An easy way to understand if clients are maturing is by assessing them based on the quality and maturity of questions they ask over time.

John

A quick example from Craig:

“My numbers are not matching up” – low maturity

“How can we look at confidence interval levels?” – high maturity
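The higher-maturity question is worth a concrete example. A confidence interval shows the range of lifts that are plausible given the data, rather than a single number. This is a minimal sketch of a normal-approximation (Wald) interval for the difference between two conversion rates, using hypothetical counts (5.00% vs 5.47% on 10,000 visitors per arm); real analyses may prefer other interval methods or whatever statistics their testing tool reports.

```python
import math
from statistics import NormalDist
from typing import Tuple


def diff_confidence_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                             level: float = 0.95) -> Tuple[float, float]:
    """Wald interval for (variant rate - control rate) at the given confidence level."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Unpooled standard error of the difference in proportions
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    diff = p_b - p_a
    return diff - z * se, diff + z * se


if __name__ == "__main__":
    for level in (0.95, 0.80):
        lo, hi = diff_confidence_interval(500, 10_000, 547, 10_000, level)
        print(f"{level:.0%} CI for absolute lift: {lo:+.2%} to {hi:+.2%}")
```

With these numbers the 95% interval spans zero (the data are still consistent with no lift or a small loss), while the 80% interval excludes it. Either way, the plausible absolute lift runs from roughly nothing to about one percentage point, which is the kind of context that helps set expectations before a rollout.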

Recipe for a Successful Experimentation Framework: The Missing Ingredient That Makes the Whole System Bland

“Asking someone to list one thing that leads to success is like boiling down the taste of a recipe to one ingredient.”

“So, reframing the question: what is the factor or facet whose absence would definitely take away from the overall success of this journey and the resulting program?”

Two things that tie together: having a process and applying it with rigor.

Simon

Simon recommends:

- Map out the test plan – your program, research, test velocity.

- Discuss how you will communicate and disseminate results company wide to keep building excitement.

- Rope in the C-suite since experimentation impacts all teams

A robust structure is half the game. But you need a strong team to support you.

Simon currently works with a team of 4 to 6 people for each client, covering the 14+ key skills that truly bring experimentation programs to life. It is nearly impossible to expect a one-person CRO show to make meaningful progress.

Growth, at the end of the day, is a team sport.

John

Quotes & Takeaways

- “We make peace with the fact that we don’t always have the best ideas.” – John

- “Keep working on your website even when things are good.” – Simon

- “Without direction, no wind is favorable.” – John

- The customer is the same. Insights from different teams aren’t.

- Top-down buy-in is crucial for experimentation success.

- Which is the best CRO agency? The one that teaches you how to fish for yourself.

- You don’t have to reinvent the wheel. Stand on the shoulders of giants.

- “Growth, at the end of the day, is a team sport.” – John

- You need a process, and you need to apply it with rigor.

- “Work in programs that are fully built out with teams that are locked and loaded. So you can solve roadblocks for customers, [instead of struggling with the programs.]” – Simon

Resources

Take this quiz to find out how well-versed you are with CRO (+ resources to level up)

- Starting Out:
  - CRO tips & book – Conversion Ideas
  - Convert’s origin story series, “Testing Mind Map,” where we highlight the diverse starts of CRO pros and the mental models they use to approach testing. (Start Here.)
  - Sign up for the best newsletters in the experimentation space. We have a handy dandy list for you!
  - Follow these stalwarts on LinkedIn. Because blogs are great, but raw vulnerability & in-the-trenches stories define optimization and testing maturity. 1st Round Up | 2nd Round Up

- Processes & Frameworks:
  - The CRO Mini Degree by CXL
  - The Technical Marketing Mini Degree by CXL (‘Cause experimentation without relevant & accurate data does more harm than good).
  - Read Trustworthy Online Controlled Experiments.

- Looking Beyond A/B Testing:
  - Apply for Experimentation + Testing at Reforge.

Written By

Sneh Ratna Choudhary

Edited By

Carmen Apostu