How to Run an Experimentation Meeting that Will Transform Your Company (& How I Do it at Workato)

For the most part, I hate meetings.

I’ve said it many times, in articles, Tweets, and to my colleagues.

Mainly, I dislike it when people use meetings as a crutch to avoid getting work done, or when people run meetings poorly and waste everyone’s time.

But as a leader, I recognize the importance of some meetings. Particularly, in my case, the weekly experimentation meeting.

That’s right – the cornerstone of the experimentation program at Workato is just one meeting. We don’t hold live daily standups (we do these asynchronously on Slack); instead, we have just one meeting at the start of the week, with some other artifacts and rituals sprinkled in to keep the machine moving.

This will be my first and only article diving deeply into the details of how I run this meeting, why I run it that way, and how I balance it out with other experimentation program tools (like emails, knowledge sharing databases, and readouts).

Before walking you through the minutes, I’d like to start by asking a high level question – why do this meeting in the first place?

What is the purpose of an experimentation team meeting?

Every meeting needs a purpose. If it doesn’t have a purpose, it shouldn’t happen.

The three utilities of my weekly experimentation meeting are as follows:

- Learning and insights sharing

- Prioritization of initiatives

- Identification and elimination of process bottlenecks

This means we eliminate most other talking points that don’t deliver on these from the meeting.

Experimentation is accelerated by learning

When you start building an experimentation program, you have to do the hard work of laying down the foundations.

- Getting a proper testing tool.

- Making sure it’s integrated with your analytics tool and you’re tracking data appropriately.

- Communicating with executives to set proper expectations for experimentation.

Once you start scaling experimentation, though, what you learn on each experiment will act as a potentiator for the rest of your program.

As you pass 5-10 experiments a month, how well you learn from winners, losers, and inconclusive tests will affect the success rate of the next batch of experiments you run. And all of this should be core in influencing how you prioritize your roadmap.

It may seem like a waste of time, but it’s not. Brian Balfour, founder of Reforge, also emphasizes the importance of learnings in running a growth meeting.

So we spend a large part of our meeting walking through learnings and sharing insights.

This is especially helpful if your meeting is cross-functional or has members from other teams on the call, as you often get perspectives that you normally wouldn’t.

For example, we’ll often get product marketers on our experimentation meeting to pop in and share recent discoveries from customer research or to share upcoming strategic narrative changes.

Things change; prioritization is agile

In my meeting, I want to make it clear where we’re aiming, what steps we’re taking to get there, and who is accountable and responsible for what.

That’s the next part of the meeting.

Flowing from what we’ve learned comes the question, “what are we going to do next?”

Our homepage experiment lost – so what did we learn and what will we do next?

A lot of prioritization is actually done asynchronously and on a quarterly basis – at least the largest experiments and broadest thematic buckets of our projects.

But we do leave a lot of wiggle room for small changes and iterations to the roadmap based on strategic changes and what we’ve learned from past experiments, and we use part of the meeting to move things around to reflect that.

Identification and elimination of process bottlenecks

Finally, it’s important to uncover process bottlenecks, especially if you’re in the scaling phase of an experimentation program.

This requires systems thinking, and it’s the core job of the team leader or program manager. Effectively, you’ll need to identify and measure each step of the experimentation process from idea all the way to production.

Which steps take the longest time, or rather, more time than expected? And how can you use this weekly meeting to identify those delays and time lags so you make sure the rest of the process isn’t overly affected?
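One way to make this measurable is to log the date a card enters each step and compare the time spent per step against what you expected. The sketch below is a minimal illustration, not a description of any real setup: the step names, dates, and expected durations are all made-up assumptions.

```python
from datetime import date

# Hypothetical expected duration (in days) for each step; tune to your team.
EXPECTED_DAYS = {
    "Experiment document": 3,
    "Development": 5,
    "Quality assurance": 2,
}

def days_per_step(entered):
    """Return days spent in each step, given the date each step was entered.

    `entered` is an ordered list of (step_name, date_entered) pairs; the
    last pair only marks the end of the previous step.
    """
    durations = {}
    for (step, start), (_, end) in zip(entered, entered[1:]):
        durations[step] = (end - start).days
    return durations

def slow_steps(durations, expected=EXPECTED_DAYS):
    """Return steps that took longer than expected, with the overrun in days."""
    return {
        step: days - expected[step]
        for step, days in durations.items()
        if step in expected and days > expected[step]
    }

# Made-up history for a single experiment card.
history = [
    ("Experiment document", date(2024, 1, 1)),
    ("Development", date(2024, 1, 3)),
    ("Quality assurance", date(2024, 1, 12)),
    ("Running", date(2024, 1, 13)),
]

durations = days_per_step(history)
overruns = slow_steps(durations)
print(overruns)  # {'Development': 4}
```

Run across all cards, the same comparison shows which step overruns most often, which is the one worth raising in the meeting.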

This is a critical part of the weekly meeting and, more generally, of keeping your experimentation train on the tracks.

What to avoid in experimentation meetings

You can run your meetings however you want, but for the weekly team experimentation meeting, two things tend to be a massive waste of time.

- Pulling ad-hoc data or asking anyone to do so

- Unstructured brainstorming and ideation

When you pull ad-hoc data or ask someone to do so, make a note of that. If you repeatedly ask for a bit of data, add that to your process checklist. It shouldn’t be something that comes up every meeting (it wastes a lot of time and focus). This is where dashboards and reporting are useful.

On the unstructured brainstorming, I’m actually not a hater of that in general.

But there’s a time and place and typically a method to the madness.

When you spend an inordinate amount of time fielding ideas during your weekly meeting, you reduce the time spent identifying process bottlenecks and making sure you’re running the right experiments.

If a random good idea comes up, that’s fine. If it devolves into a loosely structured brainstorm session, you have to put your foot down and take it offline.

How I run my weekly experimentation team meetings

Alright, so how do I actually run my weekly experimentation team meeting?

I’ll have to obfuscate some details around the actual experiments we run and the results, of course, but I’ll cover each part in as much detail as I can.

The people

First up, the people who attend every week are part of the core strategic experimentation functions. It’s a small group that includes analysts, performance marketers, web developers, and product managers. My manager also attends every so often, but not on every call.

I have separate meetings with our design team since they are a center of excellence at our company.

These people attend regularly, and then I invite “guests” every once in a while from adjacent teams like design, brand marketing, or product marketing. For these guest calls, we usually just set up a separate session, since the purpose is usually to introduce new ideas, improve team communication, or brainstorm.

To make this more generic, in most cases your experiment meeting should include people who make decisions about strategy and people who execute on that strategy. Typically, this is:

- Leader / program manager

- Product manager

- Developers

- Designers

- Analysts

The schedule

I run this meeting every Monday at 3pm Central time. Monday mornings are great for prepping and getting deep work done, or tying up loose ends from the preceding week. By afternoon, the team is well prepared to discuss the upcoming week. This also lets us all know what we’re supposed to be working on and prioritizing throughout the week.

Then, throughout the week, we’ll have asynchronous check ups and 1:1 meetings to discuss the specifics of what we decided we would work on during Monday’s team meeting.

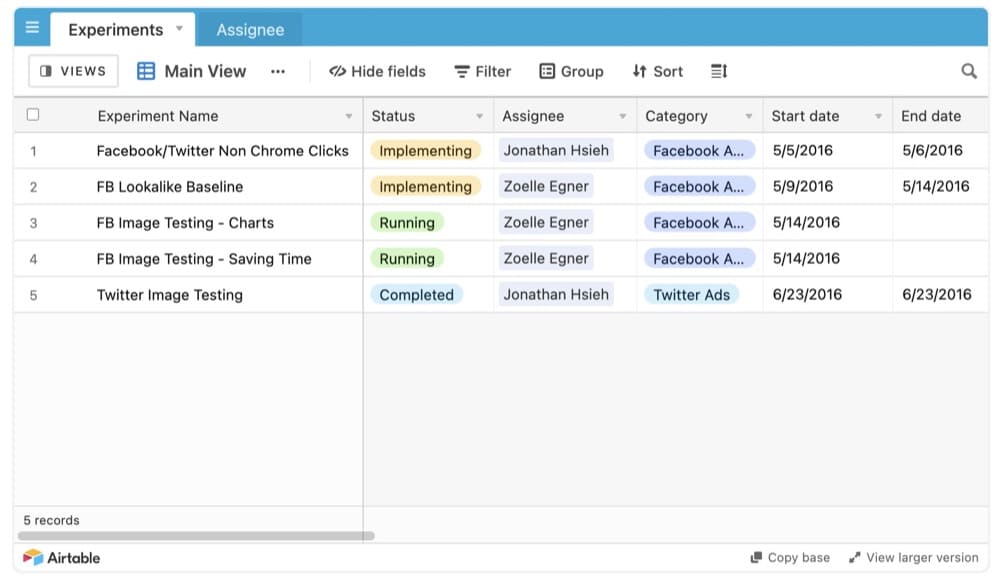

We don’t use a slide deck at this time, but we may incorporate one. If we do, I’ll keep individual sections tied to our time limits listed above, and the deck will be sent out Friday of the preceding week (with the goal of not spending more than 20 minutes Monday morning putting together each person’s section). At the moment, we mainly walk through our Airtable database, which we use for project management.

The agenda

Here’s my exact agenda:

Meeting length: 45 minutes

- Informal catch up: 5-10 minutes

- Learnings from concluded experiments / research: <10 minutes

- Prioritizing / grooming the backlog: <10 minutes

- Identifying bottlenecks / blockers: rest of the time (10-15 minutes)

We already walked through each of the above sections and their purpose.

I’ll give you a few tools and frameworks that may help with each of the above as well.

Learnings from concluded experiments / research: <10 minutes

The main thing you need here is a good documentation system.

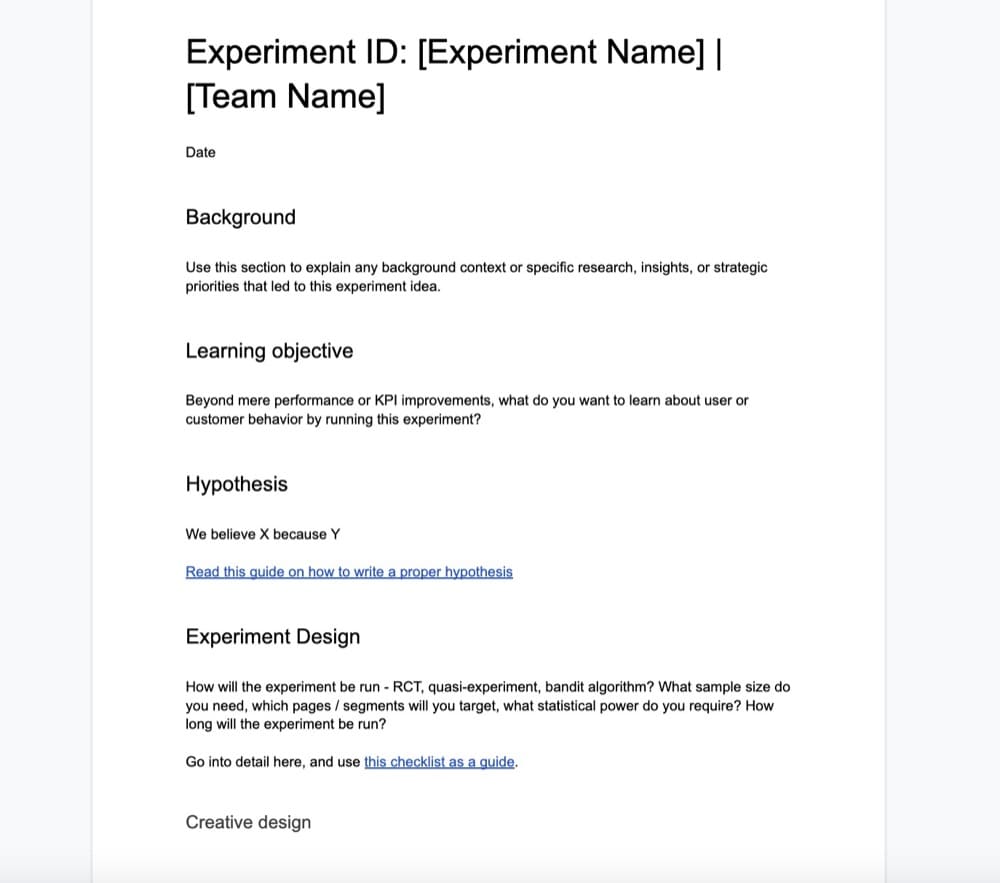

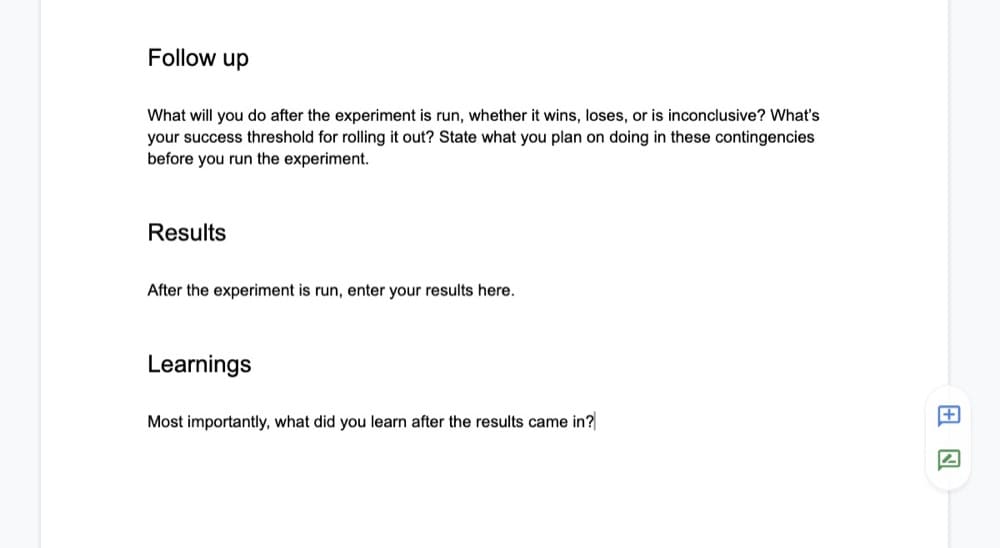

Before an experiment is ever run, I require an experiment document to be filled out with the following sections:

- Background

- Learning objective

- Hypothesis

- Prediction (which is different from a hypothesis)

- Experiment design (quant and creative)

- Follow up (what happens upon conclusion)

Then after the experiment is run, two more sections are filled out:

- Results

- Learnings
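If you keep these documents in a structured tool, the template can be thought of as a record with six sections required up front and two filled in afterward. Here is a rough sketch of that idea; the class name, field names, and example text are illustrative assumptions, not a schema from any particular tool.

```python
from dataclasses import dataclass, fields
from typing import Optional

@dataclass
class ExperimentDoc:
    # Filled out before the experiment runs.
    background: str
    learning_objective: str
    hypothesis: str
    prediction: str
    experiment_design: str
    follow_up: str
    # Filled out after the experiment concludes.
    results: Optional[str] = None
    learnings: Optional[str] = None

    def missing_before_launch(self):
        """Names of pre-launch sections that are still blank."""
        pre_launch = [
            f.name for f in fields(self)
            if f.name not in ("results", "learnings")
        ]
        return [name for name in pre_launch if not getattr(self, name).strip()]

    def ready_for_meeting(self):
        """True once results and learnings are both written up."""
        return bool(self.results and self.learnings)

# Made-up example content.
doc = ExperimentDoc(
    background="Homepage hero has not changed in a year.",
    learning_objective="Do visitors respond to a product-led headline?",
    hypothesis="A clearer value proposition reduces confusion.",
    prediction="",  # left blank on purpose to show the check
    experiment_design="A/B test, 50/50 split, demo requests as primary metric.",
    follow_up="If it wins, roll out; if not, test a different angle.",
)

print(doc.missing_before_launch())  # ['prediction']
print(doc.ready_for_meeting())      # False
```

A check like `missing_before_launch` is one way to enforce the "no document, no experiment" rule before a card moves forward.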

And in our meeting, we briefly cover the results and more deeply cover the learnings section. Whoever was the experiment “owner” is the one who presents the learnings, and then there is a brief discussion as to how we can apply them. Sometimes this means testing at a broader scale or simply scaling the learnings to similar experiences. Sometimes this means iterating in a meaningful way.

Your two tools of choice in this step should be:

- The experiment document (click here to steal my template)

- The experiment knowledge share system / Wiki

Prioritizing / grooming the backlog: <10 minutes

There’s debate on the utility of this part, but I like keeping it a group discussion because, unlike in simple web production or product management, experimentation is subject to many external pressures, blockers, and whims.

For example, we may not have the buy-in for a particularly large experiment we had planned, or we may have a blocker from design. But we still want to maintain our testing cadence, so in that event, we can remain agile and move up a lower effort idea from the backlog.

In some organizations, this decision making is solely placed on the experimentation team leader. I like to open the discussion up to the group.

Why?

First, I don’t want to crush the team under the weight of my prioritization scores. Part of the value of experimentation is in learning things you didn’t expect to learn, and by allowing autonomy of prioritization from others on the team, we get ideas on the table that I wouldn’t have put there. This also helps improve overall team communication and rapport.

Second, people are more excited to work on things they chose to work on. If I start assigning all ideas, I’m limiting the passion people can feel for individual experiments (which, in many ways, limits the potential of the experiment to win).

So in this section, we’re basically working from two tools:

- The project management kanban (we use Airtable – here’s a great template).

- The prioritization matrix (we use PXL).
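The "move up a lower effort idea" rule described above is simple enough to sketch. The example below is not the PXL framework itself; it assumes each backlog idea already carries a priority score from whatever matrix you use, plus a hypothetical effort estimate and a blocked flag.

```python
from dataclasses import dataclass

@dataclass
class Idea:
    name: str
    score: float        # from your prioritization matrix (higher is better)
    effort_days: int    # hypothetical effort estimate
    blocked: bool = False

def next_up(backlog, max_effort_days):
    """Pick the highest-scoring idea that is unblocked and fits the effort budget."""
    candidates = [
        idea for idea in backlog
        if not idea.blocked and idea.effort_days <= max_effort_days
    ]
    return max(candidates, key=lambda idea: idea.score, default=None)

# Made-up backlog: the big experiment is blocked, so a smaller one moves up.
backlog = [
    Idea("Homepage redesign", score=9.0, effort_days=15, blocked=True),
    Idea("Pricing page copy test", score=7.5, effort_days=3),
    Idea("CTA button wording", score=6.0, effort_days=1),
]

pick = next_up(backlog, max_effort_days=5)
print(pick.name)  # Pricing page copy test
```

In practice the group discussion does this filtering; the sketch just makes the rule explicit so the testing cadence does not stall when the top idea is blocked.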

Identifying bottlenecks / blockers: rest of the time

Finally, identifying bottlenecks.

This is hard. In the beginning, it comes down to two things:

- Finding patterns in late deliveries

- Asking the team qualitative, open-ended questions

In my Airtable (which I’m sorry to say I can’t share), I have four columns in each card:

- Design due

- Design delivered

- Development due

- Development delivered

This is in addition to the Kanban steps we follow:

- Idea

- Experiment document in progress

- Experiment document in review

- In development

- Quality assurance

- QA’d and ready to launch

- Running

- Concluded

- Pushed to production

This allows me to see where things are falling behind schedule. If, for example, we have run 10 tests yet none of them have been implemented, we have a production problem. Or perhaps our experiments stall out in quality assurance and we have a backlog of ready-to-publish experiments waiting to launch.

In the development stage, we also want to see if we’re waiting on design or developers to create the experience. If we repeatedly fall behind, it means we need to refactor the priorities of these teams or potentially increase headcount.
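With due and delivered dates on every card, spotting a pattern in late deliveries is mostly arithmetic. A minimal sketch, assuming the four date fields are exported as plain records; the field names, card names, and dates here are made up for illustration.

```python
from datetime import date
from statistics import mean

# Made-up cards; None means the work has not been delivered yet.
cards = [
    {"name": "Test A",
     "design_due": date(2024, 3, 4), "design_delivered": date(2024, 3, 8),
     "dev_due": date(2024, 3, 11), "dev_delivered": date(2024, 3, 11)},
    {"name": "Test B",
     "design_due": date(2024, 3, 6), "design_delivered": date(2024, 3, 9),
     "dev_due": date(2024, 3, 13), "dev_delivered": date(2024, 3, 14)},
    {"name": "Test C",
     "design_due": date(2024, 3, 11), "design_delivered": date(2024, 3, 11),
     "dev_due": date(2024, 3, 18), "dev_delivered": None},
]

def average_days_late(cards, team):
    """Average days late for one team ('design' or 'dev'), skipping undelivered work.

    Early deliveries count as zero days late rather than offsetting late ones.
    """
    delays = [
        max((card[f"{team}_delivered"] - card[f"{team}_due"]).days, 0)
        for card in cards
        if card[f"{team}_delivered"] is not None
    ]
    return mean(delays) if delays else 0

design_late = average_days_late(cards, "design")
dev_late = average_days_late(cards, "dev")
print(f"design: {design_late:.1f} days late, dev: {dev_late:.1f} days late")
```

With this sample data, design runs later on average than development, which is the kind of signal that would prompt a conversation about priorities or headcount.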

Qualitative questions sound like this:

- Does anyone need any support from me this week?

- Are there any potential blockers to accomplishing our goals this week?

The answers should let you fix bottlenecks in the near term and also spot recurring patterns that need a longer-term fix.

Filling in the gaps: other useful experimentation program tools

The experimentation team meeting isn’t the only tool that should be in your toolkit as a manager.

In fact, the biggest mistake I see experimentation managers make is trying to do it all in one meeting.

Rather, you want to spread out your rituals and artifacts so you’re using the best tool for each job you want to accomplish.

The experimentation team meeting accomplishes three things:

- Learnings and insights

- Prioritization and work allocation

- Blocker identification and fixes

Other experimentation program goals you might care about are as follows:

- Gaining ideas from other teams

- Keeping executives and stakeholders in the loop on ROI and results

- Sharing results with other teams to spread knowledge outside of experimentation

Depending on your organization, you may have more to work on, like keeping experimentation scoreboards or working with data scientists on DataOps processes that automate parts of your analysis or platform.

But for the above purposes, I find these tools to be low effort and highly effective:

- The experimentation review email

- The cross-functional experimentation readout meeting

- The experimentation archive / knowledge sharing tool

The experimentation review email

Your experimentation team meeting shouldn’t be spent going over results, for the most part. That is done more effectively in a dashboard and a weekly email that you, the program manager, send out to the rest of the team and any interested parties.

It should include:

- Past experiments that have concluded + results

- Currently running experiments

- Future experiments

Any self-serve analytics and dashboards can also be included here. I like to create visually appealing results charts and link to more details for the nerdy ones in the group. But by and large, your VP isn’t going to care much about the detailed statistics of every individual test. So this email is to gain visibility for your efforts and share high level results and learnings.
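If your experiments live in a database, the skeleton of this email can be generated rather than written by hand. The sketch below assumes each experiment has a status and an optional one-line summary; the status values, section headings, and sample experiments are all hypothetical.

```python
from collections import defaultdict

# Made-up experiments; status values are assumptions for this sketch.
experiments = [
    {"name": "Homepage hero copy", "status": "concluded",
     "summary": "Lost; details in the experiment doc."},
    {"name": "Pricing page layout", "status": "running", "summary": ""},
    {"name": "Demo form length", "status": "planned", "summary": ""},
]

# The three sections of the email, in the order they should appear.
SECTIONS = [
    ("concluded", "Concluded experiments and results"),
    ("running", "Currently running"),
    ("planned", "Coming up"),
]

def build_review_email(experiments):
    """Return the plain-text body of the weekly review email."""
    by_status = defaultdict(list)
    for exp in experiments:
        by_status[exp["status"]].append(exp)

    lines = []
    for status, heading in SECTIONS:
        lines.append(heading)
        for exp in by_status.get(status, []):
            detail = f": {exp['summary']}" if exp["summary"] else ""
            lines.append(f"- {exp['name']}{detail}")
        lines.append("")  # blank line between sections
    return "\n".join(lines).rstrip()

email_body = build_review_email(experiments)
print(email_body)
```

The charts and links to detailed statistics would still be added by hand; the point is only that the three-part structure stays the same every week.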

The cross-functional experimentation readout meeting

Experiment teams should never act as a territorial silo. If you do, you limit the spread of the knowledge your team gains, and you also limit the inflow of disruptive ideas.

So every quarter, I like to run a cross-functional experimentation readout where we walk through all the experiments we’ve run and high level learnings, and then we open up the microphone for the rest of the teams to comment and pitch ideas.

This gives the other teams – for me, generally product marketing, brand, product, and demand gen – a look at what we’ve learned. Perhaps they can use it to influence their work, messaging, and design.

It also allows them to put things on our radar we may not have known about – an upcoming campaign, an experiment run on the onboarding flow, etc.

The experimentation archive / knowledge sharing tool

Finally, the most underrated tool in an experimentation program is some place to store all your results and learnings. Preferably, this is a tool with good search and tagging functionality.

This allows highly interested and engaged parties to simply look for themselves at what has been run.

Personally, I use a combination of Airtable and Google Docs. But you can use Notion, Confluence, or a dedicated A/B test archive tool like Effective Experiments.

Conclusion

The experimentation team meeting is an important ritual that can make or break your team’s efficiency (experiment velocity and quality) and effectiveness (how visible and respected your team is in the org).

Treat the meeting and the participants with respect, and stay true to its purpose. Avoid distractions and scope creep – there are other tools for brainstorming and reporting. And quickly you’ll find that instead of dreading another meeting, you look forward to this one because it’s highly productive. That’s where we’ve landed anyway.

Written By

Alex Birkett

Edited By

Carmen Apostu