Full Stack Experimentation: A Comprehensive Guide

Every modern digital organization needs to adopt full stack experimentation.

Whether you’re a startup or a several-decades-old behemoth, the ability to test and optimize every part of your business—from the first line of code to the last touchpoint of sales—is crucial to winning. Full stack testing provides insights for continuous learning and delivering higher quality products.

In this comprehensive guide, we’ll help you unlock this power through a complete understanding of this critical component of successful modern businesses.

Let’s start at the beginning…

What Is Web Experimentation?

Web experimentation is the process of using controlled tests to evaluate and improve the performance of a website or web application.

It involves creating and deploying different versions of a website or a specific feature and measuring their impact on user behavior and business metrics.

Examples are:

- A/B/n testing

- Multivariate testing

- Usability testing

- Functionality testing

- Security testing

- Database testing, etc.

Full stack experimentation encompasses all of the above and more.

Analyzing the results of these tests produces insights for data-driven decisions, web optimization, enhanced user experiences, increased conversions, and achieving business goals.

Challenges in Web Experimentation

Web experimentation has come a long way since the first experiment in 1995. In its almost three-decade history, it has picked up some challenges along the way, especially as internet usage grew and the web evolved.

One of the most pressing issues is the phasing out of third-party cookies by more and more browsers, which can lead to skewed A/B test results. A/B testing tools rely on cookies to remember which visitor was bucketed in which variation (control or the treatment), but with cookies no longer available, testers must find new solutions.

Then we also have the impact of GDPR and e-Privacy regulations. As a tester, you have to seek consent from visitors before you can bucket them into A/B tests, unless you choose a tool that uses aggregated or anonymized data, like Convert.

On the other hand, simple, straightforward ways of obtaining consent aren’t forthcoming, or known to everyone. Consider the challenge Lucia van den Brink discussed on LinkedIn:

How do you optimize the “accept” button placement in cookie walls when accurate experimenting is restricted by user consent? (You need to obtain consent via the popup you intend to test).

Lukas Vermeer suggested a genius solution: “You can randomise and treat before they accept.” This isn’t the first option many companies and testers would intuitively consider.

As Marianne Stjernvall excellently points out, “The result of your optimization program will only be as good as your data quality.”

Collecting consent (without dark patterns) to bucket visitors into A/B tests, and then consistently serving each visitor the same variation to keep reports consistent and prevent data pollution, is becoming more and more difficult.

Furthermore, veterans like Tim Stewart believe that data hygiene has always been questionable in the optimization and experimentation space.

Even before the cookie disaster and consent complication, many sprawling businesses did not understand what was broken in their processes. And they may have tested on a mess of unseen issues, taking the outputs of these tests as gospel and moving in the wrong direction at breakneck speed.

One potential solution to these challenges is something you may have heard a lot: server-side testing.

It offers some relief to the crumbling cookie situation. However, as Jonny Longden cautions, “it is by no means a panacea”.

Instead, server-side testing is one building block in the practice of full-stack and robust experimentation across products and touchpoints. And it is what savvy businesses are using to feed all their data to their experimentation system, resulting in better-informed hypotheses and decisions.

What Is Server Side Testing?

Server-side testing is a type of web experimentation where the experimentation tool works on the server and not inside the user’s browser. Once a user requests a page or feature, it is modified on the server and delivered to the user, based on predefined algorithms and the bucket the user belongs to.
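To make the idea of bucketing on the server concrete, here is a minimal sketch in Python. It assumes you have a stable user ID (for example, a logged-in account ID) and uses a hash of that ID to pick a variation, so the same user gets the same experience on every request without a browser cookie. The function names, the experiment key, and the page strings are hypothetical and for illustration only; commercial tools use their own assignment logic.

```python
import hashlib


def assign_variation(user_id: str, experiment_key: str,
                     variations=("control", "treatment")) -> str:
    """Deterministically map a user to a variation for one experiment."""
    # Hash the experiment key together with the user ID so the same user
    # can land in different buckets across different experiments.
    raw = f"{experiment_key}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(raw).hexdigest()
    # Read the first 8 hex digits as an integer and spread it evenly
    # over the available variations.
    bucket = int(digest[:8], 16) % len(variations)
    return variations[bucket]


def render_cart(user_id: str) -> str:
    """Hypothetical request handler: choose the page version on the server."""
    variation = assign_variation(user_id, "cart-redesign")
    if variation == "treatment":
        return "new cart page"
    return "current cart page"
```

Because the assignment depends only on the user ID and the experiment key, any server can recompute it and return the same answer, which is what keeps a visitor in one variation for the life of the test.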

This allows for testing deeper aspects of the website such as the logic and functionality of different versions.

Server-side testing differs from client-side testing, where the experimentation tool runs JavaScript inside the user’s browser to alter the content they see based on your targeting rules. (The browser is the “client”.)

Now…

What Is Full Stack Experimentation? How Is It Different from Server Side Testing?

While server side testing is a key technology that makes robust experimentation possible, full-stack refers to a more comprehensive scope of experimentation, not just website testing.

Full stack experimentation is testing that encompasses all aspects of a business, from the website to every touchpoint and experience delivery mechanism. This means you can test across all channels, devices, and customer interactions to gain a holistic view of the customer experience.

Want to pit two different cart experiences against each other? You are working in the code-driven, substantial change realm of testing, a part of full stack experimentation.

What about testing the devices you sell your buyers to improve performance and engagement? Test via the Internet of Things? That’s full-stack testing. It’s not limited to webpages; it could be an app layout or a recommendation algorithm as well.

It can also include product feature testing, allowing businesses to test new ideas and features before rolling them out to their customers. Companies like Netflix are the epitome of full stack testing, leveraging the practice to optimize their platform and create a better user experience for their customers.

Product Experimentation

Companies looking to constantly satisfy their users with great product experiences and win market share adopt product experimentation.

This is in contrast to making “educated guesses” and blindly following the “founders’ vision”. Instead, it’s meeting real market needs in the way users request them to be met, by testing new features and changes in a controlled environment.

This is a core approach in the Lean Startup methodology, which uses validated learning, experimentation, and iterative product releases to shorten product development cycles, measure progress, and gain valuable customer feedback.

One key component of product experimentation is the use of feature flags. They are on/off switches that allow developers to enable or disable specific features without requiring a full deployment. This way they can gradually roll out new features to some users and test different versions.

Feature flags also enable testing pricing models, user interfaces, and other aspects of the product. They’re a key part of the full stack experimentation experience.
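As a rough illustration of the on/off switch and gradual rollout described above, the sketch below models a flag in Python. The `FeatureFlag` class, its fields, and the hashing scheme are assumptions made for this example; they are not the API of any tool discussed in this guide.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class FeatureFlag:
    key: str
    enabled: bool = False      # master on/off switch (acts as a kill switch)
    rollout_percent: int = 0   # share of users, 0 to 100, who get the feature

    def is_on_for(self, user_id: str) -> bool:
        """Return True if this user should see the feature."""
        if not self.enabled:
            return False
        raw = f"{self.key}:{user_id}".encode("utf-8")
        digest = hashlib.sha256(raw).hexdigest()
        # Map each user to a stable slot from 0 to 99. Raising
        # rollout_percent only adds users; nobody who already has the
        # feature loses it.
        slot = int(digest[:8], 16) % 100
        return slot < self.rollout_percent


new_checkout = FeatureFlag(key="new-checkout", enabled=True, rollout_percent=10)
if new_checkout.is_on_for("user-42"):
    print("serve the new checkout")
else:
    print("serve the existing checkout")
```

Setting `enabled` to `False` turns the feature off for everyone without a deployment, and increasing `rollout_percent` step by step widens the audience.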

A/B Testing vs. Full Stack Experimentation

We know A/B testing as a form of experimentation that involves testing two different versions of a single element on a webpage or application to determine which one performs better.

On the other hand, full-stack experimentation is several steps ahead of A/B testing and involves testing across every layer of the business, including the testing of substantial changes in your product code.

Full stack testing is especially common in product and software development circles where the entire software technology stack, from the front-end user interface to the back-end database and everything in between, is experimented upon.

It enables agile development, CI/CD, and DevOps practices to deliver high-quality software products quickly and efficiently through continuous testing. This is somewhat different from the way web teams use experimentation to deliver optimized user experiences on websites:

Product and web testing have been on a converging path for the last several years. Testing platforms and web technology have gotten more advanced, and web teams have adopted more product methodologies. However they do have their differences. Web testing has in the past been reactive where product testing has been more proactive.

The experimentation roadmap for a product team would be validating planned features where a web team would be less interested in validation and more interested in immediate iterative impact on KPIs. For web teams, a test winning and driving revenue has at times been more important than the idea that is being tested.

This approach certainly does have its place especially where there may not be a defined roadmap, and agility is important. Quick self-serve web experiments will always have a use-case, for example for optimizing paid landing pages.

Jonathan Callahan, Product & Web Experimentation

Why Is Full Stack Experimentation the Future?

There’s a massive opportunity for modern businesses today to grow fast through unprecedented access to their customers. With a market-driven approach, they can now constantly measure how changes, such as feature updates, impact their key business metrics rather than making up-front guesses.

Full stack experimentation makes this possible.

While traditional A/B testing has limitations, such as only working on completed applications in a few aspects of a business, full-stack testing offers rich aggregation of business-wide insights leading to better outcomes backed by data.

This also leads to:

- Faster innovation

- Shorter time to value

- Reduced operational and performance risks

- Better security

- Improved site performance

This, in turn, protects businesses from making changes that degrade the overall customer experience and hurt the bottom line. Case in point: Digg.

Different Experiment Types in the Full Stack Setting

A range of tests makes up the full stack experience.

1. A/B Testing

A/B testing is a popular type of experimentation where two versions of a webpage, app layout, or recommendation algorithm with one specific element changed are compared to observe the effect on performance. One version (A) is called the control, and the other (B) is called the treatment. Traffic is split between the two versions.
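One common way to compare the control and the treatment is a two-proportion z-test on conversion rates. The sketch below is a minimal version using only Python’s standard library; the function name and the sample numbers are illustrative assumptions, and experimentation platforms may use different statistical methods.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare conversion rates of control (A) and treatment (B).

    Returns the z statistic and the two-sided p-value. Assumes at least
    one conversion and one non-conversion overall, otherwise the
    standard error is zero.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Illustrative numbers: 100 of 1,000 visitors convert on A, 150 of 1,000 on B.
z, p = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference is unlikely to be noise, provided the sample size was fixed in advance and the result was not checked repeatedly along the way.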

2. Multivariate Testing

Multivariate testing is similar to A/B testing, but instead of comparing two versions, it tests multiple combinations of a number of elements at the same time. You use this when you want to see what combination of elements brings the best outcome.
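The number of combinations grows quickly as elements are added, which a short Python sketch makes visible. The element names and values below are made up for illustration.

```python
from itertools import product

# Hypothetical page elements and the options to test for each one.
elements = {
    "headline": ["Save time", "Save money"],
    "button_color": ["green", "orange"],
    "hero_image": ["photo", "illustration"],
}


def build_combinations(elements: dict) -> list:
    """Return every combination of element options as a list of dicts."""
    names = list(elements)
    return [dict(zip(names, values)) for values in product(*elements.values())]


combinations = build_combinations(elements)
print(len(combinations))  # 2 * 2 * 2 = 8
```

Each combination needs enough traffic of its own, so three elements with two options each already split visitors eight ways.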

3. Usability Testing

Usability testing is a type of test where users are asked to complete tasks with an application or website while testers observe them. The goal is to gather insights about the user experience and identify ways to improve it.

4. Functional Testing

Functional tests include unit testing, integration testing, and regression testing. The aim here is to verify that the application or website works according to requirements, without bugs or other issues.

5. Localization Testing

This type of testing examines how well the app or website’s appearance, content, and functionality adapts to users in different cultures or languages. It often involves user surveys, interviews, and A/B testing.

6. Cross-browser and Cross-platform Testing

This determines if an app performs well on different browsers (Chrome, Safari, Firefox) and platforms (Windows, Linux, macOS).

But wait, there’s more… check this article to find out more types of tests you can run on your site (and when to run them).

Full Stack Experimentation Infrastructure (Tools Comparison) & Methodology

The full stack experimentation methodology relies heavily on data and analytics to make informed product change decisions — from the front-end user interface to the back-end infrastructure.

Here’s how it should work:

Steps to Implement Full Stack Experimentation

Ensure Statistical Rigor

The stakes are always higher in full stack experimentation. It is riskier than traditional A/B testing as it is easier to fall victim to poor statistical rigor.

One instance that illustrates this is making fundamental changes, such as changing how an algorithm works to deliver the results that keep your business afloat (example: Uber Eats). You must ensure issues such as peeking, p-hacking, interference, and sample ratio mismatch (SRM) aren’t just discussed, but actively managed.

Bigger swings = bigger risks = more learning.
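Of the issues named above, sample ratio mismatch (SRM) is the easiest to check automatically: it compares how many users actually landed in each group with the split you intended. The sketch below uses a chi-square goodness-of-fit test for two groups, written with Python’s standard library only. The function name and the alert threshold are assumptions for this example; teams pick their own thresholds.

```python
import math


def srm_p_value(control_count: int, treatment_count: int,
                expected_control_share: float = 0.5) -> float:
    """Chi-square goodness-of-fit test for a two-group traffic split."""
    total = control_count + treatment_count
    expected_control = total * expected_control_share
    expected_treatment = total - expected_control
    chi2 = ((control_count - expected_control) ** 2 / expected_control
            + (treatment_count - expected_treatment) ** 2 / expected_treatment)
    # With two groups there is one degree of freedom, and the chi-square
    # survival function reduces to erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


# Illustrative counts for an intended 50/50 split.
p = srm_p_value(5000, 5500)
# Assumed convention: a strict threshold, so only clear mismatches alert.
if p < 0.001:
    print("Possible SRM: investigate before trusting the results")
```

A very small p-value means the observed split is unlikely under the intended ratio, which usually points to a bug in assignment or tracking rather than a real effect.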

Consistently Choose the Right Test

Examine the level of risk, the complexity of your systems, desired outcome, and available resources when choosing your test. In web experimentation, for example, the default is A/B testing.

But other types of tests such as multivariate and split URL testing may be more appropriate in certain situations.

Put Up Concrete Guardrails

Guardrail metrics ensure that while you’re testing to improve a key performance indicator (KPI), no other KPI is negatively impacted.

For example, if you’re testing a new feature that’s designed to improve average time on site, you can set up guardrail metrics around user engagement and revenue. So, while you’re improving time on site, you’re not doing that at the expense of user engagement and revenue.

This manages the risk of tunnel vision on a single success metric.
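A guardrail check can be as simple as comparing each protected metric in the treatment against the control and flagging any drop beyond a tolerance you set. The sketch below is a deliberately simplified Python version: the metric names, values, and tolerances are hypothetical, and it compares point estimates only, whereas a production setup would also test whether the drop is statistically significant.

```python
def check_guardrails(control: dict, treatment: dict,
                     max_relative_drop: dict) -> list:
    """Return the guardrail metrics that dropped more than allowed."""
    breached = []
    for metric, allowed_drop in max_relative_drop.items():
        baseline = control[metric]
        relative_change = (treatment[metric] - baseline) / baseline
        # A negative change larger than the tolerance breaches the guardrail.
        if relative_change < -allowed_drop:
            breached.append(metric)
    return breached


# Hypothetical results for a test aimed at improving time on site.
control = {"revenue_per_visitor": 2.00, "engagement_rate": 0.40}
treatment = {"revenue_per_visitor": 1.80, "engagement_rate": 0.41}
limits = {"revenue_per_visitor": 0.05, "engagement_rate": 0.05}

print(check_guardrails(control, treatment, limits))  # ['revenue_per_visitor']
```

Here revenue per visitor fell by about 10%, more than the 5% tolerance, so the test would be flagged even if time on site improved.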

Follow a Full Stack Experimentation Workflow

A great workflow illustrates a well-defined process for running experiments, collecting data, and analyzing results.

Take inspiration from these:

- The Netflix Experimentation Platform

- Microsoft’s Experimentation Platform

- Speero Experimentation Blueprints

- A Glimpse into Experimentation Reporting at eBay

Tools for Full Stack Testing

Let’s explore some of the popular tools used for Full Stack Testing.

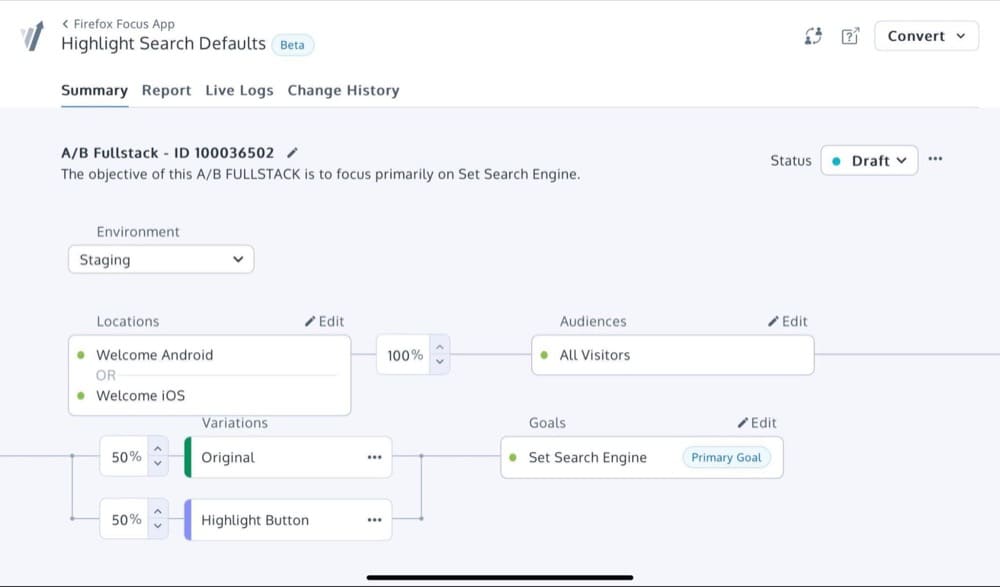

1. Convert Experiences

Convert Experiences is an A/B testing platform with full-stack capabilities. It has feature flagging, audience targeting, plus Node, JavaScript, and PHP SDKs.

Ratings: 9.0/10 on TrustRadius and 4.7/5 on G2

Pricing: The plans that include full-stack features start at $399/mo!

Free plan/free trial available? Yes, a 15-day free trial is available.

SDKs: Node, JavaScript and PHP SDKs that can be integrated with most JS frameworks.

Feature flagging and rollouts: Yes. You can turn on and off features in your app and roll out to segments of your users.

Integrations: Convert has in-built integrations with more than 90 tools. These include Amplitude, GA4, Data Studio, Salesforce, Segment, Split, and Zuko.

Customer support: Support is available via email, a support center, and live chat.

Pros:

- Stable, flicker-free testing

- Server-side, feature flags, rollouts — all from one intuitive interface

- Feature testing

- 90+ integrations with other marketing tools (Shopify, WordPress, HubSpot)

- Behavior-based targeting

- API access

Cons:

- Limited SDKs (more coming soon)

- You need developer resources to make the best use of the platform

2. Split

Split.io is an enterprise feature management and experimentation platform that enables continuous improvement in software development. Inside, you can toggle features on and off in production, perform A/B testing, and track performance issues at a feature level.

Ratings: 4.7 out of 5 stars on G2, from 101 reviews

Pricing: Starts at $0 and then there’s the Team plan for $33 per seat per month

Free plan/free trial available? Yes

SDKs: Angular, Android, Flutter, Go, iOS, React, PHP, Node.js, Ruby on Rails, and more.

Feature flagging and rollouts: Yes, you can roll out features to users in segments — by location, account name, or any custom attribute. In case of problems, there’s a kill switch to turn off features.

Guardrail metrics: Split lets you set up safety nets that monitor changes in KPIs you set and will alert you when something is off (and activate the kill switch for that feature).

Integrations: There are over 30 integrations with tools like Amazon S3, Datadog, Google Analytics, Microsoft Visual Studio Code, Segment, and Terraform.

Customer support: Phone and email support are available. There is also help documentation in a support center, a community, and ample resources.

Pros:

- You get integrated impact measurement and alerts when a feature misbehaves

- You can integrate feature flags with experimentation data

- Ability to create custom JSON configurations

Con:

- Some users experience difficulty when managing old feature flags

3. LaunchDarkly

LaunchDarkly is a cutting-edge feature management platform that allows devs to test and deploy software without risk. Its robust capabilities include feature management, client-side and server-side SDKs, experimentation, feature flags, and more.

Ratings: 4.7 out of 5 stars on G2 and 8/10 on TrustRadius

Pricing: Starts at $8.33 per seat per month, on an annual plan

Free plan/free trial available? Yes, 14-day free trial of pro features.

SDKs: 30 SDKs are provided on every major platform, including Ruby, Vue, React, PHP, iOS, Android, C#, and Java.

Feature flagging and rollouts: Supports feature rollouts to segments of users, flag scheduling, automated rollouts, and rollbacks.

Guardrail metrics: LaunchDarkly lets you automate flag changes based on metrics fed in from external tools. These guardrail metrics could be error rates, latency, and other performance indicators.

Integrations: Over 45 integrations with popular dev tools such as CircleCI, AWS CloudTrail Lake, Bitbucket, ADFS, Datadog, and Segment.

Customer support: There is email support, a knowledge base, an academy, and text-based guides.

Pros:

- Great integration with existing developer workflows and tools

- Robust permission capabilities for working with teams and external vendors

- Seamless front-end, back-end, and mobile experimentation capabilities

Con:

- Some users found the initial setup daunting

4. AB Tasty

AB Tasty offers feature flags, progressive rollout, and KPI triggered rollbacks through a product called Flagship. The core AB Tasty provides a full-featured experimentation platform and AI-powered personalization to boost click-through rate, conversion rate, and average order value.

Ratings: Flagship by AB Tasty is rated 4.2/5 on G2. AB Tasty is rated 4.5/5 on G2 and 8.5/10 on TrustRadius.

Pricing: Contact sales for custom pricing.

Free plan/free trial available? Yes. 30-day free trial.

SDKs: .NET, PHP, Flutter, iOS, Android, Python, Go, Java, Node JS, and more.

Feature flagging and rollouts: Yes, Flagship has safety mechanisms in-built for automated rollbacks and allows you to set up custom user segments that get to experience new features.

Guardrail metrics: With phased rollout, Flagship allows you to monitor crucial business KPIs when testing new features and automatically roll back when they’re impacted.

Integrations: Amplitude, GA4, Zapier, Microsoft Dynamics 365 Commerce, Segment, and more.

Customer support: AB Tasty offers a customer success manager, an account manager, and a technical support engineer.

Pros:

- Easy to set up A/B tests and progressive rollout

- Great, fast-responding customer service

- Easy-to-use WYSIWYG editor for handling simple changes

Con:

- According to a user, the server-side Flagship tool isn’t as robust as the client-side tool in terms of reporting

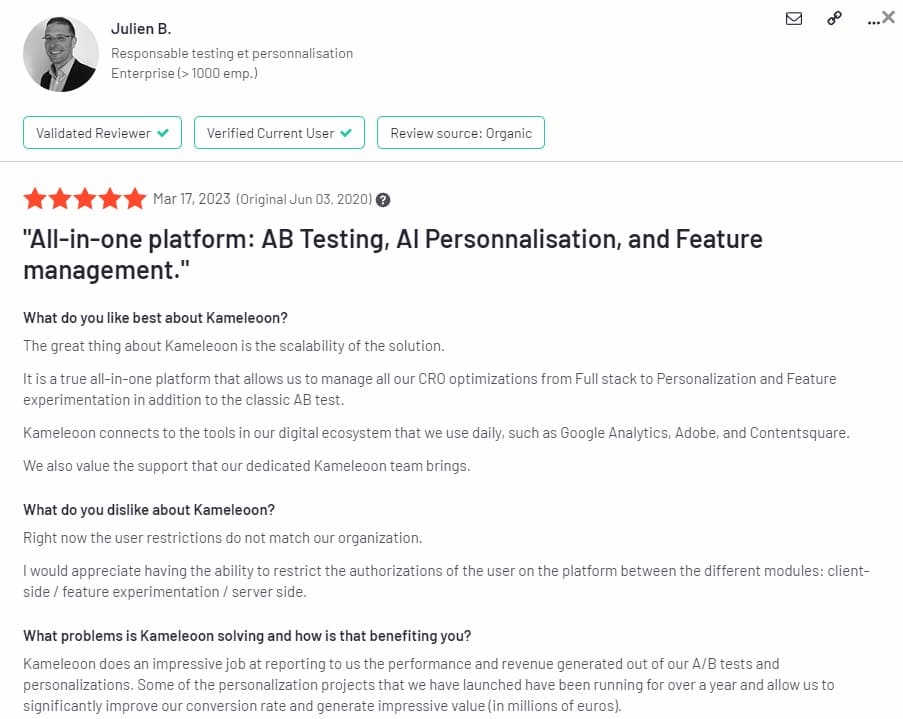

5. Kameleoon

Kameleoon is one impressive platform for experiments, AI-powered personalization, and feature management. It’s great for unifying product and marketing teams for growth.

Ratings: 9.3/10 on TrustRadius and 4.7/5 on G2

Pricing: Contact sales for pricing information

Free plan/free trial available? Yes

Customer support: Yes, with a dedicated account manager.

Pros:

- Advanced AI-powered personalization and targeting features

- Accurate and detailed test planning and execution

- Easy-to-use WYSIWYG editor

Con:

- Complex scenarios require developer-level skill

6. VWO

VWO is a popular A/B testing and conversion optimization platform that supports both server-side and client-side testing. It offers other features as well: heatmaps and session recordings.

Ratings: 8.4/10 on TrustRadius and 4.3/5 on G2

Pricing: Server-side testing starts at $1,708/mo

Free plan/free trial available? Yes

Customer support: Support is available via email, phone, and chat

Pros:

- Comes with a dedicated account manager, open-source SDKs in 8+ languages, SSO, and unlimited account members

- Offers advanced targeting

- You get to choose between US and EU data centers

Con:

- Limited historical tracking of visitor behavior

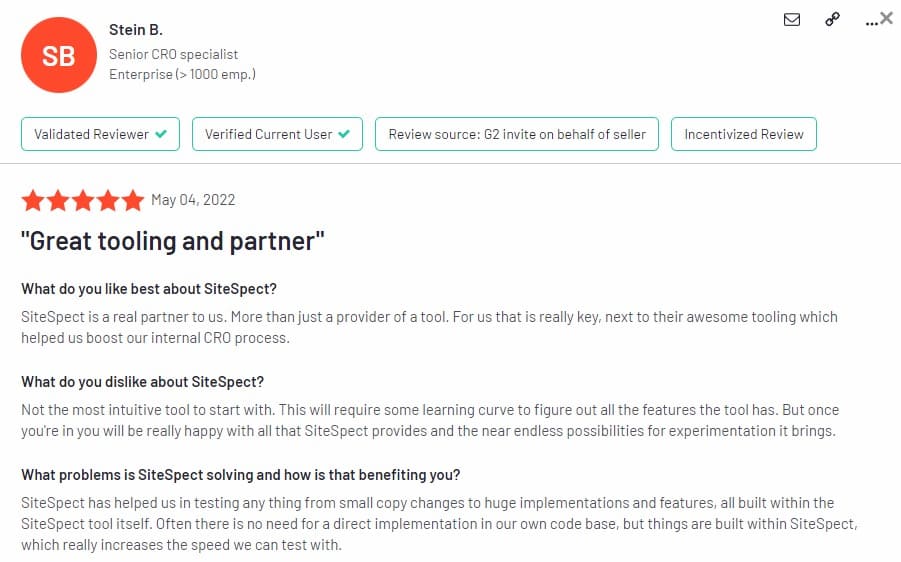

7. SiteSpect

SiteSpect is an enterprise-level A/B testing and experimentation platform that offers feature release testing and AI-driven recommendations.

Ratings: 8.0/10 on TrustRadius and 4.3/5 on G2

Pricing: Contact sales for custom pricing.

Free plan/free trial available? Yes

Customer support: Email and phone support available

Pros:

- Supports smart traffic management and continuous deployment

- Tracks all sessions, users, metrics, and targeting

- Non-intrusive testing

Con:

- Technical knowledge required to implement tests

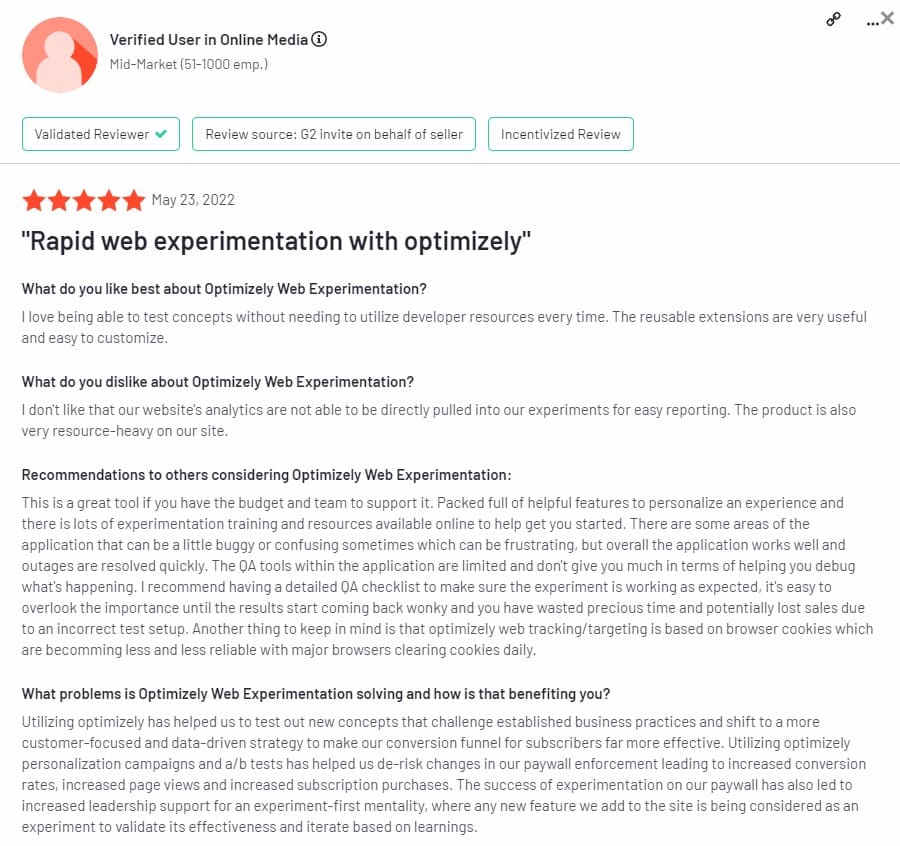

8. Optimizely

Optimizely is a popular experimentation platform that offers a wide range of rich full-stack features.

Ratings: TrustRadius: 7.9/10, G2: 4.2/5

Pricing: Contact sales for custom pricing.

Free plan/free trial available? Yes, with limited features and usage.

Customer support: Optimizely provides a range of customer support options, including phone and email support, a knowledge base, and community forums.

Pros:

- You can test feature rollouts on any device

- Integration with many third-party tools

- Feature flagging is free to use

Con:

- Can be expensive

Metrics to Track for Full Stack Experimentation

Full stack experiments can have far-reaching effects, and those effects can have unintended downstream consequences (second-order effects). A solid strategy is to keep an eye on all of them.

To measure the impact of your full stack experiments, here are some metrics to track:

- Site performance metrics: These measure how your experiments impact your website user experience. An example is page loading speed.

- Behavioral metrics: Such as abandonment rates, complaints, or click-throughs that can indicate trends. These measure the impact of changes on the customer experience.

- Platform assessments: This indicates the impact of experiments on parts of your application, including volume and speed metrics.

- Business process impact: Order completion rates, average order value, and activity counts are examples of metrics to monitor for impact on business processes.

- Key support processes impact: How are backups, security, and recovery impacted?

- Integration metrics: This indicates the impact of your experiments on the performance of key website services.

Conclusion: How Does Full-stack Experimentation Change an Organization Technically and Culturally?

Due to its all-encompassing nature, full stack experimentation causes significant changes in organizations. We’ve gathered nuggets from peer-reviewed research to show you exactly what those changes mean.

From a technical standpoint, organizations can experience improved product quality. In Microsoft’s Bing, for example, the results of improving their search engine by experimenting generated millions of dollars in revenue (Kohavi et al., 2013).

The rapid iterations that full-stack testing brings enable product teams to fail fast and learn from mistakes. As a result, new features can be released more quickly and at a higher quality.

Moreover, implementing the full stack experimentation culture can lead to new skills development, such as data analysis, programming, and A/B testing. But even better, team members develop a taste for experimenting and risk taking, which are vital ingredients for innovative thinking and learning (Jones, 2016).

It also breaks down silos and improves collaboration among cross-functional teams. When disagreements come up among team members (which they often do), they are settled through experimentation and feedback rather than endless debates or appeals to authority (Rigby et al., 2016).

This opens up a flourishing cycle of data-driven decision making and innovation throughout the organization. Organizations like this are, on average, 5% more productive and 6% more profitable than their competitors (McAfee & Brynjolfsson, 2012).

Plus, employees are usually more satisfied in these organizations because they have the freedom to test their ideas and take ownership of their work (Ryan & Deci, 2000).

These organizations can also disrupt themselves and their industry with a full stack experimentation culture. They stay ahead of the curve and ensure their own survival for decades (Christensen et al., 2015).

You can see this working out for companies like Netflix, eBay, Amazon, and Google.

The Lean Startup methodology, which popularized the concept of ‘validated learning’ through iterative product releases and the incorporation of customer feedback, inherently uses full stack experimentation (Ries, 2011). Eric Ries, the mind behind Lean Startup, emphasized the importance of conducting small, quick experiments throughout the entire product development cycle to minimize waste and learn quickly.

Full stack experimentation indeed aligns with the Lean Startup’s principles of “build-measure-learn”. It allows startups to not only validate their assumptions about the product, market, and customer behavior but also adapt swiftly in response to those insights. This rapid feedback and adaptability significantly reduce risks and inefficiencies while fostering innovation (Blank, 2013).

In addition, full stack experimentation can push lean startups towards a higher degree of customer centricity. It encourages data-driven decisions, limiting biases and assumptions while increasing the likelihood of meeting and exceeding customer expectations (Maurya, 2012).

References

Blank, S. (2013). Why the lean start-up changes everything. Harvard Business Review, 91(5), 63-72.

Christensen, C. M., Raynor, M. E., & McDonald, R. (2015). What is disruptive innovation? Harvard Business Review, 93(12), 44-53.

Jones, G. R. (2016). Organizational theory, design, and change. Pearson.

Kohavi, R., Deng, A., Frasca, B., Longbotham, R., Walker, T., & Xu, Y. (2013). Trustworthy online controlled experiments: Five puzzling outcomes explained. In Proceedings of the 19th ACM SIGKDD international conference on Knowledge discovery and data mining (pp. 786-794).

Maurya, A. (2012). Running Lean: Iterate from Plan A to a Plan That Works. O’Reilly Media, Inc.

McAfee, A., & Brynjolfsson, E. (2012). Big data: The management revolution. Harvard Business Review, 90(10), 60-68.

Ries, E. (2011). The Lean Startup: How Today’s Entrepreneurs Use Continuous Innovation to Create Radically Successful Businesses. Currency.

Rigby, D. K., Sutherland, J., & Takeuchi, H. (2016). Embracing agile. Harvard Business Review, 94(5), 40-50.

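Ryan, R. M., & Deci, E. L. (2000). Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. American Psychologist, 55(1), 68-78.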

Written By

Uwemedimo Usa

Edited By

Carmen Apostu