You Need an A/B Testing Learning Repository to Run Experience-Informed Experiments (Experts Say)

Does your testing program feel like it’s lacking structure or impact?

Are you running tests with no plan of attack and unsure what to prioritize or where to work next?

Perhaps you get lost trying to remember which tests you’ve tried before, what worked, and what failed?

Or maybe you’ve just joined an existing program and have no idea what they’ve run already, how it performed, or where to start?

It can be easy to get lost in the weeds when A/B testing, but fortunately there’s a simple way to solve all of this.

We recently interviewed 5 professional CROs and one thing was immediately clear: If you want to run experience-informed experiments and grow your testing culture, you need to have a centralized insights repository.

What Is a Learning Repository?

Simply put, it’s a single location to store all information about past tests that you’ve run, including:

- The target page,

- The hypothesis and what you implemented,

- The test elements and variants,

- The results,

- Its impact on important metrics, etc.

It can be as basic as a folder on a laptop or an Asana project, but a learning repository is much more than just a glorified filing system…

An ‘experimentation learning repository’ allows the wider business and stakeholders to see what experiments have been conducted to date and what learnings have been gained. The repository provides the reader with the ability to consume the content at their own time and speed which is important in a global business.

– Max Bradley, Web Strategy & CRO at Wiz

This means your entire team has easy access to all your past tests, which can actually help you to build that testing culture.

How?

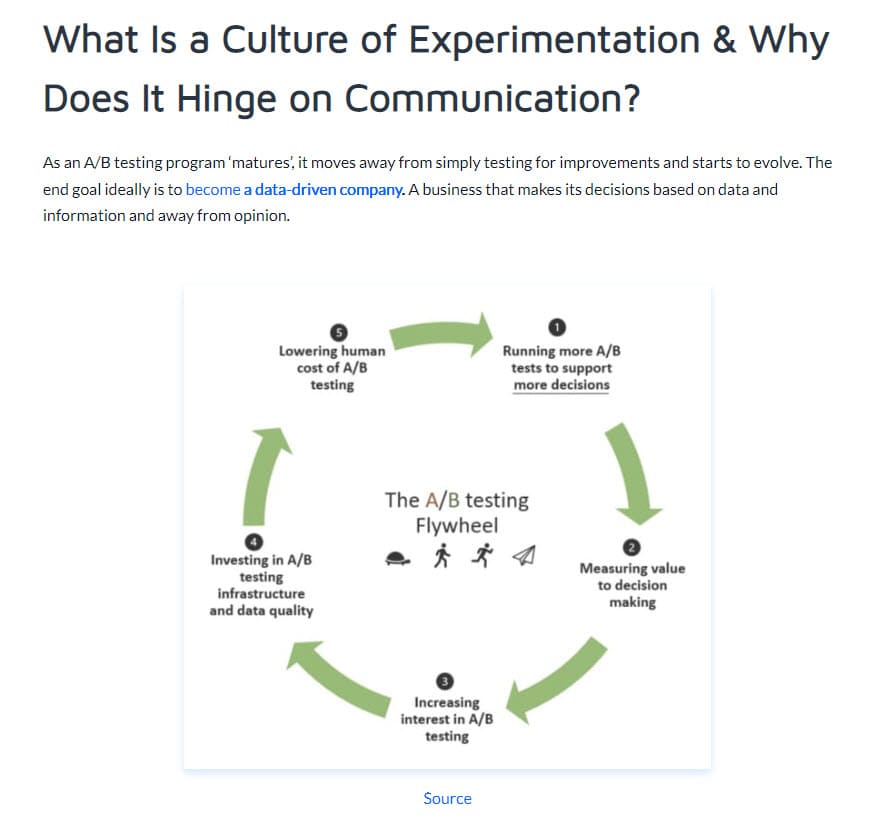

The more people are involved in testing and seeing tests run, the faster they adopt the mindset.

Seeing which tests have already run, along with their results, can spark new ideas and angles for anyone who has access to this information. This can then lead to an aha! moment in different areas of the company outside of the testing team, which they can then test for themselves or bring forward to the testing team.

For example, if the paid ads department identified the most effective language that got a lift in page tests, then they may want to try this in their ad copy, paid search, or social media, as it clearly resonates with the audience.

Not only does access to past tests open up new ideas with external team members, but it also reduces the time spent trying to find important information.

There’s more to a learning repository than just company-wide access and time-saving though:

Learnings are the core principle of building meaningful and effective products, and the only way to collect meaningful & validated learnings is by running experiments and collecting all those learnings in a central repository. The learnings in this repository can be used in many exercises like for example: opportunity/solution trees, where you work towards a certain user goal with several different solution approaches.

The key to running experience-informed experiments is to have a central repository that is accessible, structured, searchable and properly updated/managed. Doing this in a successful way can have an exponential effect on the productivity and quality of the overall experimentation program output.

– Matthias Mandiau, CTO of Vanar Blockchain

Like Matthias says, the more we can understand our audience, the better we can deliver products and services for them.

We may not get the insight right away, but it can set us on the right path, and from there we can improve further and offer the best product and user experience.

Better still?

Continuing to learn and manage the results from our tests can help us avoid costly mistakes…

You’ve Run Experiments, Now Avoid These Mistakes

Having a place to store and access past data is great, but it’s not the only benefit.

In fact, during our interviews, we found a number of common testing program mistakes talked about again and again — almost all of which were rectified by having a learning repository.

Failing to Respect “Expensive” Company Data

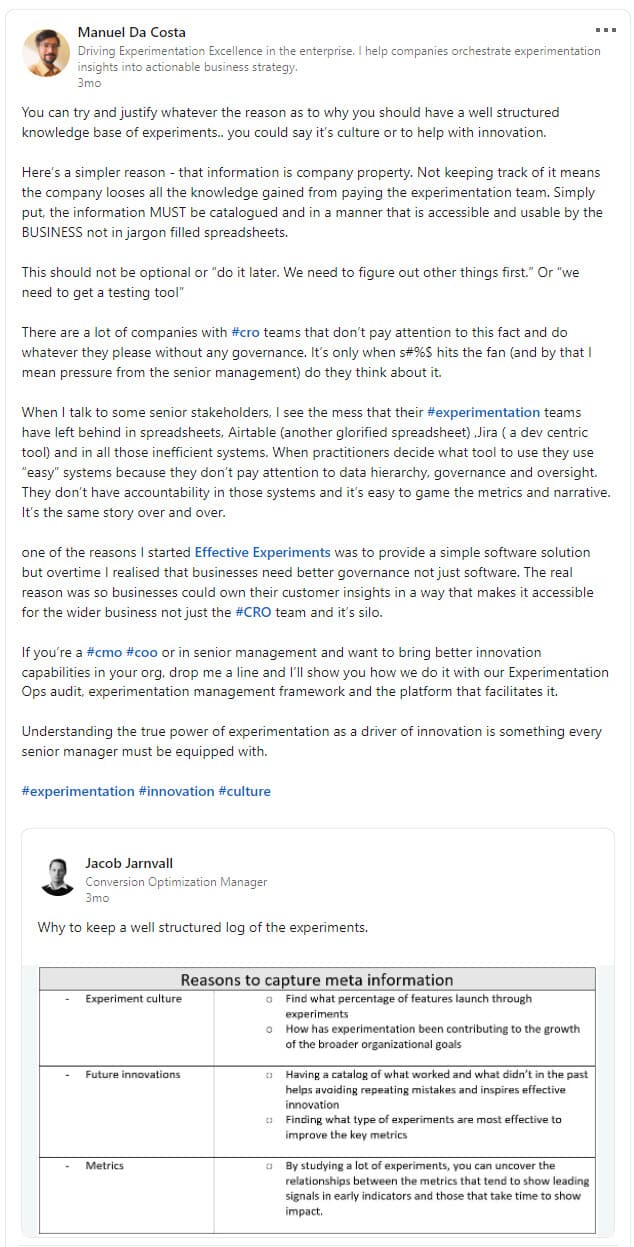

One of the most convincing arguments for a learning repository is the simple fact that all data from tests is company property.

You’re spending valuable time and money collecting that data. The last thing you want to do is not store it or use it, or worse, never come back to it to help you plan new tests and learn from past ones:

Why invest so much money in an experimentation program when the major output of it (learnings) is not being taken care of?

A good overview is key in order to understand what has already been worked on, what should now be the focus, and what should soon be worked on. Also this way the knowledge does not depend on people, because people come and go in companies.

On top of that it allows better knowledge sharing, better onboarding, preparing better business cases, better research…

Having a mile-high view of your testing program can help you to not only test better but also train new staff or even team leads (and bypass the issue of losing all insights when the CRO manager leaves the company).

How?

Well, now new members can see what has been tried before, what worked, and maybe what had the potential to work but wasn’t implemented correctly or could be iterated on further…

Missing Out on Developing a Company-Wide “Gut Instinct” That Is Experience (And Data) Informed

There’s nothing wrong with using your gut to come up with test ideas. Sometimes you simply have an intuition from data or experiences that can help you to form a hypothesis and get an initial test completed.

However, you need to watch out for relying on this gut instinct all the time instead of paying attention to research and data.

One thing I tell my team (at Bouqs) is… when you don’t have data (logged learning/artifacts from testing), you have to make decisions based off of either your gut, or the gut instinct of stakeholders. A database of learnings creates a gut for all of us that we can use as a gut check, but one that isn’t just our own or biased.

– Natalia Contreras-Brown, VP of Product Management at The Bouqs

The biggest companies with the most mature CRO programs are almost all data-driven. They make choices based on what the data tells them is most important and it is often why they become the market leaders.

Don’t become guilty of testing on gut choices and opinions only, and don’t focus on metrics alone. Take the time to find the contextual insight from each test result. Follow the information and, most importantly, track and save what happened and why you think it happened, so you don’t make the mistake of forgetting what you’ve tried and why it worked.

Repeating Tests That Have Been Run Before

We’ve hinted at this already, but without tracking what you’re doing it becomes very easy to either re-run a test idea you’ve already tried, or miss a test idea because you think it has already been run.

A learning repository helps avoid running experiments again that have been previously conducted. It also reduces the time spent answering queries on results and learnings from stakeholders for the experimentation team.

Be nice to yourself and your future team and keep a record of what you’ve been running and all the details of each test.

It could be that you want to run something similar but without the details of what you tried before, it can be easy to miss out or spend another 30 days getting data you should have saved.

Poor Communication or Lack of Access to Information

One of the biggest differences in mature CRO teams is their ability to communicate or access information quickly and easily. Not just in the ease of access, but in how efficiently they can provide the right information.

Having a well-documented and organized learning repository can cut down communication barriers and keep testing workflows from being put on pause while you search for information.

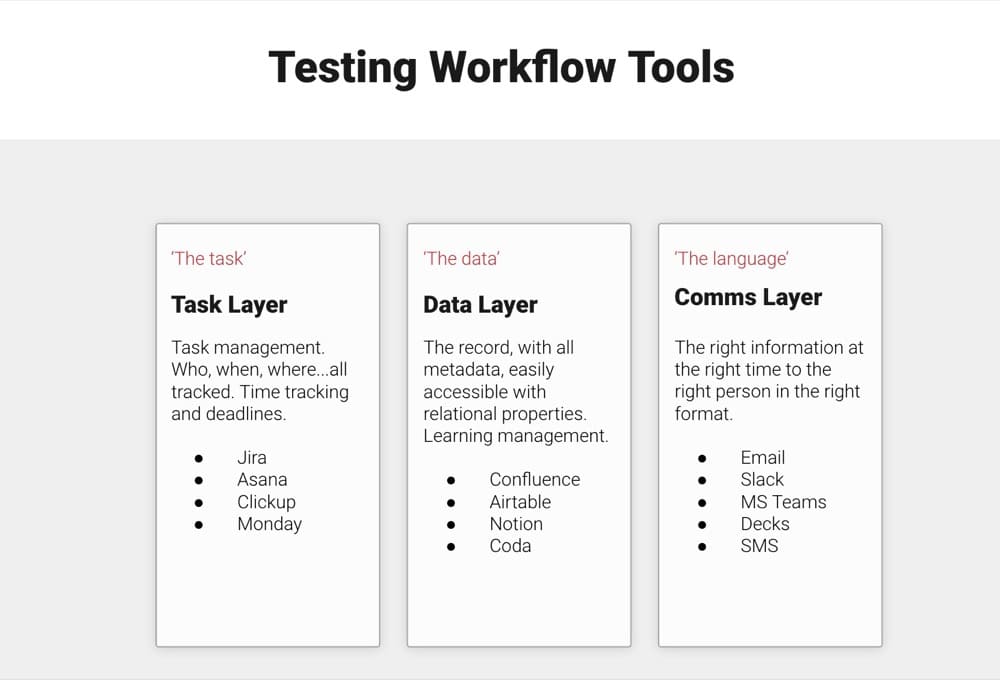

(An experimentation repository) is a knowledge management system that holds all data to a detailed degree, and is accessible by the team generally, but most importantly facilitates communication artifacts (comms layer) to be produced for the right people in the right format at the right time.

It is the ‘data layer’ in the testing workflow, as seen here:

– Ben Labay, CEO @ Speero

Leaving Learning (and Money) on the Table

Don’t forget that the majority of tests fail. It’s only through iteration and learning from past tests that you improve and get those ROI-affecting wins.

Without keeping track of what you’ve run and where you’ve been, it becomes very hard to move forward and see progress, or to even feel confident in your testing process.

Stefan Thomke said it well: “despite being awash in information coming from every direction, today’s managers operate in an uncertain world where they lack the right data to inform strategic and tactical decisions”. Experimentation validates and validation helps us to learn. It’s vital to collate those learnings in a repository that’s easily accessible to review those learnings for future decisions.

– David Mannheim, Founder of Made With Intent

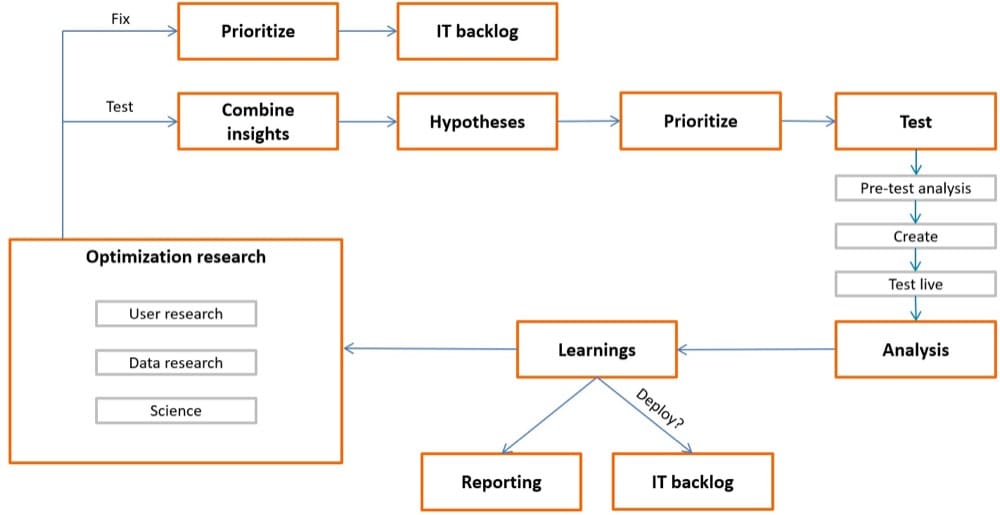

Here’s a visual representation of the ideal process from Ruben de Boer:

Learning and improving from every test you run is incredibly important. Not only is it directly tied into the success of your future tests, but it can even help you to expand out current wins into other areas or even other sites.

Almost every other experiment that we run at H & M in our product team is backed by a documented learning of a previous experiment or other research methodology.

This habit of learning and reiterating comes up again and again with almost everyone we interviewed.

At Brainlabs, about 40-50% of our experiments for Flannels, a well-known menswear store, were iterations of one another. This demonstrated the power of learnings within a single experiment and how that can evolve into something else; because we’re always learning from how practical stimulus can impact a variable.

Let’s give you another example.

Say you have a lead capture page and the layout you use works well for one page. You could then adapt it to others on your site to see if it increases lift there too.

What if you’re an agency with multiple clients in similar niches?

Insights and wins from one company could lead to ideas and designs that may work for your other clients also…

What worked once, has a good chance of not having been fully exploited yet:

- It may work again with a greater intensity (for the same customer)

- It may work again somewhere else (for the same customer)

- It may work again for someone completely different

In other words, past wins have predictive potential which can speed up optimization (more wins, greater probability of success, greater magnitude of effects).

– Jakub Linowski, GOODUI.org

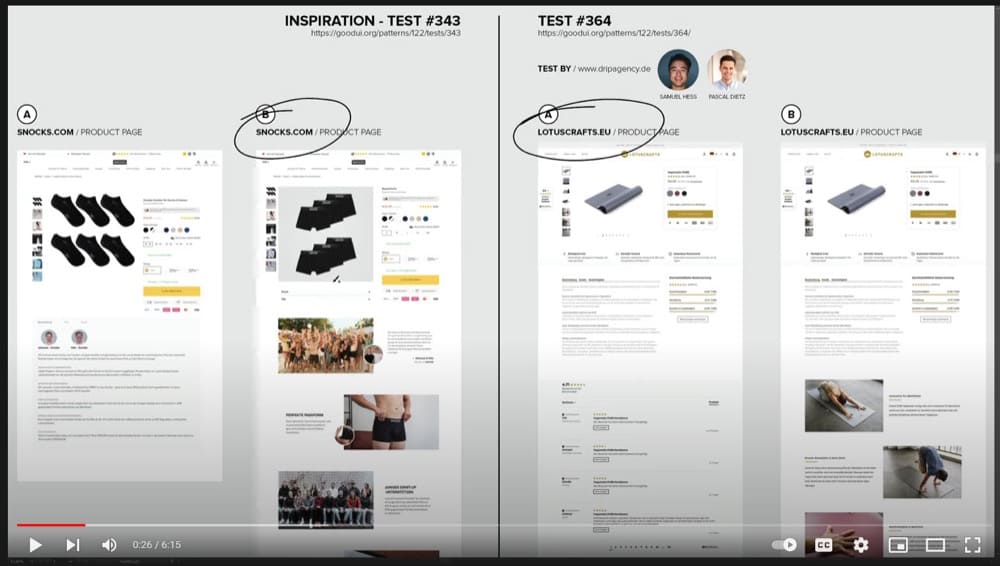

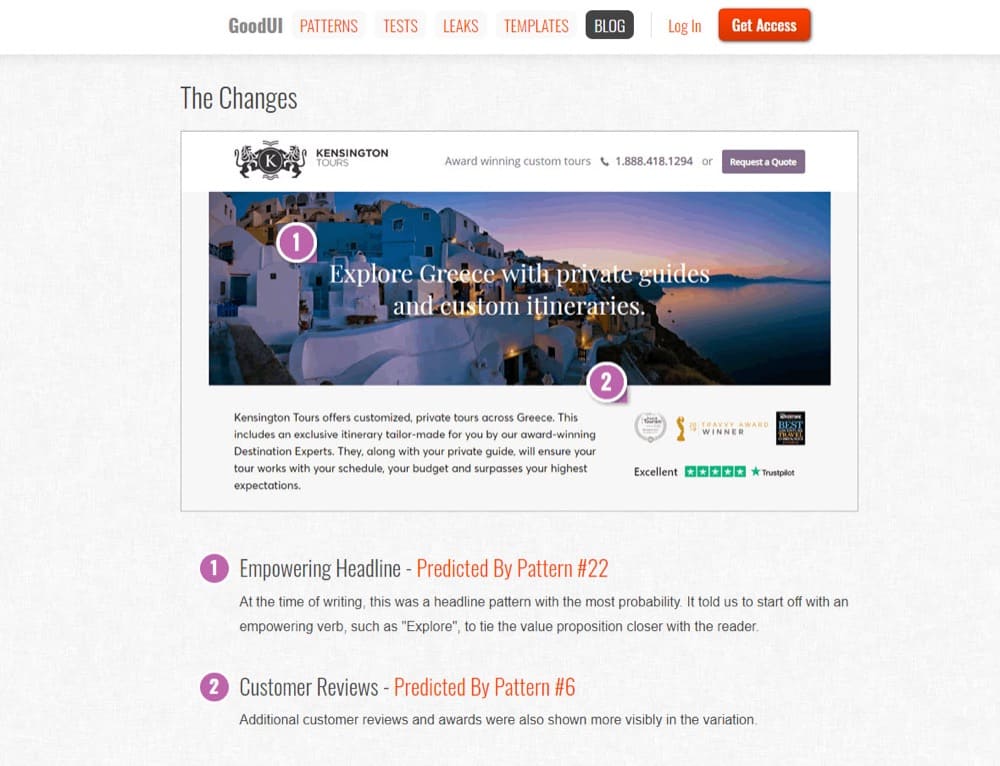

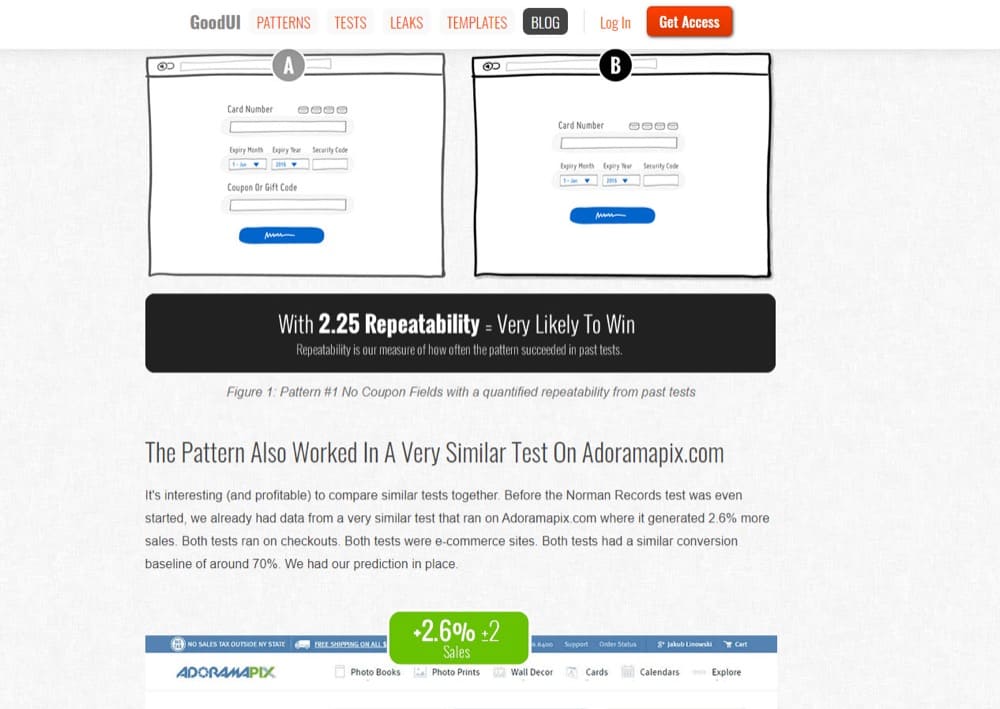

Drip Agency did exactly this with 2 of their clients.

They took winning page layout designs from one client, then tested them on another and saw an immediate lift in conversions.

GoodUI did something similar. They took winning test ideas and information from one client and used it as a basis to test other pages on the site.

This got them a 42% lift in conversions!

They then took it a step further.

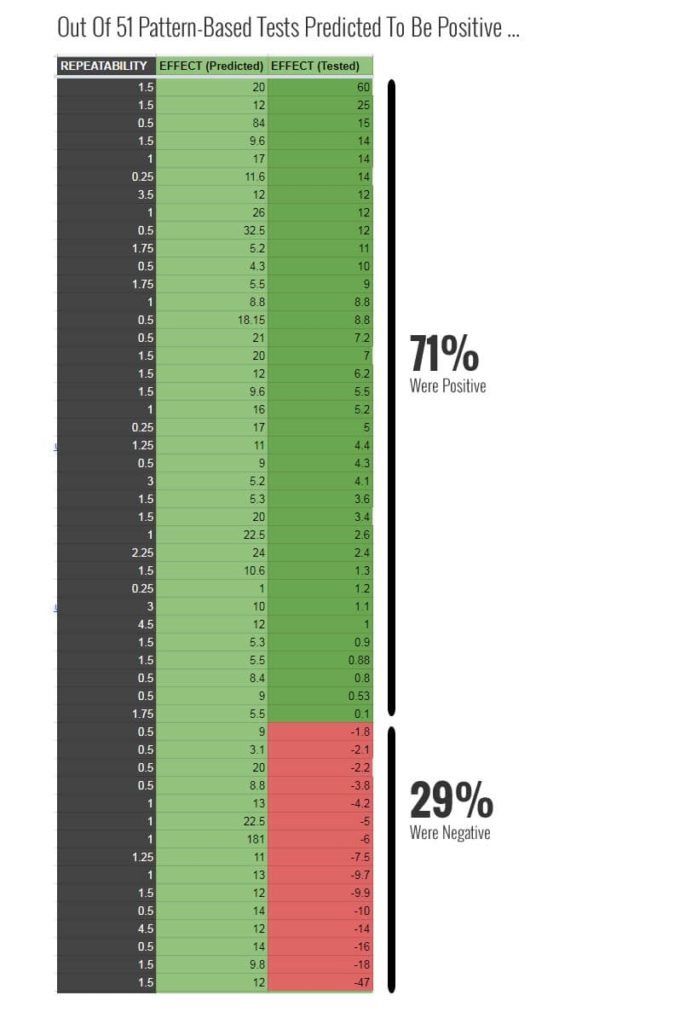

Rather than just implementing winning designs for one client, they ran a case study to see if you can predict winning tests based on previous campaigns across multiple websites.

The theory being that certain UX designs may work across other industries.

And so they ran 51 tests using previous winning design layouts and achieved a 71% success rate when using previous winners to help design new tests on other sites.

Pretty cool, right?

Well here’s another reason why a learning repository is so helpful and it’s why good testers look at past winners.

If you think about it, GoodUI is basically a type of learning repository, in that it’s a database of past tests and winners across multiple sites and industries.

The thing is, they will often see designs reused on other sites and notice that particular patterns provide lift, even when someone else ran the original test.

Being the smart cookies that they are, GoodUI uses these particular patterns as inspiration for tests to run for new clients…

And it makes sense, right? Once you’ve fixed technical issues and decided on a page to test, it doesn’t hurt to look at past test elements on similar pages and what worked.

You can use other sites for inspiration when starting out, but all the experts agree: you need to start building your own repository as soon as you can.

Invest in an Experimentation Repository

Jakub Linowski said on LinkedIn that if you look at professions such as doctors, engineers, athletes, and the military, you’d see most of their successes and growth are built on the learnings they’ve generated from past experiments.

With that mental image, you can imagine how fast the CRO field can grow as a whole when experiments don’t always have to start from level 0. But without a learning repository, that’s exactly the case.

And many in-house CRO teams are guilty of this.

If you bring this idea closer to home, it is clear how building on what you already know is a solid way to compound the efforts of your experimentation program.

You can learn more about creating a learning repository and data archiving practices by watching this clip from Convert’s A/B testing course.

Repository = Higher Chance of Experiment Success

Only when you have the mindset of learning tests (and past tests) do you actually value what experimentation offers i.e. an idea of what works and what doesn’t, given context and circumstances.

20 “good decisions” in UX, UI, Product, Marketing (thanks to experimentation) can help flex decision-making muscles and sharpen instinct. The more you see your opinions shot down or validated, the more of a compass you have to find out what works and what your audience needs.

Those who test for the sake of conversion rates miss out on this big picture. It’s about learning what your audience needs, and not about being right.

Let’s show you how:

How to Structure Your Learning Repository

A learning repository is meant to eliminate data silos, foster collaboration, and strengthen communication.

If yours isn’t structured properly, it’ll be extremely difficult to meet those basic requirements. But the thing about structuring your learning repository is that there are options. They work differently for different organizations.

That’s not an issue because we’ll describe the 3 models to you and then you can picture which would align perfectly with your team, department, or organization. Even better, experts recommend one specific model.

There seems to be a winner in terms of experimentation team structure: Centre of Excellence.

- A Centre of Excellence (CoE) enables and equips department-specific experimentation teams

In this model, the experimentation team is the CoE for the entire organization’s experimentation program.

So, they’re sort of a concierge for anyone in other departments who wants to run experiments. You go through them and they help get it set up.

Then they oversee the integrity of these experiments and keep everything aligned with the broad experimentation goals of the organization.

Structuring this way today will remove downstream issues tomorrow, because everything is kept on the same track and is easy to monitor, and a learning repository built this way is easy to coordinate.

The major challenge here is it wouldn’t be as accessible as a decentralized model.

- Decentralized units sit across departments and get everyone onboard

Decentralized units are in various departments and run the actual tests. This is a great strategy because they have the most know-how of their own growth loops and metrics, so they’re in the best position to design and execute high-impact experiments.

In this model, everyone can run tests — regardless of their department and experience level with experimentation. When combined with CoE, it attracts some admirers…

I’m a big fan of decentralised experimentation, working alongside a centre of excellence to quality control and evangelise AB testing. In my experience, these structures work best for those cultures that truly embrace the power of experimentation. In terms of a learning repository, this should follow suit; team members should be empowered to run experiments and highlight the learnings from the fruits of their labour.

The responsibility of maintaining the learning repository thus also follows suit.

- Managed by one person to collect and maintain experimentation learnings

However, unlike the CoE-alone model, the decentralized model’s learning repository is tougher to coordinate.

It helps to have a designated role that’s responsible for documenting and maintaining learnings. And there’s something beneficial about this:

Managed by the program/project manager (keeping track) and the primary test strategist (who inputs the ‘stories’), but if properly done, it can have inputs at all stages, as inputs come through as form entries such as issue forms, new campaign trackers, new test solution ideas, etc.

– Ben Labay, CEO @ Speero

Matthias Mandiau concurs with Ben.

I prefer a hybrid between centralized/decentralized:

The repository always needs to be accessible (cloud).

Need to be able to combine different research methodologies to create strong hypotheses.

Setup and management of the repository and ownership should be with 1 team/manager (centralized). Good to follow up on high level macro experiment performance KPI on central level.

Every product team that works with experimentation should have a stakeholder (data analyst/CRO) who has the responsibility to take proper care of all collected learnings that are uploaded in the right format to the central repository. Also good to follow up on product team level micro experiment performance KPI within the decentralized team. Could be a dashboard that is linked to GA or the repository for example.

At Zendesk, one manager is in charge of maintaining the repository, but the inputs come from the “test owners”:

Currently we have the repository managed by one team within the business with one person taking the overall ownership of it. Test “owners” are requested to add the learnings post test completion into the respective area of the repository.

And that’s how one person (or a small team) managing a decentralized-type model can keep the experimentation learning repository efficient and quality-assured.

It’s similar to what GoodUI is doing — drawing on learnings from across verticals and customers to create an excellent resource of experimentation insights.

So, even if you don’t have a learning repository yet, you have a well of knowledge to draw from. Convert plans come with a GoodUI discounted subscription.

How to Document Your Learnings

Just like any organized filing system, your learning repo cannot be a place to dump data. There needs to be a definite and proper manner of documenting learnings so that it is useful to everyone.

This will mean an intuitive visual representation of data, jargon-free data stories, and an easy method to locate specific tests when needed.

Here’s how.

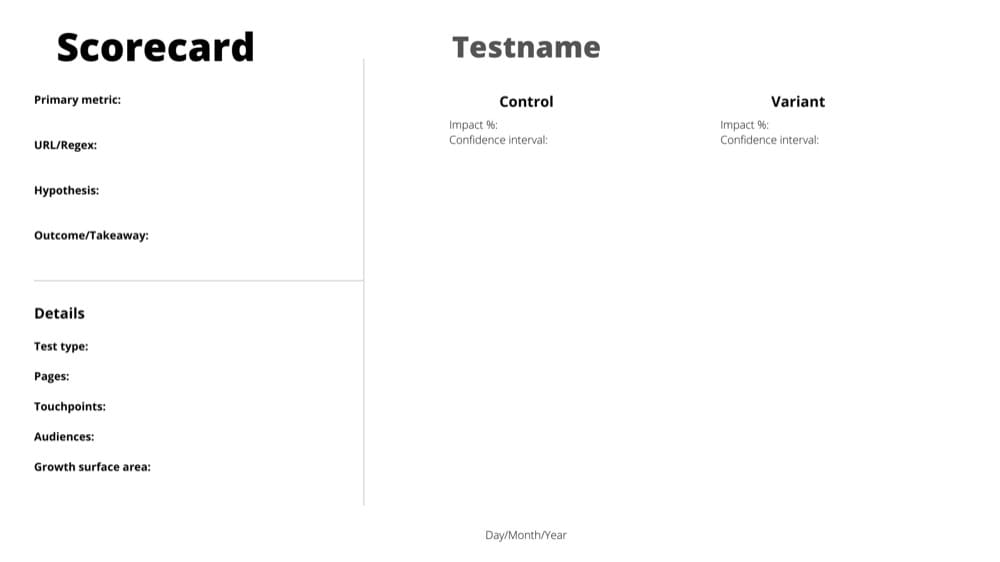

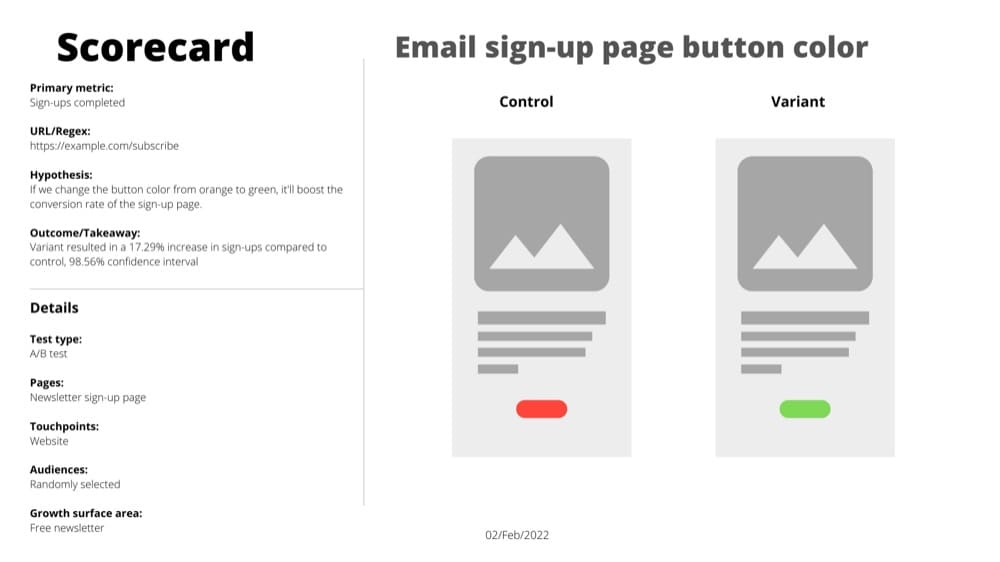

Capture and Record All Metadata From Tests

The obvious first step is collecting the data from tests. But what data do you collect? It’s easy to say collect everything, but that can easily get overwhelming — and discouraging.

So, what’s the data to include in your experiment repository?

It’s the full metadata of a test concept. And it should primarily be tracking inputs based on the questions that need to be asked, which are standard in categories:

- Test types

- Pages

- Touchpoints

- Audiences

- Growth surface areas

But not standard WITHIN these categories, e.g., audiences will differ depending on the company, but all companies will test on different segments of audiences.

And what does this look like?

Let’s explore each of those categories so you get a clearer picture:

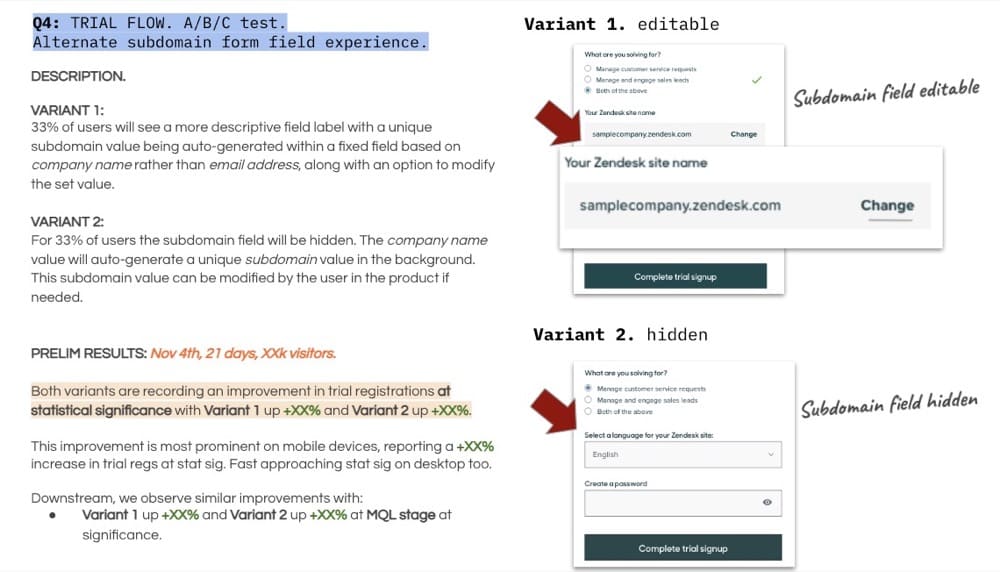

- Test types

What kind of test was it? A/B test? MVT? A/B/n tests?

- Pages

Here you specify the page or pages on your site where the test occurred. Is it the homepage? A specific product page? Your SaaS brand’s pricing page?

- Touchpoints

Touchpoints are areas in your customer’s journey where they interact with your brand. This could be an ad, product catalog, blog post, marketing emails, your app, etc.

- Audiences

Who is your target audience for the test? Are you targeting specific segments based on any criteria? Behavioral data? Goals? Visitor source? Geolocation? Or are they all randomly selected?

- Growth surface areas

This refers to an interaction or feature that encourages users to stick around your site or dig deeper into their relationship with your brand. So, it’s sort of a hook that boosts the value of each visitor. This can be a free trial offer or a demo.

To enrich your metadata, you’d also want to include:

- Dates

- URL/Regex

- Iteration on previous test — link to a prior test that’s the foundation of the test you’re reporting on.

And you seal this up with more impactful info. Include…

- Screenshots of A and B

- Isolated or not (# of changes)

- Impact % with confidence intervals

- Metric type

The more, the better. But keep it relevant and useful.
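To make the metadata list above more concrete, here is a minimal sketch of what a single repository entry could look like as a structured record. The class name, field names, and sample values are all illustrative assumptions, not a standard schema or the format of any particular tool; adapt them to whatever your team actually tracks.

```python
from dataclasses import dataclass, field, asdict
from datetime import date
from typing import List, Optional


@dataclass
class ExperimentRecord:
    """One repository entry. All field names here are hypothetical."""
    test_id: str
    test_type: str            # e.g. "A/B", "A/B/n", "MVT"
    pages: List[str]          # page(s) where the test ran
    touchpoint: str           # e.g. "ad", "email", "app"
    audience: str             # targeted segment, or "all visitors"
    growth_surface: str       # e.g. "free trial offer", "demo"
    start_date: date
    end_date: date
    url_pattern: str          # URL or regex the test was scoped to
    hypothesis: str
    metric_type: str          # e.g. "conversion rate"
    impact_pct: float         # observed relative lift, in percent
    ci_low_pct: float         # lower bound of the confidence interval
    ci_high_pct: float        # upper bound of the confidence interval
    num_changes: int = 1      # 1 means the change was isolated
    iteration_of: Optional[str] = None  # test_id of the prior test, if any
    screenshots: List[str] = field(default_factory=list)

    @property
    def is_isolated(self) -> bool:
        """True when the variant changed exactly one thing."""
        return self.num_changes == 1


# Made-up sample entry, for illustration only.
record = ExperimentRecord(
    test_id="EXP-012",
    test_type="A/B",
    pages=["/pricing"],
    touchpoint="website",
    audience="new visitors",
    growth_surface="free trial offer",
    start_date=date(2024, 3, 1),
    end_date=date(2024, 3, 29),
    url_pattern=r"^/pricing/?$",
    hypothesis="Shorter plan descriptions will increase trial sign-ups.",
    metric_type="conversion rate",
    impact_pct=4.2,
    ci_low_pct=-0.5,
    ci_high_pct=8.9,
    iteration_of="EXP-007",
    screenshots=["exp-012-a.png", "exp-012-b.png"],
)

# asdict() turns the record into a plain dict, ready for a spreadsheet row or JSON.
row = asdict(record)
```

Keeping every entry in one fixed shape like this is what makes the repository searchable later, whichever tool ends up storing it.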

Here’s another example from Max Bradley:

For a basic tracking report or repository, Convert’s report will also show you the winner and what you tested for.

Respect Different Learning Styles While Preserving Information

You have to appeal to the various ways people prefer to consume information when storing experimentation data.

There are certain things to keep in mind when doing that…

We’ve tried a lot of different methods. As an agency, we’re dealing with multiple different stakeholders from different businesses at any given time. Therein lies the challenge – people take in knowledge and learn differently. Are they visual, auditory or kinesthetic learners? Where are they on the Myers-Briggs key? How to communicate to your stakeholders is almost as important as the content in which you’re communicating. From my experience, I’ve learned that a learning repository should be two things to succeed:

- Themable and memo’d so it’s easily filtered

- Succinct for scannable learnings.

You can provide as much information about the reported experiment as possible. And do so in various formats. That’s why you need screenshots, video recordings, and a story to give context to all the numbers.

Remember to keep it relevant, concise, and scannable.

Tag Your Tests to Maximize Learnings

Tagging your tests keeps everything easy to reach.

It also helps you understand the purpose of a test at a glance.

If your repository is growing consistently, one day, you’ll have thousands of tests recorded and hundreds of people using it to swipe ideas, extract insights, and inform decisions.

The learnings will be more useful if people can get a sense of the position of each test in your organization’s solution spectrum.
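As a rough illustration of why tags keep tests easy to reach, the sketch below builds a simple tag index and looks up tests that carry every requested tag. The test IDs, tag names, and function names are hypothetical; a spreadsheet filter or a database query would do the same job.

```python
from collections import defaultdict


def build_tag_index(tests):
    """Map each lowercased tag to the set of test IDs that carry it."""
    index = defaultdict(set)
    for test_id, tags in tests.items():
        for tag in tags:
            index[tag.lower()].add(test_id)
    return index


def find_tests(index, *tags):
    """Return the sorted IDs of tests that carry every requested tag."""
    if not tags:
        return []
    matches = [index.get(tag.lower(), set()) for tag in tags]
    return sorted(set.intersection(*matches))


# Made-up tests and tags, for illustration only.
tests = {
    "EXP-001": ["pricing-page", "copy", "winner"],
    "EXP-002": ["pricing-page", "layout", "loser"],
    "EXP-003": ["homepage", "copy", "winner"],
}

index = build_tag_index(tests)
pricing_winners = find_tests(index, "pricing-page", "winner")
copy_tests = find_tests(index, "copy")
```

With a consistent tag vocabulary, anyone can pull up, say, every winning test on the pricing page without knowing who ran it or when.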

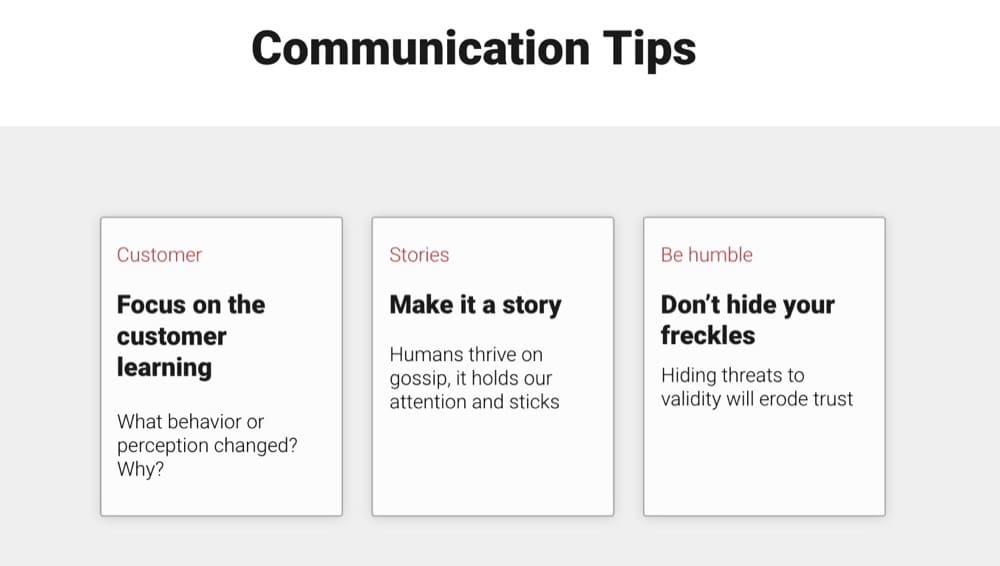

How to Communicate Your Learnings for Maximum Effect

Your learning repository is only going to benefit your experimentation program when people actually use it.

So, besides making it accessible, present it to the rest of your team and organization in a way that makes sense and sparks interest in experimentation.

But how do you do that? These are numbers and some industry-specific terms that could be misunderstood. How do you get people from across various disciplines to appreciate the learnings you’ve gathered?

Communicating A/B tests can be tricky, but if you follow these steps you’ll knock down those barriers and get the most out of your learning repository.

Make It a Habit

First off, if experimentation is a foreign concept where you are, making a habit out of going through learnings may take some time. So, pack some patience with you on this journey.

Your assignment here is to make this part of your usual weekly activities. It could be the inspiration segment of a brainstorming session or a game you play to close meetings. For instance, your own version of “what test won?”

It’s part of the grand picture of building a culture where experimenting is a norm…

Make it a habit to run through learnings with the team in a consistent, fun and inspiring way. It needs to be part of the business as usual. At least once a week there should be a meeting to go through hypotheses and experiment results. Every sprint should contain at least 1 or 2 experiments and making experimentation a habit creates a positive culture.

Besides featuring it in team meetings, repository tests are also very easy to share in learnings newsletters. Being in the habit of adding them here makes company-wide adoption of experimentation easier as well.

Be Humble & Transparent to Build Trust

It may seem cool to convince people that experimentation has all the answers, but that would be misleading. Help your colleagues understand how it all works, what the numbers represent, and how much confidence you can place in a certain insight you’ve drawn.

Don’t present insights as if they come with 100% certainty. Instead show them this is one of the best ways you can make decisions, as opposed to “gut feeling” and best practices.

Doing the opposite will make them lose trust in the learning repository you’ve built and it could be abandoned to gather dust.
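One way to show colleagues how much confidence a result deserves is to report a range rather than a single number. The sketch below computes the difference in conversion rate between a control and a variant, with an approximate 95% interval. It assumes a simple normal-approximation (Wald) interval, which is only one of several methods; your testing tool may calculate its statistics differently. The function name and the visitor counts are made up for illustration.

```python
import math


def conversion_difference(control_visitors, control_conversions,
                          variant_visitors, variant_conversions, z=1.96):
    """Return (difference, low, high) in conversion rate, variant minus control.

    Uses a normal-approximation interval; z=1.96 corresponds to roughly 95%.
    """
    p_control = control_conversions / control_visitors
    p_variant = variant_conversions / variant_visitors
    diff = p_variant - p_control
    # Standard error of the difference between two independent proportions.
    std_err = math.sqrt(
        p_control * (1 - p_control) / control_visitors
        + p_variant * (1 - p_variant) / variant_visitors
    )
    return diff, diff - z * std_err, diff + z * std_err


# Hypothetical test: 5.0% vs 5.6% conversion with 10,000 visitors per arm.
diff, low, high = conversion_difference(10_000, 500, 10_000, 560)
print(f"Difference: {diff:.2%} (approx. 95% interval: {low:.2%} to {high:.2%})")
```

In this made-up example the variant converts 0.6 percentage points better, yet the interval still dips slightly below zero, so the honest summary is "promising, but not certain" rather than "a proven win".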

Here are some more tips from Speero’s Ben Labay on how to communicate results:

Don’t hide tests that lost. Instead, use that as an opportunity to show the double-sided benefit of testing.

If you got a win, you’ve gotten insight into what works. If it lost, you’ve learned what doesn’t work so you avoid making a change that hurts key metrics.

In both cases, you’ve learned about something that can be improved upon.

When you present, structure it in a story that shows the real-world application of the insights and invite stakeholders to participate in writing the story, just the way Max does:

We present the most recent learnings on a bi-weekly basis to our wider team and on a monthly basis to the wider business. We try to be as transparent as possible by presenting tests which lost as much as those that won. In addition to this, we ask for all reviewers to submit their ideas or ask any questions they have in our dedicated Slack channel. Where possible, we also look to demonstrate the benefit in actual terms in addition to the percentage uplift, this makes it more impactful to readers.

Don’t Gate Learning, Involve Everyone

Experimentation is not just for marketing, optimization, production, and/or growth teams. Being a data-driven organization means saturating the bulk of decision-making processes with insights extracted from well-done tests.

And your learning repository sits right in the middle of the whole mission.

So, get everyone on board.

Yes, people will have varying levels of experience in the field, but don’t let that hurt the program. As long as each team has someone or people with deeper experience with experimentation, you’ve got something going.

It’s also encouraging to have some people in the team who are more familiar with experimentation and some who have less experience with it. Having at least 2 or 3 people in the team who are experienced with experimentation makes a big difference.

The influence spreads naturally this way.

Invest in a Fresh Pair of Eyes & Cross-Pollination of Ideas

Sometimes a different perspective will give you ideas you didn’t think about. So, don’t let your experimentation learnings rest in a bubble.

Be willing to share it with other experts in your field and see what you can learn from their feedback.

Maybe there’s something that didn’t work on one customer segment, but there’s a slightly different version of it that can work for another?

Or maybe you’ve stumbled on something that everyone is debating about and now you can join the conversation to learn more?

You’ll be thrilled by what you can discover when you involve other CRO experts. Even coaching calls can be part of your strategy to get a different point of view.

Coaching – www.goodui.org/coaching – one or two monthly calls with experimentation teams to: review test plans, provide feedback on test designs, and surface what has worked for others.

– Jakub Linowski

Make the Learning Shareable

Some people use Notion, others use Google Slides, and some CRO experts suggest using Miro boards, but the purpose is the same…

Communicating test data and insights with the rest of the team (or any other stakeholder) in order to make sure the learnings get enough reach.

When you use online tools like Notion, Google Slides, Miro, and any other one that you find appealing to your team, you make it easy to access the repository from any device, and from anywhere.

This is great for remote teams and for getting the fresh pair of eyes we talked about earlier.

It’s portable and can easily be shared. Now you’re really giving your experimentation learnings wings to make a full impact.

Use Insights to Plan Tests

One extra (but priceless) perk of keeping records of tests in a repository is upcycling.

Let’s say you run a test and it doesn’t yield anything earth-shattering. But you’ve recorded it in your learning repository.

10 or 20 tests down the line you unravel a fresh insight that sheds light on that non-earth-shattering test from months ago. Do you know what you have in your hands now?

Without a place to turn to for details on this old test, you get your insight and move on to the next thing. But now you have more information that enriches an insight you had before.

Now you can “upcycle” the old test, design a better one, and see what you find. Your experimentation program just keeps getting better and better. That’s the compounding effort we spoke about earlier.

Another thing is, you don’t have to be the one who ran the old test. The person who did is communicating with you through their entry in the learning repository.

Take Bets

This is one way to add excitement to testing. Who said it had to be boring?

When you’ve presented experimentation learnings to your team (as you now do regularly), and you’ve decided on the next thing to test, you can take bets on how it’ll turn out.

Not only are you exercising team members’ judgment on how prior tests predict the outcome of future tests, but you’re also getting a vested interest in experimentation.

This is an experience Laura Borghesi, VP of Revenue Marketing at Wellhub, shared in this video.

When you’ve made it a habit to check out the learnings on a weekly or bi-weekly basis, built trust, gotten everyone on board, and gotten vested interests in experiments, you’ll see your repo get more traffic. And usage.

The next question is: What do you need to build a repository?

Learning Repository Tools Breakdown & Comparison (+ A Checklist of Desirable Features to Get Started)

Now you understand how important a learning repository is to your testing program and you know how to use it to hit your testing goals.

But how can you make it even better with tools? What tools are those? Can you buy them or build your own?

That’s what we’re going to cover now:

- What features your repository needs to have (and which are a bonus),

- What tools or services you can use, and

- How to set up a basic repository with simple tools.

So let’s break it all down…

Your learning repository tool should let you:

- structure your experiment data when documenting,

- collaborate and share easily, and

- get a mile-high view of your entire experimentation program.

Anything more is a plus, depending on what matters most to you or your team.
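To make the first and third requirements concrete, here is a minimal sketch in Python of what a structured entry and a program-wide summary could look like. The field names, the outcome labels, and the two sample entries are assumptions made up for illustration; they don't come from any particular tool, so adapt them to whatever your team already tracks.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ExperimentEntry:
    """One documented test. Every field name here is an illustrative assumption."""
    name: str
    target_page: str
    hypothesis: str
    variants: list[str]
    outcome: str  # assumed labels: "win", "loss", or "inconclusive"
    learning: str
    tags: list[str] = field(default_factory=list)


def program_overview(entries: list[ExperimentEntry]) -> dict:
    """Summarize the whole program: total tests, outcome counts, and win rate."""
    outcomes = Counter(entry.outcome for entry in entries)
    total = len(entries)
    win_rate = outcomes["win"] / total if total else 0.0
    return {"total": total, "outcomes": dict(outcomes), "win_rate": win_rate}


# Made-up entries for illustration only; not results from a real program.
entries = [
    ExperimentEntry(
        name="Shorter signup form",
        target_page="/signup",
        hypothesis="Fewer fields will increase form completions.",
        variants=["control", "three-field form"],
        outcome="win",
        learning="Visitors drop off at optional fields.",
        tags=["forms"],
    ),
    ExperimentEntry(
        name="Autoplay hero video",
        target_page="/",
        hypothesis="A product video will increase demo requests.",
        variants=["control", "autoplay video"],
        outcome="loss",
        learning="Autoplay distracted visitors from the main call to action.",
        tags=["homepage"],
    ),
]

overview = program_overview(entries)
print(overview)
```

The same columns work just as well in a spreadsheet or an Airtable base. What matters is that every test is recorded with the same fields, so a summary like this can be produced at any time.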

Use these features to build your own bootstrapped version. But honestly, you don’t have to build it. You can cobble it together instead.

Get Started With Your Own Learning Repository

Now, let’s show you how you can build yours in 4 steps:

1. Use Simple Tools When Starting Out

There are some great tools out there that you can use to build a repository:

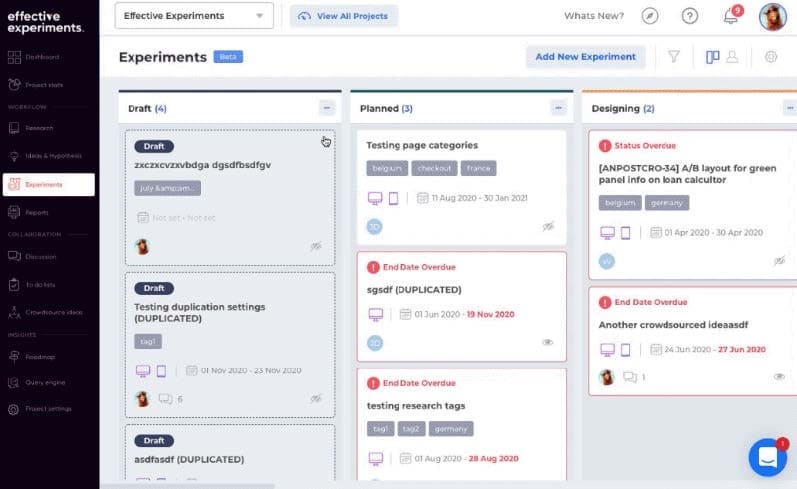

Effective Experiments

Effective Experiments is a tool for documenting experiment data with additional features that help you scale your program and get access to CRO experts for training and consultation.

Its features include:

- Experimentation workflows and processes

- Enhanced quality of data

- Experimentation program insights (so you get that mile-high view of your entire program)

- Collaboration and communication tools

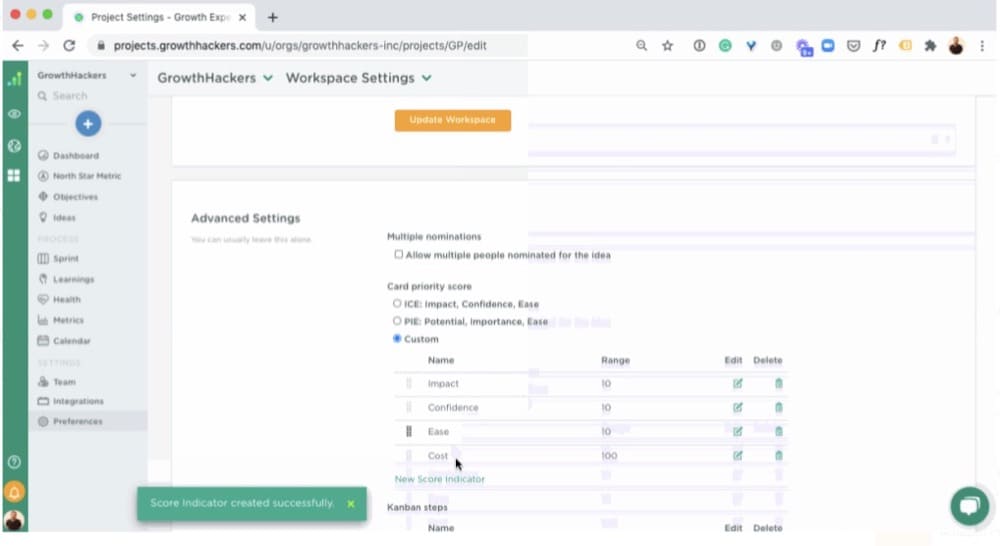

GrowthHackers Tool

Experiments by GrowthHackers helps you build your experimentation learning center in a structured process based on the Growth Hacking methodology.

The key features you’ll find are:

- A dashboard for strategy and metrics

- Collaboration and communication tools

- Hypothesis builder

- Progress reports

- Experiments status tracker

Both of these will work great for you. However, almost all of the experts that we spoke to recommended just using simple tools when starting out — especially if your budget is tight.

Why?

Because you don’t need to have a ‘perfect’ repository from day 1. Instead, you just need something that you can store tests in and use ASAP.

This will help you start seeing results and learning from your A/B testing. It also helps you get buy-in and prove that a learning repository is an asset that can work for you before you invest cash in more expensive options.

Airtable. Quick and easy and free. Otherwise, I like Effective Experiments for bigger programs if they want a tool that bridges the different layers.

– Ben Labay

Build a repository that can be shared across the business easily (we utilise Google Slides for example). Try to keep the repository to one slide per experiment where possible, this will make it easier for the user to digest and for you to present on calls. The repository should be kept up to date so that there is always something new to digest. I would also recommend categorising your repository and providing an index, readers can then drill down into the area of interest.

– Max Bradley

And as we mentioned earlier, even Notion can do the trick.
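If your simple tool can export a list of experiments, the categorised index Max recommends can be generated rather than maintained by hand. The sketch below is one possible approach in Python. The `title` and `category` keys and the sample experiments are hypothetical, chosen only for illustration.

```python
from collections import defaultdict


def build_index(experiments: list[dict]) -> dict[str, list[str]]:
    """Group experiment titles by category, with both levels sorted alphabetically."""
    index = defaultdict(list)
    for experiment in experiments:
        index[experiment["category"]].append(experiment["title"])
    return {category: sorted(titles) for category, titles in sorted(index.items())}


# Hypothetical experiments; in practice these would come from your slides,
# spreadsheet, or Airtable export.
experiments = [
    {"title": "Sticky add-to-cart button", "category": "Product page"},
    {"title": "Guest checkout option", "category": "Checkout"},
    {"title": "Larger product images", "category": "Product page"},
]

index = build_index(experiments)
for category, titles in index.items():
    print(category)
    for title in titles:
        print(f"  - {title}")
```

Readers can then scan the category headings and drill down into the area they care about.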

2. Set the Right Expectation Once You Start Using Your LR

The reputation experimentation has among non-experts is far too optimistic. Blame it on the marketing it’s gotten over the last decade?

Well, you can’t change that.

What you can change—or at least, influence—is what stakeholders in your organization think about experimentation. Because if they walk in with the wrong expectation and your learning repository doesn’t deliver, it’s going to be tough to keep it alive.

From the start, let them know that experimentation gives insights; that most times, it won’t give them definitive pathways to higher revenue as they may have heard.

Start the repository from day 1. Start to educate stakeholders that experimentation is about validation and learning, not necessarily money. The more that you demonstrate “we learned X from this test” or “Y from that test”, the more aligned you and your stakeholders will be.

– David Mannheim

3. Build Buy-in With Proof

If nobody or only a few people are documenting their tests and sharing insights across departments, you’ll need to show why everybody else needs to join the effort.

Demonstrating the benefits of a learning repo to win C-suite support is only the first step. The next thing you’ll have to do is communicate these benefits to other stakeholders as well.

Matthias shared a fantastic way to go about that:

Start small, lean & agile. Get experiments running, collect learnings and spend good effort on documenting them. Make a decent report after every experiment and also have frequent sendout (monthly, quarterly, yearly). Focus on the big fish experiments with positive impact & strong learnings at first. Positive results motivate people to get started with.

Once you have collected a couple of strong impactful learnings it can also be useful to show negative results and explain what negative impact there would have been if we implemented this without experiment. Then explain how important these learnings are for business and for taking better product decisions.

4. Once It’s Working, Start Looking at Specific Tools (Learn and Act First, Purchase Later)

When you’ve set up a simple repo on Google Slides or whatever free platform you choose and completed steps 2 and 3, then you can invest money in a tool.

Don’t jump the gun and pump money into a learning repository until you’re sure there’s an encouraging ROI to realize.

Conclusion

If there’s one big takeaway from this article, it’s that you should begin to view experimentation learnings as a company asset. This asset will save you time and money. And it will power a more data-driven optimization program in your organization.

Don’t just run a test, get a win or a loss, and then hurry to your next idea. Taking the time to document and communicate what you’ve discovered in your learning repository will give structure to your experimentation journey.

On top of that, it’s the smoothest way to pass the baton, assess the impact of tests, and promote an exciting experimentation culture.

And how do you start getting all of this?

Do as the experts say: Start simple.

Written By

Trina Moitra, Daniel Daines Hutt, Uwemedimo Usa

Edited By

Carmen Apostu