Client-side Testing Vs. Server-side Testing: Both Win.

When it comes to running experiments, optimizers can choose between client-side and server-side testing.

While you can run almost every client-side test on the server side, and a few lightweight backend experiments on the client side (using split URL or redirect experiments), doing so won’t be as practical or robust as you’d like, because for any given hypothesis, one of the two approaches usually works best.

Choosing the right one takes careful consideration. Weigh the setup’s impact on speed and SEO, the effort and time the experiment life cycle requires, the experiment’s goal, and more.

Let’s go over these factors, see how client-side testing differs from server-side testing, and look at the pros and cons of each.

Client-side Testing Vs. Server-side Testing

What’s the difference between client-side testing and server-side testing?

In client-side testing, once a user requests a page, your server delivers it as usual. But your experimentation tool then runs JavaScript inside the user’s browser to alter the content the server delivered, so that the end user sees the appropriate variation based on your targeting rules. (The browser is the “client.”)

In server-side testing, on the other hand, once a user requests a page, your server determines the version to deliver and delivers just that. Your experimentation tool works on the server and not inside your user’s browser.
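To make the difference concrete, here’s a toy Python model of what reaches the visitor in each setup. It’s a sketch, not how Convert or any other tool works internally: the headline strings and function names are hypothetical, and it assumes the client-side script runs after the browser has already painted the server’s HTML.

```python
from __future__ import annotations

# Hypothetical page content for a headline test.
ORIGINAL_HTML = "<h1>Original headline</h1>"
VARIATION_HTML = "<h1>New headline</h1>"


def server_side_response(variation: str) -> dict:
    """Server-side: the server already knows the variation and sends only that markup."""
    html = VARIATION_HTML if variation == "B" else ORIGINAL_HTML
    return {"html": html, "script": None}


def client_side_response() -> dict:
    """Client-side: the server always sends the original markup, plus a script
    the browser runs to apply the variation's changes."""
    return {"html": ORIGINAL_HTML,
            "script": {"find": ORIGINAL_HTML, "replace": VARIATION_HTML}}


def what_the_visitor_sees(response: dict, variation: str) -> list[str]:
    """Return the sequence of page states the browser paints."""
    # Assumption: the server's HTML is painted before any script runs.
    frames = [response["html"]]
    script = response["script"]
    if script and variation == "B":
        # The browser-side script swaps the content after the first paint.
        frames.append(frames[-1].replace(script["find"], script["replace"]))
    return frames
```

In the client-side case, a visitor in variation B can briefly see two page states, which is the root of flicker. In the server-side case, only one state ever reaches the browser.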

Because client-side testing relies on JavaScript executing in the browser, you can only test surface-level things such as layouts, colors, and messaging with it. Some optimizers dub these “cosmetic” tests.

However, that label sells client-side testing short.

Client-side Testing Might Seem Simple, but It’s Potent.

It’s easy to dismiss client-side A/B testing as the “easy testing” that anyone can do. Agreed: it’s easy to implement. And sometimes, it can be as small as testing a different CTA button color or copy.

But whether it’s a small tweak like this or something as big as a redesign or a revamped page, client-side testing can move a business’s bottom line.

What is client-side testing?

In a nutshell: client-side testing means the optimization takes place at the browser level. Based on the targeting rules you set up, the visitor’s browser modifies the content to deliver the intended version.

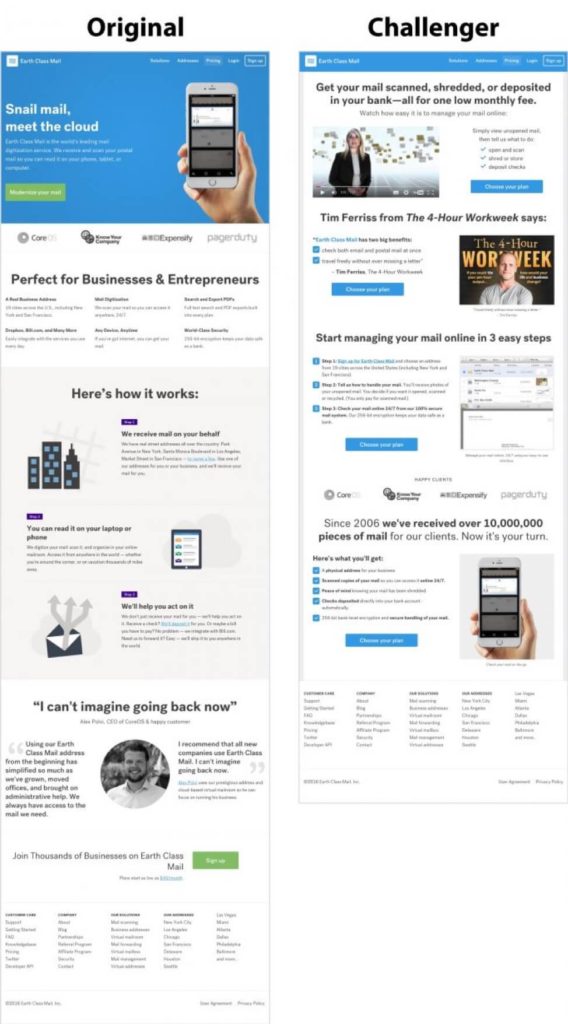

In this case study, a SaaS company used Convert Experiences as its client-side A/B testing tool to increase leads from its homepage by 61%:

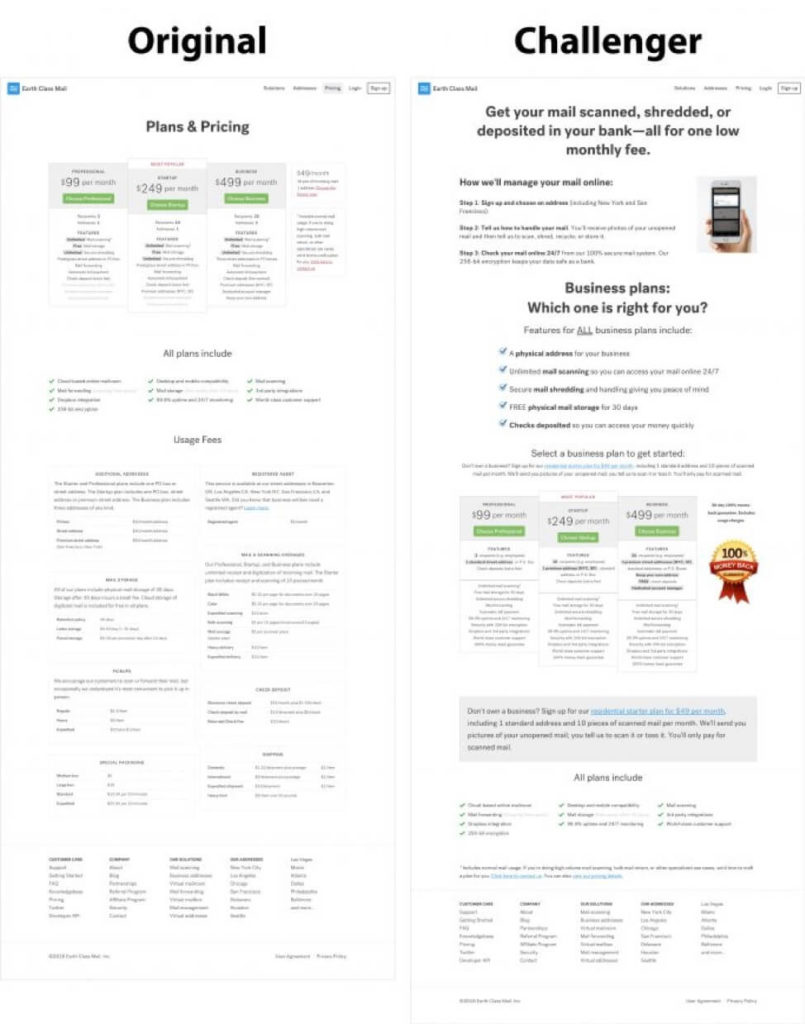

Here’s another client-side experiment the same SaaS company ran with Convert Experiences, this time on its pricing page, which led to a 57% increase in leads.

Most conversion optimization success stories you see online are client-side tests that successfully optimized an experience at the surface level and won big.

But Server-side Testing Indeed Lets You Test More.

When you need to test deeper than the frontend, you need to go with server-side testing.

What is server-side testing?

Server-side testing is a type of experiment where the web server determines the version of content to deliver. In server-side testing, all optimization is implemented directly on the server rather than inside visitors’ browsers.

Let’s put this into perspective with a few scenarios.

If you were an eCommerce business, you could use a client-side A/B test to learn whether a redesigned search bar increases on-site searches (and results in more sales).

But if you wanted to test a new search algorithm that surfaces more relevant results (and, in the long term, drives more sales), you’d need to run a server-side A/B test.
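Here’s a minimal sketch of what that server-side switch might look like. The product fields, scores and ranking functions are hypothetical placeholders, and the variation is assumed to be assigned already. The point is that the server runs a different code path per variation, something a browser script can’t reproduce because the results arrive already ranked.

```python
from dataclasses import dataclass


@dataclass
class Product:
    name: str
    keyword_hits: int  # hypothetical: query words found in the product title
    relevance: float   # hypothetical: score from a new relevance model


def rank_control(products):
    """Existing algorithm (hypothetical): order by keyword matches."""
    return sorted(products, key=lambda p: p.keyword_hits, reverse=True)


def rank_variation(products):
    """Candidate algorithm (hypothetical): order by relevance score."""
    return sorted(products, key=lambda p: p.relevance, reverse=True)


RANKERS = {"control": rank_control, "variation": rank_variation}


def search(products, variation):
    """Run the ranking code path for the visitor's assigned variation.

    The browser only receives the finished list of names."""
    return [p.name for p in RANKERS[variation](products)]
```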

If you were a B2B SaaS business instead, you could run a client-side experiment to determine whether a certain UVP works better on your homepage, or whether long-form copy beats short-form copy.

But if you wanted to test a faster backend and see whether it improves retention or engagement, you’d need a server-side experiment. The same goes for testing a new onboarding sequence: besides supporting your new onboarding workflow, server-side testing lets you orchestrate a multi-channel experiment spanning email, SMS and other channels across different devices.

Likewise, if you were a B2C SaaS business, you’d be able to run a client-side experiment to learn if a certain pricing plan could work better than the others.

However, if you wanted to test a better recommendation engine, you’d have to go for server-side testing.

As these use cases show, server-side testing is geared more toward building better products than toward winning immediate conversions. Unlike client-side experiments, which focus on immediate sales or conversions, server-side experiments optimize the product or solution itself so that customer lifetime value increases.

You could say that if client-side testing is for marketers, then server-side testing is primarily for product and engineering teams. And A/B testing tools like Convert Experiences offer both client-side and server-side testing to accommodate both marketing and engineering teams.

Because testing such deep product-level changes takes a lot more than simple browser-based JS manipulation, it can’t happen inside the browser and needs to be tackled at the server level.

While server-side testing has its own unique use cases, some companies use it even for cosmetic tests that would run glitch-free on the client side.

They often do so to avoid “flicker” or the “Flash of Original Content” phenomenon. Flicker happens when the experimentation tool changes the original content served by the server after the end-users have already seen it. Imagine your users seeing a certain headline and then seeing it change in a flash to another one. (Yes, flicker can seriously compromise a user’s experience!)

At other times, they do so for speed. A well-implemented client-side test shouldn’t cause serious performance issues, but loading and running the testing script in the browser can still add to a website’s perceived loading time. Running the test server-side avoids that extra step.

Occasionally, a company might run a server-side experiment in place of a client-side one because of privacy or security concerns. Since both the audience targeting and the experimentation code live on the server in server-side testing, the company gets better control over privacy and security.

But implementing a server-side experiment isn’t always feasible, especially when a client-side test would do just as well.

Implementing Server-side Experiments

In client-side testing, you only need limited design and development resources to build your experiments and execute them. You won’t even need these if you’re only making text changes or changing the color of a button. All you’d need to do is:

1. Log in to a tool like Convert.

2. Use the WYSIWYG editor and build the variations.

3. Set up the experiment (set the audience targeting conditions, experiment duration, sample size and split, confidence level, etc.)

4. Grab the JS code and add it to your website.

And done.
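Step 3 asks for a sample size and confidence level. Testing tools typically calculate these for you, but as a rough illustration of how they relate, here’s the textbook approximation for comparing two conversion rates with a two-sided test. The conversion rates, traffic figures and the default power of 80% are assumptions for the example, and this isn’t necessarily the exact calculation Convert uses.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, expected_rate,
                              confidence=0.95, power=0.80):
    """Visitors needed in EACH variation to detect the change from
    baseline_rate to expected_rate (two-proportion z-test approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline_rate + expected_rate) / 2
    spread = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
              + z_beta * math.sqrt(baseline_rate * (1 - baseline_rate)
                                   + expected_rate * (1 - expected_rate)))
    return math.ceil(spread ** 2 / (expected_rate - baseline_rate) ** 2)


def duration_days(n_per_variation, variations, daily_visitors):
    """Rough test length, assuming traffic is split evenly across variations."""
    return math.ceil(n_per_variation * variations / daily_visitors)
```

For example, `sample_size_per_variation(0.05, 0.06)` estimates how many visitors each variation needs to detect a lift from a 5% to a 6% conversion rate, and `duration_days` turns that into an approximate test length for a given amount of daily traffic. Smaller expected lifts or higher confidence levels push the numbers up quickly.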

You’d then seek development help to roll out the winning version if the control happened to lose.

Server-side testing, however, isn’t so straightforward.

Here, you’ll have to:

1. Create your experiment in Convert Experiences

2. Develop and deploy all the variations of your experiment on your server.

3. Map your server-deployed experiences in Convert Experiences using custom code (by using your experiment’s id, the ids of the variations as set in your experimentation tool and more).

In server-side experiments, your code needs to tell the server which variation to show the current user. You can use cookies for this. For example, to implement a server-side A/B test with Convert, you’ll set up a cookie that holds your experiment and variation data.

The server then reads the cookie and serves the matching version for that session and all subsequent ones.
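Here’s a minimal sketch of that cookie round trip using Python’s standard `http.cookies` module. The cookie name, the `experiment.variation` value format and the IDs are all hypothetical placeholders, not Convert’s actual cookie format, so check Convert’s documentation for the real one.

```python
import random
from http.cookies import SimpleCookie

COOKIE_NAME = "exp_assignment"    # hypothetical cookie name
EXPERIMENT_ID = "1001"            # placeholder experiment ID
VARIATION_IDS = ["2001", "2002"]  # placeholder IDs: control, variation


def get_or_assign_variation(cookie_header):
    """Return (variation_id, set_cookie_header or None) for one request."""
    cookie = SimpleCookie()
    cookie.load(cookie_header or "")
    if COOKIE_NAME in cookie:
        exp_id, _, var_id = cookie[COOKIE_NAME].value.partition(".")
        if exp_id == EXPERIMENT_ID and var_id in VARIATION_IDS:
            return var_id, None  # returning visitor: keep the same variation

    # New visitor (or unreadable cookie): pick a variation with a 50/50 split.
    var_id = random.choice(VARIATION_IDS)
    out = SimpleCookie()
    out[COOKIE_NAME] = f"{EXPERIMENT_ID}.{var_id}"
    out[COOKIE_NAME]["path"] = "/"
    out[COOKIE_NAME]["max-age"] = 60 * 60 * 24 * 90  # keep it for ~90 days
    return var_id, out.output(header="Set-Cookie:")
```

The server would call this once per request, render the returned variation, and attach the `Set-Cookie` header only when one was produced.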

Because your server determines which version to send to a user, the targeting happens on the server (not inside the browser, as with client-side testing). Your testing precision depends on how well you can code your targeting conditions on the server. With client-side testing, though, you can laser-target your audience for all your experiences.
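As a sketch of what coding your targeting conditions can mean, here’s a hypothetical rule set evaluated on the server. The visitor attributes and rules are made up for illustration; in practice the server has to derive them itself, for instance from request headers, a geo-IP lookup or its own user records.

```python
from dataclasses import dataclass


@dataclass
class Visitor:
    country: str        # assumed: from a geo-IP lookup
    device: str         # assumed: "desktop" or "mobile", derived from the request
    is_returning: bool  # assumed: from your own session or user records


# Hypothetical targeting rules for one experiment: all of them must pass.
TARGETING_RULES = [
    lambda v: v.country in {"US", "CA"},
    lambda v: v.device == "desktop",
    lambda v: not v.is_returning,
]


def is_eligible(visitor: Visitor) -> bool:
    """Only eligible visitors enter the experiment; others see the default page."""
    return all(rule(visitor) for rule in TARGETING_RULES)
```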

Server-side testing also gets more complex in a multi-server setup, or when a CDN needs to be integrated.
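One common way to keep assignments consistent across several servers is deterministic hashing: instead of storing a random choice, each server hashes the user ID together with the experiment ID and maps the result to a variation. This is a general technique, sketched here under assumptions (a stable user ID is available and the weights sum to 1), not a description of how Convert handles multi-server setups.

```python
from __future__ import annotations

import hashlib


def assign_variation(user_id: str, experiment_id: str,
                     weights: dict[str, float]) -> str:
    """Map a user to a variation deterministically.

    Every server running this function with the same inputs returns the
    same variation, so no shared assignment store is needed."""
    key = f"{experiment_id}:{user_id}".encode()
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) / 0xFFFFFFFF  # roughly uniform value in [0, 1]

    cumulative = 0.0
    for name, weight in weights.items():
        cumulative += weight
        if bucket <= cumulative:
            return name
    # Guard against floating-point rounding leaving bucket just above the total.
    return list(weights)[-1]
```

Because no shared state is needed, any server (or edge worker that can run the same logic) returns the same variation for the same user. Hashing with the experiment ID also means a user’s bucket in one experiment doesn’t dictate their bucket in the next.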

4. Run the experiment.

5. Roll out the winning version and roll back the losers.

After the final rollout or rollback, you might also need to clean up your servers.

As you can see, the life cycle of a server-side experiment is much longer and more complex than that of a client-side one. That’s why choosing server-side testing deserves some deliberation.

In General, You Wouldn’t Run a Server-side Experiment If a Client-side One Would Do…

Running even a single server-side experiment is challenging because developing and rolling it out is a more resource-intensive and time-consuming process.

Besides, if you use server-side testing to test changes that can be easily validated client-side, hitting a good testing velocity and a robust experimentation program will be difficult.

And when good client-side A/B testing tools let you run these experiments flicker-free without hurting your SEO or speed, moving them server-side isn’t the best use of your testing bandwidth.

Server-side experiments should be preferred only when they make a strong case for the given hypothesis. And that happens fairly often, because many experiments that move a business’s bottom-line metrics can only run on the server side.

So tell us… have you run any server-side tests? If so, what was the most difficult part of the process? Oh, and if you want to run a server-side A/B test, check out Convert (it’s free for 15 days!)

Written By

Disha Sharma

Edited By

Carmen Apostu