How to Work with A/B Testing Tools for Optimization Success? Top 6 Factors Explained

Investing in the right A/B testing tool for your business is only the first step. The real magic lies in your processes and strategy.

But what processes and strategies position your optimization program for the best possible results?

In this article, we’ll show you 6 ways you can make your A/B testing tool work as hard for you as possible and get the highest ROI.

But before we start, there are some key aspects to keep in mind when using A/B testing tools. These aspects have to do with:

- The limits of the tools

- The limits of the vendor team behind the tool, and

- The limits of your own optimization processes and culture which may introduce snags in the successful use of the A/B testing platforms.

Now, let’s dive into those 6 ways to extract maximum benefits:

1. Start with Education (Don’t Skip This)

This is where you invest in the people using the tools. Because, whether free or paid, the gains from your A/B testing tool are only as good as the hands wielding it.

A/B testing software in the hands of a beginner is not the same as that same software in the hands of an expert.

Ace optimizer Simar Gujral of OptiPhoenix understands this. He conducts tool training for new hires but goes beyond “how to use an A/B testing tool” to emphasize process training and strategy.

Jonny Longden of Journey Further says 80% of your investment should be in people and 20% in technology.

So, your first step in getting maximum ROI benefits from optimizing is educating yourself and your team on the right A/B testing processes and strategies.

You can get started with CXL’s CRO and A/B testing program. In fact, as a Convert customer, you’ll get access to this CXL training program.

Training is what prepares a tester to:

- Come up with great hypotheses to test

- Prioritize testing high impact areas first

- Read the data correctly and extract valuable insights

- Find test ideas

- Test the right things

… and more.

It is the foundation of what’s right about top-performing A/B tests. Because…

The Tool is Only as Good as the Hypothesis

Let’s face it: Your tool will only do your bidding.

And if your bidding is grounded on poor hypotheses, A/B testing won’t give you the results you desire.

There are ways to build a great hypothesis, but only when it is built on sound data. That means more than collecting data; it also means processing and interpreting it. So approach the data analysis that informs your hypothesis with business growth in mind.

Use your data to find problems within your product or business and reveal opportunities for improvement. That’s where to start.

This is also an educational springboard for future hypotheses. Learning from tests illuminates new insights into what works and what doesn’t. The common mistake here is seeing a failed test as a failure.

All of these experiments help you see further into your strategies—to avoid a wrong turn or to go forward with what works—either way, you’re using your tool correctly.

Create a strong hypothesis using our free A/B testing hypothesis generator.

The Best Hypothesis Can Get Derailed Without Proper Execution

Even if your hypothesis is on point, you may not get the most out of it without proper execution.

This could happen for a number of reasons.

For instance, you might be

- Running the tests for too long

- Not running the tests long enough

- Running too many tests at the same time

- Testing at the wrong time, or

- Setting up the test the wrong way.

A major contributor to the problems here is an absence of one or more of 3 things:

- The right experimentation mindset and tactics

- Quality technical and theoretical know-how

- Sufficient understanding to use the tool.

For the first two, top-quality education and hands-on experience cover it. For the last one, your A/B testing tool owes you an onboarding session that removes all misunderstandings unique to your use case.

Here at Convert, we’ve found that getting value from our app correlates strongly with customers having robust technical training and seeking out tool education with our experts.

Ensure that the app onboarding you are provided is user-centric and not feature-centric. You want a vendor who has taken into account the following factors when designing onboarding:

- The core value you seek from the tool

- The steps you have to take to experience that core value

- The friction you experience along the way

- Your ease of use.

And when it’s time to push your winning experiments live, they might get stuck in the development queue. Maybe there isn’t a dedicated developer on the optimization team, or the main web development team is busy on “bigger” projects.

But these small changes can have big effects—and you’ve proven it too—so they deserve equal attention from developers. Split your product dev queue so that small fixes get the same attention that big and urgent tasks do.

2. Use the Tool’s Flexibility to Your Advantage

Another way to use your tool to the best of its ability is to take advantage of its full range of features—but only as it applies to your unique use, of course.

You can use:

Integrations

Integrations are the backbone of creating an interconnected stack of teamed-up tools to supercharge your A/B testing, marketing, or conversion rate optimization efforts.

It could be to extract data from multiple tools of your stack and inform hypotheses, send this data to as many platforms as possible including Google Analytics, or run A/B tests on your email marketing campaigns.

Here’s what Silver Ringvee, CTO at Speero, says about this:

Make sure you don’t keep your data in isolation within your testing tool. I’d recommend pushing your experimentation data to as many relevant tools and destinations as possible. This way you can dig deeper into variant groups in your analytics tool, analyze the user behavior using something like Hotjar, and calculate the impact on long-term metrics like LTV or churn within your data warehouse.

Self Service Payment

This one is especially true for businesses that are just starting out with A/B testing and don’t really have an endless budget to get by. The aim is to steadily improve your testing velocity.

So make sure that the tool allows you to purchase more tested users as needed, without pausing your plan till you upgrade to a higher-priced tier (after tiring conversations with sales reps).

Ideally, you can upgrade whenever you want to access features you only need sporadically, and downgrade when your testing program is going through a lull so that you can use the budget to upskill your team. Don’t miss out on this opportunity.

Also, pricey tools come with a big promise that quickly devolves into hype. Don’t get sold on the bells and whistles that will let you test everything. You rarely need to do that.

Instead, focus on the tool that will let you test what your business needs. And this is where Education and Test Strategy come in.

3. Check Up on Tool Introduced (Validity) Threats

Before you put all your trust in your results, make sure you fully understand how your chosen tool works and how you’ve implemented the test.

The goal here is to see if your data has been corrupted in some way, so that you’ll know how much confidence to place in the results.

Does the A/B Testing Tool Affect Your Core Web Vitals?

Make sure you’ve implemented your A/B testing tool in a way that does not decimate your search engine rankings. You don’t want the SEO vs. CRO debate. The two go hand in hand.

There’s almost no change you’d make to your site for CRO purposes that will upset your standing with Google. Any change you make will usually affect keywords, page content, and user experience. That’s just 3 of the 200+ ranking factors.

And since you increase conversions on those pages, you’re also sending positive signals to Google that people love your content. There’s a lower bounce rate and the number of visitors is increasing.

According to Rand Fishkin from Moz, as long as you’re not making insane changes to your page, you should see CRO and SEO as teammates — not opposing factors.

Does Your A/B Testing Tool Cause Flickering?

You don’t want the blink skewing test data. Pick a tool that does not flicker.

Flicker can ruin the integrity of the data you collect from experimentation because it becomes obvious to your website visitors that something strange is going on with your webpage. When they get a glance at the original version before the variant appears, it raises question marks in their minds. It goes without saying that showing different versions of your page to the same visitor mars user experience.

You need to deploy the tool code in a way that explicitly supports no blinking. Here’s how you can do that.

Here’s what the experts have to share about some of the real-world effects of flickering in website optimization:

Even though we do enjoy having personalization in our experiences, in many cases we don’t want to know we’re being personalized to, the reason being is that we want to be in CONTROL, according to the self-determination theory, we want to have autonomy and “CONTROL” of what we’re doing.

Therefore having the flicker effect where it shows the control for seconds and then it changes to the variant; we can’t go back to the first version even if we try to reload the page or go back since the cookie has been saved. It causes mistrust and anxiety. “Why can’t I go back to what I’ve seen before?” “I liked the first page I saw, how can I go back?”

In a nutshell, it causes mistrust to the brand that has this issue, it increases bounce rate and loss in conversions.

Carlos Alberto Reyes Ramos, Speero

Flicker affects your tests in MANY ways. Any time you can entirely remove flicker in your experiments – do it. There are strategies you can take to ensure flicker doesn’t happen – I recommend taking those strategies as MUCH as you can, especially for tests involving components above the fold. Alternatively, if you know a specific component you want to test is more prone to flicker – consider redesigning your test so it still tests the hypothesis but doesn’t modify that particular component.

I’ve been part of experiments where we were doing price promo testing. Can you imagine if your flicker took so long to run, that a user saw one promo, then it flickered to another promo? I’d be furious as a user. If the flicker is bad enough, you honestly have to factor into your experiments whether a test lost due to the hypothesis being proven wrong, due to the flicker, or due to both! You’re testing two variables in this case, not one.

If you’re concerned about how much of an impact flicker may be causing to your site, you may opt into testing the same landing page experience but introducing flicker. This way, you’ll be able to test flicker and analyze the results in a ‘non-inferior’ way to see the impacts flicker may be causing for that specific element.

Generally, this is a last-ditch effort if flicker is unavoidable – you should always do your best to be running experiments which do not have any kind of flicker.

Shiva Manjunath, Speero

Does Your A/B Testing Tool Take Privacy Factors into Consideration?

Often when the A/B testing tool does not use first-party cookies, because of tracking prevention across browsers, existing site visitors may be recounted in reports or, worse still, exposed to both the treatment and the control.

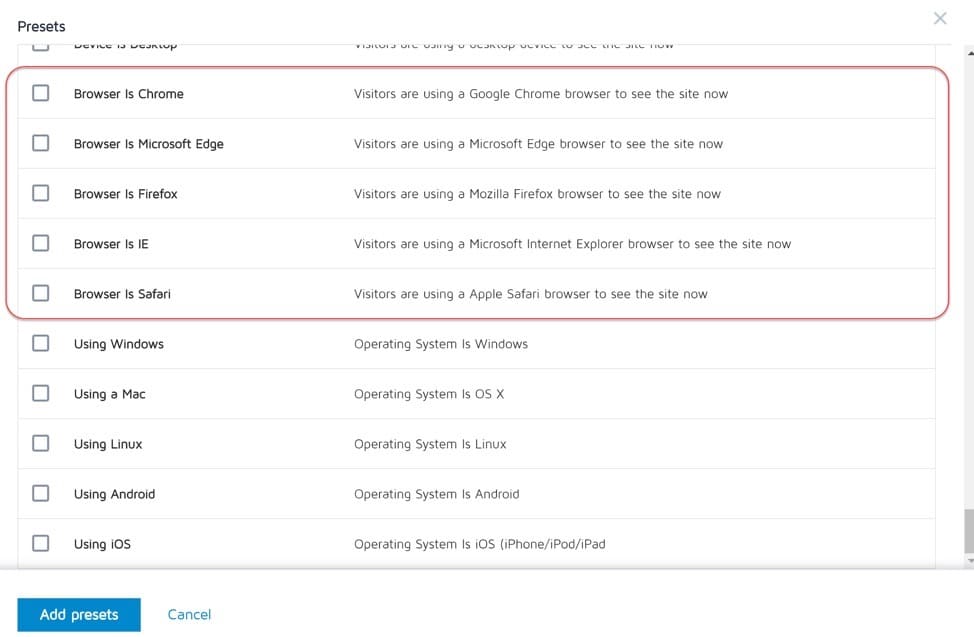

This tracking prevention includes:

- Intelligent Tracking Prevention (ITP) from Safari

- Enhanced Tracking Protection (ETP) from Mozilla, and

- Tracking Prevention from Microsoft Edge

Depending on what percentage of your traffic uses these browsers, the magnitude of the effect they will have on your marketing analysis will vary.

Because of these preventions, browsers retain cookies for different lengths of time, from as long as 30 days to as short as 24 hours. Since cookies help tools recognize users, this affects the new user count and messes with conversion rate calculation.

Say, for instance, the cookie shelf life is 24 hours. The same person visiting your site 2 days apart will then be recorded as 2 different users.

If this happens to 100 users and 50 of them end up converting, the reported conversion rate (the number of conversions divided by the number of users) will be 0.25, because those 100 users are recorded as 200 different people. The true conversion rate is 0.50.
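To make the arithmetic concrete, here is a minimal Python sketch of the example above. It assumes every one of the 100 real users returns once after the cookie has expired, so each is counted twice; the function and variable names are illustrative only.

```python
def conversion_rate(conversions: int, users: int) -> float:
    """Conversions divided by the number of users the tool counted."""
    if users <= 0:
        raise ValueError("users must be positive")
    return conversions / users


real_users = 100
conversions = 50
# Assumption: each person visits twice, and the second visit falls
# outside the 24-hour cookie window, so they look like a new user.
counted_users = real_users * 2

true_rate = conversion_rate(conversions, real_users)
reported_rate = conversion_rate(conversions, counted_users)

print(f"true rate:     {true_rate:.2f}")
print(f"reported rate: {reported_rate:.2f}")
```

The conversions stay the same; only the denominator grows, which is why short cookie lifetimes drag the reported rate down.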

This impacts various user-related metrics. For you to continue getting the data you need for your tests and marketing analysis with as much accuracy as possible, choose a tool that has solutions for a cookieless world.

Does Your A/B Testing Tool Cause Cross-Contamination between Control and Treatment Groups?

Another thing that can ruin the integrity of your test results is cross-contamination between control and treatment groups. The outcome of your test is not supposed to be influenced by that of another test.

If you intend to run multiple tests on a website or webpage, your tool needs to come with a valuable feature: collision prevention.

If your test design does not take into account the spillover of impact from the control group to the variant group (which is common in social media experiments) and you’ve not prevented the collision, then this is a legitimate A/B testing pitfall.
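One common way to keep concurrent tests from colliding is to make them mutually exclusive, so each visitor enters only one of them. The Python sketch below shows the idea with deterministic hashing. It is an illustration under stated assumptions, not how Convert or any other specific tool implements collision prevention; the function names, the experiment names, and the visitor ID format are all hypothetical.

```python
import hashlib


def _bucket(key: str, buckets: int) -> int:
    """Map a string key to a stable bucket number in [0, buckets)."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def assign(visitor_id: str, experiments: list[str]) -> tuple[str, str]:
    """Place a visitor in exactly one experiment, then pick a variant.

    Hashing the visitor ID means the same visitor always gets the same
    answer, and no visitor is exposed to two experiments in the list.
    """
    if not experiments:
        raise ValueError("at least one experiment is required")
    # Step 1: choose the single experiment this visitor may enter.
    experiment = experiments[_bucket(f"layer:{visitor_id}", len(experiments))]
    # Step 2: split that experiment's visitors between control and variation.
    in_variation = _bucket(f"{experiment}:{visitor_id}", 2) == 1
    return experiment, "variation" if in_variation else "control"


running = ["hero-copy", "cta-color", "pricing-table"]  # hypothetical tests
print(assign("visitor-123", running))
```

The trade-off is traffic: each experiment only receives a share of your visitors, so tests take longer to reach their sample size.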

4. Look Under the Stats Hood & Consider Hiring Dedicated Talent

The tool is not the number one place to invest most of your testing budget. If that’s your strategy, you may even struggle to prove the ROI of your A/B tests.

If your organization is on the path to fully imbibing an experimentation culture and making data-driven decisions all the way, even if you’ve successfully democratized data and gotten all hands on deck in that area, it still makes perfect sense to invest in a dedicated talent for that purpose. And if you can afford it, a team.

This is because experimenting doesn’t yield desired results in the long term when it’s just a side task for someone on your marketing team. Even just a 50% tester will always outperform a 1% tester.

You’d also want to focus more on the leadership and communication skills of your talent to promote that testing culture in your organization. Coming up with great hypotheses and running sound A/B testing, split testing, or multivariate testing are skills that can be learned.

When it comes to your A/B testing tool, you want to be able to trust the results you’re getting. Go with an option that’s open about their statistical approach.

Whether Bayesian or Frequentist, your dedicated talent with a solid statistics background should be able to understand how those numbers are calculated. That way you can extract much more accurate insights and get full value for the money invested in your tool.

Even if you’re using one of the best free A/B testing tools, Google Optimize, you need this information. Unfortunately, all you can learn about GO is that it uses Bayesian statistics; it won’t share its priors with you. This is a lack of transparency and a big issue.

Maybe it is time to consider transitioning to more trustworthy testing?

On the other hand, with Frequentist stats engines, collaborators may look only at statistical significance levels and draw incorrect conclusions. Ah, the illogical sin of peeking! You’re supposed to wait until the test reaches its planned sample size.

What you can do about this is set rules against peeking. You don’t want people running with erroneous conclusions that impact the quality of decisions.
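A rule against peeking is easier to enforce when everyone knows the target sample size up front. Here is a rough Python sketch that estimates it with a standard textbook approximation for comparing two conversion rates. The 80% power default, the function name, and the example inputs are assumptions for illustration; your tool’s own calculator may use a different formula.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(
    baseline_rate: float,
    min_relative_lift: float,
    alpha: float = 0.05,
    power: float = 0.80,
) -> int:
    """Approximate visitors needed per variant for a two-tailed test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1 and p1 != p2):
        raise ValueError("rates must be between 0 and 1 and must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-tailed critical value
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


# Example: 5% baseline conversion rate, smallest lift worth detecting is 20%.
required = sample_size_per_variant(0.05, 0.20)
print(f"Wait for about {required} visitors per variant before judging the test.")
```

Smaller lifts need far more traffic, which is a good reason to agree on the minimum lift worth detecting before the test starts.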

Always go for vendors with transparent stat engines.

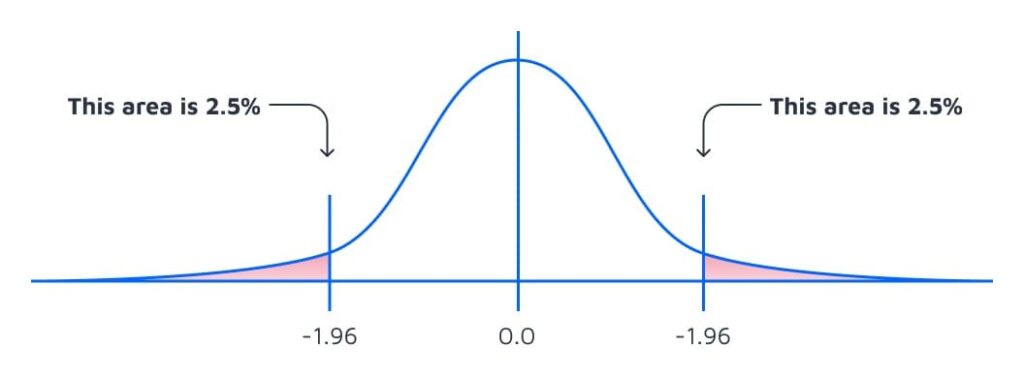

Convert Experiences uses a 2-tailed Z-test at a 0.05 significance level (95% confidence), that is, 0.025 in each tail of the symmetric normal distribution, with the option to change the confidence level between 95% and 99%.
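If you want to sanity-check numbers like these yourself, a 2-tailed Z-test for two conversion rates fits in a few lines of Python. This is the textbook pooled version, offered as a sketch; it is not confirmed to match Convert’s exact calculation, and the function name and the traffic figures are made up for illustration.

```python
import math


def two_tailed_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int,
                      alpha: float = 0.05) -> tuple[float, float, bool]:
    """Return (z score, p-value, significant?) for B versus A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("cannot test when the pooled rate is 0 or 1")
    z = (p_b - p_a) / se
    # Two-tailed p-value: probability mass in both tails beyond |z|.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value, p_value < alpha


# Hypothetical results: control 200/4000, variation 250/4000.
z, p, significant = two_tailed_z_test(200, 4000, 250, 4000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 95%: {significant}")
```

Passing `alpha=0.01` corresponds to the 99% confidence setting, and the same hypothetical result no longer clears that stricter bar.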

5. Set Your Tool Up for Use & Adoption

One of the barriers for other members of your organization adopting a testing culture is that some of these tools come with a rather steep learning curve.

But you can make things a little more welcoming and easier for the average user to grasp. Here’s how:

Start Right

You can easily overwhelm others if there are lots of features that seem too technical to even bother with.

If you aren’t using these fancy features, go for a lightweight tool that cuts feature bloat. VWO’s products are well set-up for this (Yup, a competitor… but this aspect of theirs is really amazing).

Use Your Tool’s Project Management Features

This is a fantastic way to include others and seamlessly work on A/B tests as a team.

Also, other people can utilize the same tool for different purposes. For example, Convert has the ability to have multiple projects in one account, with each project capable of handling unlimited sub-domains.

Nomenclature Is Important

Set up in such a way that anybody on your team can hop on the tool and get an idea of what’s been happening. Familiarity, in this case, breeds adoption.

Creating a naming convention for your tests might seem like overkill when you first start out, but as your test velocity increases and iterations of old tests rise, you’ll be glad you did.

The name of an A/B experiment should be short and descriptive. A good name contains the following information.

- Author (developer or team – only relevant if multiple teams are working under one account)

- Targeted page(s), page type, or page group

- Changes (a short description of what changes are being tested)

- Target audience(s) (device group, traffic source, geolocation, etc.)

- Version information

Some examples:

Amazon marketing – Benefits HP ATF – Mobile – V2 (HP stands for Home Page and ATF stands for Above The Fold)

Amazon marketing – Promote Link to Reviews on Landing Pages – Desktop

Amazon Product – Priority 1 – Stationary Compare feature PDP – Mobile (Relaunch).

Silver Ringvee, CTO, Speero
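A naming convention sticks better when nobody has to remember it. As a small sketch, the Python helper below assembles a name from the parts listed above and skips any that don’t apply. The function name, the parameter names, and the choice of separator are assumptions made for this example.

```python
from typing import Optional

SEPARATOR = " – "  # matches the separator used in the examples above


def build_experiment_name(
    changes: str,
    author: Optional[str] = None,
    pages: Optional[str] = None,
    audience: Optional[str] = None,
    version: Optional[str] = None,
) -> str:
    """Join the non-empty parts in a fixed order: author, pages,
    changes, audience, version."""
    if not changes or not changes.strip():
        raise ValueError("a short description of the change is required")
    parts = [author, pages, changes, audience, version]
    return SEPARATOR.join(p.strip() for p in parts if p and p.strip())


print(build_experiment_name(
    author="Amazon marketing",
    changes="Benefits HP ATF",
    audience="Mobile",
    version="V2",
))
```

Because the order is fixed, test names sort and scan consistently in your tool’s experiment list, even when several teams share one account.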

Make Sure You Are Using Vendor Support Well

Understand all the ways you can reach the support team. You’ll need a highly responsive and knowledgeable team to provide you with the assistance you need when running tests.

Figure out if email, chat, and phone support are available in your plan.

Check out this A/B testing tools comparison. (Note that Convert offers all 3 support options with all plans.)

Also, use your CSM as an accountability partner. Let them know your goals, so they can point you in the direction of the right resources, tools-wise. If you don’t have dedicated marketers or experimentation talent or need consultations, your vendors often know the pros who are great at using their tool and would be happy to make the introduction.

6. Measure the Right Output For Your A/B Testing Program

It seems you can’t get much-needed attention to an A/B testing program if you don’t attribute it to revenue gains. Usually, executives demand exact numbers to validate the need for an A/B test.

“What percentage lift should we expect? And how much will that add to this year’s revenue?”

But that is not what experimentation is designed for. A/B tests are meant to add a measure of certainty or confidence to ideas — whether a hypothesis is true or not.

In fact, that approach can lead to problems such as:

- Setting expectations that cannot realistically be met,

- Attributing gains to A/B testing alone and ignoring other key players,

- Seeing a failed test as a total failure, instead of insights into what works (profit growing) and what doesn’t (risk mitigation),

- Creating inaccurate extrapolations of test results.

That being said, you don’t want to use your A/B testing tool to track link clicks. Instead, choose the right A/B testing goal. And leverage the power of your tool’s Advanced Goal options to get granular about what you are tracking & why.

Here’s how to choose the right goals and metrics you should be tracking:

- Start with the goals that matter to your business. That way you can choose A/B testing goals that align with the goals of the business.

If the goal of the business is to increase revenue by acquiring more customers, your experimentation should be geared towards generating leads.

- Pick your primary and secondary goals. Primary would be those that directly link to the goals of the business, such as app downloads and demo requests.

Secondary goals support primary goals because they are micro-conversions that usually lead to the achievement of the primary goal at some point in the future. These could be engaging with your content or signing up for newsletters.

The idea here is to recognize factors that contribute to achieving the main business goals.

- Decide on what to measure. These are your key performance indicators (KPIs) — metrics that indicate where you are in relation to the main business goals.

When you recognize this, it’s easier to actually measure and improve those metrics that directly impact positive growth for the business.

If you don’t set up your goals correctly, you will either celebrate micro-goals that don’t move the needle or constantly invest in “Big Sky Ideas” that are difficult to calibrate, design, deploy, and end up looking like failures. The balance lies in the middle.

Next Steps

Using your A/B testing tool to 100% capacity isn’t always going to be possible, but using it to get the maximum benefits for your business is something you can strive towards.

The first step, if you haven’t chosen one yet or don’t feel confident with what you currently have, is to find the right tool for your needs. Then, with these 6 strategies, it’s all the way up from there.

Written By

Uwemedimo Usa

Edited By

Carmen Apostu