A/B Testing: A Guide You’ll Want to Bookmark

A/B testing is a MUST for any growth-driven organization. It doesn’t matter what industry you are in. If you have an online property as one or more of your customers’ touchpoints, you want to make it a well-oiled machine that converts the greatest number of visitors with the least amount of effort.

A/B testing allows you to do this.

A/B testing is part of a larger conversion rate optimization (CRO) strategy, where you collect qualitative and quantitative data to improve actions taken by your visitors across the customer life-cycle with your company.

This A/B testing tutorial will go over everything you need to know about A/B testing to make you a better CRO expert, no matter what your specific role is. This includes:

- Definition and benefits of A/B testing.

- What and how to test.

- How to overcome common A/B testing challenges and mistakes.

Let’s dig in…

What is A/B Testing and How Does A/B Testing Work?

What is A/B Testing?

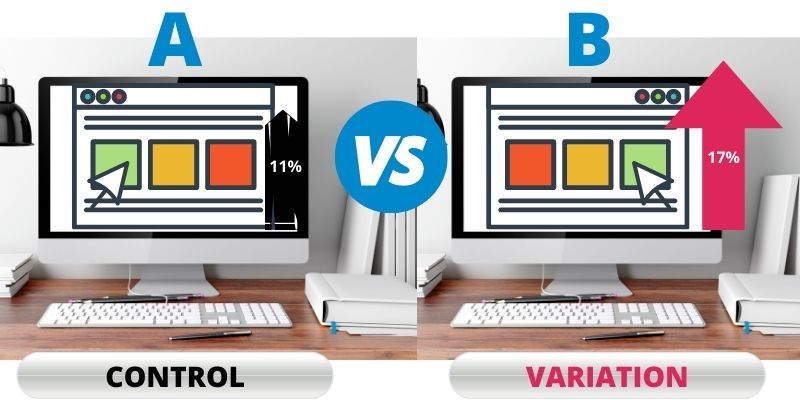

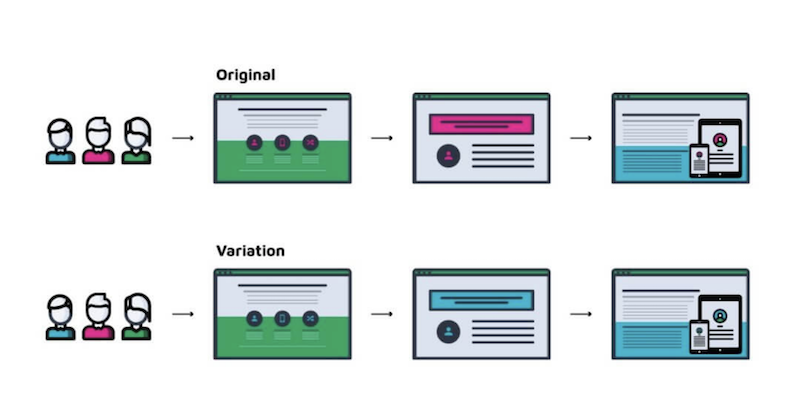

A/B testing is an experiment that compares two or more versions of a specific marketing asset to determine the best performer.

In “A/B”, “A” represents the original version of the element, also known as the control, while “B” refers to the variation meant to challenge the original. Hence, the other name for B: challenger.

You can run this test on your call to action button, messaging, page headlines, or the position of page elements (like forms). It works for any graphic or text element on your website or app.

The reason for A/B testing is to understand what improves performance and what doesn’t. It’s a way to make data-driven decisions in a product and marketing strategy.

Traffic is split at random so that one group sees version A while the other sees version B. Statistical analysis is used to determine the winner of each test.

Once you set up your A/B test, your visitors will see either your control page or a variation of that page.

It is random who sees which page.
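
To make the random split concrete, here is a minimal Python sketch of one common way to do it: hashing a visitor ID so each visitor always lands in the same group. This is an illustration under assumptions, not how any particular testing tool works. The function name `assign_variant`, the visitor IDs, and the experiment name are all hypothetical.

```python
import hashlib

def assign_variant(visitor_id: str, experiment_id: str, variants=("A", "B")) -> str:
    """Deterministically map a visitor to a variant with a roughly even split."""
    # Hash the visitor and experiment together so the same visitor can land
    # in different groups across different experiments.
    key = f"{experiment_id}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Read the first 8 hex characters as an integer and pick a group.
    bucket = int(digest[:8], 16) % len(variants)
    return variants[bucket]

# Hypothetical visitor IDs, for illustration only.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "homepage-headline")] += 1
print(counts)  # the two groups should be close to an even split
```

Because the assignment depends only on the IDs, a returning visitor keeps seeing the same version, which keeps the experience consistent during the test.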

Metrics like the number of conversions, currency transactions for each page, etc, are calculated and tracked.

NOTE: This guide focuses on testing website elements, but the concept of A/B testing can also apply to systems and processes.

Based on the results, you can determine which page performed best and answer the question: Did visitors have positive, negative, or neutral reactions to the changes on the page?

Running many tests compounds the data you can use to decide how to optimize.

A/B testing is one test in your experimentation program.

Before we jump into the details of A/B testing, let’s take a quick look at other types of experiments.

What Are the Different Types of Tests?

A/B testing is one of the most common forms of experimentation. But it is not the only type. It’s important to lay these out, as a testing program runs in unison with the elements of your entire experiment program.

It is like you are a research team, gathering data from many areas and in a variety of ways to get a 360° view.

Let’s review the different tests before we dive deep into the world of A/B testing.

What is A/A Testing?

An A/A test is an A/B test that compares identical versions against each other.

A/A tests are used for quality control, to establish baselines, and to check the statistical calibration of the testing tool used. In this test, A is not the control; rather, the entire test is a control.

Since there are no differences between your two versions, expect your A/B testing tool to report this lack of difference.
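
One way to picture what “calibrated” means is to simulate many A/A tests where both versions share the same true conversion rate. The Python sketch below does that with a standard pooled two-proportion z-test. The 10% conversion rate, the 1,000 visitors per version, and the 5% significance threshold are assumptions chosen for illustration, and your testing tool may use a different statistical method.

```python
import math
import random
from statistics import NormalDist

def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions (or all conversions) in both arms
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def simulate_aa_false_positive_rate(true_rate=0.10, visitors_per_arm=1000,
                                    runs=500, alpha=0.05, seed=42):
    """Share of simulated A/A tests that wrongly report a significant difference."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(runs):
        # Both arms draw from the same true conversion rate.
        conv_a = sum(rng.random() < true_rate for _ in range(visitors_per_arm))
        conv_b = sum(rng.random() < true_rate for _ in range(visitors_per_arm))
        p = two_proportion_p_value(conv_a, visitors_per_arm, conv_b, visitors_per_arm)
        if p < alpha:
            false_positives += 1
    return false_positives / runs

rate = simulate_aa_false_positive_rate()
print(f"Share of A/A runs flagged as significant: {rate:.1%}")
```

Even with identical versions, a small share of runs will look “significant” by chance, roughly in line with the threshold you set. A share far above that threshold in a real A/A test is a signal to check your setup.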

Why and When Do You Run An A/A Test?

One reason to run an A/A test is to determine your baseline metrics like conversions before implementing a test.

This makes the data you collect during testing much more valuable, as you have a benchmark to compare it against.

The second reason, as mentioned above, is to check the accuracy of your A/B testing software.

What is A/B/n Testing?

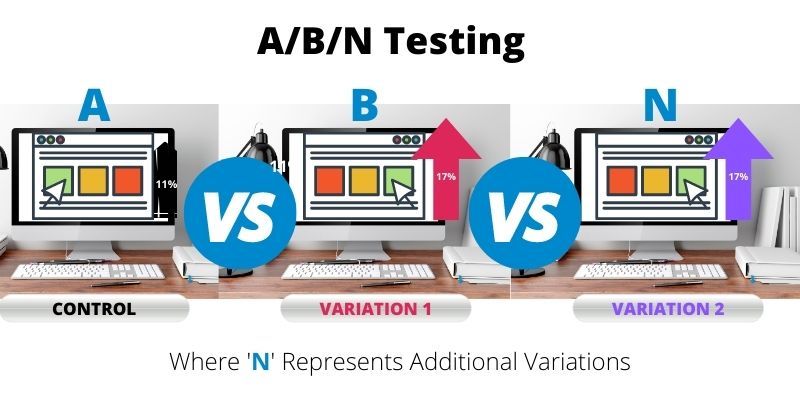

A/B/n tests are another type of A/B test used to test more than two variations against each other. In this case, “n” represents the number of different variants, where “n” is two or greater with no limits (nth).

In an A/B/n test, A is the control, and B through the nth version are the variations.

A/B/n tests are great to use when you have multiple versions of a page you want to test at once. It saves time, and you can get the highest converting page up quickly.

However, remember that for every additional variant added in an A/B/n test, the time to reach statistical significance will increase.
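
The arithmetic behind this is simple enough to sketch in Python. The example assumes traffic is split evenly across all versions and that each version needs the same number of visitors. It ignores any extra statistical correction for comparing several variants at once, which would lengthen the test further. The numbers (8,000 visitors per version, 1,000 eligible visitors a day) are hypothetical.

```python
import math

def estimated_test_days(sample_per_variant: int, num_variants: int, daily_visitors: int) -> int:
    """Days needed for every version (control included) to reach its sample size."""
    total_needed = sample_per_variant * num_variants
    return math.ceil(total_needed / daily_visitors)

# Hypothetical numbers: 8,000 visitors needed per version, 1,000 visitors a day.
for variants in (2, 3, 4, 5):
    days = estimated_test_days(8000, variants, 1000)
    print(f"{variants} versions -> about {days} days")
```

With the same daily traffic, every extra variant adds the same block of days to the test, so it pays to only include versions you have a real reason to test.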

People commonly use the term A/B/n interchangeably with multivariate testing, but they are different. An A/B/n test compares the performance of one change, for instance, the wording of a headline. You would test variations that include different copy for that one element (the headline).

As you will see in the definition for the multivariate test, it is different from an A/B/n test.

A/B Testing vs. Split Testing vs. Multivariate Testing

Although there are fundamental similarities between A/B testing, split testing, and multivariate testing, they have their unique method and use cases.

Multivariate Testing (MVT)

Multivariate testing (MVT) is similar to A/B testing. A/B tests work with one element or page at a time, but multivariate tests do this for many variables at the same time. It is a way to see how a combination of different changes performs when put together.

As an illustration, imagine you were running a multivariate test on your lead gen pop-up to see what combination of headline and CTA converts better. You would create 4 versions of the pop up like this:

Version 1 = Headline A and CTA A

Version 2 = Headline A and CTA B

Version 3 = Headline B and CTA A

Version 4 = Headline B and CTA B

You will run an MVT of all 4 versions at the same time to see what happens.
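
The four versions above are simply every combination of the two headlines and the two CTAs. A short Python sketch shows how that list grows as you add elements; the labels are placeholders taken from the example above.

```python
from itertools import product

headlines = ["Headline A", "Headline B"]
ctas = ["CTA A", "CTA B"]

# Every combination of one headline with one CTA.
versions = list(product(headlines, ctas))
for number, (headline, cta) in enumerate(versions, start=1):
    print(f"Version {number} = {headline} and {cta}")
```

Adding a third element with two options would double the list to 8 versions, which is why multivariate tests need more traffic than a simple A/B test.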

What is Split URL Testing?

Split URL testing is similar to A/B testing. The terms are used interchangeably, but they are not the same.

While A/B tests will pit two versions of an element on a page against each other to see if the challenger improves performance, reduces it, or has no effect, split testing works on a wider scale.

Split testing checks if one totally different version of the page works better than the original. So instead of testing one element, it tests the whole page. For instance, you might want to see if a new lead gen pop-up design brings more email sign-ups than the original one.

Split tests are more complex than front-end A/B tests. They can require some technical know-how (such as how to build a website).

For example, if you are looking to overhaul your home page, split testing is ideal. You can quickly identify which version optimizes conversions.

Benefits of Split Testing

A challenge associated with testing is that websites need a base threshold of visitors to validate a test. However, split tests run well for low-traffic sites.

Another bonus is that it is easy to see the winner: which site got the most conversions?

Multipage Testing

Multipage testing, aka funnel testing, allows you to test a series of pages at one time.

There are two ways to perform a multipage test:

- You can create new variants for each page of your funnel, OR

- You can change specific elements within the funnel and see how it affects your entire funnel.


Now that you understand the various types of tests, time to share the merits of A/B testing.

Why Should You A/B Test?

Building the case for A/B testing is a simple equation of logic: if you don’t test, you don’t know. And guessing in business quickly turns into a losing game.

Your business may see hopeful spikes of success, but they will quickly turn into “Spikes of Nope” if you don’t have a formal way of experimenting, tracking, and replicating what works and what doesn’t.

Even if you guess correctly 50% of the time, which is rare, without data and an understanding of why it worked, your growth will stagnate.

A/B testing lets you put on your data-driven glasses to make decisions steeped in growth and optimization. There is no other system that will give you the data you need to make optimization-based decisions.

It’s not surprising the nickname for A/B testing is:

“Always Be Testing.”


Why A/B test? Here are a few benefits of implementing A/B testing in your business.

17 Benefits of A/B Testing

- Optimize ROI from existing traffic and lower acquisition cost.

- Solve the prospect’s pain points.

- Create/design a conversion-focused website and marketing assets.

- Make proven and tested modifications.

- Reduce Bounce Rate.

- Increase the consumption of content.

- Test new ideas with minimum investment.

- Ask data-driven questions.

- Perpetuate a growth mindset within your organization.

- Take the guesswork out of website optimization.

- Enhance User Experience on your site.

- Learn about your prospects, clients, and your business.

- Test theories and opinions.

- Improve marketing campaigns.

- Decrease ad spend with tested ad elements.

- Validate new features and design elements.

- Optimize across user touchpoints.

This is not an exhaustive list but you can see the many benefits of A/B testing.

So, now that you know the benefits of A/B testing, let’s look at how to perform an A/B test.

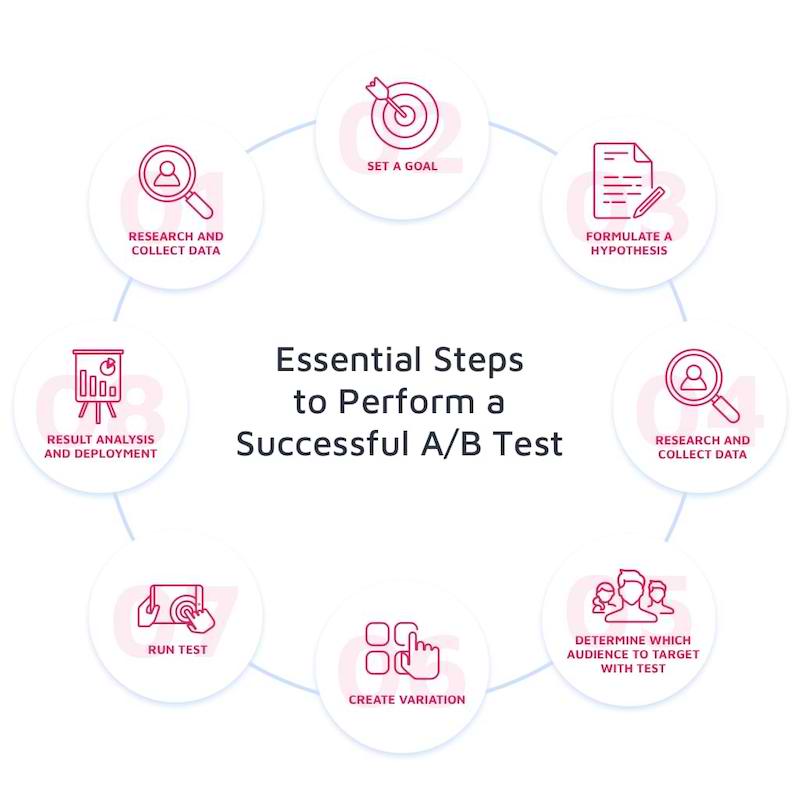

How Do You Perform an A/B Test? The A/B Testing Process

Just as scientists and doctors follow specific protocols, so should you when A/B testing. This is a continuous process of going through each step to refine your marketing by extracting data from your test results.

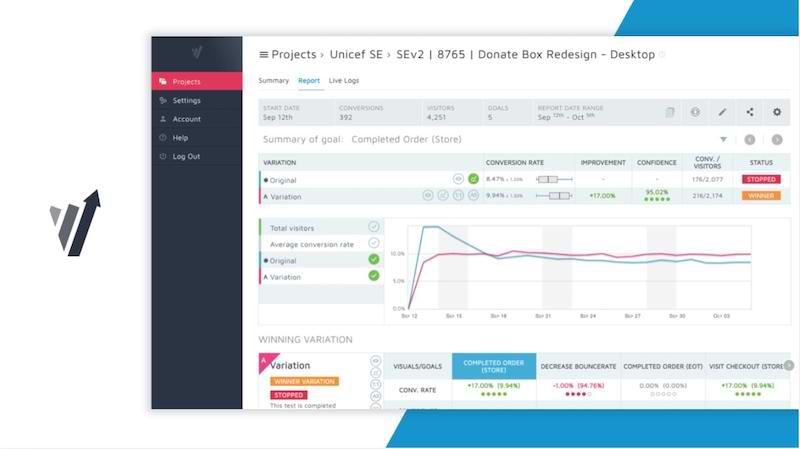

We will go over the general steps that lead to successful A/B testing, using Convert Experiences software as an example where it applies.

Essential Steps to Perform a Successful A/B Test

Step 1: Research and Collect Data

You want to be strategic about what you test. The best way to do this is to investigate your current analytics. How is your site performing? What are your current conversion goals on each page? Are you meeting them? Can they be improved?

Use quantitative and qualitative tools to find and analyze this data.

Qualitative Analysis in A/B Testing

Qualitative analysis in A/B testing is collecting and interpreting non-numerical data to extract information. Qualitative data is usually sentences and natural language descriptions like customer feedback, results from surveys and polls, and session recordings.

This data provides generalized and subjective information for the brainstorming stages of your A/B testing program. It explains the “why” behind your quantitative data and can help you formulate hypotheses in A/B testing.

Questions to Ask Yourself Before You A/B Test

Here are a few additional stimulating questions you can ask during the research process:

- What are your top-performing pages? Can you make them perform even better?

- Where are people leaving your funnel? You can think of this as a hole in your bucket that you want to seal as soon as possible.

- What pages are performing poorly, with high visits but high bounce rates? This may show the page is not meeting searchers’ intent. Can you make changes to get people to stay on the page longer?

- Leverage the 80/20 rule. What minor changes can you make (the 20%) that will cause higher (the 80%) conversions?
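
To find where people leave your funnel, you can compare visitor counts between consecutive steps. The Python sketch below uses made-up step names and counts; in practice you would pull these numbers from your analytics tool.

```python
# Hypothetical funnel counts, for illustration only.
funnel = [
    ("Product page", 10_000),
    ("Add to cart", 3_000),
    ("Checkout", 1_200),
    ("Purchase", 900),
]

def drop_off_rates(steps):
    """Share of visitors lost between each pair of consecutive funnel steps."""
    rates = []
    for (name, count), (next_name, next_count) in zip(steps, steps[1:]):
        rates.append((f"{name} -> {next_name}", 1 - next_count / count))
    return rates

rates = drop_off_rates(funnel)
for transition, rate in rates:
    print(f"{transition}: {rate:.0%} drop-off")

# The transition with the largest drop-off is a natural place to look first.
worst_step, worst_rate = max(rates, key=lambda item: item[1])
print("Biggest leak:", worst_step)
```

The step with the biggest leak is not automatically the best test candidate, but it is a good starting point for your research.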

Quantitative Analysis in A/B Testing

Quantitative analysis in A/B testing involves the collection, cleaning, and interpretation of numerical data into insights. As the name suggests, you’ll be working with quantitative data such as page views, bounce rates, and the number of new users.

This comes in handy when you want to check performance metrics against your KPIs. It is also a key ingredient of statistical analysis in A/B testing.

You can find these opportunities through research. Once you are armed with information, it is time to set a goal.

Step 2: Set a Goal

Define a clear goal. How will you measure the results of your test?

Many goals can be set and they will be unique to your organization. Your goal may be to increase the number of clicks on your CTA button, while another business may want to increase the number of sales, or the number of email sign-ups.

Make sure the goal is specific and clear. Any member of your team should be able to read your goal and understand it.

How to Choose an A/B Testing Goal?

Without a goal, it’s pointless to start A/B testing. You need measurable markers that show how your site is meeting preset objectives; those markers are your A/B testing goal. If you don’t have that, what results are you looking for?

To choose an A/B testing goal that ensures your time and cost investments in experimentation are worth it, follow these steps:

1. Know your business in and out: Figure out what the business is set up for and what the organization as a whole wants to achieve. Usually, this is revenue growth in a for-profit organization.

2. Find the goals that align with the business objectives: If a news outlet wants to make more money, it’ll be interested in engaging more users. Hence, email newsletter sign-ups will be valuable to them. A YouTube channel that wants to get monetized needs 4,000 hours of overall watch time.

3. Identify the KPIs and metrics you need to measure goal achievement: The news outlet will want to look at how many new email newsletter sign-ups it gets and the open rates. The YouTube channel will be concerned about new subscribers and average view duration.

4. Choose the goal type based on the above 3: This could be form submissions, click-through, comments, page views, profile creations, social media shares, etc. See more A/B test goal types here.

Now that you have a clear goal, time to create a hypothesis.

Step 3: Formulate Hypothesis

Your hypothesis expresses your goal and why you believe the change will have a positive impact. It gives your test direction.

Hypothesis Generation in A/B Testing

An A/B testing hypothesis is an explanation for an observation you’ve made from the qualitative or quantitative analysis that you haven’t tested and proven yet. It’s like saying “this is why this is happening” and then testing to see if you’re right or wrong. You can create as many of these for the same observation as you like.

But wait, there’s more that goes into generating a hypothesis for your A/B tests. And if you want to test the right thing and extract useful insights, you need to get the hypothesis correct.

Like in the definition of hypothesis, you start with an observation of carefully collected data, analyze it, and figure out what change can produce a certain outcome.

You can use a free hypothesis-generating tool like Convert’s Hypothesis Generator to frame a credible hypothesis for big learnings and lifts.

If you don’t want to use a hypothesis generator, you can also use a Hypothesis Generation Toolkit that promises to help you identify what to test and to give you “data scientist wings.”

You may come up with multiple hypotheses. In this case, you can prioritize them.

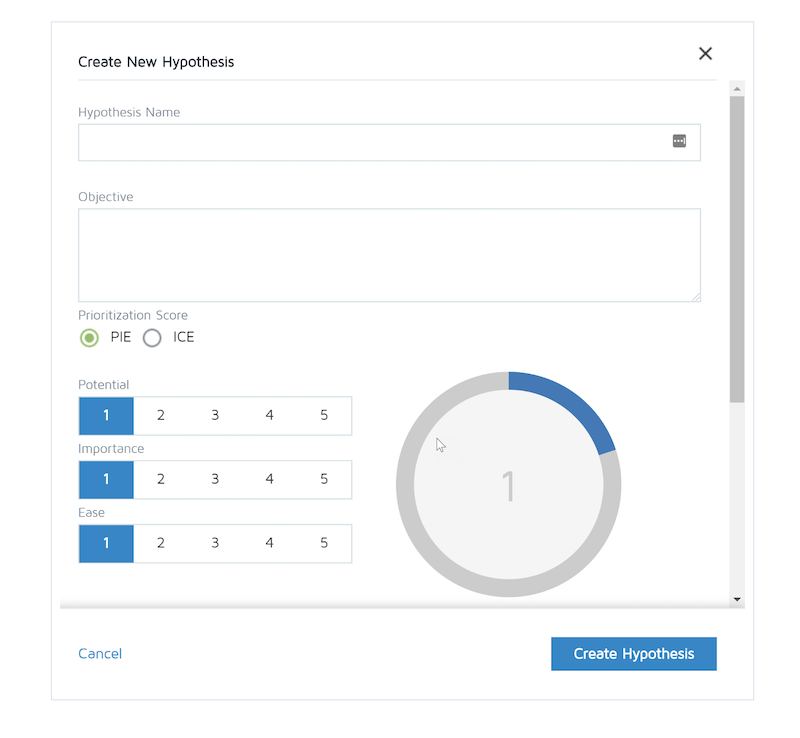

Prioritization in A/B Testing

What is prioritization in A/B testing? When you have multiple hypotheses, you have the choice of which to test and which to save for later. So you have to set their priority.

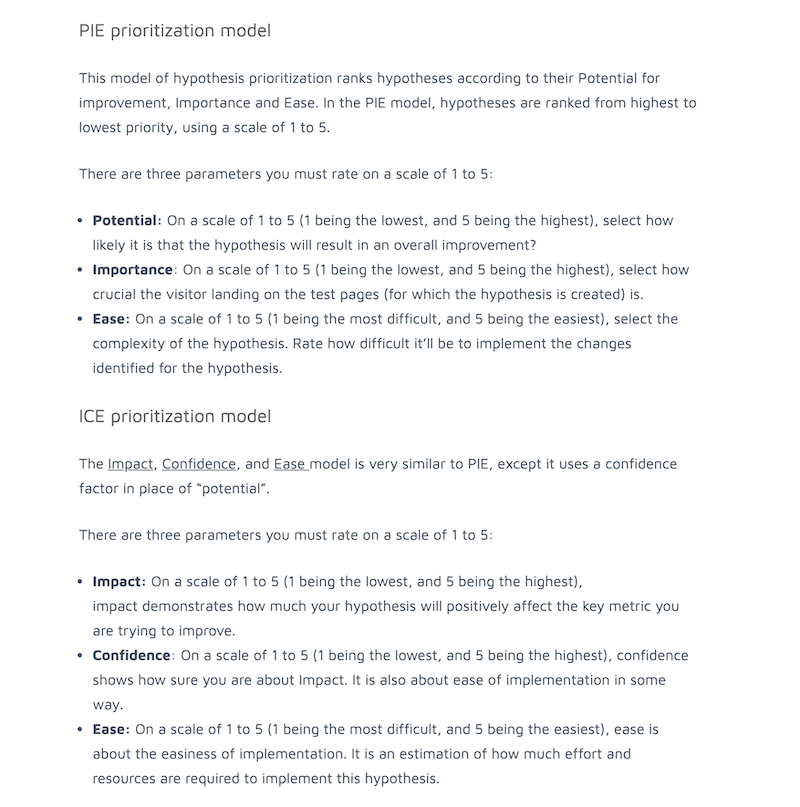

Prioritization in A/B testing uses a scientific method, as opposed to randomly selecting, to choose which hypothesis should be tested first. There are two popular models for this: PIE and ICE.

The PIE model scores your hypothesis based on potential, importance, and ease, while the ICE model scores based on impact, confidence, and ease. A different version of ICE is impact, cost, and effort.

Organizations develop their own internal prioritization models that fall in line with their unique culture. Some score based on more than 3 parameters. For instance, the PXL framework has 10 attributes.

And there’s more: RICE, KANO, and even IE (impact and effort).

You can create your own A/B testing prioritization model that’s unique to you and your organization, depending on what you value.

Or you can use Convert’s prioritization tool (PIE and ICE) within the software. It will help you record how confident you are that a hypothesis will win, the impact it will have, and how easy it will be to implement.

The models are as follows:

Based on your responses and observations, choose what will make the most sense for your next action. Less effort, bigger result.
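
If you want to try a scoring model in a spreadsheet or a script before using a tool, here is a minimal Python sketch of PIE. It assumes each factor is rated from 1 to 10 and that the PIE score is the plain average of the three ratings, which is one common convention and not the only one. The hypotheses and ratings are invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class Hypothesis:
    name: str
    potential: int   # 1-10: how much room for improvement there is
    importance: int  # 1-10: how valuable the affected traffic is
    ease: int        # 1-10: how easy the test is to build and run

    @property
    def pie_score(self) -> float:
        # Assumption: PIE score is the simple average of the three ratings.
        return (self.potential + self.importance + self.ease) / 3

# Hypothetical backlog, for illustration only.
backlog = [
    Hypothesis("Rewrite homepage headline", potential=8, importance=9, ease=7),
    Hypothesis("Shorten signup form", potential=6, importance=7, ease=9),
    Hypothesis("Redesign checkout flow", potential=9, importance=8, ease=3),
]

ranked = sorted(backlog, key=lambda h: h.pie_score, reverse=True)
for h in ranked:
    print(f"{h.pie_score:.1f}  {h.name}")
```

Swapping in ICE only means renaming the three fields to impact, confidence, and ease. The ranking logic stays the same.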

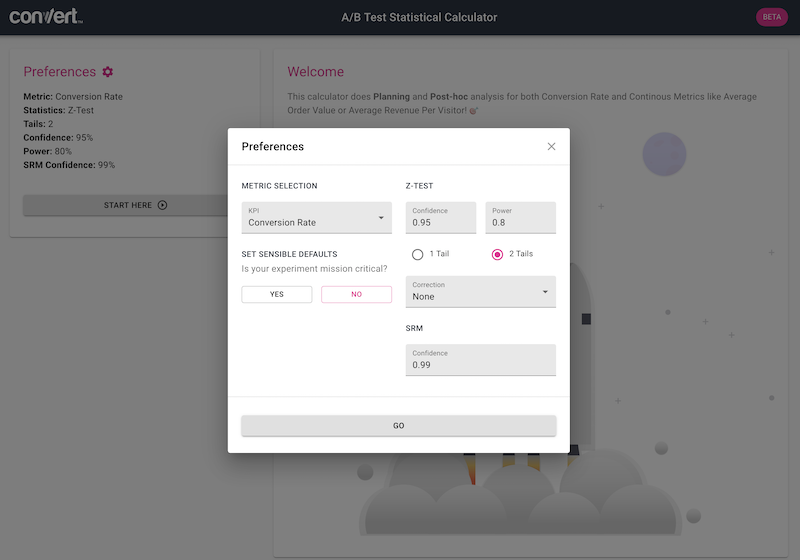

Step 4: Estimate your MDE, Sample Size, Statistical Power, and How Long You Will Run Your Test

Identify the sample size, which is the number of visitors that need to be bucketed into the experiment for accurate results.

Even if your A/B testing software calculates this for you, have a general understanding of these statistics. It will help you spot abnormalities in your tests.

For example, what if you know your test should run for 28 days, but it completed after only 14 days? Or maybe you estimated you need around 20,000 visitors to reach statistical significance, but you see your test is still running with 120,000 visitors. In both instances, you want to take a close look at your test.
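
To get a feel for how these numbers relate, here is a rough Python sketch of a textbook sample size formula for comparing two conversion rates. The MDE (minimum detectable effect) is the smallest lift you want the test to be able to detect, and statistical power is the chance of detecting that lift if it really exists. The 5% baseline rate, the 20% relative MDE, the 95% confidence level, the 80% power, and the 1,000 daily visitors are all assumptions for illustration. Your testing tool may use a different formula and report different numbers.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, relative_mde, alpha=0.05, power=0.80):
    """Approximate visitors needed per version for a two-sided two-proportion test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)  # rate you hope the variation reaches
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)

n = sample_size_per_variant(baseline_rate=0.05, relative_mde=0.20)

# Hypothetical traffic: 1,000 eligible visitors a day, split across two versions.
days = math.ceil(2 * n / 1000)
print(f"Visitors per version: {n}, estimated duration: {days} days")
```

Try lowering the MDE and you will see the required sample size climb quickly, which is why detecting small lifts takes so much more traffic.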

Step 5: Determine Which Audience To Target With Test

Some tools, like Convert Experiences, allow you to segment the audience that will see the test.

Step 6: Create Variations

Creating your variation should be easy since your research revealed what to test, why you are testing it, and how you want to test your hypothesis.

It is even easier using a testing platform like Convert: you simply make the change you prioritized in Step 3 in the editor, or via code. This may be changing a headline, changing the copy in a CTA button, or hiding an element. The possibilities are endless.

Step 7: Run Test

Now you are ready to run your test!

This is the point where your visitors are selected randomly to experience your control or the variation. How they respond is tracked, calculated, and compared.

Step 8: Result Analysis and Deployment

Along with the planning phase, this is probably the most important step in the testing process. Skipping it is like getting a list of the stocks with the greatest yield in the market but then never buying. It is not the information that is powerful, but what you do with it. With A/B testing, it’s what you do after the completion of the test that will translate into higher conversions.

Analyzing your A/B test results will give you the information you need to take your next step. Ultimately your results should lead to a specific action. Remember, research and data are only as good as their applications.

Another thing to remember: there are no bad test results. They are always neutral, giving you the information you need to better understand and connect with your customers.

Luckily, top testing software like Convert makes it easy to implement the winner of your test and lets you know if there is a statistically significant difference between your control and variation.
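
If you are curious what a significance check can look like under the hood, here is a minimal Python sketch of a pooled two-proportion z-test. It is a common textbook method and is not presented as the calculation Convert or any other tool performs. The visitor and conversion counts are invented for illustration.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return the z statistic and two-sided p-value for control (a) vs. variation (b)."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical results: 200 of 5,000 control visitors converted, 250 of 5,000 for the variation.
z, p_value = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
relative_lift = (250 / 5000) / (200 / 5000) - 1

print(f"Relative lift: {relative_lift:.0%}")
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
print("Significant at the 5% level" if p_value < 0.05 else "Not significant at the 5% level")
```

A p-value below your chosen threshold suggests the difference is unlikely to be chance alone, but it says nothing about why the variation won. That part still comes from your research and hypothesis.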

Let these results inform your next test.

How to Read & Share A/B Testing Results?

Sharing your A/B test results in an easily digestible manner triggers a cycle of trust that brings opportunities to test again and again.

More often than not, the stakeholders who want to know what you’re up to when “A/B testing” wouldn’t be familiar with the concept. How do you communicate your ideas and findings in a way that makes sense to them?

You need to understand your audience and prepare a presentation targeted directly at them. Don’t fall into the trap of sharing screenshots of data. You have to put meaningful interpretations right next to this data.

Ensure you address the “why” of the tests, their impact, and recommended next steps. Your A/B testing presentation should include an overview, details, outcome, insights, and impact.

Besides a presentation, you can also do this with a document, infographic, or a combination of all of them. Keep it simple and engaging by cutting out fluff and unnecessary numbers and talking about what matters to your audience.

But what if you don’t understand the results of your A/B test? Read this article to make sense of your A/B testing results.

Now that you know the theory of testing, and how to set up an A/B test, let’s look at the various elements that you can test.

What Can You A/B Test?

The short answer would be, TEST EVERYTHING. But doing that wouldn’t be strategic or a good use of your resources.

A/B Testing Examples

Here are some A/B testing examples to inspire yours:

- A CRO agency designed an A/B test to find out why their client’s eCommerce sites had high cart abandonment. Then, they designed a challenger that corrected those problems and ran the test with Convert Experiences. They found a variation that hit a 26% increase in revenue per user.

- BestSelf Co had a huge bounce rate on their product landing page, so they hired SplitBase to figure out how it can be fixed. After using HotJar to study user behavior, they found the headline could be the problem. So they created two variations that beat the original and chose the winner with 27% more conversions.

- InsightWhale, a CRO agency, worked on the form on a travel company’s deal page that, in spite of huge traffic, wasn’t getting any attention. They used Google Analytics to understand the audience and Convert to implement two solutions to the problem in a test. They found one that added 26% more conversions.

These are just a few examples, but you can do A/B tests on your website and apps to fix areas where you’re losing customers. Find the problem, suggest a solution, and test that solution before implementing.

Here is a list of the most common elements that move the dial in a positive direction for your business if you get them right.

A/B Testing Copy: Headlines, Subheads, and Body Copy

Take away all the fancy images, colors, backgrounds, animations, videos, etc, and you are left with plain copy.

Boring?

Maybe. But guess what?

Copy sells. Copy converts!

Imagine you visit a website that has nothing but buttons and photos, with not an ounce of text on it. It would be almost impossible to share your message, let alone convert a visitor.

Now think about the opposite scenario, a no-frills website with only text, no colors, videos, or images. Could you make a sale? Could you get your visitors to take action?

Of course!

While the site would be simple and maybe aesthetically lacking, using the right words could make the difference.

There are two main types of copy you can test. They are:

- Headlines and sub-headlines

- Body

A/B Testing Headlines

Great copywriters spend hours crafting the perfect headlines. You will not make one conversion if you cannot gain your visitors’ attention.

Imagine your headline is like the window of a brick-and-mortar storefront business. Your window treatment has the power to attract or repel. In the virtual space, your headline does the same thing.

Marketers have found that changing a headline can single-handedly increase conversions.

For headline success, keep it concise, and be sure to tell your visitor what’s in it for them if they click and read more.

Use A/B testing to test the actual words, tone, font, size, etc.

A/B Testing Body Copy

Once you entice your visitor to click with your headline, you will need to hold their attention and give them what you promised. This is the job of your body copy.

Every line of copy should encourage the visitor to keep reading or to act on your call to action.

Even if your body copy is selling a product, offer value to every reader, including those who don’t buy.

You can A/B test copy format, style, emotional tone, ease of reading, etc.

A/B Testing Content Depth

In addition to actual words that make up the content, the length of content also plays into conversions, and therefore, can and should be tested.

As a rule of thumb, write as much copy as you need to move your prospect through the buying process, but not a word more.

Approach length not as a standard word count, but as, “what points do I have to make to satisfy the prospect and move them to action? Can I say it more concisely without compromising my message?”

When you A/B test content depth you are looking at, “What happens if I add or delete copy (change length)? Does it increase or decrease conversions?”

A/B Testing Design and Layout

While the copy is important, the form does matter.

Have you ever walked into someone’s house or a space that had a weird layout? Didn’t it make you feel strange? In some cases, you probably wanted to turn right around and leave.

Well, the same thing happens with your website visitors.

It is this element of layout that contributes to how long people stay on your site and whether they leave without taking any action (your bounce rate).

So A/B test different designs. This may include colors, element placement, overall style and themes, ease of flow (does the page move logically), and types of engagement elements. This may also include removing elements from the page.

Design and layout are crucial for homepages, landing pages, and product pages: pages where you want prospects to take massive actions. This may include filling out forms, which can also be A/B tested.

A/B Testing Forms

You want your visitors to give you information. The way you do this online is via forms.

It may not seem intuitive, but changes in the way you collect information from your prospects can change the way they respond. Changing one field can increase or decrease the percentage of sign-ups by 5%-25%.

Filling out a form may be the main CTA you have on a page. If so, finding the perfect balance between getting all the information you need and not causing too much friction for your prospects can feel like art.

A/B testing different form copy, styles, location, with pop-ups, without pop-up, colors, etc. can give you valuable information about what reduces resistance so your customers fill out and submit the forms on your pages.

You can test:

- Form length (how many fields do you need vs how many your users find comfortable)

- Form design

- Form headlines

- Form position on the page

- Submit button color, text/copy, and font size

- Required fields (are you preventing users from submitting unless they provide information they don’t want to?)

- Trust indicators (GDPR compliance, testimonials, past clients, etc.)

When you A/B test your forms you can unravel opportunities to understand your audience and improve conversions. See how you can integrate Convert Experiences with Zuko to test the forms on your websites.

A/B Testing CTA

Determining what Call-to-Action your prospects and visitors respond to the most can single-handedly increase your conversion rates. This is why you have done so much work building a website and testing, right? You want your visitors to act.

People commonly A/B test colors of CTA buttons, that’s a start, but don’t stop there. You can also test the copy inside the button, placement, and size.

Be relentless with your testing of CTAs until they are 100% dialed in. Then, test those.

A/B Testing Navigation

Navigation is another element associated with the flow of your webpage. Because navigation menus are commonplace requirements, their importance can sometimes be overlooked. However, your navigation is tied to the UX of your site.

Make sure your navigation is clear, structured, easy to follow, and intuitive. If I am looking for product pricing I don’t expect it to be under the testimonials tab.

While expert website designers suggest placing navigation bars in standard locations, like horizontally across the top of your page, you can A/B test different placements. You can also test different navigation copy. For example, changing ‘Testimonials’ to ‘What People are Saying.’

A/B Testing Social Proof

Prospects are naturally skeptical of the accolades you give your business. They are more likely to trust their peers, people who they relate to and identify with. This is why social proof in the form of reviews and customer testimonials is important for conversion rate.

A/B testing your social proof can help you determine where it is best placed on your page, which objection it should address, what layout contributes to better conversion (with a picture or without, title, location, first name, or first and last name). You can test many variations.

A/B Testing Images and Videos

Images, videos, and audio add engagement elements to a webpage. They each can be A/B tested for optimization.

A/B Testing Images

Images can convey messages in seconds and reiterate ideas simply. This is why they are so powerful.

Imagine you visit an ecommerce store. What would your experience be without product images?

Testing images is as simple as trying various images. Don’t stop there, be creative. Test themes of images, like color palettes, with people, without people, copy of captions, etc.

A/B Testing Videos

Video adds value. But only when it’s watched. Just like your headline, you want to make sure the thumbnail is attention-catching.

Besides A/B testing the thumbnail of a video, you can test various videos. Which one gets played most often? Watched the longest?

A/B Testing Product Descriptions

For ecommerce websites, product descriptions have the tough job of replacing “touch-it, feel-it, taste-it, try-it-on” merchandise and the help of a salesperson.

Optimizing this copy could increase your sales and conversions, sometimes substantially.

A/B Testing Landing Pages

You create landing pages for one purpose – to convert visitors. So it makes perfect sense to A/B test them to discover opportunities for greater conversions.

A/B Testing Product Pages

Your product page has various elements on it like images, videos, CTA, headline, product descriptions, social proofs, and other page copy. All these are meant to work hand-in-hand to drive conversions. But what if they’re performing poorly or not performing at their best?

You can make changes to improve the results from the page. And you can only do that when you’ve tested that change to be an actual improvement and not the opposite.

A Dutch retailer working with Mintminds ran a test on their product page that optimized the product carousel and unveiled impressive award-winning results.

A/B Testing Value Propositions

Did you know you can also A/B test your value propositions? Not all of them work the same, especially for your specific brand and audience combination.

Remember the second example we mentioned above? That had to do with the value proposition in the headline of a product page that increased the conversion rate by 27%.

When SplitBase was called in to figure out why BestSelf Co’s product page was getting a high bounce rate, they went straight to understanding why. Using heatmaps, they found people didn’t get past the fold.

They also ran polls and surveys to get more insight and found people didn’t understand the benefit offered.

So they hypothesized that fixing the copy in the headline to include the benefit of the product would improve conversions. One variation gave them that impressive conversion rate.

Have you nailed your value proposition yet? Maybe you should find opportunities to improve it.

Now you know how to set up your A/B test, the elements you can test, and the benefits of testing to your optimization. But what if your company doesn’t have a formal experimentation program?

A/B Testing on Social Media

A/B testing on social media means running a control post A against a variation post B to determine how they perform. Depending on the outcome of the test, you’ll decide on which format to go with on your next social media campaign or content calendar.

When A/B testing two social media posts, you have to ensure your analytics are properly set up. Major platforms like Facebook and Twitter have built-in analytics. But you can also use Google Analytics.

Come up with different versions of your post like one with an image vs another with a video. Use your experiments to see which performs better according to your KPIs. Try more A/B testing examples for social media.

A/B Testing on Email: Best Practices

You’ve probably heard that for every $1 you spend on email marketing, you get $42 back. That’s a huge ROI. But only possible if you’re maximizing the conversions from your emails — which occurs when you’re A/B testing emails.

Just like with landing pages, there are elements in your emails that have a direct influence on conversion. And if you want to optimize this, you have to do it the right way.

For instance, how large does an email list have to be for A/B testing? It is best to start with a list of at least 1,000 subscribers. Other email A/B testing best practices are:

- Limit the sample size if you want to test extreme changes

- Test CTA, headline, body text, images, design, personalization, subject line, etc.

- Decide on your KPI before you start (open rate, click-through rate, conversion rate)

- If you’re doing it manually, test versions A and B at the same time to get accurate results

- Let go of biases or gut instincts when collecting your data, and

- Test only one variable at a time.
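If you’re splitting your list manually, the random assignment can be sketched in a few lines of Python. This is a minimal illustration, not what any particular email platform does: the function name `split_subscribers`, the fixed seed, and the sample addresses are all assumptions made for the example.

```python
import random


def split_subscribers(emails, seed=42):
    """Shuffle a copy of the list and split it into two near-equal groups.

    The seed is a hypothetical choice that makes the split reproducible.
    """
    shuffled = list(emails)
    random.Random(seed).shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]


# Hypothetical list of 1,000 subscribers
subscribers = [f"user{i}@example.com" for i in range(1000)]
group_a, group_b = split_subscribers(subscribers)
print(len(group_a), len(group_b))  # 500 500
```

Send version A to the first group and version B to the second at the same time, so that send time does not become a second variable.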

Starting Your A/B Testing Program

Best case scenario, you already have a robust experimentation program, with full C-suite buy-in and the support of peers across departments.

But if you don’t, don’t let that stop you from testing.

However, you are going to want to formalize your testing program. You will need:

- Designated A/B testing specialist(s)

- Company and peer support

- A/B testing software

- An understanding of the statistics involved in A/B testing

- A list of areas to test. Start with pages that already convert well and have a good amount of visitors. Then, test all the elements mentioned in the last section.

Hiring A/B Testing Talent

If you want to hire an A/B testing specialist, you have 3 options:

- Get a freelancer,

- Hire a conversion rate optimization (CRO) agency, or

- Hire a conversion rate optimizer to join your company

While the first two options have pretty straightforward approaches, the last one requires some recruitment tact. You’d want to know the qualities to look for, what interview questions to ask, and how to prepare your organization to receive the talent you decide to hire.

Your ideal A/B testing talent should be:

Curious: A star optimizer always wants to know why things are the way they are. They’re always looking for answers and have a scientific approach to searching for those answers.

Data-driven and analytical: In search of answers, they are not satisfied with opinions and hearsay. They will tear a problem into atoms and search for its data-backed solutions.

Empathetic: They are eager to understand people and learn why people do what they do so that they can communicate with people in the best way possible.

Creative: They can discover innovative solutions to problems and confidently carve a fresh path to get things done.

Always eager to learn: A great A/B tester is not stuck in their ways. They’re willing to let their beliefs be challenged and welcome new ideas.

Where can you search for such talent? On LinkedIn, you’ll find them under the title “CRO specialist”, “Conversion rate optimizer”, or “CRO expert”. You can also check AngelList and Growth Talent Network.

A/B Testing Interview Questions

Now, what A/B testing interview questions would you ask to see if a candidate is the right fit for your team and can deliver great results?

The goal with these questions is to learn:

- how conversant the candidate is with conversion rate optimization

- their personal experience with it

- their unique testing philosophy

- their work process, and

- whether they have a sufficient understanding of the limitations of experimentation.

Questions to see how good they are with CRO:

- How important do you think qualitative and quantitative data is to A/B testing?

- When should you use multivariate tests over A/B tests?

- How do you optimize a low-traffic site?

- What do you think most CROs aren’t doing right with A/B testing?

- Do you think A/B tests on a web page affect search engine results?

Questions to learn their personal experience with testing:

- What was your biggest challenge with A/B testing?

- What is the most surprising thing you’ve learned about customer behavior?

- What is your favorite test win (and test failure)? And why?

- When should you move from free A/B testing tools to paid ones?

Questions to understand their testing philosophy:

- What are the limitations of A/B testing?

- Why do you think most sites don’t convert?

- How would you go about evangelizing a culture of experimentation in our organization?

- What’s your take on audience segmentation and how it affects testing accuracy?

Questions to see how they work:

- What is your process for coming up with test ideas?

- How do you prioritize your list of test ideas?

- What tools do you prefer for running A/B tests?

- How would you start implementing experimentation to optimize our website?

- What would you do if an executive suggests A/B testing when you think it isn’t necessary?

- How do you get baseline metrics of a page before testing?

Best Practices for Picking and Using A/B Testing Tools

Getting an A/B testing tool is not the entire journey. You won’t start getting insights to unravel insane conversion rates immediately.

There are limitations you have to navigate. And here’s how to go about that:

- Educate your team on the best process and strategy. Without the right hands on your tools, you won’t get the return on investment you’re looking for. You may even end up implementing faulty insights.

- A/B testing tools come with a lot of features and integrations. Be sure to check out which ones appeal to your unique use case and take full advantage of them.

- Your tool can affect your results significantly. Check that it doesn’t hurt your SEO or cause flicker, that it takes privacy seriously, and that it has collision prevention.

- Learn about the statistical model your tool is using. Know how it calculates the results you’re getting. This is essential for understanding how to make decisions with what you get.

- Encourage an experimentation culture in your organization and make it easy for people to start using the tools.

- Ensure you’re measuring the right output — bear in mind that A/B tests are meant to provide you with a measure of certainty about your hypothesis.

Top A/B Testing Tools & Prices

There are free, open-source, freemium, and paid A/B testing tools out there. They are not all created equal. The perfect fit for your business is something you have to put some research into, so your A/B testing tool doesn’t hurt your business — because it can.

Don’t fret, the search for your ideal tool doesn’t have to be difficult. Use this article to learn how to buy an A/B testing tool that’s right for you.

For free A/B testing software, you can check out the most popular one: Google Optimize. You can also look at Nelio and Vanity.

But these have their limitations. When you need more testing power, go for paid tools.

Here are some of the top tools and their prices:

- Convert Experiences – Starts at $699/month, $199 for every 100k visitors after that

- Convertize – Starts at $49/month for 20k visitors, $199 per 100k visitors

- Optimizely – Custom pricing, but reportedly starts at $36,000 per year

- Sitespect – You’ll have to talk to their sales team first for a price

Don’t judge a tool by the price alone. Check out this pricing guide of 50+ A/B testing tools to fully understand where your investment is going.

How Convert’s A/B Testing Software Can Help You Refine Your Testing Program and Boost Conversions

The best way to experience our tool is by playing around with it. Check it out for free so you can see the full functionality of a powerful A/B testing tool used by Jabra, Sony and Unicef.

Convert works well for non-coders and also offers a robust code editor for coders and developers.

The best way to check out a tool is by exploring it. Get full access to Convert for 15 days for free. A/B test, check out all our integrations, and see why so many optimizers choose Convert Experiences as an Optimizely alternative.


A/B Testing with Google Analytics & Google Optimize

The Google Optimize and Google Analytics combo for A/B testing is great when starting out. You get to understand and apply the basics of optimization at no cost.

Google Optimize itself is a free A/B testing tool from Google that used to be an extension of Google Analytics called “Content Experiments”. You can run A/B, A/B/n, multivariate, and split URL testing; create variants of your website with an editor, and even edit your source code.

Then you can integrate it with Google Analytics to track data and get insights.

But since it is a free tool, there are limitations such as:

- Can only run 5 tests at a time

- Cannot set more than 3 goals

- Only tests websites, not apps

- No tests for longer than 90 days, etc

When you want to overcome these limitations to take your testing campaigns to the next level, you’ll have to opt for the paid options.

Culture of Experimentation: How to Build One?

Many brands, big and small, have seen significant growth in conversions and revenue when they champion data-driven decisions, and you’d like to see yours do the same. If you’d like to create a culture of experimentation in your organization, get ready to influence an attitude change.

The new attitude has to be one where

- Data is accessible

- Curiosity is the norm

- The leadership backs testing

- Opinions aren’t valued so highly

- Anyone is free to propose and launch tests, and

- Most decisions have to be proven before being implemented

If you’re the only one who sees the value of testing in your company, this may be an uphill task. But when you start incorporating experimentation in your own decisions, share your wins in a digestible manner, and invite others to participate, it smooths the path.

The sections that follow will provide more in-depth information on A/B testing to move you from beginner to expert.

What is A/B Testing in Marketing?

A/B testing has a huge role to play in marketing. There is an opportunity to improve the efficiency of almost every customer-facing marketing asset. And A/B testing is a truth-seeking tool in a marketer’s hands to figure out how.

For instance, in user/customer acquisition…

A/B Testing vs. Acquisition

When it comes to acquisition, how can you make your marketing funnel more efficient in bringing new users and customers from brand awareness down to a purchasing decision, app installation, demo request, etc.?

How do you know what is responsible for poor performance or how you can improve your current conversion rate?

Test it. Test to learn what your target audience responds to.

Sometimes more traffic is not the answer. Think of bringing paid traffic to a broken funnel. That’s money down the drain.

Even the ads you put out need a high quality score if you want to reduce your CPC or CPA. A funnel that repels more traffic than it should ruins the quality score of paid ads. A high-converting landing page is essential, and to get that, you need A/B testing.

And there’s more to A/B testing…

Surprise Benefits of A/B Testing

- A/B testing is about buying data: 75% of A/B tests present a loser. But that doesn’t mean there isn’t an opportunity to learn from your A/B test results regardless of the outcome.

Your results can help you learn more about your target audience to provide a better marketing experience that boosts conversion rate. Getting a winner shouldn’t be the goal of A/B testing; getting information should.

- A/B testing gives a deeper understanding of the behavior of customers: There are visitor sentiments you cannot figure out any other way except through their behavior. A/B tests give you that priceless insight through sentiment analysis of visitors in segments.

That no-brainer change you intend to make may not sit well with your audience. You’ll find out when you test it before pushing it live.

Can removing the navigation on your landing page improve conversion rate? Well, one person found users felt trapped when they did that and it increased their bounce rate instead.

- A/B testing gives ideas to improve ops – like customer success: You may think that your instincts, a marketing guru, or even your customers are the best place to get info that works for digital marketing. But are they really?

Data-driven decisions have repeatedly beaten all 3 of those. Sometimes even the smartest changes perform worse than the original, ugly outdated landing pages convert better than a “perfect” page, and old marketing beats modern best practices. Test to be sure.

A/B Testing & Web Development

Web development—just like any other product development—without tested and proven changes, is just guesswork.

Your investment is too precious for guessing, right? Of course. That’s why there should be a strong link between your web development and A/B testing.

For anything from fixing bugs to revamping your entire website, it’s best to make moves backed by data so you don’t hurt your business. Remember that even best practices may not hold up next to an idea that seems dumb.

And since you’re unable to read the mind of your customers, you cannot be sure you’re doing the right things. You may make damaging changes to your website instead of increasing the ROI of your web development investments. The best way to find out is to run experiments. Make conversion rate optimization an integral part of your web development.

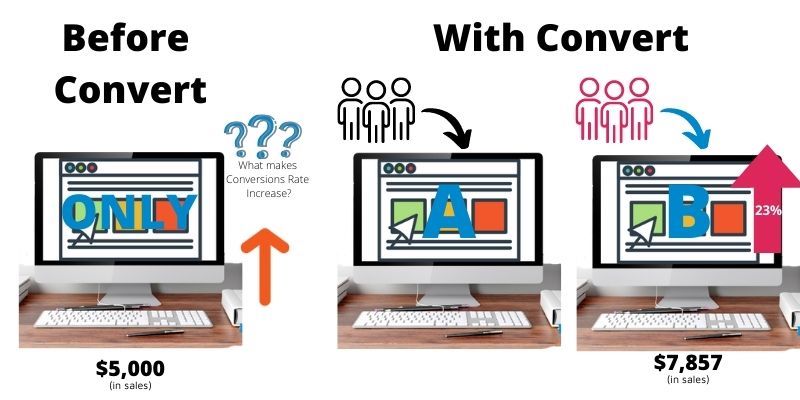

The ROI of A/B Testing

No doubt, A/B tests can lead to higher conversion rates and revenue growth. But how can you accurately calculate the ROI of an A/B test? How much revenue gain can you attribute to an experiment you carried out?

This path is slippery. It is difficult to accurately attribute a certain gain to an A/B test. That would be a gross oversimplification of reality. The point of A/B testing isn’t revenue. A/B tests provide priceless insights that inform smarter decisions. So, it is like buying data.

That said, the closest we can get to figuring out A/B testing ROI are these metrics:

- Revenue per session (RPS)

- Average sales lift

- Cost of running A/B test

- Multiplier for traffic split

- Value of the variant change

- Value gained from testing duration

- Forecast incremental revenue if variant goes live

- Overall A/B testing program value

These will help you provide the numbers to make the case for experimentation and get executive buy-in.
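To make a few of these metrics concrete, here is a rough sketch of how revenue per session, the cost of a test, and forecast incremental revenue might fit together. It is a simplification under stated assumptions, not a formula from any specific tool: the function names, the 12-month horizon, and every number in the example are hypothetical, and it assumes the lift measured during the test holds once the variant goes live.

```python
def forecast_incremental_revenue(monthly_sessions, control_rps, variant_rps, months=12):
    """Forecast extra revenue if the variant goes live.

    Assumes (hypothetically) that the per-session lift seen in the test
    stays constant over the whole forecast horizon.
    """
    lift_per_session = variant_rps - control_rps
    return monthly_sessions * lift_per_session * months


def program_roi(incremental_revenue, test_cost):
    """Return ROI as a ratio: net gain divided by what the test cost."""
    return (incremental_revenue - test_cost) / test_cost


# Hypothetical inputs: 50,000 sessions a month, RPS rising from $2.00 to $2.10,
# and a test that cost $5,000 in tooling and staff time.
gain = forecast_incremental_revenue(50_000, control_rps=2.00, variant_rps=2.10)
print(f"Forecast incremental revenue: ${gain:,.0f}")  # $60,000
print(f"ROI: {program_roi(gain, test_cost=5_000):.0%}")  # 1100%
```

Treat the output as a conversation starter with executives rather than a precise attribution, for the reasons given above.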

A/B Testing Success in FAANG Companies

Facebook, Amazon, Apple, Netflix, and Google are at the forefront of ultra-efficient marketing. That’s why we love to learn from them. Microsoft and LinkedIn are doing amazing work too.

There’s a lot to learn about A/B testing from these tech giants. Netflix, Microsoft, and LinkedIn are very open about their experimentation strategy and the successes they’ve garnered over the years. Google and Amazon are renowned data-driven companies. And if you’ve been around for a while, you’ll be familiar with the various tests at Facebook.

Apple isn’t very open about its testing culture, although they support mobile app A/B testing in Apple TestFlight.

Another noteworthy mention is Booking.com, which has a strong experimentation culture and reportedly runs in excess of 1,000 concurrent experiments at any given moment.

The Netflix experimentation platform is a popular topic on The Netflix Tech Blog where they’ve discussed how A/B tests helped them:

- Completely redesign the Netflix UI

- Provide personalized homepages, and

- Select the best artwork for videos

They tap into A/B test-generated data to deliver “intuitive, fun, and meaningful” experiences to their over 81.5 million members.

LinkedIn has a culture of experimentation. They make it an important part of their development process — everything from homepage redesign to algorithm changes in the back-end.

Just like LinkedIn wants the best ROI based on data-proven product development strategies, Amazon uses A/B tests to deliver optimized listings to users. And it boosts sales.

Our success at Amazon is a function of how many experiments we do per year, per month, per week, per day.

— Jeff Bezos, Founder and Executive Chairman of Amazon

Across all their products, including Search, Android, YouTube, Gmail, and many more, Google makes data-driven decisions. Google is already a digital behemoth, but it isn’t relaxing. There’s almost no change that affects user experience that isn’t evaluated via experimentation at Google.

In 2000, Google ran their first A/B test to figure out how many results they should show per page. The test failed because the challenger loaded slower than the original. But they learned something from it: 1/10th of a second could make or break user satisfaction. In 2011, they ran more than 7000 A/B tests on their search algorithm.

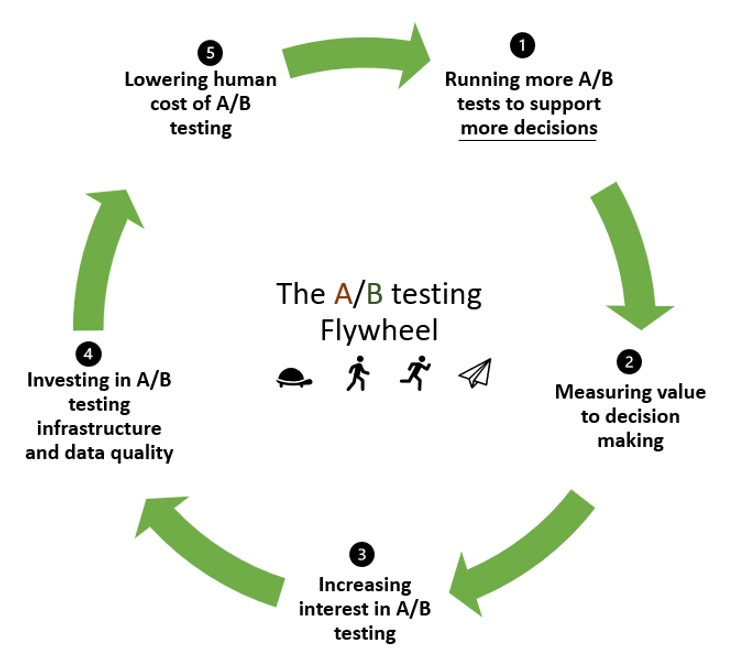

Microsoft’s Bing isn’t left out, running up to 300 experiments per day. They even say “each user falls into one of 30 billion possible experiment combinations.” The testing culture at Microsoft is so strong that they developed their own A/B testing Flywheel:

A/B Testing Flywheel (Source: Microsoft)

The idea is that an A/B testing program should kick-start a loop that consistently feeds itself to inspire one test after another, all to improve customer experience. This is one of the reasons Bing has experienced growth, such as revenue per search increasing by 10% to 25% a year.

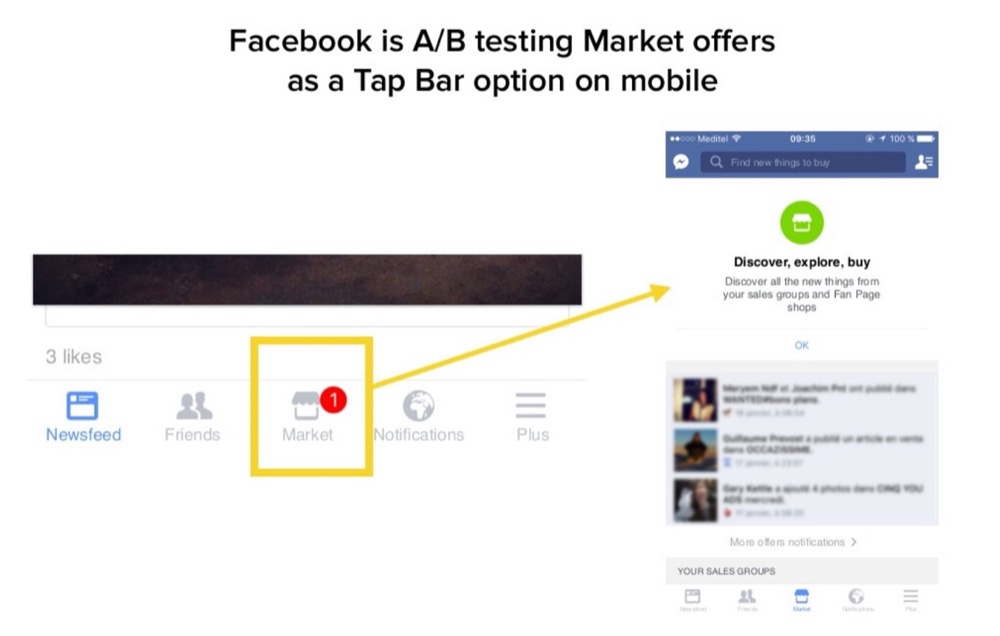

Facebook has used A/B tests in many capacities in the past. One example was to fix a common problem in huge products: feature discoverability. They tested placing the market icon in a more visible location.

Source: Maxime Braud via SlideShare

Fun fact: when Microsoft asked their users what they wanted added to Office, they found 90% of the requested features were already there.

― Des Traynor, Co-Founder of Intercom

The Future of A/B Testing

It is better to learn about the future of A/B testing from those who are at the forefront of the industry, the hands-on experts and professional conversion rate optimizers.

Here’s what they have to say:

Over the past few years, CRO has been undergoing some significant changes. Since CRO extensively becomes a more complex process, I think that in five years, it will face significant changes in personalization.

People want to know what data you are collecting and why. Privacy and personalization will be everything since people want to feel like you understand them and that you’re addressing their needs.

— Richard Garvey, CEO of Different SEO

While in the past CRO involved a lot of experimentation of creating different landing pages in order to see which best drives desired actions, such a trial and error approach is inefficient and time-consuming.

Over the next 5 years, AI will better be able to track cursor movement on webpages as well as iris tracking in testing. We will be able to garner a plethora of data through focus group testing on this which can revolutionize the way that landing pages are designed.

— Michael Austin, Marketing Manager at G6 Consulting Inc

With Google announcing that third party cookies will soon be a thing of the past, marketers have to find different ways to personalize the browsing experience and collect visitors’ data.

I think that the future will bring more rights to the visitors and that collecting any data will become increasingly difficult.

We’ll have to find new ways to capture leads and it’s going to become a race for gating the best content so visitors can leave their precious information.

— Petra Odak, Chief Marketing Officer at Better Proposals

CRO is beginning to change. And will very apparently change over the next 5 years, in two fundamental ways. One, CRO is becoming more empirical and data-sophisticated. Two, it’s now more commonplace for companies to utilize experimentation far beyond testing website UI/UX changes (to the point that there’s been some discussion around picking a new term for the practice, like “customer experience optimization” or “customer value optimization”).

In many ways, this is just a reflection of what the FAANG (Facebook, Amazon, Apple, Netflix, and Google) companies have known and practiced for the last decade. Having realized the imperative of testing everything across the business and running as many experiments as technically feasible, FAANG companies have hired scientists to develop what are quite literally fundamental advancements to the field of statistics. These advancements allow them to run more experiments, run experiments faster, and measure increasingly complex outcomes (e.g. Bing measuring ‘did a user successfully find what they were searching for?’, or Netflix measuring how different approaches to loading videos on slow connections impacts user frustration).

— Ryan Lucht, Senior Growth Strategist at CRO Metrics

Top A/B Testing Considerations for CMOs

At the helm of marketing affairs, you’re probably pressured to produce results that drive sales. For you, conversion is the goal — to generate more leads for the sales team to work with.

When it comes to implementing A/B testing for that purpose, what should you be thinking about?

We’ve outlined 4 of the most popular A/B testing marketing considerations:

1. Is A/B Testing Needed?

A/B testing isn’t always necessary to boost your conversion rates. For instance, if your product needs a complete revamp, that’s beyond the converting powers of A/B testing. The right product has to be in place first of all.

2. Are You Willing to Do What It Takes to Run a Testing Program?

A testing program requires more than one test. A proper strategy is to use data from one test to inspire the next one. One-off tests rarely yield spectacular results.

3. Data Is King in Testing

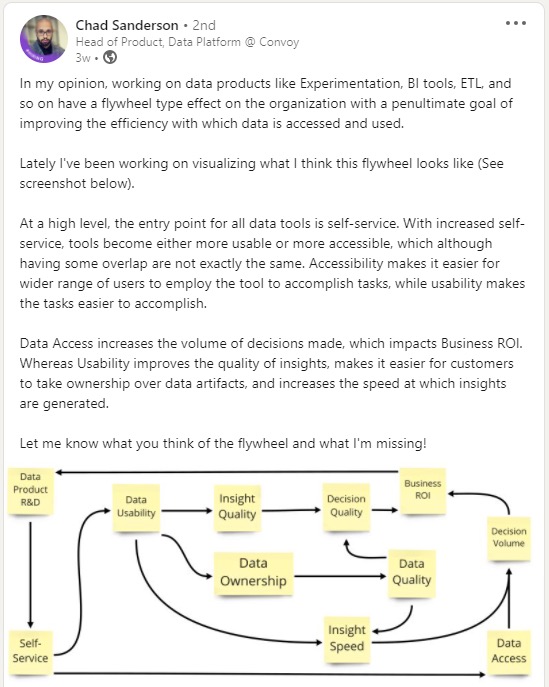

You must have solid data access and processing capability in place where the right data is available to anyone who wishes to ideate for tests. Chad Sanderson envisioned a flywheel that outlines the usability and accessibility of data and how it encourages more data-driven decisions.

Without the right data culture, your hypotheses won’t be tenable. And the data from A/B tests won’t drive further improvements either.

4. The ROI of Testing

It takes time for the results of A/B testing to show. And as already discussed in the section on ROI, it isn’t necessary for the ROI to be a revenue contribution, directly. In some cases, testing can drive innovation. In others, retention. Learn to see the impact of testing on metrics that sit downstream.

Now, let’s highlight some challenges experienced when doing an A/B test.

What Are the Challenges of A/B Testing?

Challenges are inherent in all kinds of testing, and A/B testing is no exception. Challenges can always frustrate, but knowing what to expect can ease that reaction, especially when you understand how powerful A/B testing is in your “Get More Conversions” arsenal.

Here are a few challenges you may encounter with A/B testing and how to combat them.

A/B Testing Challenge #1: What to Test?

Just like when you have a lot of things to do on your To-Do list – Prioritize, Prioritize, Prioritize!

Prioritization, data, and analytics drive what to test.

For specific tips, review the section “What to Test.”

A/B Testing Challenge #2: Generating Hypotheses

It may seem simple, but coming up with a data-driven hypothesis can be a challenge. It requires research and a solid interpretation of data. It’s important not to make an “educated guess” based on your knowledge. Make sure your proposal is data generated.

Check out the section on generating a hypothesis to get specific guidelines on hypothesis formulation.

A/B Testing Challenge #3: Calculating Sample Size

Statistical calculations may not be your cup of tea. However, understanding how to calculate sample size, and understanding why it is important to the success of every A/B test is critical.

Take the shortcut approach and let your testing tool do the heavy lifting for you. But understanding how sample size influences your testing will give you an advantage.
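To see how sample size responds to your inputs, here is a minimal sketch of the standard two-proportion sample size formula for a frequentist test. Your testing tool may use a different statistical model, so treat this as an illustration only. The function name, the default 5% significance level, and the 80% power are assumptions chosen for the example.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant for a two-sided test.

    baseline_rate: current conversion rate, e.g. 0.05 for 5%
    relative_lift: smallest lift worth detecting, e.g. 0.20 for +20%
    alpha and power are conventional defaults, assumed for illustration.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# A 5% baseline and a +20% relative lift need on the order of 8,000 visitors per variant
print(sample_size_per_variant(0.05, 0.20))
# Halving the lift you want to detect roughly quadruples the traffic required
print(sample_size_per_variant(0.05, 0.10))
```

The takeaway is that small expected lifts and low baseline conversion rates both demand far more traffic, which is why low-traffic pages are hard to test.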

A/B Testing Challenge #4: Analyzing Your Test Results

This is the fun part of A/B testing, because this is your opportunity to get insights into what worked and what didn’t, and why. Why did the form with more fields get a significant lift? Why does the ugly picture above the CTA continuously beat out the variation?

Whether your A/B test meets your hypothesis or not, reflect on all the data. Pass or fail, there are jewels of information to glean in every A/B test developed within a strict A/B testing protocol.

Poor interpretation of results can lead to bad decisions and negatively affect other marketing campaigns you integrate this data into. So take the step of post-analysis and deployment seriously and don’t get caught in this trap.

A/B Testing Challenge #5: Maintaining a Testing Culture

What good is your knowledge of A/B testing or all your fancy A/B tools if your culture doesn’t support experimentation? It will be a challenge getting co-workers and members of the C-suite to test.

It also will prevent you from iterating, which is the foundation of A/B testing. Keep testing.

A/B Testing Challenge #6: Annoying Flickering Effect

Flicker occurs when your visitor sees your control page for a few seconds before it changes to your treatment or variation page. It may not seem like much, but the brain processes this data (seeing the original page) and it changes how the visitor interacts with your variation, ultimately affecting the results of your A/B test.

You might be losing customers & revenue because of your A/B Testing tool!

– Claudiu Rogoveanu, Co-Founder & Chief Technical Officer at Convert.com

As little as one second of blinking or Flicker of Original Content (FOOC) damages your reputation. It frustrates traffic and negatively impacts conversions by 10% or more!

If your variations aren’t performing well because of the persistent blink, this whitepaper will show you new ways to increase site speed, optimize images, and streamline code to beat FOOC.

A/B Testing Challenge #7: Cumbersome Visual Editors

Some testing platforms have clunky visual editors that make it difficult to change your control.

Convert’s visual editor is smooth and seamless to use.

You can play around with this visual editor for 15 days. Free. No credit card required. Full access.

A/B Testing Challenge #8: Difficulty Creating Testing Goals

Some tools make it difficult to create goals and select elements for click tracking, and they offer no clear flow for setting up experiments. This goes back to the importance of testing. Following the above step-by-step guide should help minimize this occurrence.

A/B Testing Challenge #9: Keeping Changes

What happens when the winner of your test is variation B? It means you have to implement the change on your website.

With many tools, there is no straightforward way to keep the winning changes live through the platform until your team implements them on your server.

Since all teams should be on board before any testing begins, alert the team responsible for making changes that, depending on the winner of the test, a request to change an element may come down the pike soon.

A/B Testing Challenge #10: Separating Code

Many tools offer no simple way to separate code when you run multipage experiments.

A/B Testing Challenge #11: Excluding Internal Traffic From Results

Marketers know this all too well. Google Analytics data is flooded with metrics that represent internal traffic. This also happens with your A/B testing. It is difficult to exclude internal traffic from experiment reports.
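If your tool lets you export raw visit data, one way to approach this is to filter out visits from your own network ranges before analysis. The sketch below is only an illustration: the IP ranges, the `ip` field name, and the shape of the visit records are all hypothetical, and many tools offer their own built-in IP exclusion settings that you should prefer when available.

```python
import ipaddress

# Hypothetical office and VPN ranges; replace with your own
INTERNAL_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("10.0.0.0/8"),
]


def is_internal(ip):
    """Return True if the IP address falls inside any internal range."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in INTERNAL_NETWORKS)


def exclude_internal(visits):
    """Keep only the visits that did not come from internal ranges."""
    return [visit for visit in visits if not is_internal(visit["ip"])]


# Hypothetical exported visit records
visits = [
    {"ip": "203.0.113.7", "variant": "A", "converted": True},
    {"ip": "198.51.100.20", "variant": "B", "converted": False},
    {"ip": "10.1.2.3", "variant": "B", "converted": True},
]
print(exclude_internal(visits))  # only the 198.51.100.20 visit remains
```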

A/B Testing Challenge #12: Advanced Testing Requires Technical (Dev) Support

If you have lots of traffic, an enormous site, or need segmentation or special code, you will need to get your Dev team involved. This is more of an issue if you don’t have a development team or your development team doesn’t have the capacity to support testing efforts.

If the latter is the case, it may be time to restructure your experimentation program.

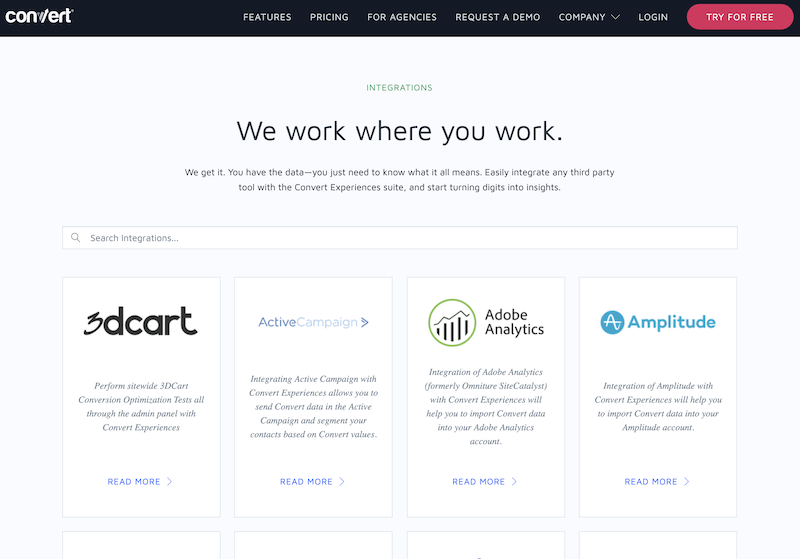

A/B Testing Challenge #13: Third-Party Integration

You never use just one tool. Many times you may want to see data from your test (or import data into your test) from third-party applications.

Not all testing platforms allow you to do this seamlessly.

Convert integrates with over 100 tools.

A/B Testing Challenge #14: QA the Experiment

As you can see, there are many areas that can create issues in your A/B test if you are not careful. That’s why quality control of your experiment is important. Unfortunately, many people overlook this step, especially when they rely heavily on fancy tools.

Yes, let the tool do the work, but doing a QA look before pressing the start button is never a bad idea.

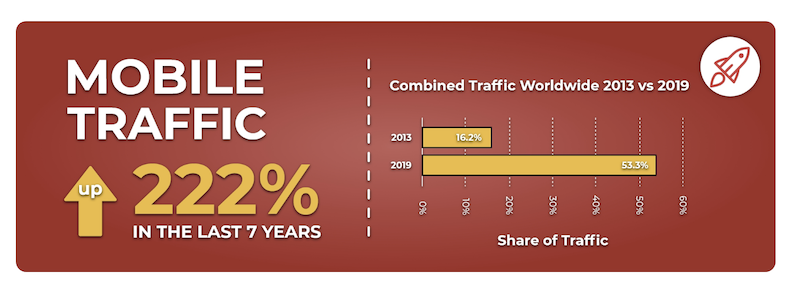

A/B Testing Challenge #15: A/B Testing on Mobile

According to DataReportal, there are 5.15 billion unique mobile phone users. The ability to test on different devices is in demand, and the desire to A/B test on mobile is also increasing. Tool accessibility on different devices is critical.

When picking a tool, be mindful of this limitation of accessibility.

As you can see, you can hit many snafus.

There is a close relationship between A/B Testing challenges and A/B testing mistakes. Time to go over potential common pitfalls made in A/B testing and how to avoid them.

What Are the Mistakes to Avoid While A/B Testing?

With so many steps and concepts that have to work together, there is plenty of room for mistakes.

Here are a few common mistakes made in A/B testing and how to avoid them.

Mistake #1: Not Planning Within Your CRO Strategy

Worse than this is not planning at all.

Planning is a step in the A/B testing process that you can’t ignore or skip. Planning includes gathering data, reviewing your overall conversion strategies and desired goals, and creating a solid hypothesis.

We know testing can be fun, but you’re not in it for enjoyment, you are A/B testing to drive business growth and find opportunities in your marketing to convert better.

Also, sometimes marketers are tempted to follow the claims and paths of industry gurus. Sticking to your plan will discourage you or your team from doing this. A change in CTA may have gotten them a 24% lift, but your business, website, goals, and conversion focus are different. Having a plan will keep this reminder at the forefront.

Mistake #2: Testing Too Much at Once

The power of A/B testing lies in its head-to-head comparison of one element at a time. If you run too many tests at the same time, how do you know which one is responsible for positive or negative changes?

If you have multiple elements you would like to test, which you should, use a prioritization tool to focus your efforts. Also, be sure you are using a tool that can handle multiple tests. With Convert, you can run multiple A/B tests on different pages at the same time.

Mistake #3: Not Reaching Statistical Significance

Allow every test you run to reach statistical significance. Even if you think you can identify a clear winner prior to reaching statistical significance, stopping your test early will invalidate the results. This is especially important when using the Frequentist statistical model in your A/B test.

It’s like taking a cake out of the oven too early. It may look done, but when you inspect it you realize you should have let it cook for the full duration of time allotted.
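For a sense of what a Frequentist significance check looks like under the hood, here is a minimal sketch of a two-proportion z-test. It is an illustration under stated assumptions, not the calculation any particular tool performs: the function name and the visitor and conversion counts are hypothetical, and the result is only trustworthy if you evaluate it once, after the planned sample size has been reached.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conversions_a, visitors_a, conversions_b, visitors_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Pooled rate under the assumption that A and B truly perform the same
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical results: 5.0% vs 6.0% conversion with 10,000 visitors each
p_value = two_proportion_p_value(500, 10_000, 600, 10_000)
print(f"p-value: {p_value:.4f}")
print("Significant at 95%" if p_value < 0.05 else "Not significant yet")
```

Checking this number every day and stopping the moment it dips below 0.05 inflates your false positive rate, which is exactly the early-stopping trap described above.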

Mistake #4: Testing for Incorrect Duration

As stated in A/B testing mistake #3, you must run your tests for their full duration. When you don’t, you cannot reach statistical significance.

When you think about test duration, think about the “Goldilocks Rule.” You want the length of your test to be “just right,” not too long or too short.

The way to avoid this is to shut off a test only once it has run for its planned duration and your A/B testing software declares a winner.

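Mistake #5: Using More or Less Traffic Than Calculated

Running a test on more or less traffic than your sample size calculation calls for can hurt your A/B test. Too little traffic leaves the test unable to detect a real difference, while far more than planned spends visitors you could have used on your next test. Use the calculated traffic numbers to ensure conclusive results.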
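As a rough illustration of where "required traffic" comes from, the sketch below uses a standard sample size formula for comparing two conversion rates. The baseline rate, expected lift, 95% confidence, 80% power, and daily traffic figures are all assumptions chosen for the example, and your A/B testing tool’s calculator may use a different formula. Rounding the duration up to whole weeks is also an assumption, made so that every day of the week is covered equally.

```python
import math
from statistics import NormalDist


def required_sample_size(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Visitors needed per variant to detect the given relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def estimated_duration_days(sample_per_variant, variants, daily_visitors):
    """Days needed to collect the sample, rounded up to full weeks."""
    days = math.ceil(sample_per_variant * variants / daily_visitors)
    return math.ceil(days / 7) * 7


# Hypothetical inputs: 2% baseline conversion, hoping for a 20% relative lift,
# two variants (A and B), 3,000 visitors per day entering the test.
per_variant = required_sample_size(0.02, 0.20)
days = estimated_duration_days(per_variant, variants=2, daily_visitors=3_000)
print(f"{per_variant} visitors per variant, about {days} days")
```

Note how sensitive the result is to the expected lift: the smaller the change you want to detect, the more traffic the test needs.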

Mistake #6: Failing to Iterate

To reap the full benefits of A/B testing, you MUST build iteration into your plan. Each A/B test sets you up for another test.

It is this iterative process that allows you to refine your website, identify opportunities, and give your customer the experience they want and deserve, which translates into higher conversions for you.

It is tempting to stop testing after one successful test. But remember the acronym for A/B testing. Always Be Testing. Let this be your model.

Mistake #7: Not Considering External Factors

Holidays, days of the week, hours of the day, weather, the overall state of society – all these things and more influence how visitors engage with your site.

When analyzing results, factor in these elements.

Mistake #8: Using the Wrong or Bad Tools

Prioritizing experimentation and A/B testing means investing in the right tools. Would you try to build a house with just a screwdriver and a hammer? Sure, you can do it, but it would be an inefficient and poor use of resources.

Think of your business as a house. You are building it from the ground up and need the right tools to do the job.

Don’t fall into the trap of looking at the upfront investment without looking at the ROI of your A/B testing investment.

Taking our house example again, a good drill may cost you several hundred dollars, but calculate what it will save you in time (which is money).

Not sure which tool will give you the most bang for your buck? Convert takes the risk out of the decision by giving you 15 days to try our A/B testing tool. No credit card required and all features are available.

The best way to check out a tool is by exploring it. Get full access to Convert for 15 days for free. Run A/B tests, check out all our integrations, and see why so many optimizers choose Convert Experiences as an Optimizely alternative.

Mistake #9: Not Being Creative In Your A/B Testing

A/B testing is a beast of a tool if you use it to its full capacity. Unfortunately, many people use it simply to test color variations of buttons. Yes, this is a valid element to test, but don’t stop there.

If you need inspiration, review the section on “What should I test?”

The same thing goes for your A/B testing software. Explore it. What can it do?

Convert provides a custom onboarding session with our support team, not just to get you up to speed with the software right away but also to show you ways to use it within your testing program.

Knowing the potential A/B testing challenges and pitfalls makes it easier for you to avoid or fix them if they arise.

These are just a few. There are more to avoid if you want your testing campaigns to actually yield the results you want.

Here are a few other common A/B testing mistakes to avoid (and be sure to click on the resource above to get the comprehensive list):

- Making a live change before testing it

- Not testing your A/B testing tool for performance and accuracy

- Allowing your A/B testing tool to flicker during tests

- Only running tests based on ‘industry best practices’

- Testing too many things at once and not being able to track the results

- Peeking — looking to see the performance of a test while it is running (before it reaches statistical significance)

- Making changes while the test is still running

- Getting emotional when a test fails

- Giving up after running only one test

- Not reading the results correctly or breaking them down by segment

- Forgetting about false positives and not double-checking huge lifts

- Not checking the validity after the test
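Two items on this list, peeking and forgetting about false positives, are easier to appreciate with a small simulation. The sketch below runs repeated A/A tests, where both groups see the same page, so any "winner" is a false positive by definition. It compares how often a test gets flagged as significant at any of several interim looks with how often it is flagged at the final look only. All names and parameters (number of runs, visitors, looks, the 5% conversion rate) are hypothetical choices for illustration.

```python
import math
import random


def p_value(conv_a, conv_b, n):
    """Two-sided p-value of a two-proportion z-test with n visitors per arm."""
    pooled = (conv_a + conv_b) / (2 * n)
    if pooled in (0, 1):
        return 1.0  # no variation observed yet, nothing to detect
    std_err = math.sqrt(pooled * (1 - pooled) * 2 / n)
    z = (conv_b - conv_a) / n / std_err
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_false_positives(runs=400, visitors=2000, looks=10,
                             rate=0.05, alpha=0.05, seed=1):
    """Return (false positive rate with peeking, rate at the final look only)."""
    rng = random.Random(seed)
    # Evenly spaced interim looks; the last one is the planned end of the test.
    checkpoints = {visitors * i // looks for i in range(1, looks + 1)}
    peeking_hits = 0
    final_hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        flagged_at_any_look = False
        for n in range(1, visitors + 1):
            # Both arms share the same true conversion rate (an A/A test).
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            if n in checkpoints and p_value(conv_a, conv_b, n) < alpha:
                flagged_at_any_look = True
                if n == visitors:
                    final_hits += 1
        peeking_hits += flagged_at_any_look
    return peeking_hits / runs, final_hits / runs


peeking_rate, final_rate = simulate_false_positives()
print(f"Flagged at any look: {peeking_rate:.1%}")
print(f"Flagged at the final look only: {final_rate:.1%}")
```

Because the final look is one of the checkpoints, the peeking rate can never be lower than the final-look rate, and in practice it tends to be noticeably higher. That gap is the cost of acting on a result the first time it happens to look significant.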

Conclusion

I hope this article inspired you with real examples and a guideline to follow to start A/B testing today or re-energize your A/B testing campaigns. Test often, test consistently, test within a strategy.

Written By

Nyaima Smith-Taylor, Uwemedimo Usa

Edited By

Carmen Apostu